Evaluate multi-turn agents

A step-by-step guide to evaluating multi-turn agents

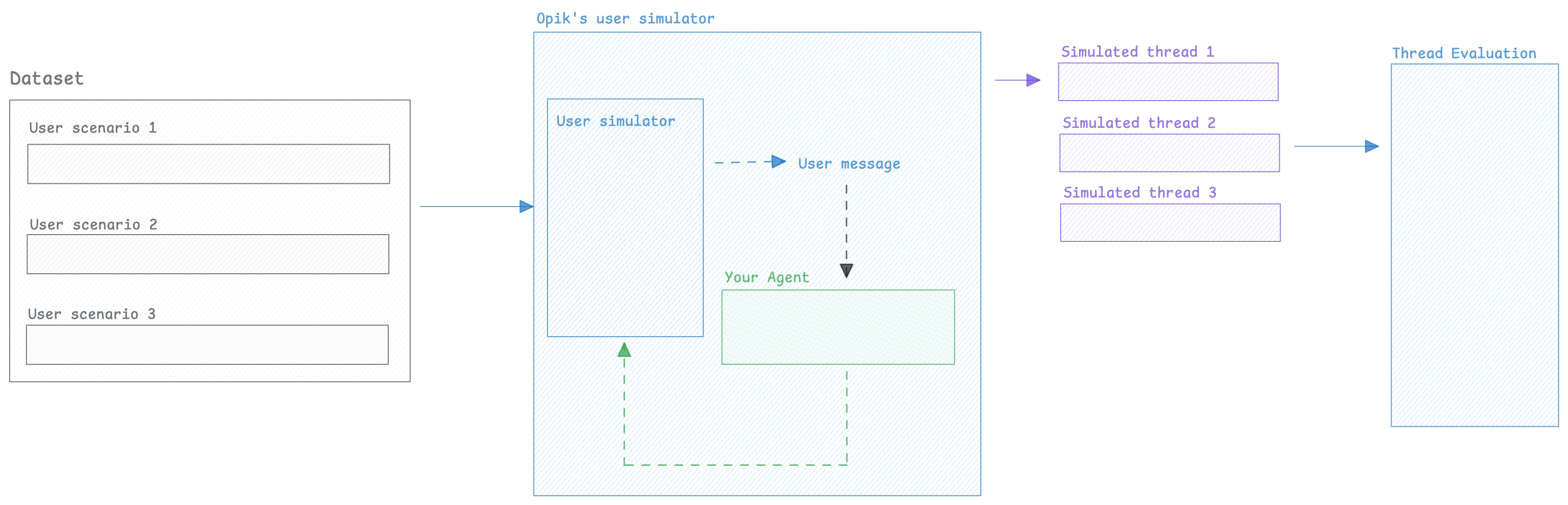

When you build chatbots or multi-turn agents, evaluating the agent’s behavior across several turns is hard because you cannot know in advance what follow-up question the user would ask after each response.

To evaluate multi-turn behavior, we can simulate the user. The core idea is to use an LLM to generate what the user would have said given the previous turns, and to repeat this for a fixed number of turns.

Once we have this conversation, we can use Opik’s evaluation features to score the agent’s behavior.

Creating the user simulator

To perform multi-turn evaluation, we need a user simulator that generates the user’s next message based on the previous turns.
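To make the idea concrete, here is a minimal sketch of what a user simulator does. It is not Opik’s implementation: the class name `SketchUserSimulator`, the `llm` callable, and the `fake_llm` placeholder are all hypothetical, and the placeholder stands in for a real model call so the example runs offline.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]  # {"role": "user" | "assistant" | "system", "content": "..."}


class SketchUserSimulator:
    """Hypothetical stand-in for a user simulator; not Opik's class."""

    def __init__(self, persona: str, llm: Callable[[List[Message]], str]):
        self.persona = persona
        self.llm = llm  # any function that maps chat messages to a completion

    def generate_response(self, history: List[Message]) -> str:
        # From the simulator's point of view the roles are swapped: the agent's
        # messages are its input ("user") and its own past turns are "assistant".
        flipped = [
            {
                "role": "user" if m["role"] == "assistant" else "assistant",
                "content": m["content"],
            }
            for m in history
        ]
        system = {
            "role": "system",
            "content": f"Play this user persona and reply in character: {self.persona}",
        }
        return self.llm([system] + flipped)


def fake_llm(messages: List[Message]) -> str:
    # Placeholder for a real model call so the sketch runs offline.
    return f"(reply after {len(messages) - 1} prior messages)"


simulator = SketchUserSimulator("A frustrated customer who wants a refund", fake_llm)
first_reply = simulator.generate_response(
    [{"role": "assistant", "content": "Hello, how can I help you today?"}]
)
```

Swapping the roles before calling the model is a design choice of this sketch: it lets an ordinary chat model speak as the user without any special prompting beyond the persona.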

Now that we have a way to simulate the user, we can create multiple simulations that we will in turn evaluate.

Running simulations

Running the simulations takes three steps:

1. Create a list of scenarios

To keep track of the scenarios we will be running, let’s create a dataset with the user personas we will be using:
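As an illustration, the scenarios can start out as a plain list of dictionaries before being stored in a dataset. The personas and the field names `name` and `user_persona` below are assumptions made for this sketch, not a schema required by Opik.

```python
from typing import Dict, List

# Hypothetical personas; the field names are illustrative.
scenarios: List[Dict[str, str]] = [
    {
        "name": "refund_request",
        "user_persona": "You are a frustrated customer who wants a refund.",
    },
    {
        "name": "product_question",
        "user_persona": "You are a curious customer asking how the product works.",
    },
    {
        "name": "off_topic",
        "user_persona": "You are a user who keeps drifting to unrelated topics.",
    },
]

# Basic sanity check: each scenario needs a unique name and a non-empty persona.
names = [s["name"] for s in scenarios]
assert len(names) == len(set(names))
assert all(s["user_persona"].strip() for s in scenarios)
```

Keeping one persona per record makes it easy to run one simulation per dataset item and to trace each resulting thread back to its scenario.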

2. Create our agent app

In order to run the simulations, we need to create our agent app based on our existing agent. The run_agent function we will be creating will have the following signature:
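One plausible shape for such a function is sketched below. The parameter names (`user_message`, `thread_id`, `**kwargs`), the returned message dictionary, and the in-memory `_histories` store are assumptions made for illustration, so check the Opik reference for the exact signature it expects. The echo reply is a placeholder for a call to a real agent.

```python
from collections import defaultdict
from typing import Dict, List

Message = Dict[str, str]

# Per-thread conversation memory, keyed by thread_id (illustrative only).
_histories: Dict[str, List[Message]] = defaultdict(list)


def run_agent(user_message: str, *, thread_id: str, **kwargs) -> Message:
    """Assumed shape: take one user message, return one assistant message."""
    history = _histories[thread_id]
    history.append({"role": "user", "content": user_message})
    # Replace this line with a call to your real agent.
    reply = f"Echo (turn {len(history) // 2 + 1}): {user_message}"
    answer = {"role": "assistant", "content": reply}
    history.append(answer)
    return answer
```

Keying the history by thread identifier keeps concurrent simulations from leaking context into each other.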

3. Run the simulations

Now that we have a dataset with the user personas, we can run the simulations:

The run_simulation function keeps track of the internal conversation state by constructing a list of messages, with the result of the run_agent function as an assistant message and the UserSimulator’s response as a user message.

If you need more complex conversation state, you can create threads using the UserSimulator’s generate_response method directly.
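The alternating loop described above can be sketched by hand as follows. This is not the run_simulation implementation: `simulate_conversation`, `scripted_user`, and `echo_agent` are hypothetical names, and the two stand-in functions replace the real simulator and agent so the example runs offline.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]


def simulate_conversation(
    generate_user_message: Callable[[List[Message]], str],
    agent: Callable[[str], str],
    max_turns: int = 3,
) -> List[Message]:
    """Alternate simulated user turns and agent turns, returning the transcript."""
    messages: List[Message] = []
    for _ in range(max_turns):
        # The simulator sees the full history and produces the next user turn.
        user_text = generate_user_message(messages)
        messages.append({"role": "user", "content": user_text})
        # The agent answers and its reply is recorded as an assistant turn.
        messages.append({"role": "assistant", "content": agent(user_text)})
    return messages


# Offline stand-ins for the simulator and the agent.
def scripted_user(history: List[Message]) -> str:
    return f"question {len(history) // 2 + 1}"


def echo_agent(user_text: str) -> str:
    return f"answer to {user_text}"


conversation = simulate_conversation(scripted_user, echo_agent, max_turns=2)
```

Writing the loop yourself is mainly useful when the state is richer than a flat message list, for example when the agent needs tool results or session metadata between turns.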

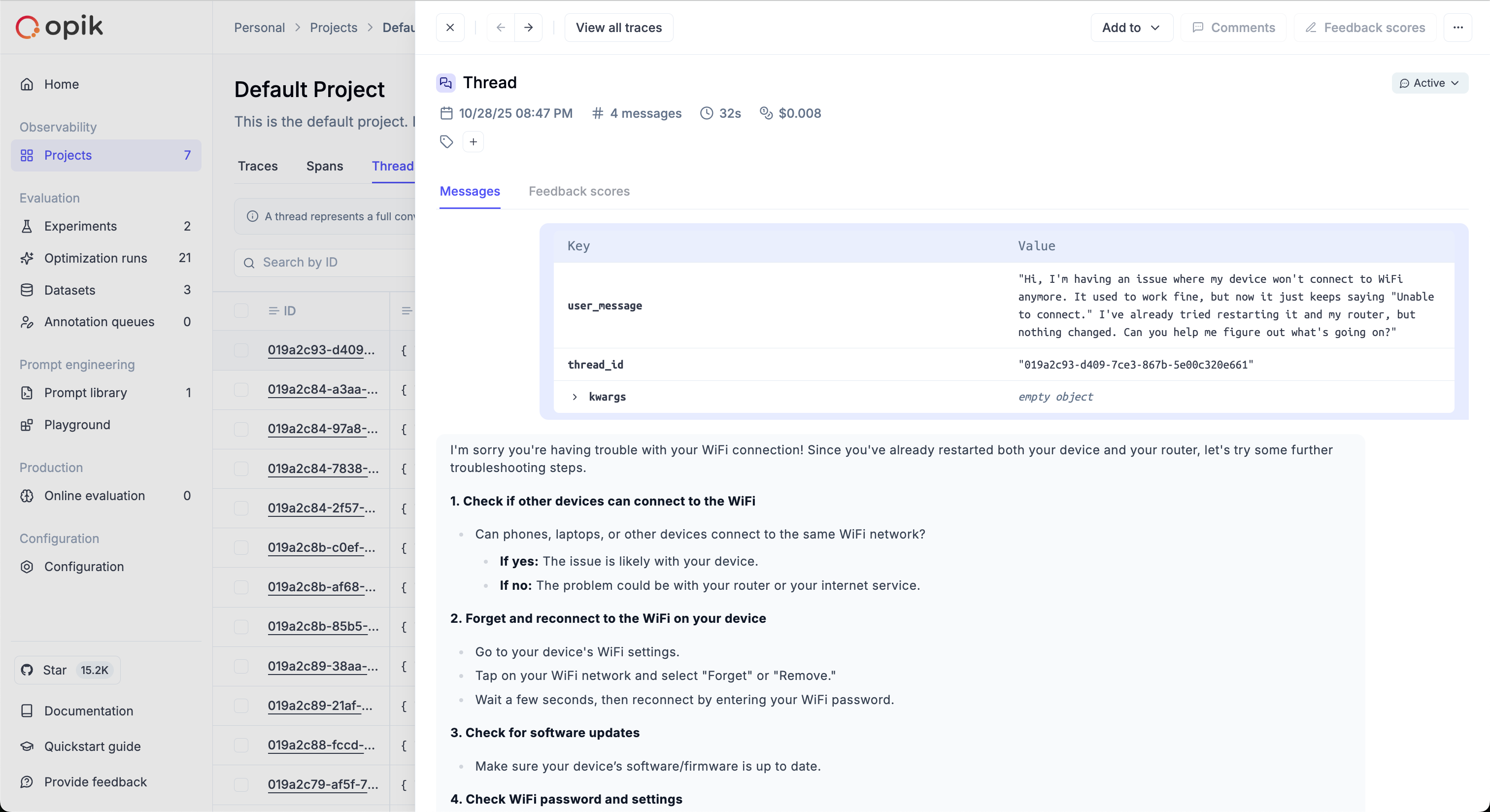

The simulated threads will be available in the Opik thread UI:

Scoring threads

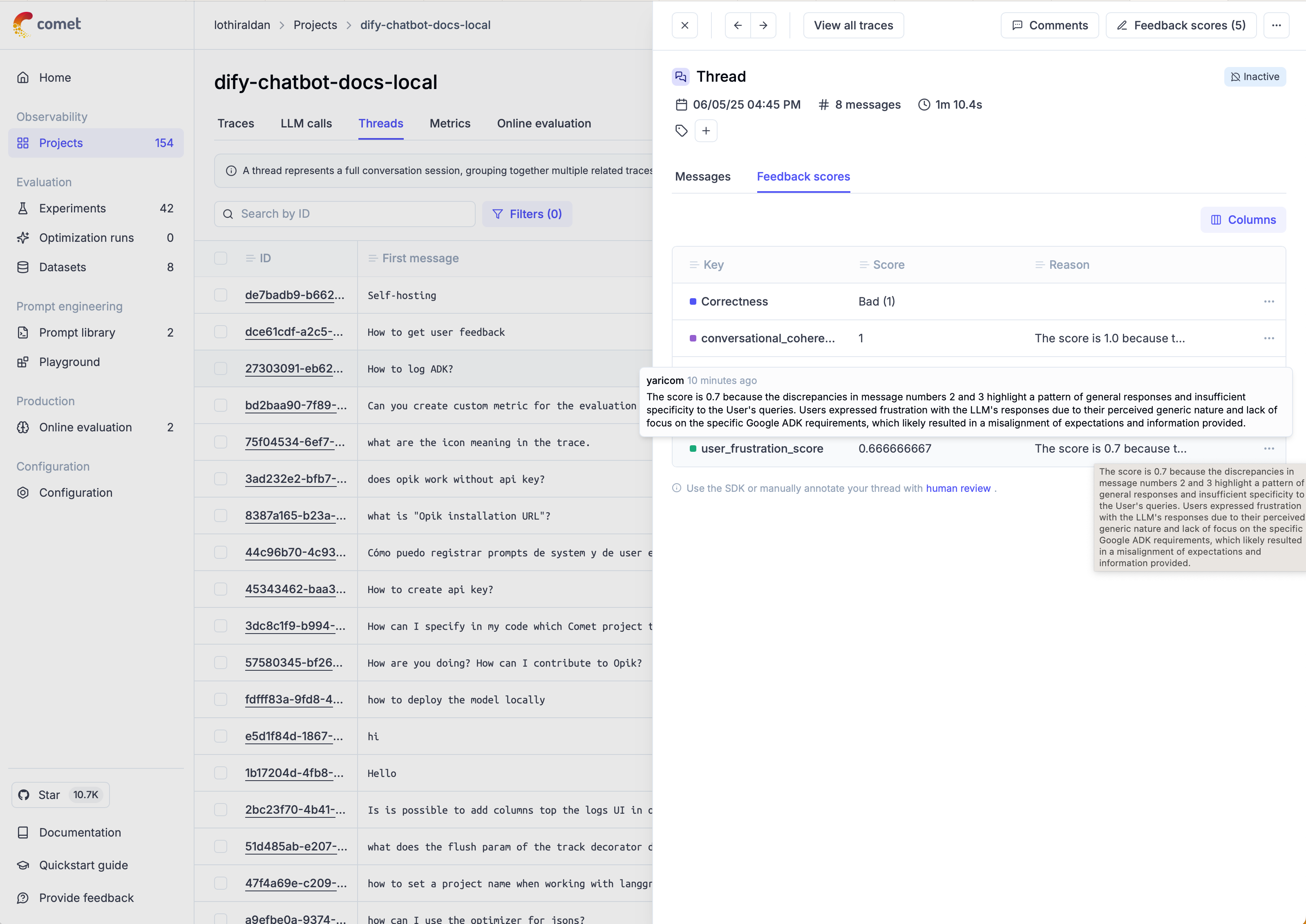

To evaluate multi-turn conversations, you can use one of Opik’s built-in conversation metrics or create your own.

If you’ve used the run_simulation function, you will already have a list of conversation messages that you can pass directly to the metrics. Otherwise, you can use the evaluate_threads function:

You can learn more about the evaluate_threads function in the evaluate_threads guide.
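To show what scoring a list of conversation messages can look like, here is a minimal sketch of a custom conversation-level score. The function `repeated_reply_rate` and the sample conversation are hypothetical, and the metric is an illustrative choice rather than one of Opik’s built-in metrics. A real custom metric would wrap logic like this in whatever interface Opik expects.

```python
from typing import Dict, List

Message = Dict[str, str]


def repeated_reply_rate(conversation: List[Message]) -> float:
    """Share of assistant turns that repeat an earlier assistant turn verbatim."""
    seen = set()
    repeats = 0
    total = 0
    for message in conversation:
        if message["role"] != "assistant":
            continue
        total += 1
        text = message["content"].strip().lower()
        if text in seen:
            repeats += 1
        seen.add(text)
    # An empty conversation has no assistant turns, so report 0.0.
    return repeats / total if total else 0.0


conversation = [
    {"role": "user", "content": "I want a refund."},
    {"role": "assistant", "content": "Please share your order number."},
    {"role": "user", "content": "I already told you, it is 1234."},
    {"role": "assistant", "content": "Please share your order number."},
    {"role": "user", "content": "1234!"},
    {"role": "assistant", "content": "Thanks, your refund is on its way."},
]
score = repeated_reply_rate(conversation)
```

A score like this only makes sense over the whole thread, which is why conversation metrics take the full message list rather than a single input and output pair.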

Once the threads have been scored, you can view the results in the Opik thread UI:

Next steps

- Learn more about conversation metrics

- Learn more about custom conversation metrics

- Learn more about evaluate_threads

- Learn more about agent trajectory evaluation