Re-running an existing experiment

You can update existing experiments in several ways:

- Update experiment name and configuration - Change the experiment name or update its configuration metadata

- Update experiment scores - Add new scores or re-compute existing scores for experiment items

Update Experiment Name and Configuration

You can update an experiment’s name and configuration from both the Opik UI and the SDKs.

From the Opik UI

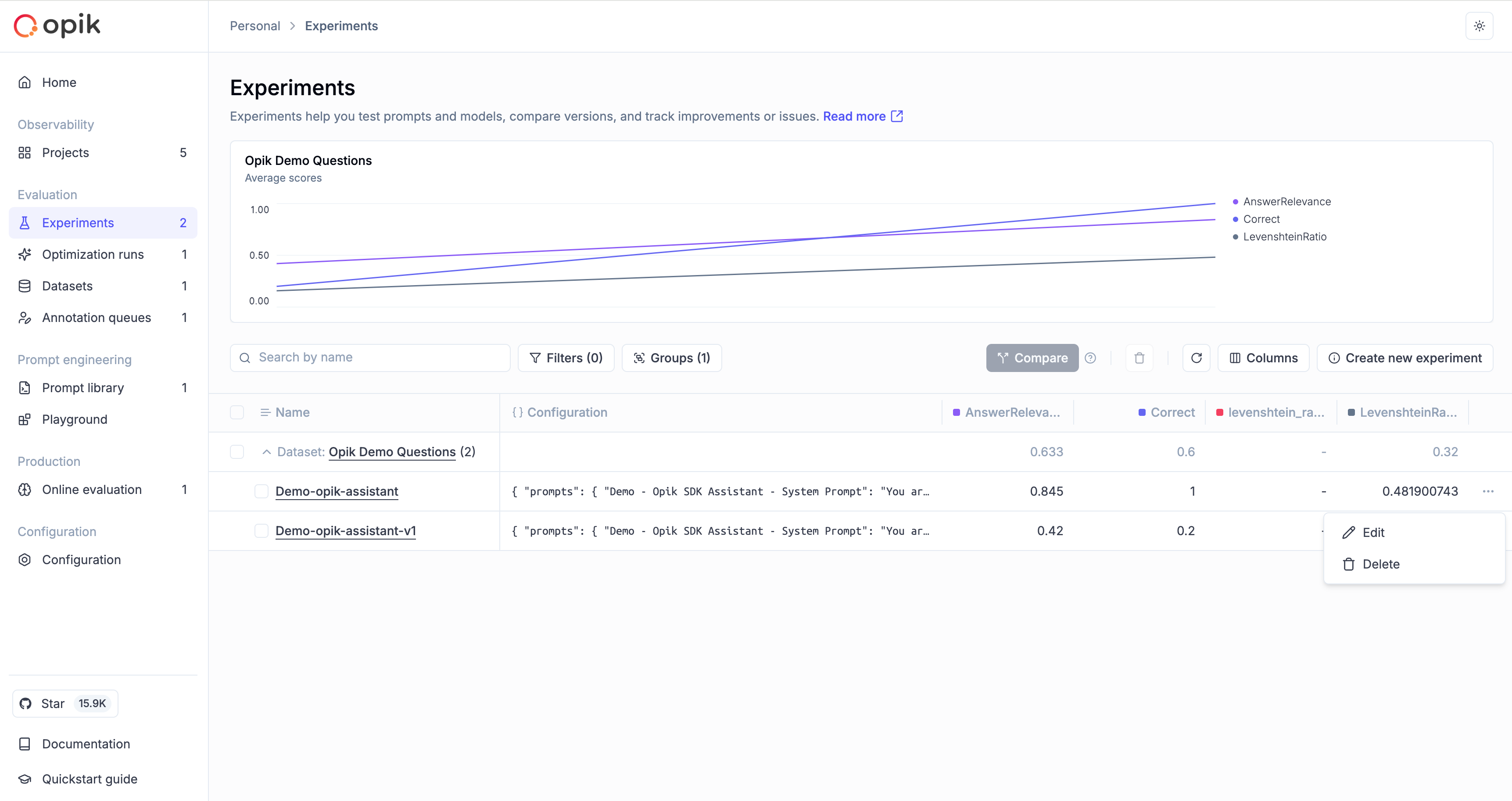

To update an experiment from the UI:

- Navigate to the Experiments page

- Find the experiment you want to update

- Click the … menu button on the experiment row

- Select Edit from the dropdown menu

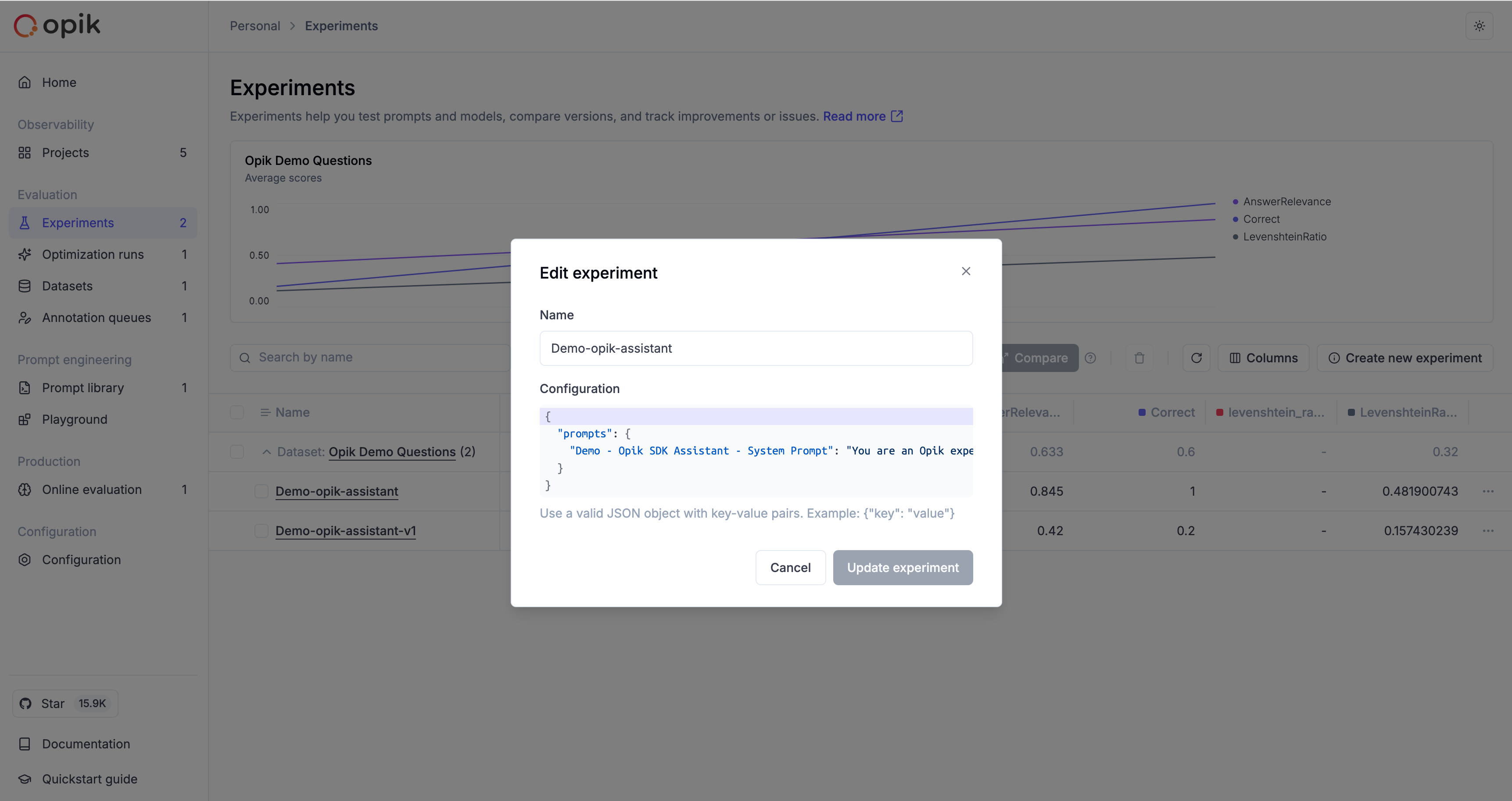

- Update the experiment name and/or configuration (JSON format)

- Click Update Experiment to save your changes

The configuration is stored as JSON and is useful for tracking parameters like model names, temperatures, prompt templates, or any other metadata relevant to your experiment.

From the Python SDK

Use the update_experiment method to update an experiment’s name and configuration.
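A minimal sketch of such a call is shown here. The helper name `update_experiment_metadata` is hypothetical, and the sketch assumes that `update_experiment` accepts `id`, `name`, and `experiment_config` keyword arguments; check the SDK reference for the exact signature. The client is passed in as an argument so the helper can be tried without a running Opik server.

```python
from typing import Any, Dict, Optional


def update_experiment_metadata(
    client: Any,
    experiment_id: str,
    name: Optional[str] = None,
    config: Optional[Dict[str, Any]] = None,
) -> Dict[str, Any]:
    """Send only the fields that should change to client.update_experiment."""
    if name is None and config is None:
        raise ValueError("Provide a new name, a new configuration, or both")

    kwargs: Dict[str, Any] = {"id": experiment_id}
    if name is not None:
        kwargs["name"] = name
    if config is not None:
        # Assumption: the configuration is passed as `experiment_config`.
        kwargs["experiment_config"] = config

    client.update_experiment(**kwargs)
    return kwargs


# Usage with a real client (requires the opik package and a configured workspace):
#   import opik
#   client = opik.Opik()
#   update_experiment_metadata(
#       client,
#       "experiment-id",  # placeholder ID
#       name="Updated Experiment Name",
#       config={"model": "gpt-4", "temperature": 0.7},
#   )
```

Omitting `name` or `config` leaves that field out of the call, so a rename does not need to resend the configuration.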

From the TypeScript SDK

Use the updateExperiment method to update an experiment’s name and configuration.

Update Experiment Scores

To add new scores to an existing experiment, or to re-compute its existing scores, use the evaluate_experiment function.

This function re-runs the scoring metrics on the existing experiment items and updates the scores.
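As a rough sketch, re-scoring could look like the following. The wrapper name `rescore_experiment` is hypothetical, and the experiment name in the usage comment is a placeholder. The sketch assumes `evaluate_experiment` is importable from `opik.evaluation` and takes `experiment_name` and `scoring_metrics` keyword arguments.

```python
from typing import Any, Sequence


def rescore_experiment(experiment_name: str, scoring_metrics: Sequence[Any]) -> Any:
    """Re-run scoring metrics on the items of an existing experiment."""
    if not scoring_metrics:
        raise ValueError("Pass at least one scoring metric")

    # Imported lazily so this module can be loaded without opik installed.
    from opik.evaluation import evaluate_experiment

    return evaluate_experiment(
        experiment_name=experiment_name,
        scoring_metrics=list(scoring_metrics),
    )


# Usage (requires the opik package and a configured workspace):
#   from opik.evaluation.metrics import Hallucination
#   rescore_experiment("my-experiment", [Hallucination()])
```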

The evaluate_experiment function can be used to update existing scores for an experiment. If you use a scoring metric with the same name as an existing score, the scores will be updated with the new values.

You can also compute experiment-level aggregate metrics when updating experiments using the experiment_scoring_functions parameter.

Learn more about experiment-level metrics.
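One way an experiment-level aggregate could be written is sketched here. It rests on several assumptions that should be checked against the SDK reference: that each function passed to experiment_scoring_functions receives the list of test results, that each test result exposes a `score_results` list whose entries have `name` and `value`, and that the function returns `ScoreResult` objects. The names `mean_by_metric` and `mean_scores` are hypothetical.

```python
from collections import defaultdict
from typing import Any, Dict, Iterable, List


def mean_by_metric(test_results: Iterable[Any]) -> Dict[str, float]:
    """Average each metric's per-item values across all test results."""
    values: Dict[str, List[float]] = defaultdict(list)
    for test_result in test_results:
        for score in test_result.score_results:
            # Skip scores with no value (for example, failed scoring).
            if score.value is not None:
                values[score.name].append(float(score.value))
    return {name: sum(vals) / len(vals) for name, vals in values.items()}


def mean_scores(test_results: List[Any]) -> List[Any]:
    """Experiment-level function: one aggregate score per metric."""
    # Imported lazily; requires the opik package.
    from opik.evaluation.metrics.score_result import ScoreResult

    return [
        ScoreResult(name=f"mean_{name}", value=value)
        for name, value in mean_by_metric(test_results).items()
    ]


# Usage (assumed call shape):
#   from opik.evaluation import evaluate_experiment
#   from opik.evaluation.metrics import Hallucination
#   evaluate_experiment(
#       experiment_name="my-experiment",
#       scoring_metrics=[Hallucination()],
#       experiment_scoring_functions=[mean_scores],
#   )
```

Keeping the arithmetic in `mean_by_metric` separate from the SDK types makes the aggregation easy to check on its own.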

Example

Create an experiment

Suppose you are building a chatbot and want to compute hallucination scores for a set of example conversations. To do this, you would create a first experiment with the evaluate function.
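A sketch of that first experiment might look like this. The function names `evaluation_task` and `create_first_experiment` are illustrative, and the task body is a placeholder for your chatbot. The sketch assumes dataset items carry an `input` field and that the task returns its answer under `output`. Running `create_first_experiment` needs a configured Opik workspace and access to the LLM that the Hallucination metric uses for judging.

```python
from typing import Any, Dict


def evaluation_task(dataset_item: Dict[str, Any]) -> Dict[str, Any]:
    """Produce the chatbot output for one dataset item.

    Placeholder logic: a real task would call your LLM application here.
    """
    return {"output": f"You said: {dataset_item['input']}"}


def create_first_experiment(dataset_name: str, experiment_name: str) -> Any:
    """Run the task over a dataset and score each output for hallucination."""
    # Imported lazily; requires the opik package.
    from opik import Opik
    from opik.evaluation import evaluate
    from opik.evaluation.metrics import Hallucination

    dataset = Opik().get_or_create_dataset(dataset_name)
    return evaluate(
        dataset=dataset,
        task=evaluation_task,
        scoring_metrics=[Hallucination()],
        experiment_name=experiment_name,
    )


# Usage (dataset and experiment names are placeholders):
#   create_first_experiment("Existing dataset", "My experiment")
```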

Learn more about the evaluate function in our LLM evaluation guide.

Update the experiment

Once the first experiment is created, you realise that you also want to compute a moderation score for each example. You could re-run the whole experiment with the new scoring metrics, but that means re-generating every output. Instead, you can update the existing experiment with the new scoring metrics.
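Adding the moderation score might then be sketched as below. The wrapper name `add_moderation_scores` is hypothetical, and the sketch assumes the `Moderation` metric is importable from `opik.evaluation.metrics`. Only the scoring step runs, so the outputs stored in the experiment are reused.

```python
from typing import Any


def add_moderation_scores(experiment_name: str) -> Any:
    """Score an existing experiment's items with Moderation, reusing stored outputs."""
    # Imported lazily; requires the opik package and a configured workspace.
    from opik.evaluation import evaluate_experiment
    from opik.evaluation.metrics import Moderation

    return evaluate_experiment(
        experiment_name=experiment_name,
        scoring_metrics=[Moderation()],
    )


# Usage (experiment name is a placeholder):
#   add_moderation_scores("My experiment")
```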