Prompt Playground

The Opik prompt playground is currently in public preview. If you have any feedback or suggestions, please let us know.

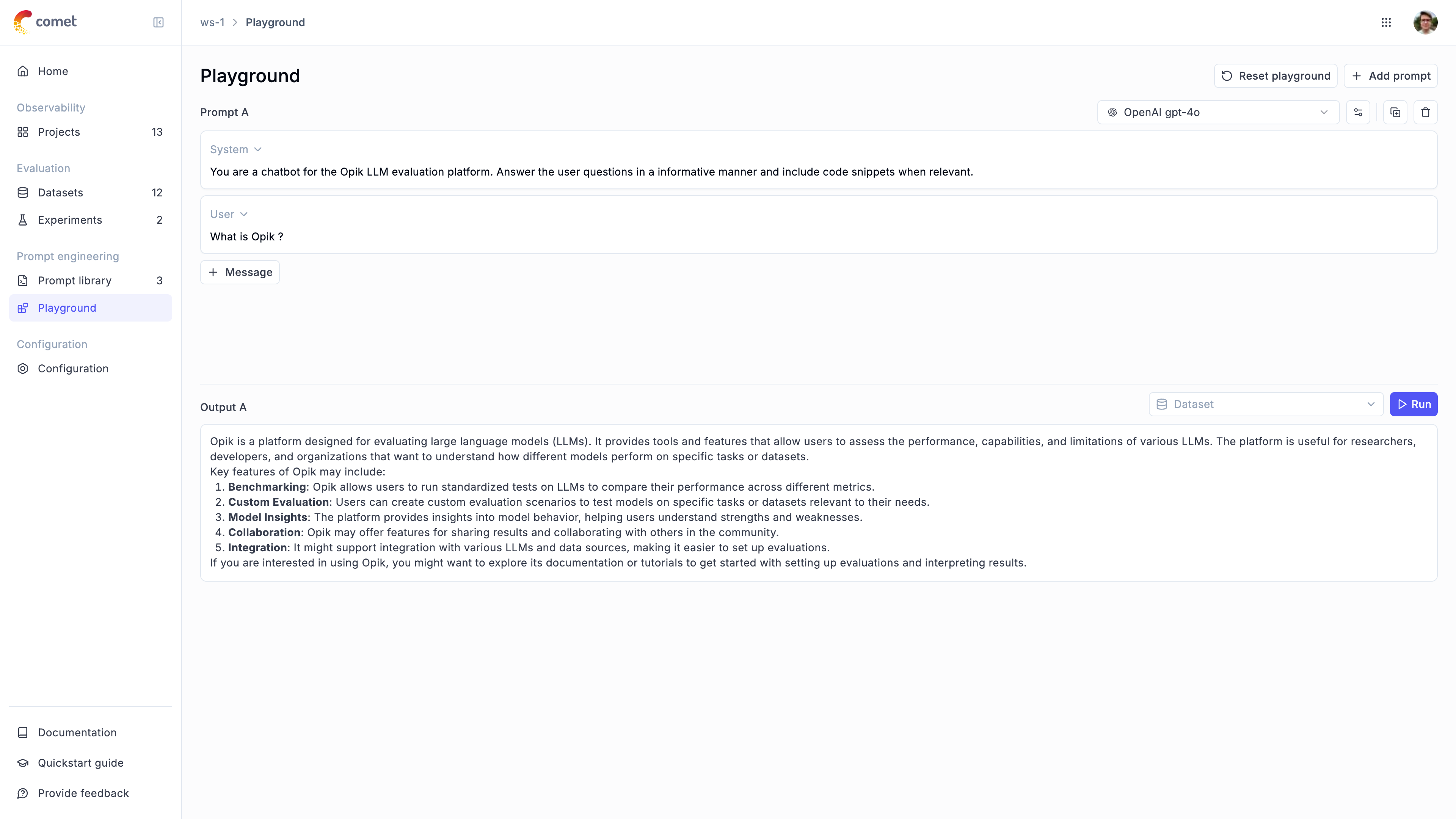

When working with LLMs, there are times when you want to quickly try out different prompts and see how they perform. Opik’s prompt playground is a great way to do just that.

Using the prompt playground

The prompt playground is a simple interface for entering system, user, and assistant messages and seeing the LLM's output in real time.

You can also compare how different models respond to the same prompt by duplicating the prompt and changing either the model or the model parameters.

All of the conversations from the playground are logged to the playground project so that you can easily refer back to them later.

Configuring the prompt playground

The playground supports the following LLM providers:

- OpenAI

- Anthropic

- OpenRouter

- Gemini

- Vertex AI

- Azure OpenAI

- Amazon Bedrock

- LM Studio (coming soon)

- vLLM / Ollama / any other OpenAI API-compliant provider

If you would like us to support additional LLM providers, please let us know by opening an issue on GitHub.

See Configuring AI Providers to learn how to configure the prompt playground.

Running experiments in the playground

You can evaluate prompts in the playground by adding variables to them with the {{variable}} syntax. You can then connect a dataset and run the prompts on each dataset item. This allows both technical and non-technical users to evaluate prompts quickly and easily.

When using datasets in the playground, make sure the prompt contains variables in Mustache syntax ({{variable}}) that match the column names in the dataset. For example, if the dataset contains a column named user_question, the prompt must contain {{user_question}}.
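The matching rule above can be checked before running an experiment. The following is a minimal sketch in plain Python, not Opik's own implementation: the helper names `find_variables` and `missing_columns` are hypothetical, and the regular expression assumes variables consist of word characters and dots only.

```python
import re

# Matches {{name}} and dotted paths such as {{input.user.name}},
# with optional whitespace inside the braces (an assumption).
VARIABLE_PATTERN = re.compile(r"\{\{\s*([\w.]+)\s*\}\}")


def find_variables(prompt: str) -> set[str]:
    """Return every variable referenced in the prompt template."""
    return set(VARIABLE_PATTERN.findall(prompt))


def missing_columns(prompt: str, columns: set[str]) -> set[str]:
    """Return variables whose first path segment is not a dataset column."""
    return {
        variable
        for variable in find_variables(prompt)
        if variable.split(".")[0] not in columns
    }


prompt = "Answer the question: {{user_question}} in a {{tone}} tone."
columns = {"user_question", "expected_answer"}

# "tone" has no matching column, so it is reported as missing.
print(missing_columns(prompt, columns))
```

An empty result means every variable in the prompt has a corresponding dataset column.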

Once you are ready to run the experiment, select a dataset next to the Run button and click Run. You will then see the LLM output for each item in the dataset.

Accessing nested JSON dataset fields

If a dataset column contains JSON-formatted content, you can use dot notation in a variable to reference nested values directly. The dotted path (e.g., {{input.user.name}}) identifies a nested field, so only that value is extracted from the structured object. This makes it easier to work with deeply nested data without manual flattening or custom parsing.

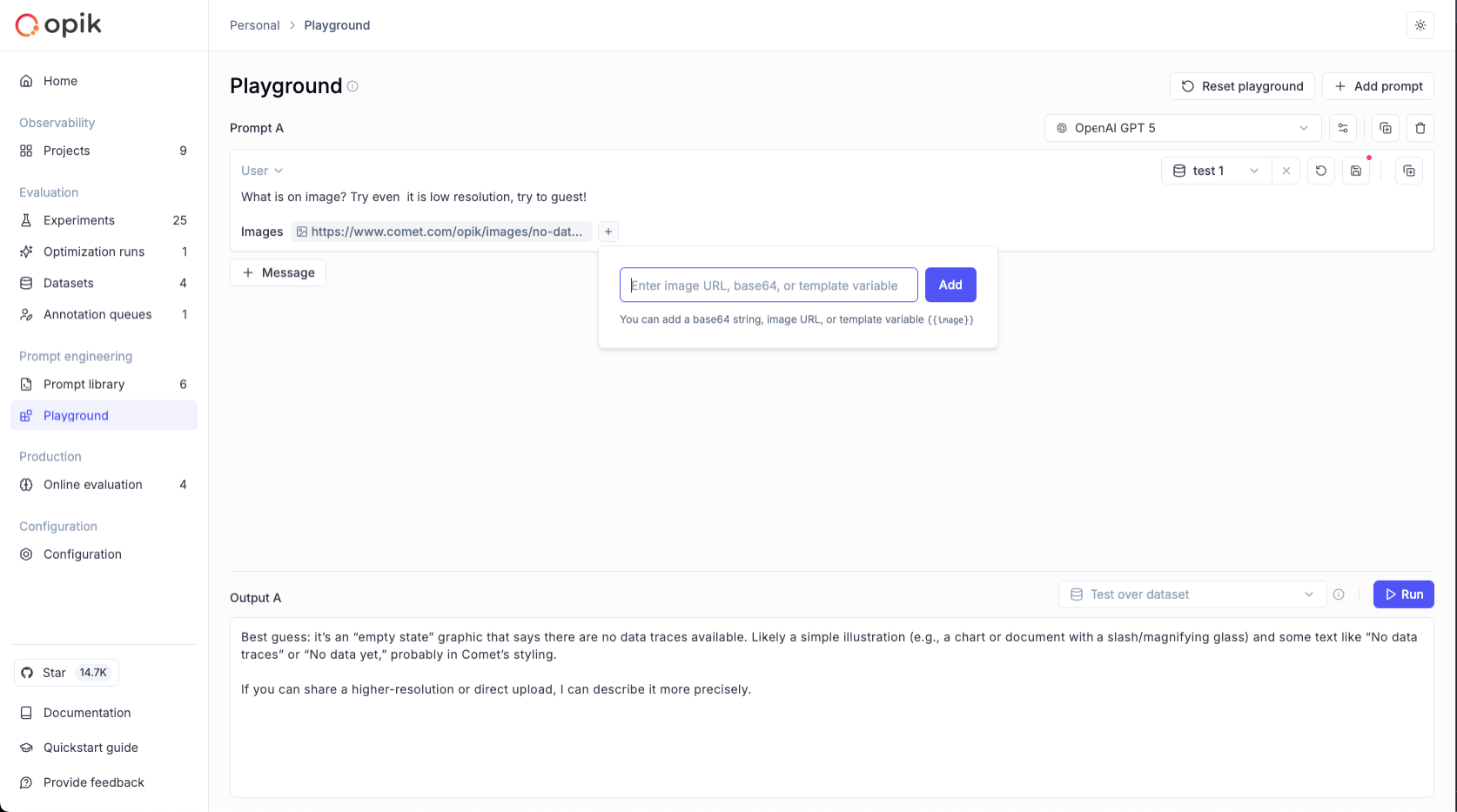
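To illustrate what a dotted path such as input.user.name refers to, here is a small sketch of the lookup in plain Python. It is an illustration of the idea only and does not reflect how Opik resolves paths internally; `resolve_path` and the sample dataset item are hypothetical.

```python
import json
from typing import Any


def resolve_path(item: dict[str, Any], path: str) -> Any:
    """Walk a dotted path through a dataset item, one key at a time."""
    current: Any = item
    for key in path.split("."):
        # A column may hold JSON as a string; parse it before descending.
        if isinstance(current, str):
            current = json.loads(current)
        current = current[key]
    return current


# Hypothetical dataset item whose "input" column holds JSON text.
dataset_item = {"input": '{"user": {"name": "Ada", "plan": "pro"}}'}

print(resolve_path(dataset_item, "input.user.name"))  # Ada
```

The first path segment names the dataset column, and each remaining segment descends one level into the JSON object.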

Using images in the playground

The playground supports multimodal prompts with images when using vision-capable models. You can add images in two ways:

Adding images directly in messages

You can add images directly to your prompt messages through the playground UI.

Internal representation: When you add an image through the UI, Opik internally stores it with <<<image>>><</image>>> wrapper tags on a new line in the prompt. This internal format is not visible in the UI but ensures proper serialization and processing of multimodal content.

Using images from datasets

When evaluating prompts with datasets, you can reference image columns using the standard Mustache syntax, for example {{product_image}}, where product_image is a column in your dataset containing image data.

Supported image formats:

- Image URL

- Base64 encoded image

When using images in prompts, ensure you select a vision-capable model. Opik automatically detects which models support vision based on provider capabilities.
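As a rough sketch of preparing dataset items in the two supported formats, the Python below builds one item with an image URL and one with a Base64-encoded image. The URL, the `encode_image` helper, and the sample bytes are made up for illustration. Whether Opik expects a raw Base64 string or a data URI prefix is not stated here, so the raw string is an assumption.

```python
import base64


def encode_image(image_bytes: bytes) -> str:
    """Encode raw image bytes as a Base64 ASCII string."""
    return base64.b64encode(image_bytes).decode("ascii")


# The PNG signature bytes stand in for a real image file here.
# For a file on disk, use pathlib.Path("image.png").read_bytes() instead.
sample_png_bytes = b"\x89PNG\r\n\x1a\n"

# Each item uses the product_image column from the example above.
items = [
    {"product_image": "https://example.com/shoe.png"},    # image URL
    {"product_image": encode_image(sample_png_bytes)},    # Base64 string
]

for item in items:
    print(item["product_image"])
```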