Feedback Definitions

Feedback definitions allow you to create custom labels to systematically collect and analyze structured feedback on your LLM outputs. This page explains how to create and manage feedback definitions within Opik.

Overview

Feedback definitions enable you to:

- Create custom labels to evaluate LLM outputs

- Collect structured feedback using predefined categories

- Track quality over time with consistent evaluation criteria

- Filter and analyze feedback data across your experiments

Managing Feedback Definitions

Viewing Existing Definitions

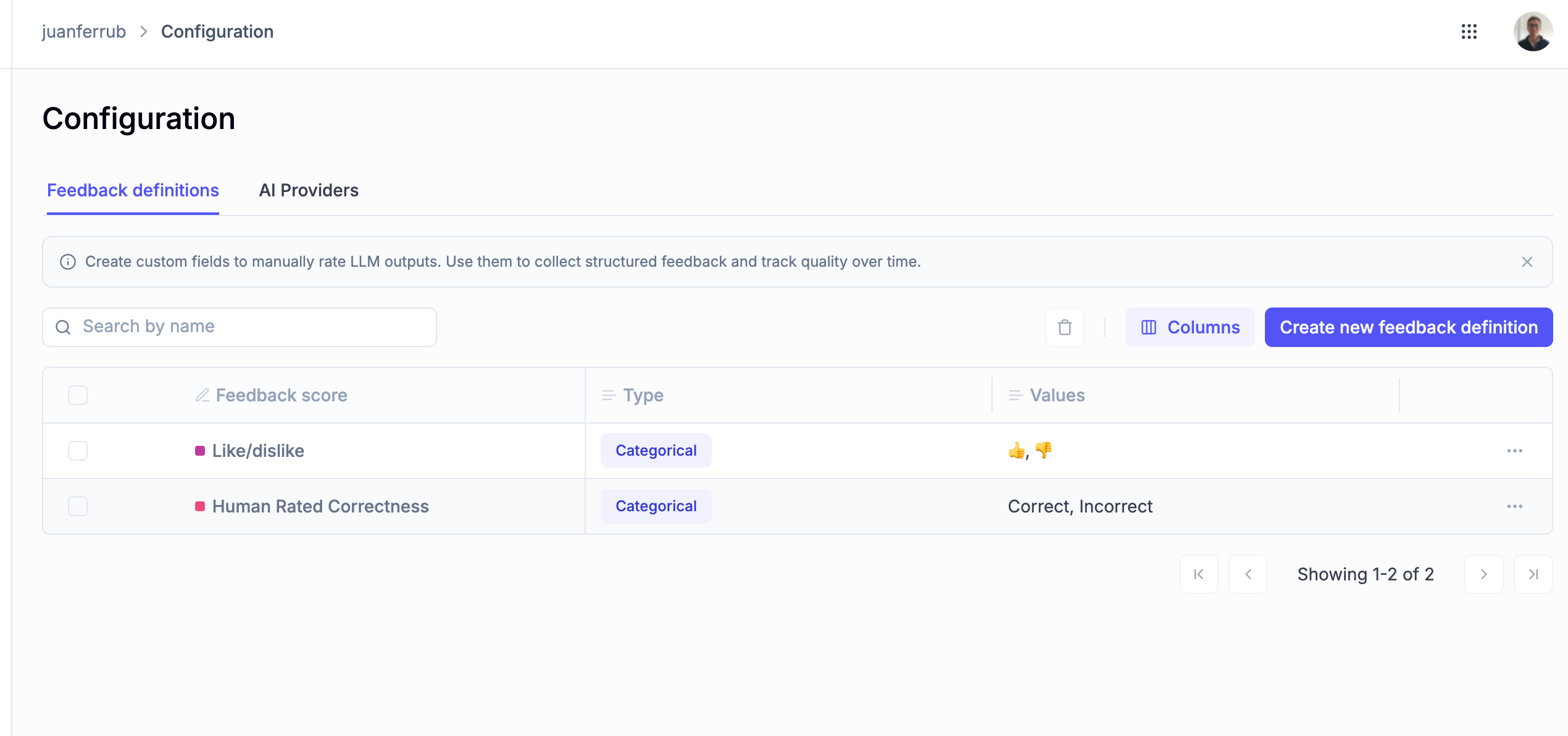

The Feedback Definitions page displays a table of all configured feedback types with the following columns:

- Feedback score: The name of the feedback definition

- Type: The data type of the feedback (Categorical or Numerical)

- Values: Possible values that can be assigned when providing feedback

Creating a New Feedback Definition

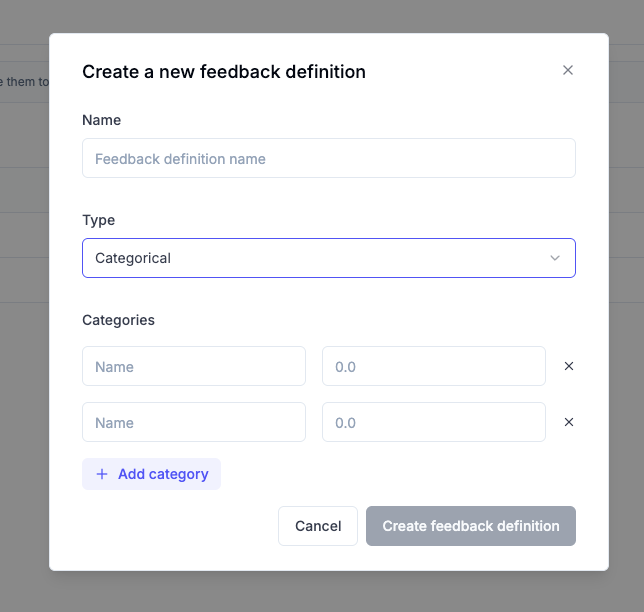

To create a new feedback definition:

- Click the Create new feedback definition button in the top-right corner of the Feedback Definitions page.

- In the modal, you will be prompted to provide:

  - Name: A descriptive name for your feedback definition

  - Type: Select the data type (Categorical or Numerical)

  - Values / Range: Depending on the type, either define possible labels (for Categorical) or set a minimum and maximum value (for Numerical)
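The two definition types described above can be pictured as small data structures. The following is a minimal sketch for illustration only, not the Opik SDK or API: the class names `CategoricalDefinition` and `NumericalDefinition`, the `accepts` method, and the "Relevance" example are all hypothetical. It shows how a categorical definition constrains feedback to a fixed set of labels, while a numerical definition constrains it to a range.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class CategoricalDefinition:
    """Hypothetical model of a Categorical feedback definition."""
    name: str
    labels: Tuple[str, ...]  # the possible labels entered in the modal

    def __post_init__(self):
        if not self.labels:
            raise ValueError("a categorical definition needs at least one label")

    def accepts(self, value) -> bool:
        # Feedback is valid only if it is one of the predefined labels.
        return value in self.labels


@dataclass(frozen=True)
class NumericalDefinition:
    """Hypothetical model of a Numerical feedback definition."""
    name: str
    minimum: float
    maximum: float

    def __post_init__(self):
        if self.minimum > self.maximum:
            raise ValueError("minimum must not exceed maximum")

    def accepts(self, value) -> bool:
        # Reject non-numbers (and booleans, which Python treats as ints).
        if isinstance(value, bool) or not isinstance(value, (int, float)):
            return False
        return self.minimum <= value <= self.maximum


# Usage: one definition of each type.
usefulness = CategoricalDefinition("Usefulness", ("Neutral", "Not useful", "Useful"))
relevance = NumericalDefinition("Relevance", 0.0, 1.0)  # hypothetical example name and range
```

Keeping validation next to the definition means any feedback value can be checked against its definition before it is stored or analyzed.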

Examples of Common Feedback Types

As shown in the interface, common feedback definitions include:

- Thumbs Up / Thumbs Down: Simple binary feedback for quick human review (Values: 👍, 👎)

- Usefulness: Evaluates how helpful the response is (Values: Neutral, Not useful, Useful)

- Knowledge Retrieval: Assesses the accuracy of retrieved information (Values: Bad results, Good results, Unrelated results)

- Hallucination: Identifies when the model invents information (Values: No, Yes)

- Correct: Determines factual accuracy of responses (Values: Bad, Good)

- Empty: Identifies empty or non-responsive outputs (Values: No, n/a)
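Because each categorical definition has a fixed set of values, collected feedback is straightforward to tally. The sketch below is an illustration under stated assumptions, not Opik's implementation: the `EXAMPLE_DEFINITIONS` dictionary simply restates some of the examples listed above, and the `summarize` helper and the sample feedback are hypothetical.

```python
from collections import Counter

# Allowed values per definition, copied from the examples above.
EXAMPLE_DEFINITIONS = {
    "Thumbs Up / Thumbs Down": ["👍", "👎"],
    "Usefulness": ["Neutral", "Not useful", "Useful"],
    "Knowledge Retrieval": ["Bad results", "Good results", "Unrelated results"],
    "Hallucination": ["No", "Yes"],
    "Correct": ["Bad", "Good"],
}


def summarize(definition_name, collected):
    """Count how often each allowed value appears in the collected feedback.

    Raises ValueError if the feedback contains a value the definition
    does not allow, so typos do not silently skew the counts.
    """
    allowed = EXAMPLE_DEFINITIONS[definition_name]
    counts = Counter(collected)
    unknown = set(counts) - set(allowed)
    if unknown:
        raise ValueError(f"values not allowed for {definition_name!r}: {sorted(unknown)}")
    # Include every allowed value, even those with zero occurrences.
    return {value: counts[value] for value in allowed}


# Usage with made-up feedback from five reviewed outputs.
hallucination_summary = summarize("Hallucination", ["No", "No", "Yes", "No", "No"])
```

Reporting zero counts for unused values keeps summaries comparable across experiments, since every summary for a definition has the same keys.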

Best Practices

- Create meaningful, clearly differentiated feedback categories

- Use consistent naming conventions for your feedback definitions

- Limit the number of possible values to make evaluation efficient

- Consider the specific evaluation needs of your use case when designing feedback types

Using Feedback Definitions

Once created, feedback definitions can be used to:

- Evaluate outputs in experiments

- Build custom evaluations and reports

- Train and fine-tune models based on collected feedback

Additional Resources

- For programmatic access to feedback definitions, see the API Reference

- To learn about creating automated evaluation rules with feedback definitions, see Automation Rules