Here are the most relevant improvements we’ve made since the last release:

🛠️ SDK Improvements

We’ve significantly expanded the capabilities of both our Python and TypeScript SDKs, making it easier to integrate Opik into your workflows programmatically.

What’s new:

- Annotation Queue Support - Both Python and TypeScript SDKs now support annotation queues, allowing you to programmatically manage and interact with your annotation workflows

- Dataset Versioning - Full dataset versioning support is now available in both SDKs, giving you better control over your data lifecycle and experiment reproducibility

- Dataset Filtering (TypeScript) - Filter dataset items directly from the TypeScript SDK for more efficient data retrieval

- Opik Query Language (TypeScript) - The TypeScript SDK now supports Opik Query Language (OQL), enabling powerful and flexible querying of your data

- Feedback Scores Logging (TypeScript) - Log feedback scores directly from the TypeScript SDK to track model quality metrics

- Thread Search (TypeScript) - Search through conversation threads programmatically with the new `searchThreads` functionality

👉 Annotation Queues | Opik Query Language | Dataset Versioning

🔌 LLM Provider & Integration Updates

We’ve expanded our LLM provider support and improved integrations to give you more flexibility in your AI workflows.

What’s new:

- Ollama Provider Support - Ollama is now available as a native provider in the Playground, enabling local LLM inference directly within Opik

- Claude Opus 4.6 Support - Full support for Anthropic’s latest Claude Opus 4.6 model

- LangChain Tool Descriptions - Tool descriptions are now automatically extracted and added to tool spans in the LangChain integration, providing better visibility into your agent’s tool usage

✨ Product & UX Improvements

We’ve made several improvements to make your day-to-day workflow smoother and more intuitive.

What’s improved:

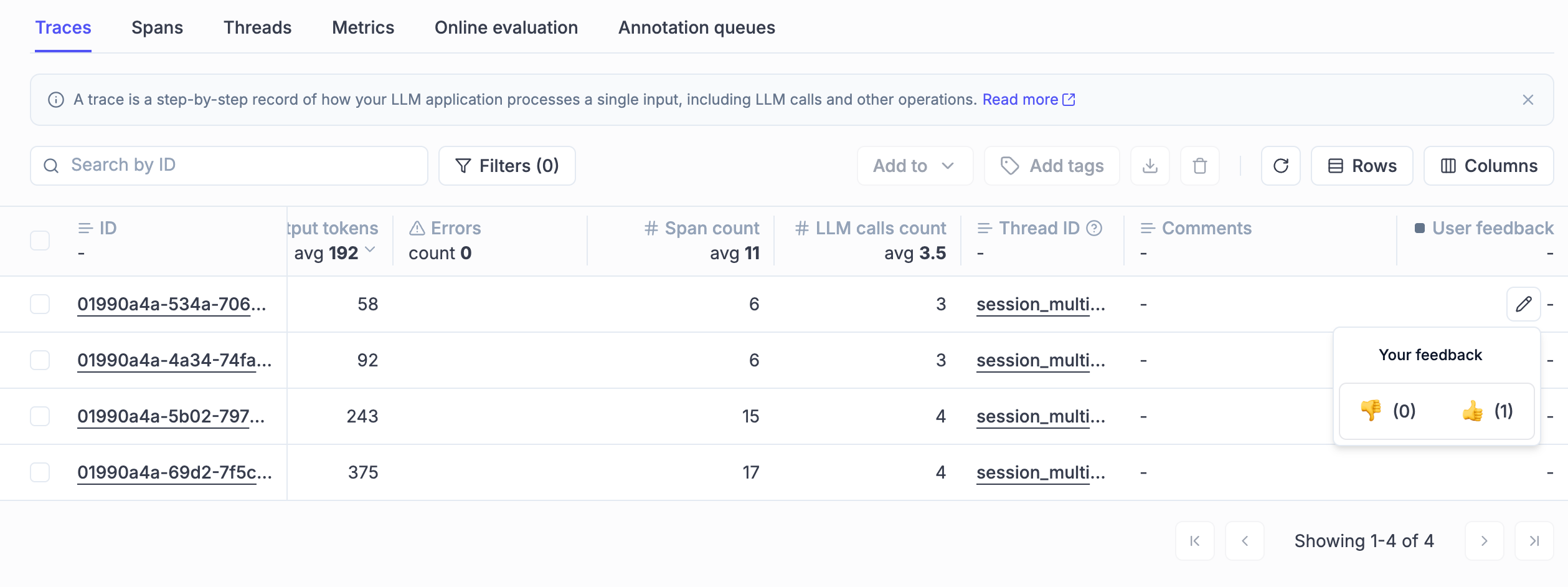

- Unified Logs View - Traces, threads, and spans are now merged into a single Logs tab, providing a streamlined view of all your observability data in one place

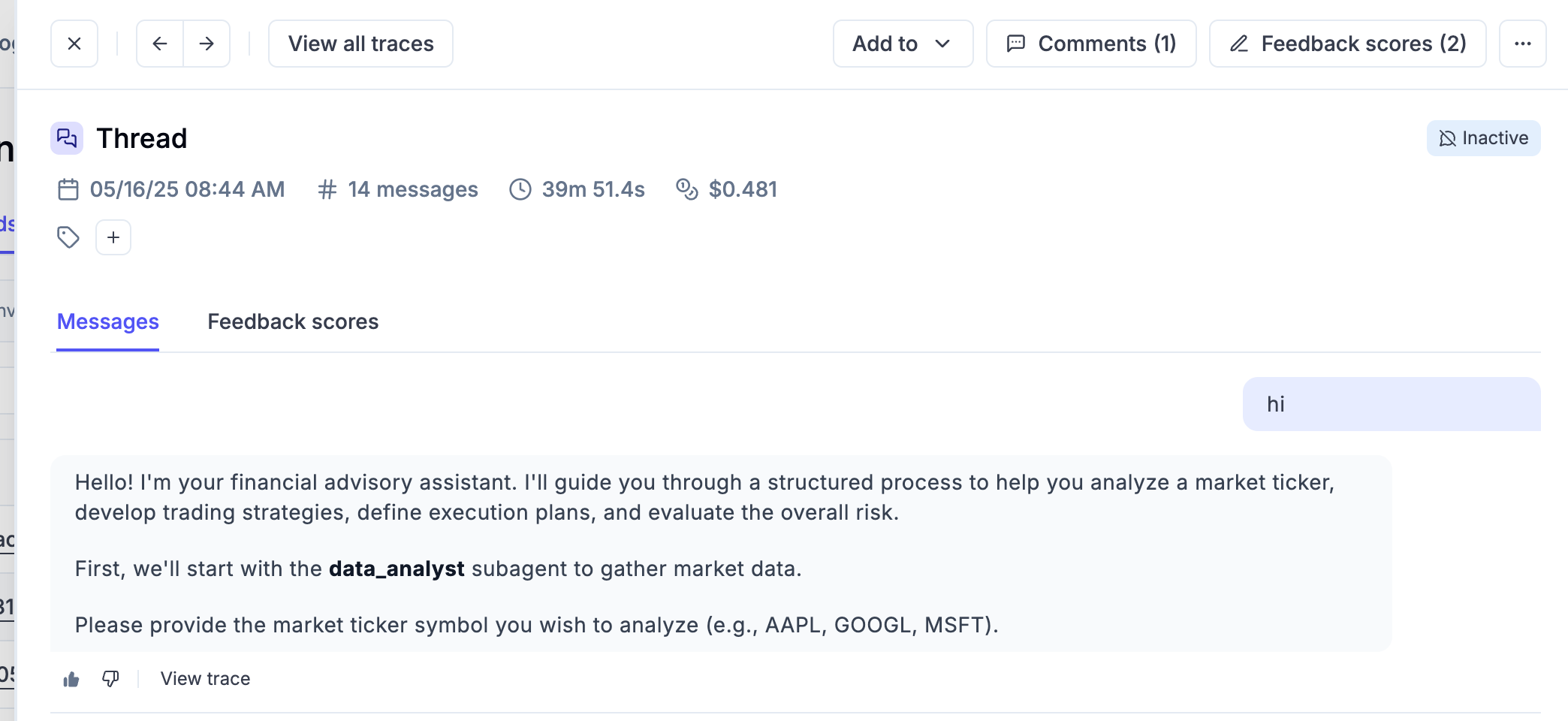

- Image Attachments in Threads - View image attachments directly within the thread view for better context when reviewing conversations

- Inline Feedback Definition Creation - Create new feedback definitions directly from the annotation queue form without leaving your workflow

- Improved Table Loading - Enhanced loading state UX across tables for a smoother experience when working with large datasets

- Expanded Feedback Scores - The feedback scores section in experiment items sidebar is now expanded by default for quicker access

- Organization & Workspace Selectors - New organization and workspace selectors in the sidebar and breadcrumbs make it easier to navigate between different contexts

- Playground Prompt Metadata - Traces generated from the Opik Playground now include prompt metadata for better traceability

And much more! 👉 See full commit log on GitHub

Releases: 1.9.102, 1.9.103, 1.9.104, 1.10.0, 1.10.1, 1.10.2, 1.10.3, 1.10.4, 1.10.5, 1.10.6, 1.10.7, 1.10.8, 1.10.9, 1.10.10

Here are the most relevant improvements we’ve made since the last release:

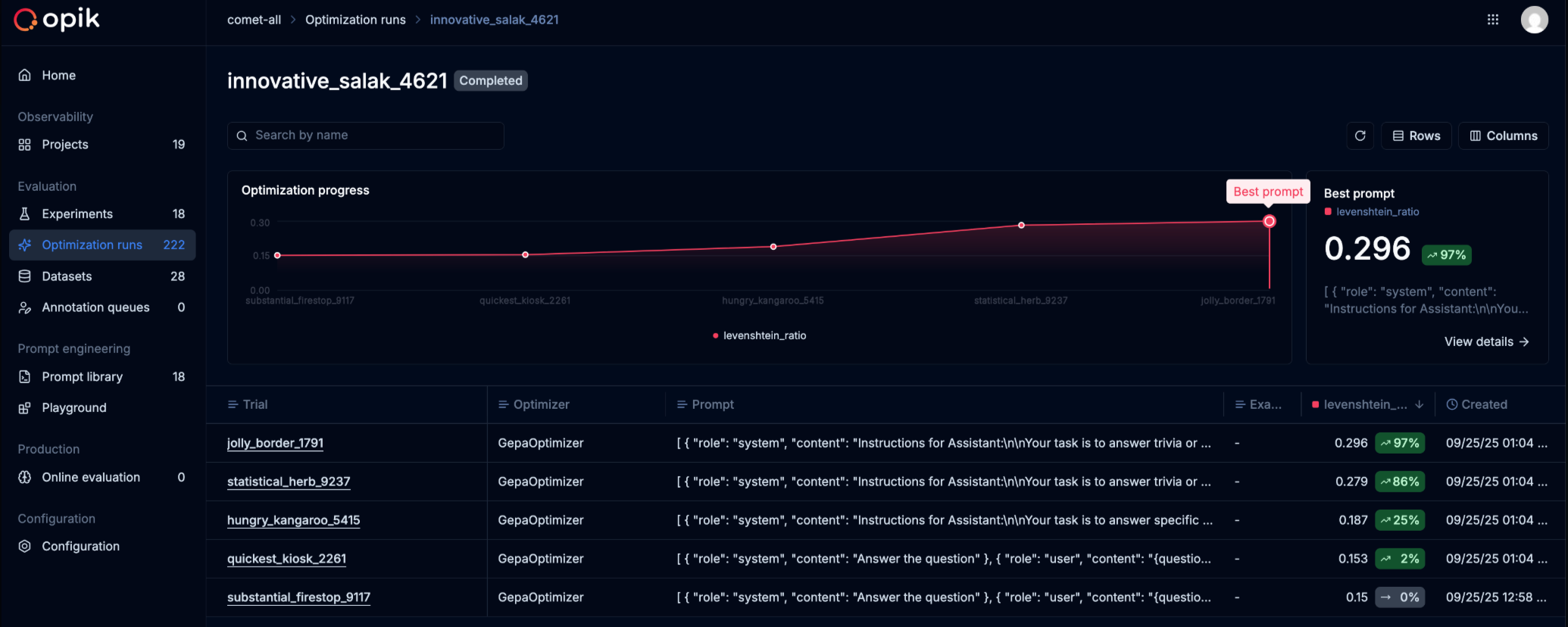

🚀 Optimization Studio

We’re excited to introduce Optimization Studio — a powerful new way to improve your prompts without writing code. Bring a prompt, define what “good” looks like, and Opik tests variations to find a better version you can ship with confidence.

What’s new:

- No-code prompt optimization - Optimization Studio helps you improve prompts directly from the Opik UI. You see scores and examples, not just a hunch, shortening the loop from idea to evidence

- Algorithm selection - Choose how Opik searches for better prompts: GEPA works well for single-turn prompts and quick improvements, while HRPO is better when you need deeper analysis of why a prompt fails

- Flexible metrics - Define how Opik should score each prompt variation. Use Equals for strict matching when you have a single correct answer, or G-Eval when answers can vary and you want a model to grade quality

- Visual progress tracking - Monitor your optimization runs with real-time progress charts showing the best score so far and results for each trial

- Trials comparison - The Trials tab lets you compare prompt variations and scores side-by-side, with the ability to drill down into individual evaluated items

- Rerun & compare - Easily rerun the same setup, cancel a run to change inputs, or select multiple runs to compare outcomes

For teams that prefer a programmatic workflow, we’ve also released Opik Optimizer SDK v3 with improved algorithms, better performance, and more intuitive APIs.

👉 Optimization Studio Documentation

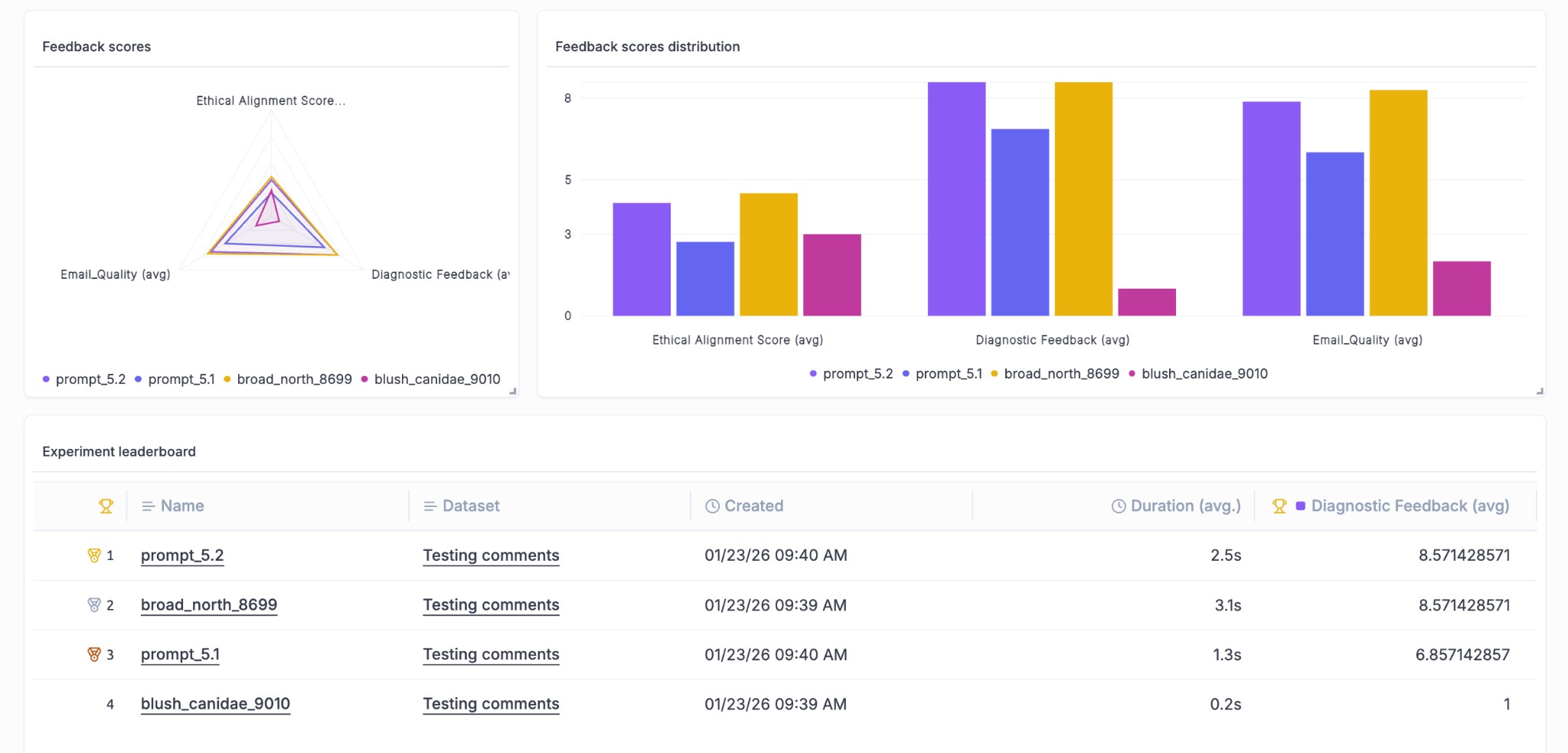

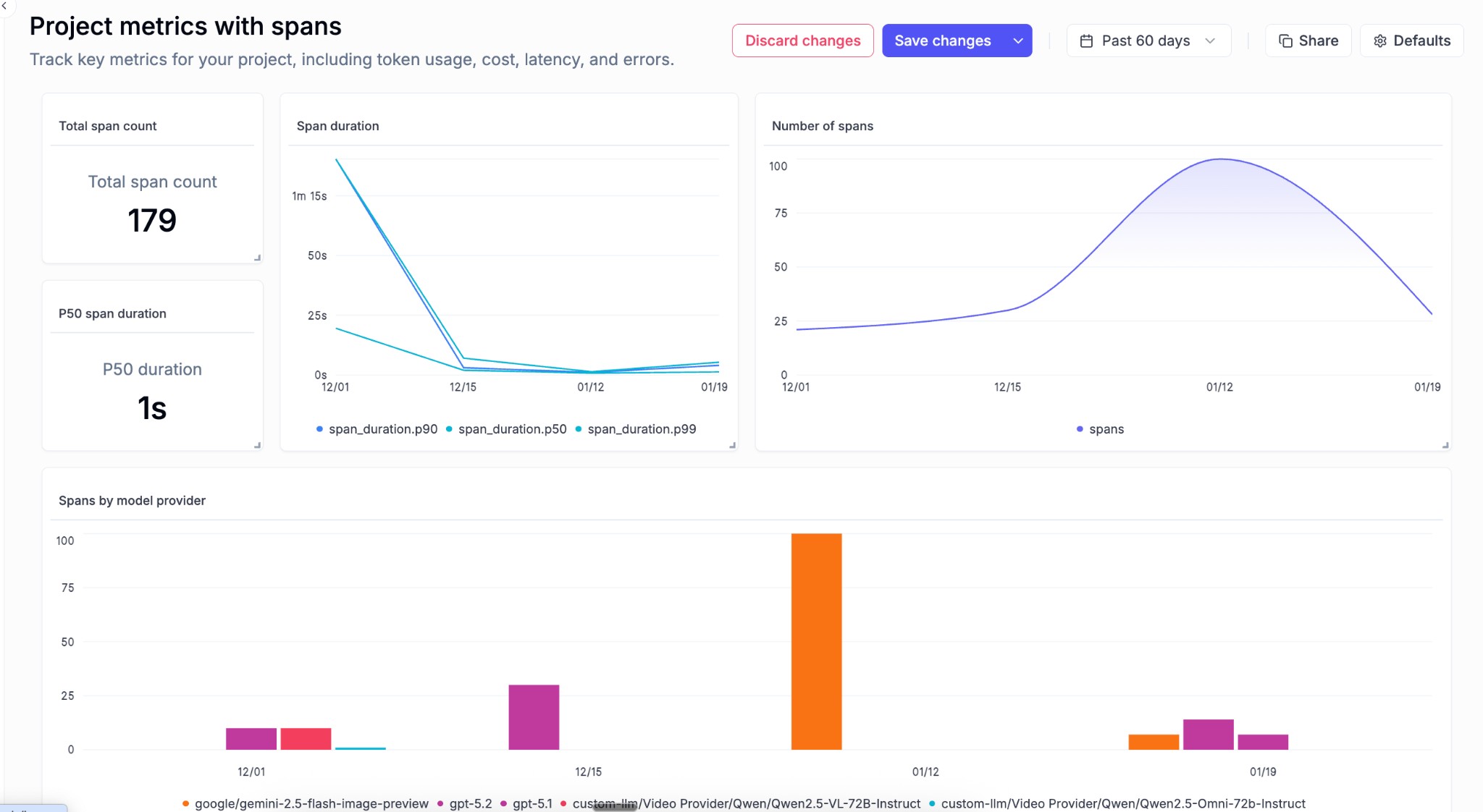

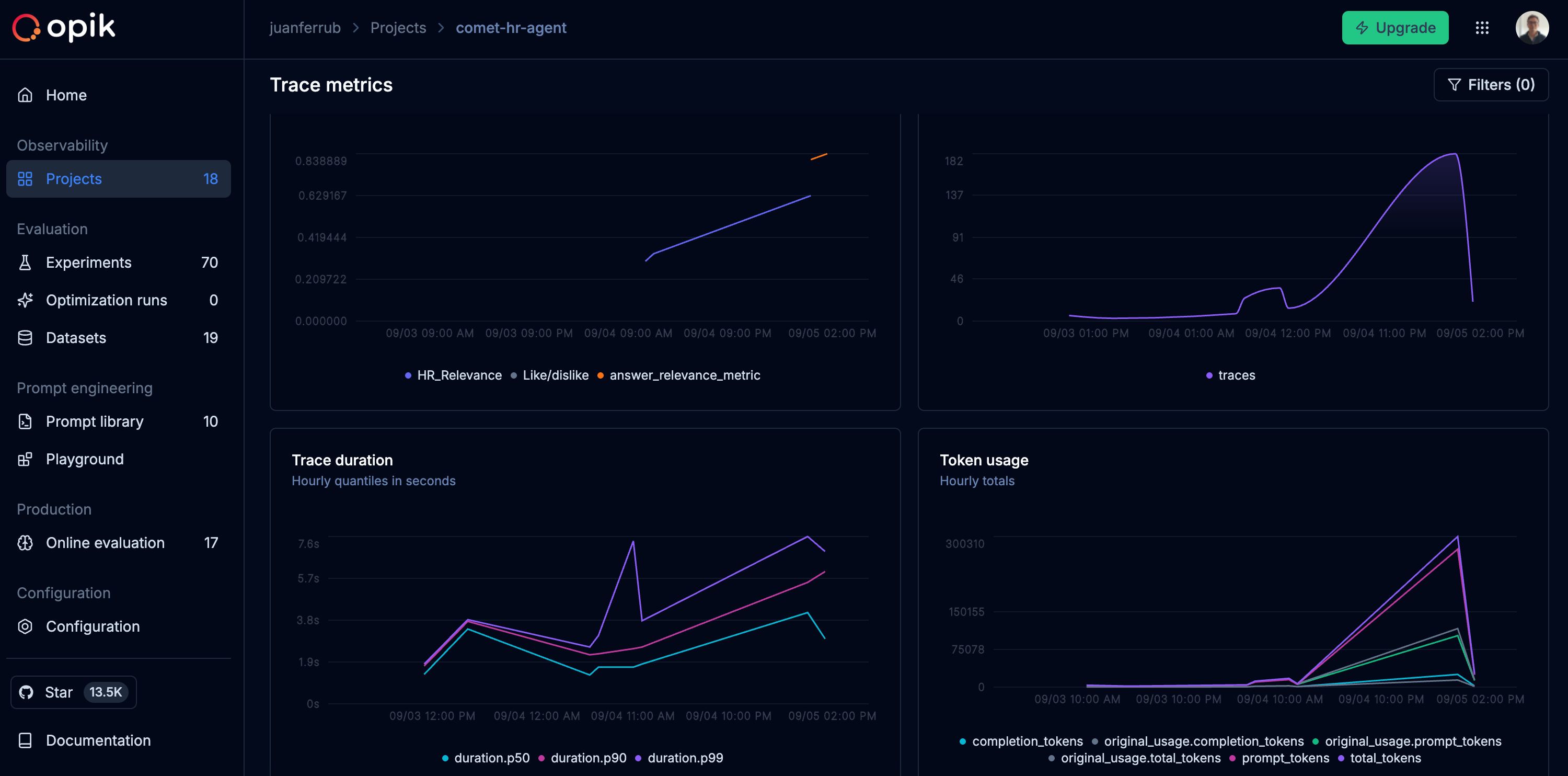

📊 Dashboard Improvements

We’ve enhanced the dashboard with new widgets and visualization capabilities to help you track and compare experiments more effectively.

What’s new:

- Experiment Leaderboard Widget - A new leaderboard widget lets you rank and compare experiments at a glance directly from your dashboard, making it easier to identify your best-performing configurations

- Group By for Metrics Widget - The project metrics widget now supports grouping, allowing you to slice and dice your metrics data in more meaningful ways

- Span-level Metrics Charts - New charts provide visibility into span-level metrics, giving you deeper insights into the performance of individual components in your traces

🎬 Video Generation Support

We’ve added support for the latest video generation models, enabling you to track and log video outputs from your AI applications.

What’s new:

- OpenAI SORA Integration - Log and track video generation outputs from OpenAI’s SORA model directly in Opik

- Google Veo Integration - Full support for Google’s Veo video generation API, including automatic logging of video outputs and metadata

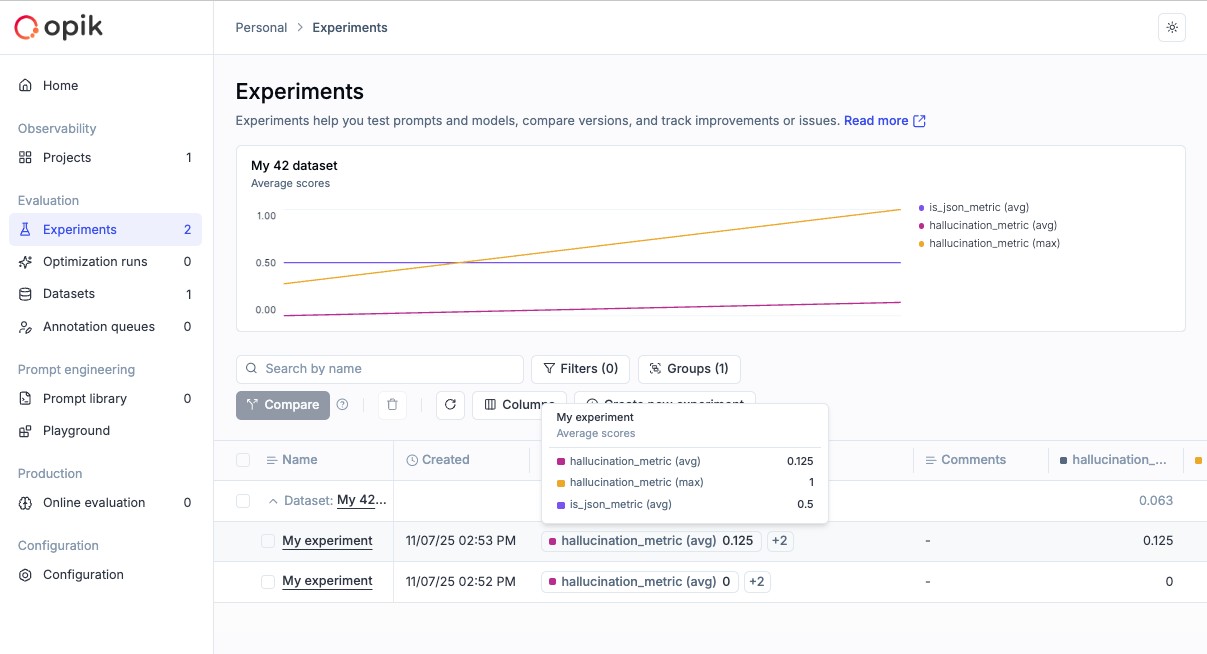

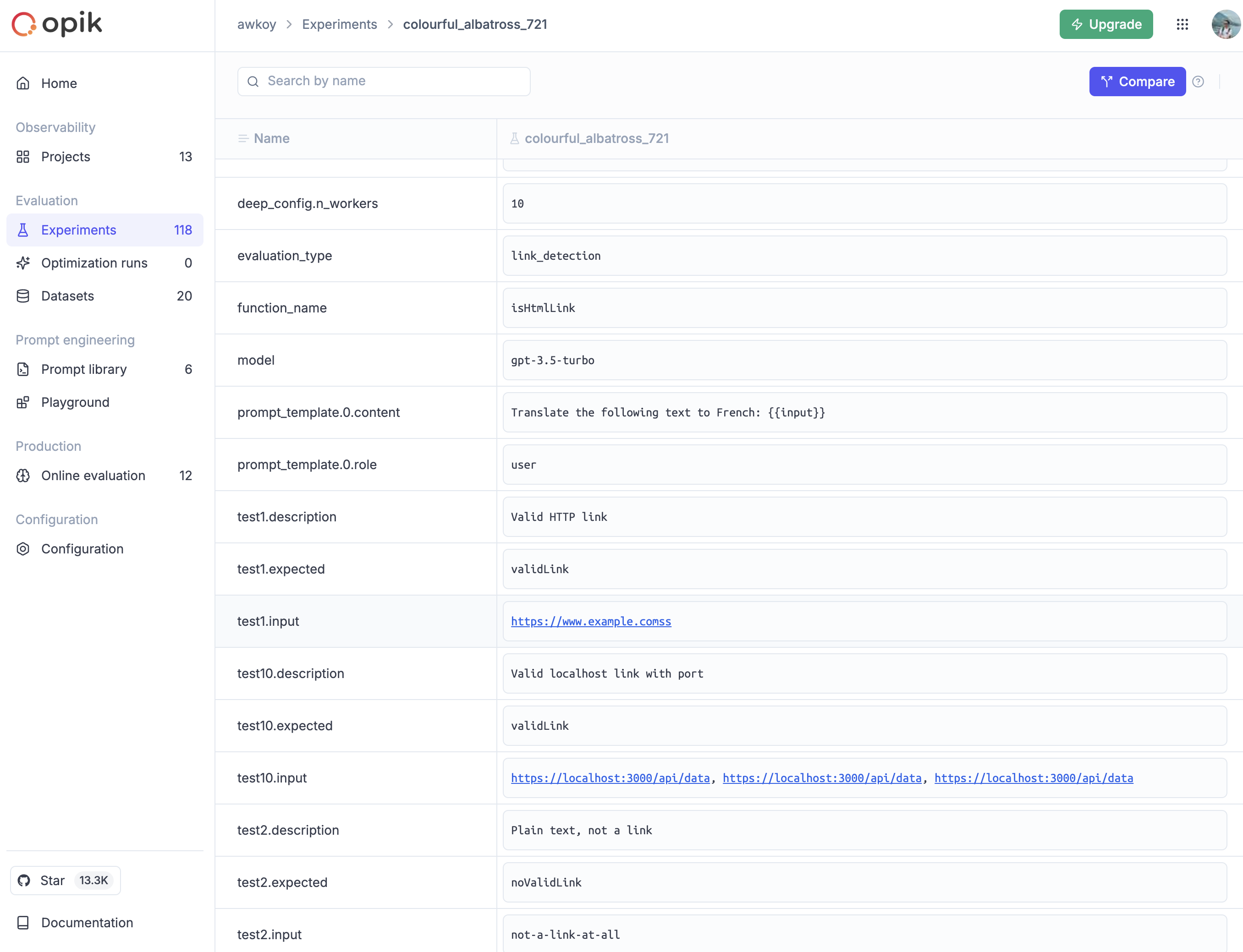

🧪 Experiment Management

We’ve made it easier to organize and navigate your experiments with new filtering and tagging capabilities.

What’s improved:

- Project Column in Experiments View - Experiments now display their associated project directly in the list view, making it easier to understand context at a glance

- Project Filter & Grouping - Filter and group your experiments by project to quickly find what you’re looking for across large experiment collections

- Experiment Tags - Tags are now rendered on the experiment page, helping you categorize and identify experiments more easily

✨ UI/UX Improvements

We’ve made several improvements to make your day-to-day workflow smoother.

What’s improved:

- Time Formatting Settings - Customize how timestamps are displayed throughout the UI to match your preferred format

- Online Score Rules Defaults - Input and output fields in online score rules are now pre-populated with sensible defaults, reducing setup time

- Dataset Item Navigation - Navigation tags in the experiment item view now link directly to the associated dataset item for easier data exploration

- Annotation Queue Review - You can now review completed annotation queues, making it easier to audit and verify your annotation work

🔌 SDK & Integrations

We’ve improved our SDK integrations with better tracing and performance metrics.

What’s improved:

- Vercel AI SDK Thread Support - Thread ID support for the Vercel AI SDK integration enables better conversation tracking across multi-turn interactions

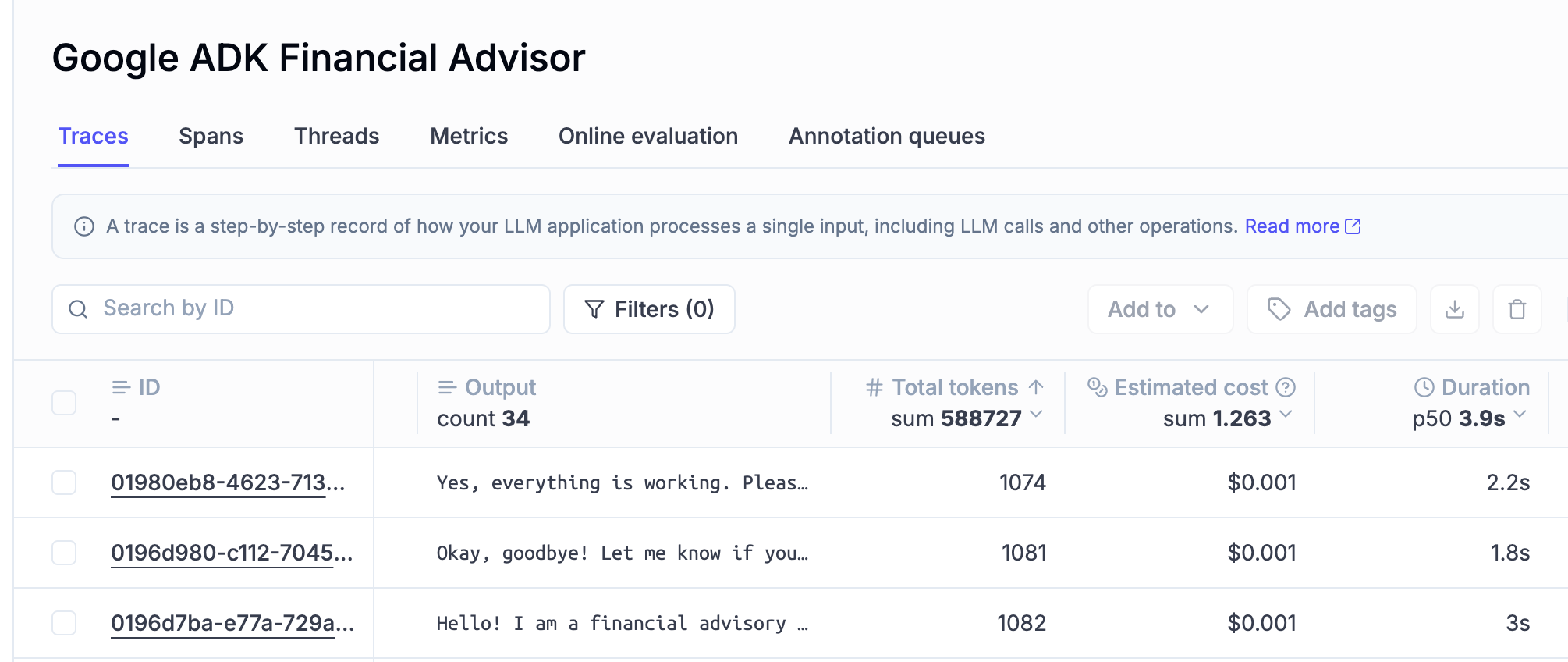

- ADK Distributed Tracing - Added distributed trace headers support to the ADK integration for improved observability in distributed systems

- Time-to-First-Token (TTFT) - The ADK integration now captures TTFT metrics, giving you visibility into response latency for streaming applications

And much more! 👉 See full commit log on GitHub

Releases: 1.9.79, 1.9.80, 1.9.81, 1.9.82, 1.9.83, 1.9.84, 1.9.85, 1.9.86, 1.9.87, 1.9.88, 1.9.89, 1.9.90, 1.9.91, 1.9.92, 1.9.95, 1.9.96, 1.9.97, 1.9.98, 1.9.99, 1.9.100, 1.9.101

Here are the most relevant improvements we’ve made since the last release:

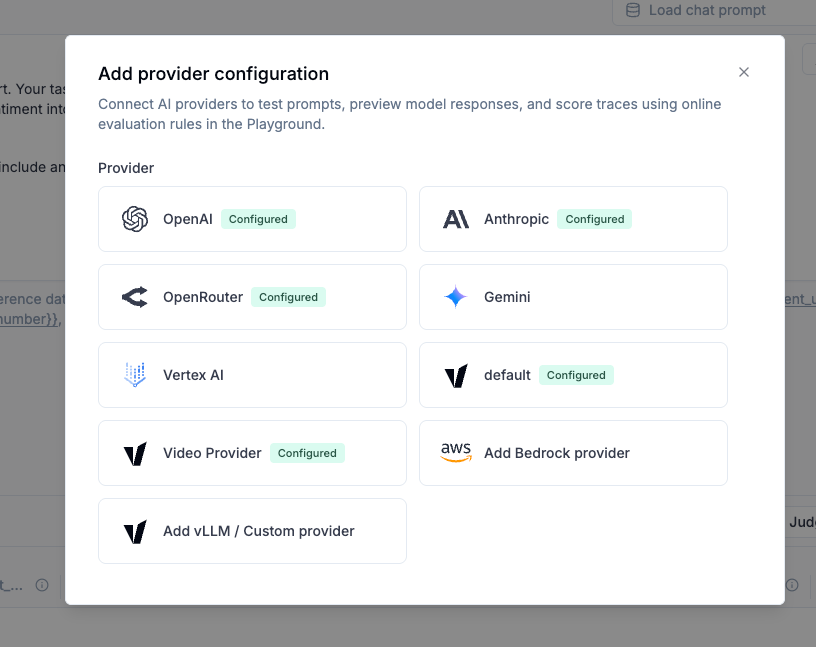

🔌 Playground & Provider Enhancements

We’ve expanded the Playground with new provider support and enhanced functionality to make prompt experimentation more powerful.

What’s new:

- Display Metric Results in Output - Playground output cells now display metric results directly, making it easier to evaluate prompt performance at a glance

- Model Selector for OpikAI Features - Easily select which model powers the Prompt Generator and Prompt Improver features

- Native AWS Bedrock Integration - Bedrock is now available as a native provider in the Playground, giving you direct access to Amazon’s models without additional configuration

- Gemini 3 Flash Support - Added support for Gemini 3 Flash in both the Playground and online scoring, expanding your model options for fast, cost-effective evaluations

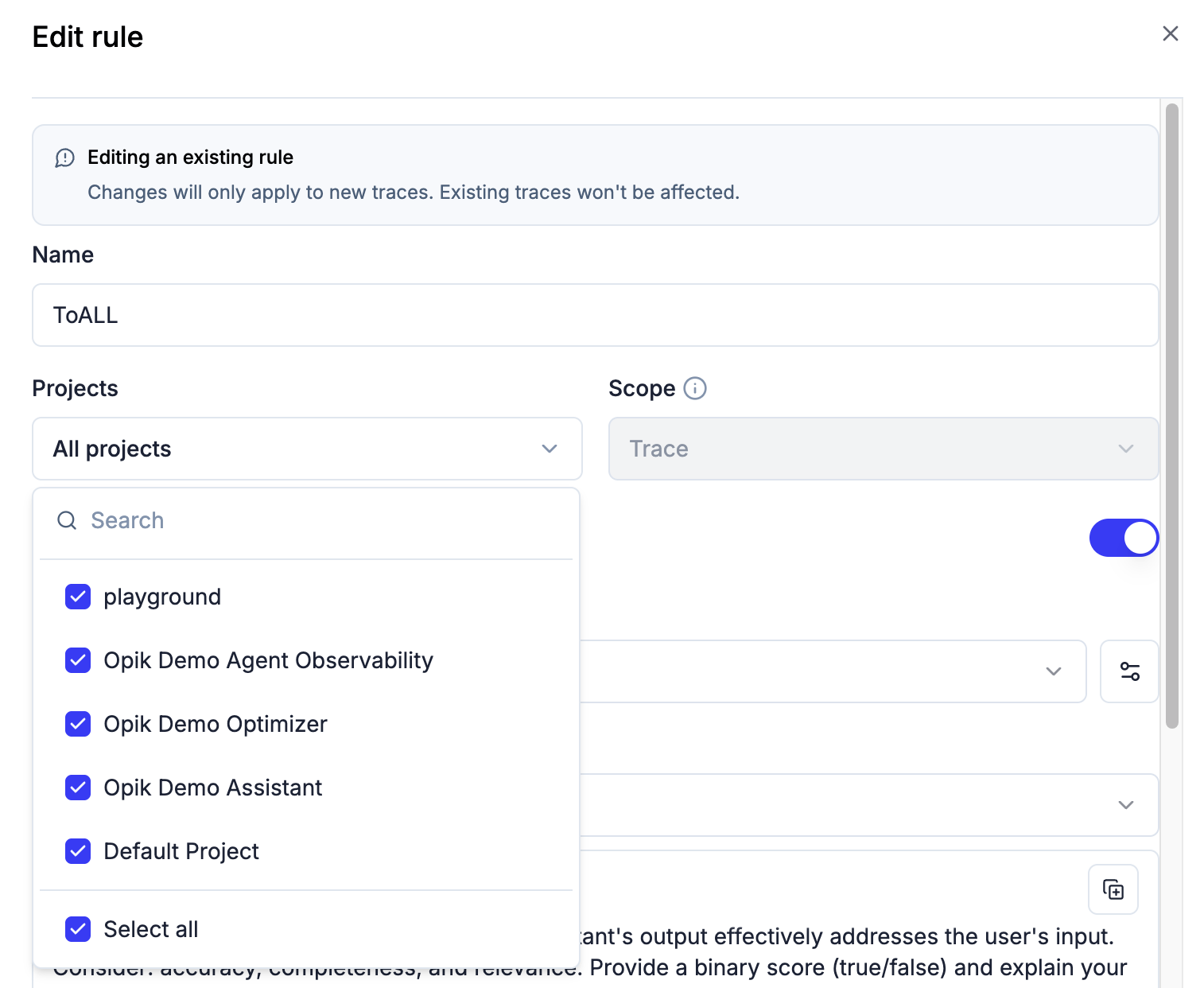

🧪 Online Evaluation & Scoring

We’ve made online evaluation more flexible and easier to manage across your projects.

What’s improved:

- Multi-Project Evaluation Rules - Online evaluation rules can now be applied across multiple projects, reducing duplication and simplifying rule management

- Clone Score Rules - Quickly duplicate existing online score rules to create variations without starting from scratch

🎨 UI & UX Enhancements

We’ve refined the user experience across the platform with improved responsiveness and dashboard polish.

What’s improved:

- Mobile Responsiveness - Better support for mobile devices when logging traces

- Dashboard Enhancements - Unified widget editor design, dashboard count in sidebar, and various UX improvements to the dashboard experience

📦 SDK Improvements

We’ve updated our SDKs with new capabilities and modernized dependencies.

What’s new:

- Python 3.9 End of Life - Python 3.9 support has been retired as it reached end-of-life. Please upgrade to Python 3.10+

- Experiment Tags in evaluate() - You can now add tags to experiments directly when calling the `evaluate()` method

- Vercel AI SDK v6 - Upgraded TypeScript SDK integration from Vercel AI SDK v5 to v6

- Prompt Version Tags (TypeScript) - TypeScript SDK now supports prompt version tags for better prompt management

And much more! 👉 See full commit log on GitHub

Releases: 1.9.57, 1.9.58, 1.9.59, 1.9.60, 1.9.61, 1.9.62, 1.9.63, 1.9.64, 1.9.65, 1.9.66, 1.9.67, 1.9.68, 1.9.69, 1.9.70, 1.9.71, 1.9.72, 1.9.73, 1.9.74, 1.9.75, 1.9.76, 1.9.77, 1.9.78

Here are the most relevant improvements we’ve made since the last release:

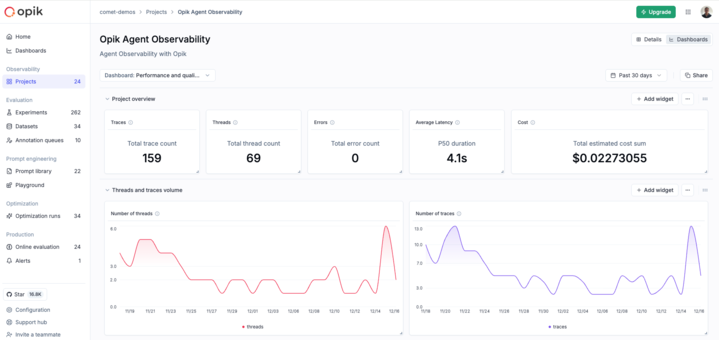

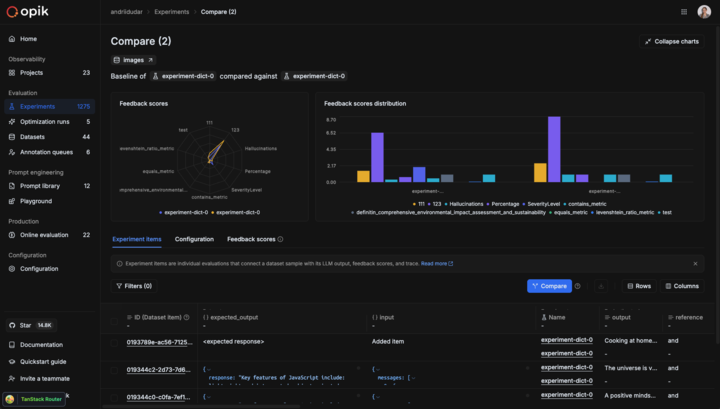

📊 Custom Dashboards (Beta)

Custom Dashboards are now live! 🎉

Our new dashboards engine lets you build fully customizable views to track everything from token usage and cost to latency and quality across projects and experiments.

📍 Where to find them?

Dashboards are available in three places inside Opik:

- Dashboards page – create and manage all dashboards from the sidebar

- Project page – view project-specific metrics under the Dashboards tab

- Experiment comparison page – visualize and compare experiment results

🧩 Built-in templates to get started fast

We ship dashboards with zero-setup pre-built templates, including Performance Overview, Experiment Insights and Project Operational Metrics.

Templates are fully editable and can be saved as new dashboards once customized.

🧱 Flexible widgets

Dashboards support multiple widget types:

- Project Metrics (time-series and bar charts for project data)

- Project Statistics (KPI number cards)

- Experiment Metrics (line, bar, radar charts for experiment data)

- Markdown (notes, documentation, context)

Widgets support filtering, grouping, resizing, drag-and-drop layouts, and global date range controls.

🧪 Improved Evaluation Capabilities

Span-Level Metrics

Span-level metrics are now live in Opik, supporting both LLM-as-a-Judge and code-based metrics!

Teams can now easily evaluate the quality of specific steps inside their agent flows. Instead of assessing only the final output or top-level trace, you can attach metrics directly to individual call spans or segments of an agent’s trajectory.

This unlocks dramatically finer-grained visibility and control. For example:

- Score critical decision points inside task-oriented or tool-using agents

- Measure the performance of sub-tasks independently to pinpoint bottlenecks or regressions

- Compare step-by-step agent behavior across runs, experiments, or versions

New: Support for accessing the full tree, subtrees, or leaf nodes in Online Scores

This update enhances the online scoring engine to support referencing entire root objects (input, output, metadata) in LLM-as-Judge and code-based evaluators, not just nested fields within them.

Online Scoring previously only exposed leaf-level values from an LLM’s structured output. With this update, Opik now supports rendering any subtree: from individual nodes to entire nested structures.
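As a rough illustration of the difference between leaf-level and subtree access, the sketch below resolves a dot-separated path against a nested trace object. The `resolve_path` helper, the path syntax, and the sample trace are assumptions made for this example; they are not Opik's variable-mapping implementation.

```python
from typing import Any


def resolve_path(root: dict[str, Any], path: str) -> Any:
    """Return the node at a dot-separated path; an empty path returns the whole root."""
    node: Any = root
    if not path:
        return node
    for key in path.split("."):
        # Numeric path segments index into lists; everything else is a dict key.
        node = node[int(key)] if isinstance(node, list) else node[key]
    return node


# Hypothetical trace payload, used only to show the three access levels.
trace = {
    "input": {"messages": [{"role": "user", "content": "Hi"}]},
    "output": {"answer": {"text": "Hello", "citations": ["doc-1"]}},
}

whole_output = resolve_path(trace, "output")            # entire root object
answer_subtree = resolve_path(trace, "output.answer")   # nested subtree
leaf_value = resolve_path(trace, "output.answer.text")  # single leaf
```

The same helper covers all three cases because a subtree is just whatever node the path stops at.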

📝 Tags Support & Metadata Filtering for Prompt Version Management

You can now tag individual prompt versions (not just the prompt!).

This provides a clean, intuitive way to mark best-performing versions, manage lifecycles, and integrate version selection into agent deployments.

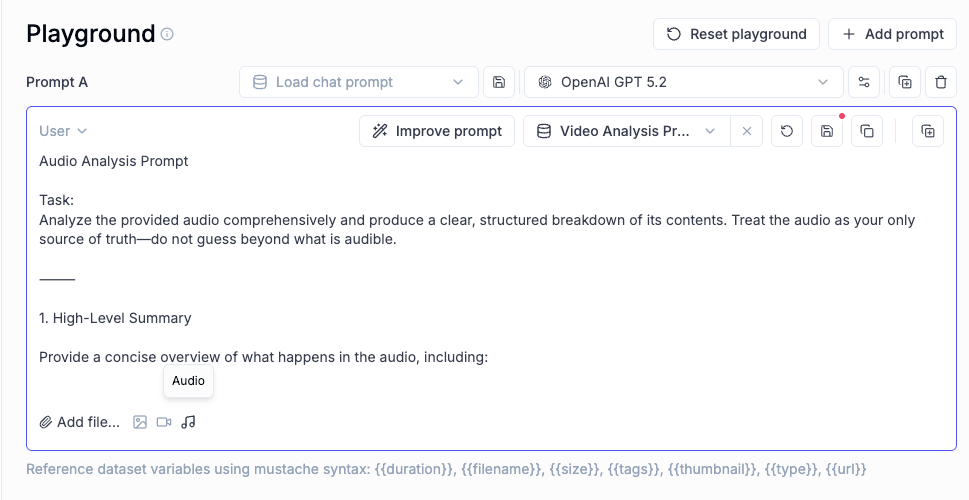

🎥 More Multimodal Support: now Audio!

You can now pass audio as part of your prompts, both in the Playground and in online evals, for advanced multimodal scenarios.

📈 More Insights!

Thread-level insights

Added thread-level metrics and statistics to the threads table, giving users aggregated insights into their full multi-turn agentic interactions:

- Duration percentiles (p50, p90, p99) and averages

- Token usage statistics (total, prompt, completion tokens)

- Cost metrics and aggregations

- Filtering support by project, time range, and custom filters

Experiment insights

Added additional aggregation methods in headers for experiment items.

This new release adds percentile aggregation methods (p50, p90, p99) for all numerical metrics in experiment items table headers, extending the existing pattern used for duration to cost, feedback scores, and total tokens.
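For readers unfamiliar with percentile aggregation, here is a minimal sketch of how p50, p90, and p99 can be computed for a numeric column. It uses the nearest-rank method, which is an assumption chosen for simplicity; the release notes do not say which interpolation method Opik uses, so values shown in the UI may differ slightly.

```python
import math
from typing import Sequence


def percentile(values: Sequence[float], p: int) -> float:
    """Nearest-rank percentile: the smallest value with at least p% of the data at or below it."""
    if not values:
        raise ValueError("values must not be empty")
    ordered = sorted(values)
    rank = max(1, math.ceil(p * len(ordered) / 100))
    return ordered[rank - 1]


def summarize(values: Sequence[float]) -> dict[str, float]:
    """Aggregate one numeric column into the three percentiles named above."""
    return {f"p{p}": percentile(values, p) for p in (50, 90, 99)}


# Hypothetical per-item token counts for one experiment.
total_tokens = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]
summary = summarize(total_tokens)
```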

🔌 Integrations

Support for GPT-5.2 in Playground and Online Scoring

Added full support for GPT-5.2 models in both the Playground and online scoring for the OpenAI and OpenRouter providers.

Harbor Integration

Added a comprehensive Opik integration for Harbor, a benchmark evaluation framework for autonomous LLM agents. The integration enables observability for agent benchmark evaluations (SWE-bench, LiveCodeBench, Terminal-Bench, etc.).

👉 Harbor Integration Documentation

And much more! 👉 See full commit log on GitHub

Releases: 1.9.41, 1.9.42, 1.9.43, 1.9.44, 1.9.45, 1.9.46, 1.9.47, 1.9.48, 1.9.49, 1.9.50, 1.9.51, 1.9.52, 1.9.53, 1.9.54, 1.9.55, 1.9.56

Here are the most relevant improvements we’ve made since the last release:

📊 Dataset Improvements

We’ve enhanced dataset functionality with several key improvements:

-

Edit Dataset Items - You can now edit dataset items directly from the UI, making it easier to update and refine your evaluation data.

-

Remove Dataset Upload Limit for Self-Hosted - Self-hosted deployments no longer have dataset upload limits, giving you more flexibility for large-scale evaluations.

-

Dataset Item Tagging Support - Added comprehensive tagging support for dataset items, enabling better organization and filtering of your evaluation data.

-

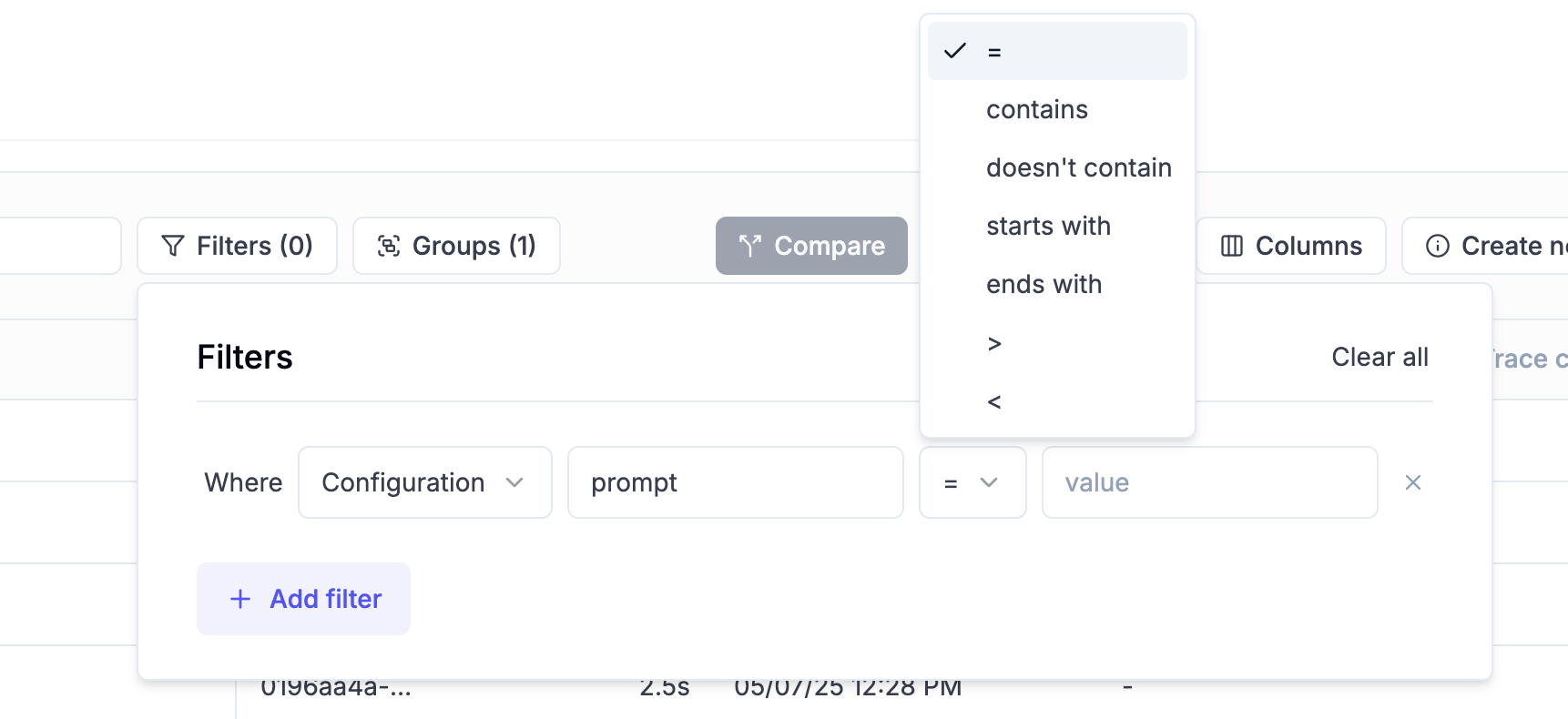

Dataset Filtering Capabilities by Any Column - Filter datasets by any column in both the playground and dataset view, giving you flexible ways to find and work with specific data subsets.

-

Ability to Rename Datasets - Rename datasets directly from the UI, making it easier to organize and manage your evaluation datasets.

📈 Experiment Updates

We’ve made significant improvements to experiment management and analysis:

- Experiment-Level Metrics - Compute experiment-level metrics (as opposed to experiment-item-level metrics) for better insights into your evaluation results. Read more in the experiment-level metrics documentation.

- Rename Experiments & Metadata - Update experiment names and metadata config directly from the dashboard, giving you more control over experiment organization.

- Token & Cost Columns - Token usage and cost are now surfaced in the experiment items table for easy scanning and cost visibility.

🎮 Playground Improvements

We’ve made the Playground more powerful and easier to use for non-technical users:

- Easy Navigation from Playground to Dataset and Metrics - Quick navigation links from the playground to related datasets and metrics, streamlining your workflow.

- Advanced Filtering for Playground Datasets - Filter playground datasets by tags and any other columns, making it easier to find and work with specific dataset items.

- Pagination for the Playground - Added pagination support to handle large datasets more efficiently in the playground.

- Added Experiment Progress Bar in the Playground - Visual progress indicators for running experiments, giving you real-time feedback on experiment status.

- Added Model-Specific Throttling and Concurrency Configs in the Playground - Configure throttling and concurrency settings per model in the playground, giving you fine-grained control over resource usage.

🚨 Enhanced Alerts

We’ve expanded alert capabilities with threshold support:

- Added Threshold Support for Trace and Thread Feedback Scores - Configure thresholds for feedback scores on traces and threads, enabling more precise alerting based on quality metrics.

- Added Threshold to Trace Error Alerts - Set thresholds for trace error alerts to get notified only when error rates exceed your configured limits.

- Trigger Experiment Created Alert from the Playground - Receive alerts when experiments are created directly from the playground.

🤖 Opik Optimizer Updates

Significant enhancements to the Opik Optimizer:

- Cost and Latency Optimization Support - Added support for optimizing both cost and latency metrics simultaneously. Read more in the optimization metrics documentation.

- Training and Validation Dataset Support - Introduced support for training and validation dataset splits, enabling better optimization workflows. Learn more in the dataset documentation.

- Example Scripts for Microsoft Agents and CrewAI - New example scripts demonstrating how to use Opik Optimizer with popular LLM frameworks. Check out the example scripts.

- UI Enhancements and Optimizer Improvements - Several UI enhancements and various improvements to Few Shot, MetaPrompt, and GEPA optimizers for better usability and performance.

🎨 User Experience Enhancements

Improved usability across the platform:

- Added `has_tool_spans` Field to Show Tool Calls in Thread View - Tool calls are now visible in thread views, providing better visibility into agent tool usage.

- Added Export Capability (JSON/CSV) Directly from Trace, Thread, and Span Detail Views - Export data directly from detail views in JSON or CSV format, making it easier to analyze and share your observability data.

🤖 New Models!

Expanded model support:

- Added Support for Gemini 3 Pro, GPT 5.1, OpenRouter Models - Added support for the latest model versions including Gemini 3 Pro, GPT 5.1, and OpenRouter models, giving you access to the newest AI capabilities.

And much more! 👉 See full commit log on GitHub

Releases: 1.9.18, 1.9.19, 1.9.20, 1.9.21, 1.9.22, 1.9.23, 1.9.25, 1.9.26, 1.9.27, 1.9.28, 1.9.29, 1.9.31, 1.9.32, 1.9.33, 1.9.34, 1.9.35, 1.9.36, 1.9.37, 1.9.38, 1.9.39, 1.9.40

Here are the most relevant improvements we’ve made since the last release:

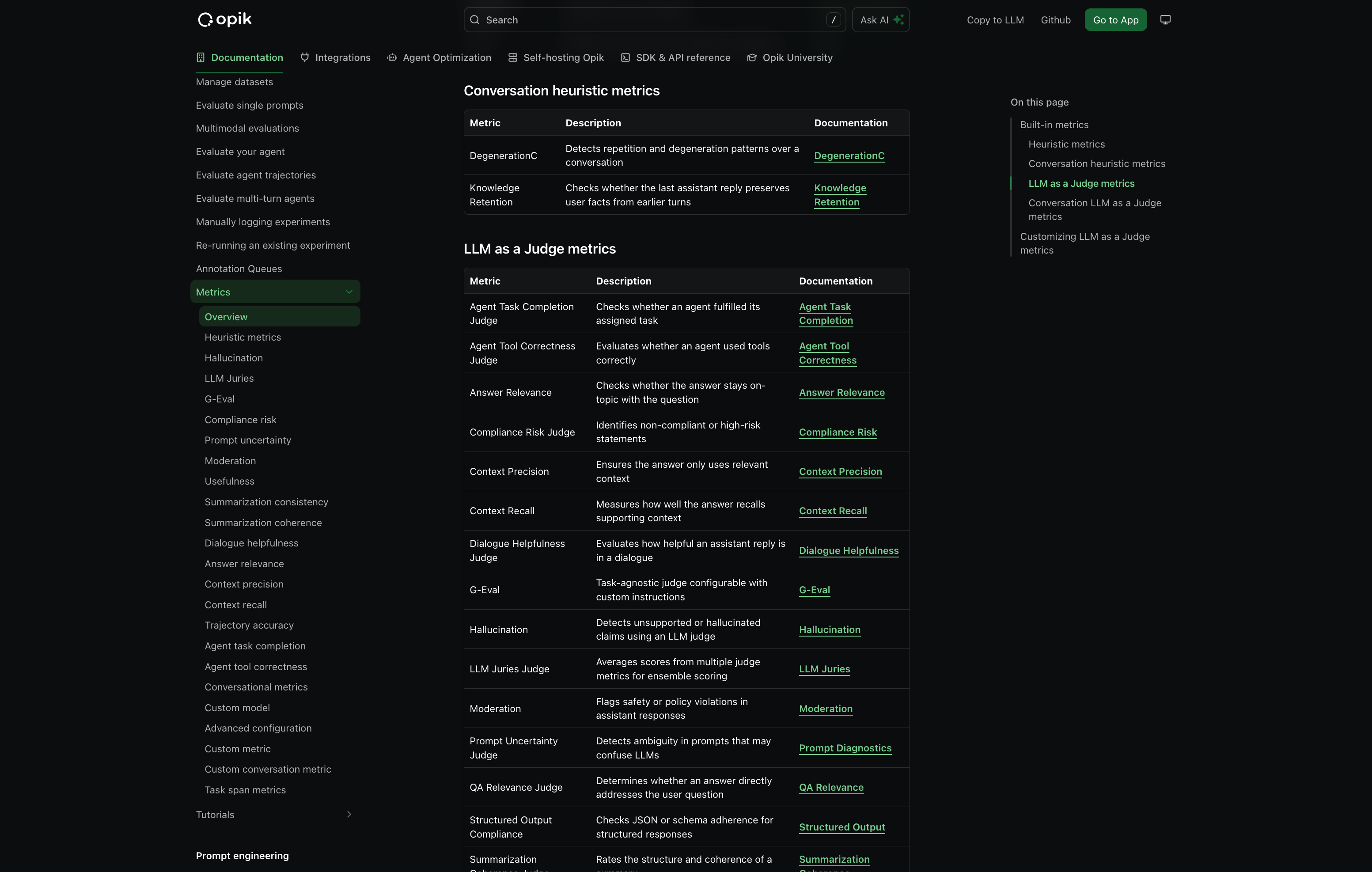

📊 More Metrics!

We have shipped 37 new built-in metrics, faster & more reliable LLM judging, plus robustness fixes.

New Metrics Added - We’ve expanded the evaluation metrics library with a comprehensive set of out-of-the-box metrics including:

- Classic NLP Heuristics - BERTScore, Sentiment analysis, Bias detection, Conversation drift, and more

- Lightweight Heuristics - Fast, non-LLM based metrics perfect for CI/CD pipelines and large-scale evaluations

- LLM-as-a-Judge Presets - More out-of-the-box presets you can use without custom configuration

LLM-as-a-Judge & G-Eval Improvements:

- Compatible with newer models - Now works seamlessly with the latest model versions

- Faster default judge - Default judge is now `gpt-5-nano` for faster, more accurate evals

- LLM Jury support - Aggregate scores across multiple models/judges into a single ensemble score for more reliable evaluations
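To make the LLM Jury idea concrete, the sketch below averages the scores of several judges into one ensemble score. The `jury_score` function and the stand-in judges are hypothetical and do not call any model; Opik's own jury metric may aggregate differently, so treat the plain mean as an assumption.

```python
from statistics import mean
from typing import Callable, Sequence

# A judge maps a model output to a score in [0, 1]. In practice each judge
# would wrap a different LLM; here they are plain functions.
Judge = Callable[[str], float]


def jury_score(output: str, judges: Sequence[Judge]) -> float:
    """Ensemble score: the mean of every judge's individual score."""
    if not judges:
        raise ValueError("at least one judge is required")
    return mean(judge(output) for judge in judges)


# Stand-in judges with fixed behaviour, for illustration only.
def strict_judge(output: str) -> float:
    return 1.0 if output == "Paris" else 0.0


def lenient_judge(output: str) -> float:
    return 1.0 if "paris" in output.lower() else 0.0


def length_judge(output: str) -> float:
    return 0.5


score = jury_score("The capital is Paris.", [strict_judge, lenient_judge, length_judge])
```

Averaging dampens the effect of a single judge that is unusually strict or lenient, which is the reliability gain the bullet above refers to.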

Enhanced Preprocessing:

- Improved English text handling - Better processing of English text to reduce false negatives

- Better emoji handling - Enhanced emoji processing for more accurate evaluations

Robustness Improvements:

- Automatic retries - LLM judge will retry on transient failures to avoid flaky test results

- More reliable evaluation runs - Faster, more consistent evaluation runs for CI and experiments

👉 Access the metrics docs here: Evaluation Metrics Overview

🔒 Anonymizers - PII Information Redaction

We’ve added support for PII (Personally Identifiable Information) redaction before sending data to Opik. This helps you protect sensitive information while still getting the observability insights you need.

With anonymizers, you can:

- Automatically redact PII from traces and spans before they’re sent to Opik

- Configure custom anonymization rules to match your specific privacy requirements

- Maintain compliance with data protection regulations

- Protect sensitive data without losing observability
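The general shape of a redaction rule can be sketched as follows. This is a generic, regex-based example that walks a nested payload and masks email addresses and phone numbers; the `redact` function, the patterns, and the placeholder tokens are assumptions for illustration and are not the Opik anonymizer API (see the Anonymizers docs for the supported configuration).

```python
import re
from typing import Any

# Deliberately simple patterns; real PII detection needs broader coverage.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(value: Any) -> Any:
    """Recursively mask emails and phone numbers in strings, dicts, and lists."""
    if isinstance(value, str):
        value = EMAIL.sub("[EMAIL]", value)
        return PHONE.sub("[PHONE]", value)
    if isinstance(value, dict):
        return {key: redact(item) for key, item in value.items()}
    if isinstance(value, list):
        return [redact(item) for item in value]
    return value  # numbers, booleans, None pass through unchanged


# Hypothetical span payload before it is sent anywhere.
span = {
    "input": "Mail jane@example.com or call +1 555-123-4567",
    "metadata": {"attempt": 3, "notes": ["cc bob@example.org"]},
}
clean_span = redact(span)
```

Running redaction on the client side, before the payload leaves the process, is what keeps the raw values out of the observability backend.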

👉 Read the full docs: Anonymizers

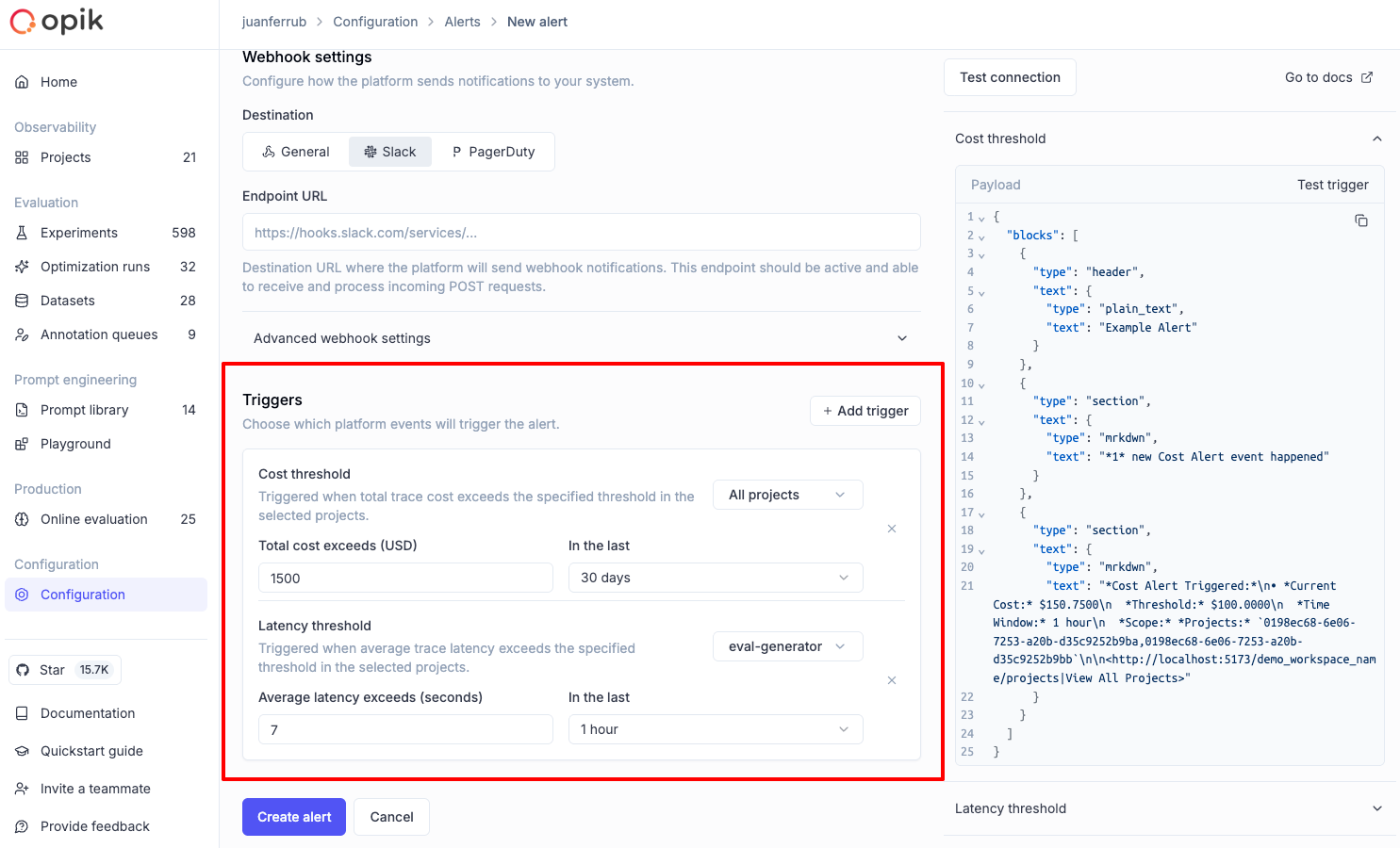

🚨 New Alert Types

We’ve expanded our alerting capabilities with new alert types and improved functionality:

- Experiment Finished Alert - Get notified when an experiment completes, so you can review results immediately or trigger your CI/CD pipelines.

- Cost Alerts - Set thresholds for cost metrics and receive alerts when spending exceeds your limits

- Latency Alerts - Monitor response times and get notified when latency exceeds configured thresholds

These new alert types help you stay on top of your LLM application’s performance and costs, enabling proactive monitoring and faster response to issues.

👉 Read more: Alerts Guide

🎥 Multimodal Support

We’ve significantly enhanced multimodal capabilities across the platform:

- Video LLM-as-a-Judge - Added support for Video LLM-as-a-Judge, enabling evaluation of video content in your traces

- Video Cost Tracking - Added cost tracking for video models, so you can monitor spending on video processing operations

- Image support in LLM-as-a-Judge - Both Python and TypeScript SDKs now support image processing in LLM-as-a-Judge evaluations, allowing you to evaluate traces containing images

These enhancements make it easier to build and evaluate multimodal applications that work with images and video content.

🔌 Custom AI Providers

We’ve improved support for custom AI providers with enhanced configuration options:

- Multiple Custom Providers - Set up multiple custom AI providers for use in the Playground and online scoring

- Custom Headers Support - Configure custom headers for your custom providers, giving you more flexibility in how you connect to enterprise AI services

🧪 Enhanced Evals & Observability

We’ve added several improvements to make evaluation and observability more powerful:

- Trace and Span Metadata in Datasets - Ability to add trace and span metadata to datasets for advanced agent evaluation, enabling more sophisticated evaluation workflows

- Tokens Breakdown Display - Display tokens breakdown (input/output) in the trace view, giving you detailed visibility into token usage for each span and trace

- Binary (Boolean) Feedback Scores - New support for binary (Boolean) feedback scores, allowing you to capture simple yes/no or pass/fail evaluations

🎨 UX Improvements

We’ve made several user experience enhancements across the platform:

- Improved Pretty Mode - Enhanced pretty mode for traces, threads, and annotation queues, making it easier to read and understand your data

- Date Filtering for Traces, Threads, and Spans - Added date filtering capabilities, allowing you to focus on specific time ranges when analyzing your data

- New Optimization Runs Section - Added a new optimization runs section to the home page, giving you quick access to your optimization results

- Comet Debugger Mode - Added Comet Debugger Mode with app version and connectivity status, helping you troubleshoot issues and understand your application’s connection status. Read more about it here

And much more! 👉 See full commit log on GitHub

Releases: 1.8.98, 1.8.99, 1.8.100, 1.8.101, 1.8.102, 1.9.0, 1.9.1, 1.9.2, 1.9.3, 1.9.4, 1.9.5, 1.9.6, 1.9.7, 1.9.8, 1.9.9, 1.9.10, 1.9.11, 1.9.12, 1.9.13, 1.9.14, 1.9.15, 1.9.16, 1.9.17

Here are the most relevant improvements we’ve made since the last release:

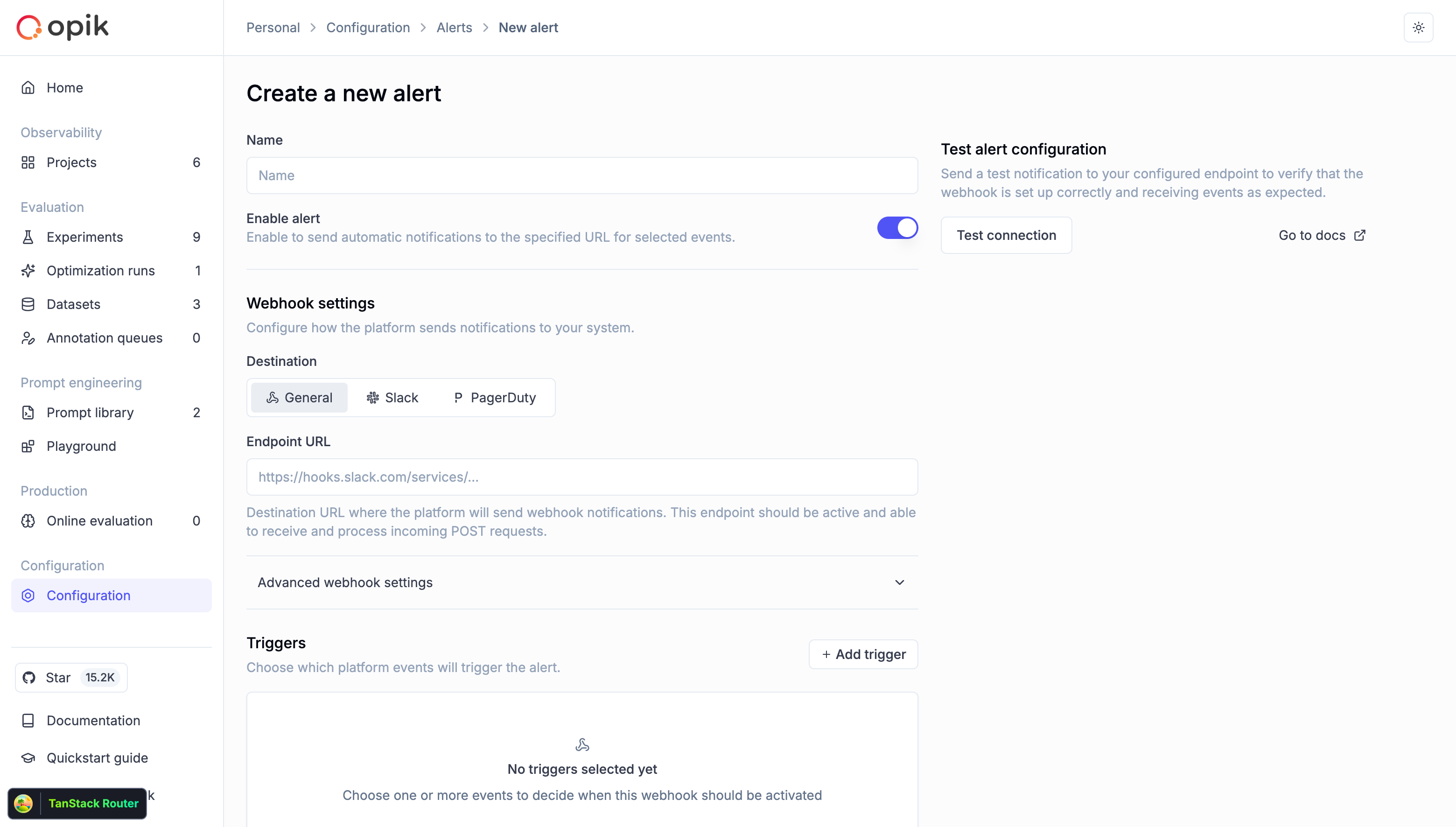

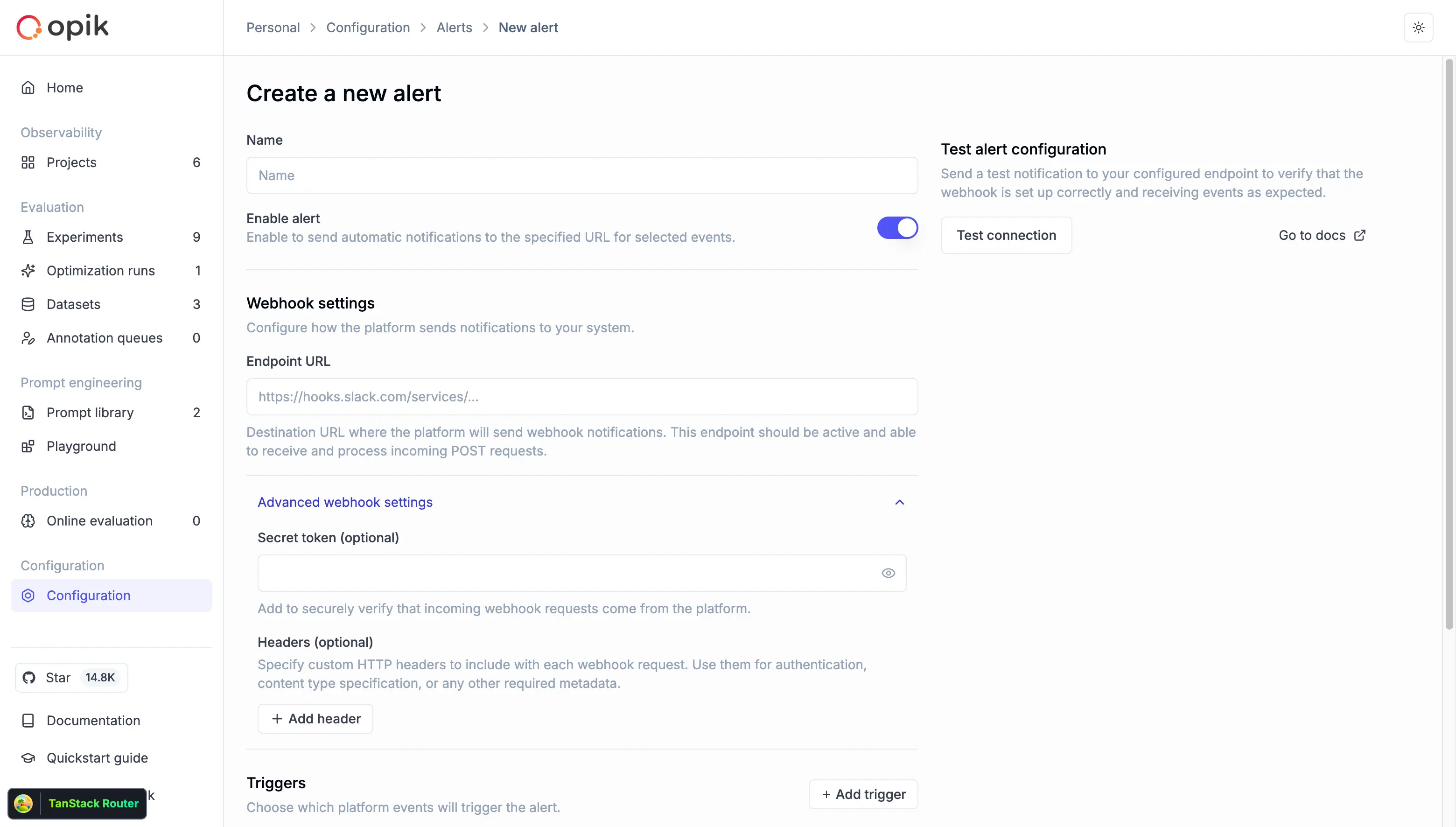

🚨 Native Slack and PagerDuty Alerts

We now offer native Slack and PagerDuty alert integrations, eliminating the need for any middleware configuration. Set up alerts directly in Opik to receive notifications when important events happen in your workspace.

With native integrations, you can:

- Configure Slack channels directly from Opik settings

- Set up PagerDuty incidents without additional webhook setup

- Receive real-time notifications for errors, feedback scores, and critical events

- Streamline your monitoring workflow with built-in integrations

👉 Read the full docs here - Alerts Guide

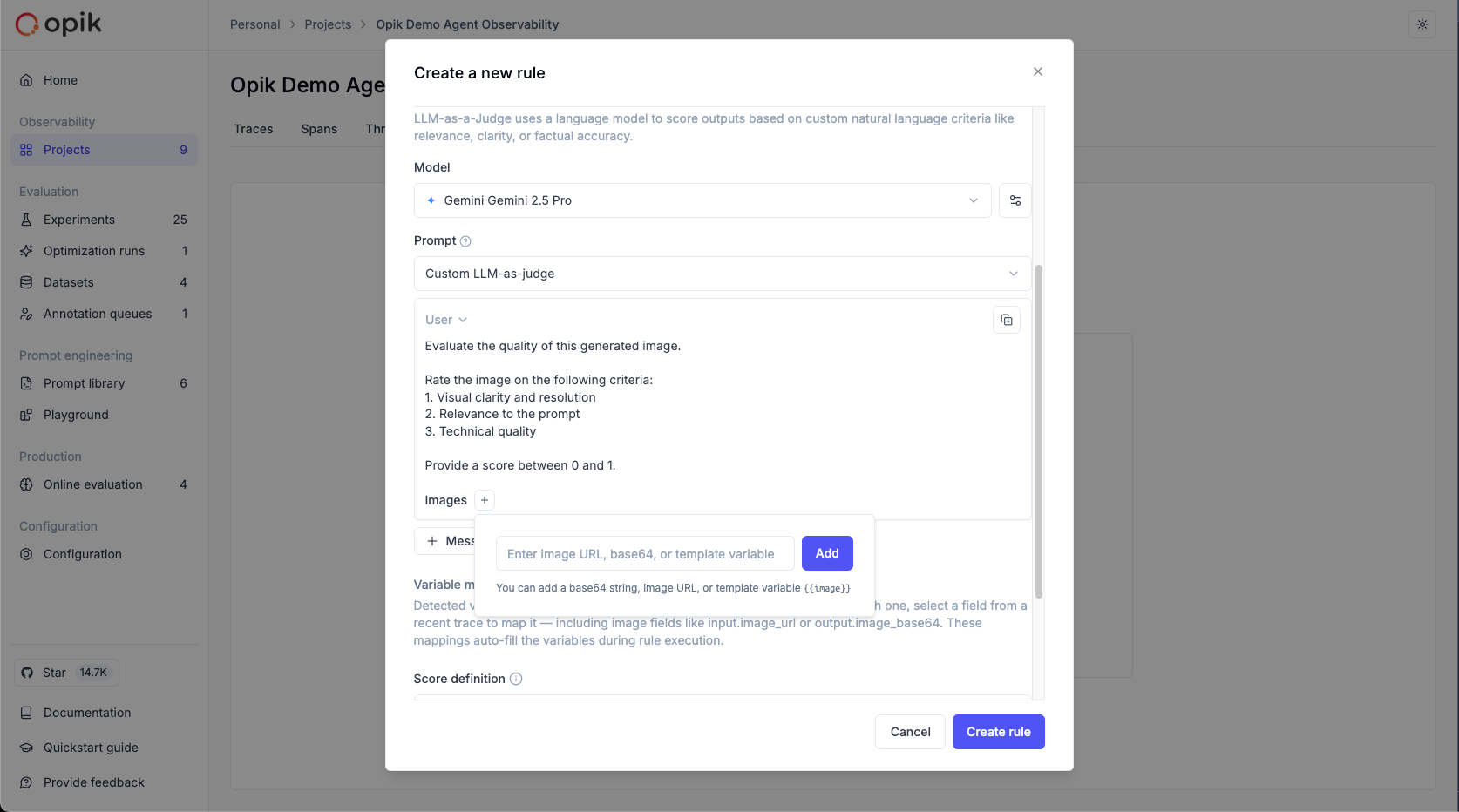

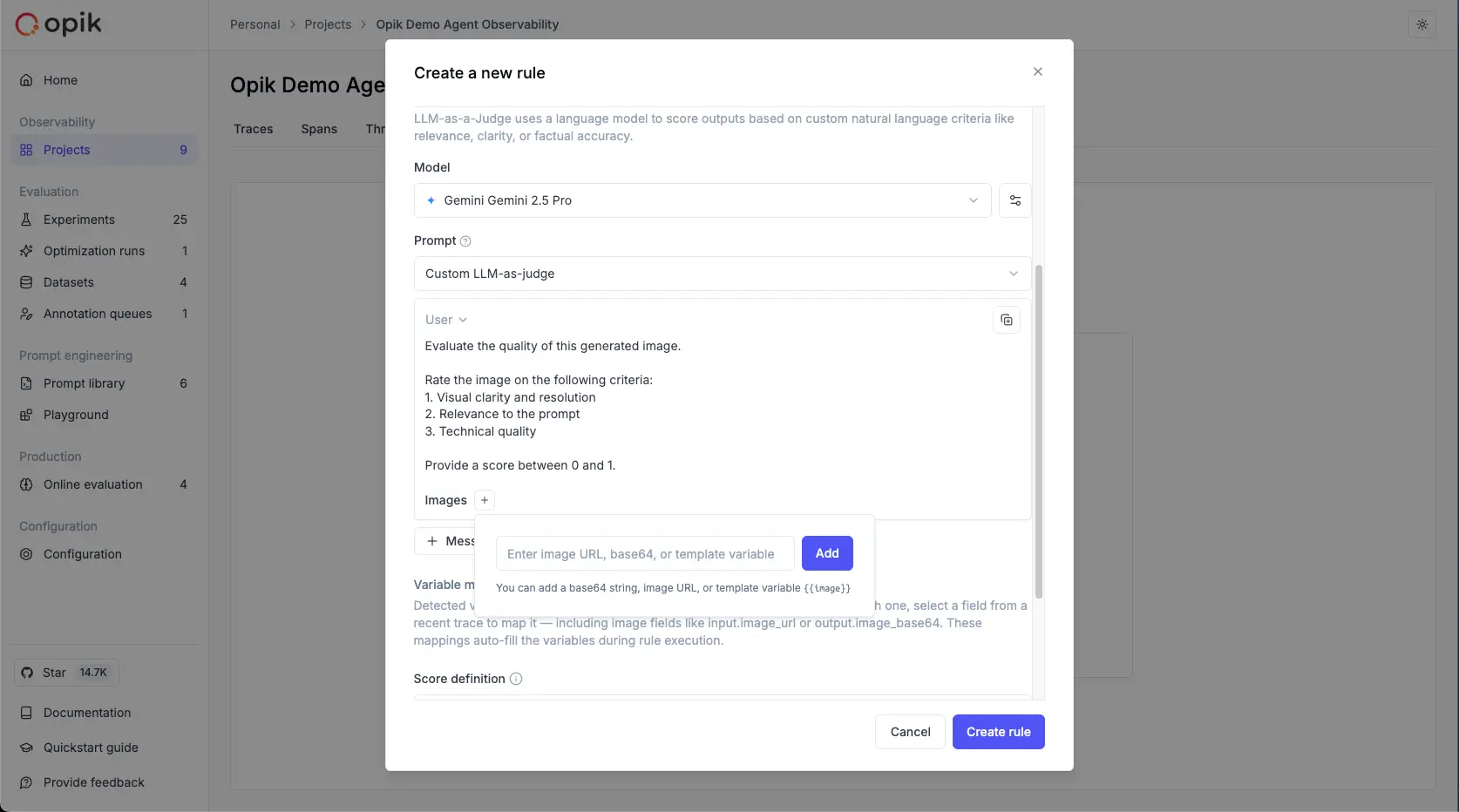

🖼️ Multimodal LLM-as-a-Judge Support for Visual Evaluation

LLM-as-a-Judge metrics can now evaluate traces that contain images when using vision-capable models. This is useful for:

- Evaluating image generation quality - Assess the quality and relevance of generated images

- Analyzing visual content in multimodal applications - Evaluate how well your application handles visual inputs

- Validating image-based responses - Ensure your vision models produce accurate and relevant outputs

To reference image data from traces in your evaluation prompts:

- In the prompt editor, click the “Images +” button to add an image variable

- Map the image variable to the trace field containing image data using the Variable Mapping section

👉 Read more: Evaluating traces with images

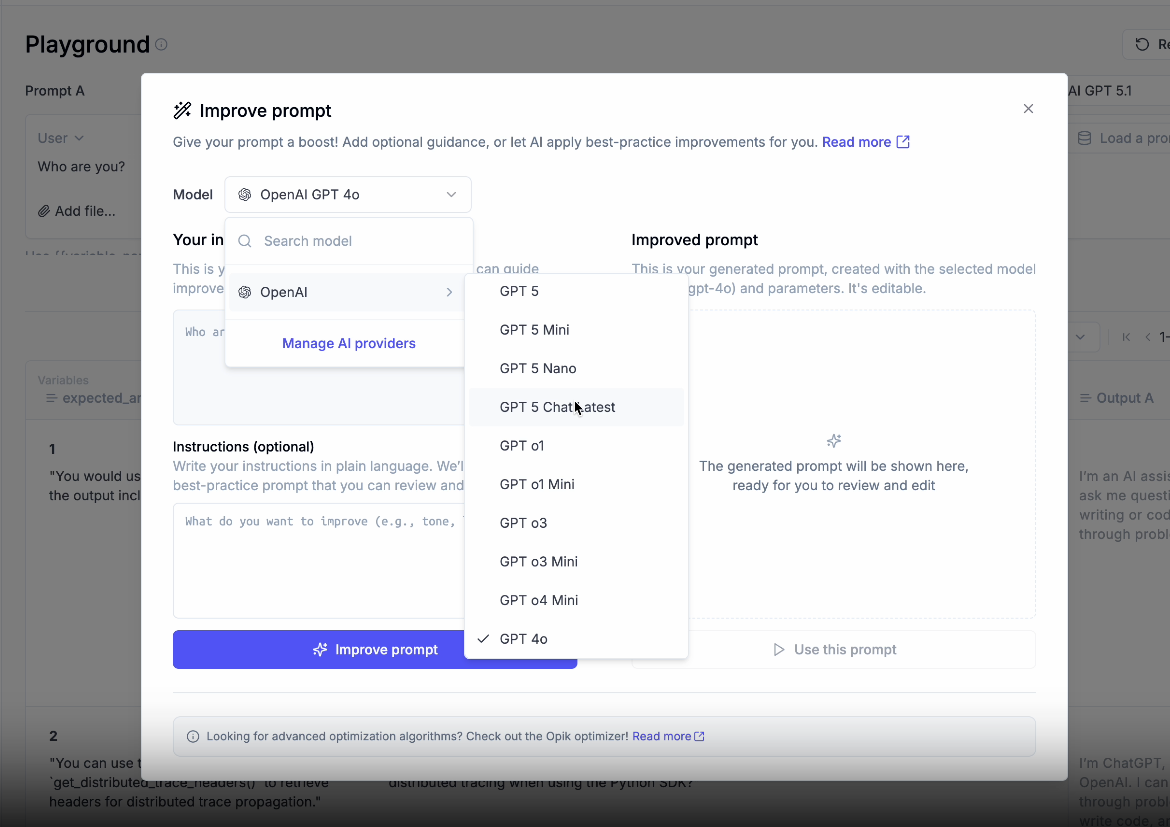

✨ Prompt Generator & Improver

We’ve launched the Prompt Generator and Prompt Improver — two AI-powered tools that help you create and refine prompts faster, directly inside the Playground.

Designed for non-technical users, these features automatically apply best practices from OpenAI, Anthropic, and Google, helping you craft clear, effective, and production-grade prompts without leaving the Playground.

Why it matters

Prompt engineering is still one of the biggest bottlenecks in LLM development. With these tools, teams can:

- Generate high-quality prompts from simple task descriptions

- Improve existing prompts for clarity, specificity, and consistency

- Iterate and test prompts seamlessly in the Playground

How it works

- Prompt Generator → Describe your task in plain language; Opik creates a complete system prompt following proven design principles

- Prompt Improver → Select an existing prompt; Opik enhances it following best practices

👉 Read the full docs: Prompt Generator & Improver

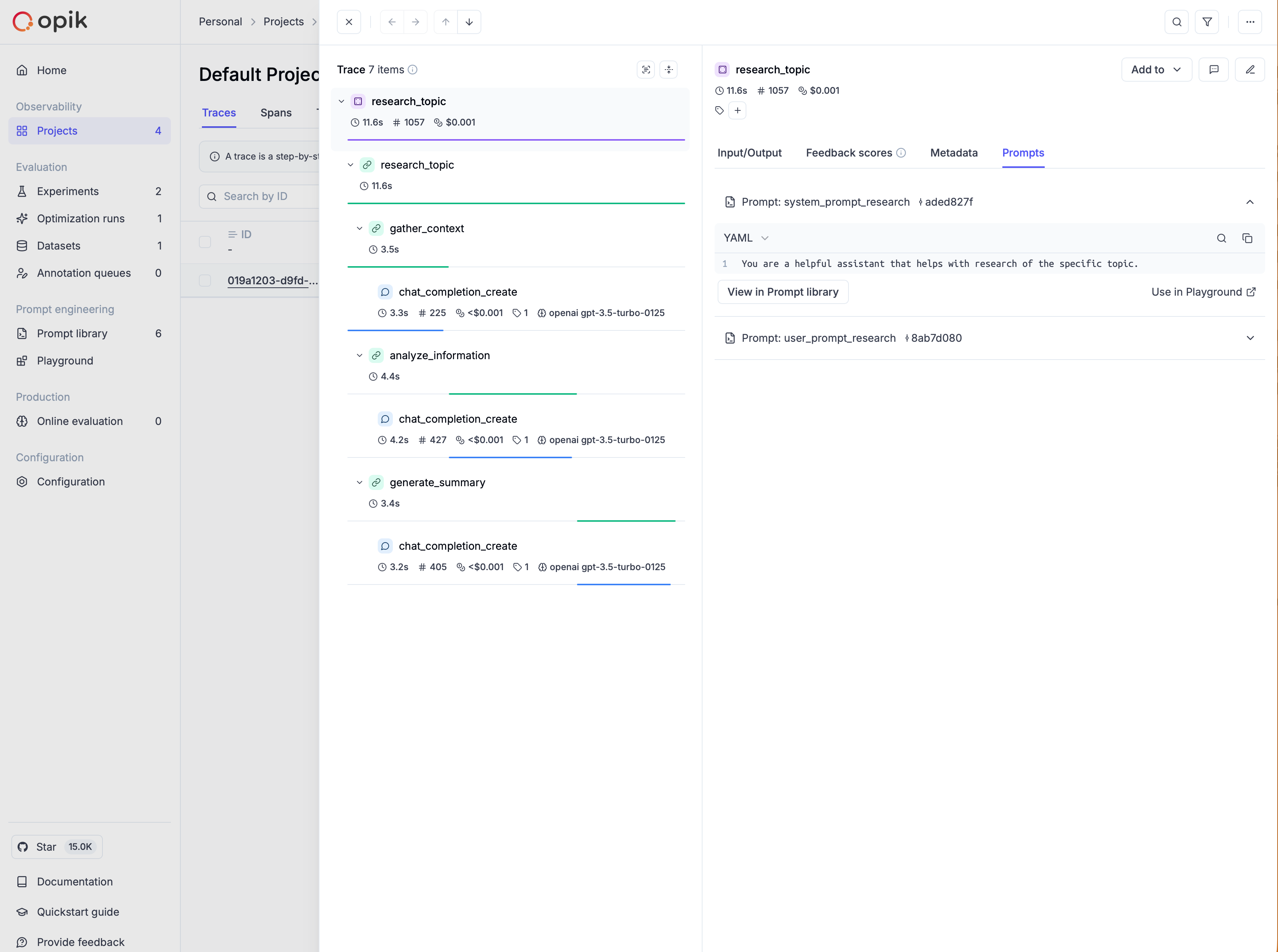

🔗 Advanced Prompt Integration in Spans & Traces

We’ve implemented prompt integration into spans and traces, creating a seamless connection between your Prompt Library, Traces, and the Playground.

You can now associate prompts directly with traces and spans using the opik_context module — so every execution is automatically tied to the exact prompt version used.

Understanding which prompt produced a given trace is key for users building both simple and advanced multi-prompt and multi-agent systems.

With this integration, you can:

- Track which prompt version was used in each function or span

- Audit and debug prompts directly from trace details

- Reproduce or improve prompts instantly in the Playground

- Close the loop between prompt design, observability, and iteration

Once added, your prompts appear in the trace details view — with links back to the Prompt Library and the Playground, so you can iterate in one click.

👉 Read more: Adding prompts to traces and spans

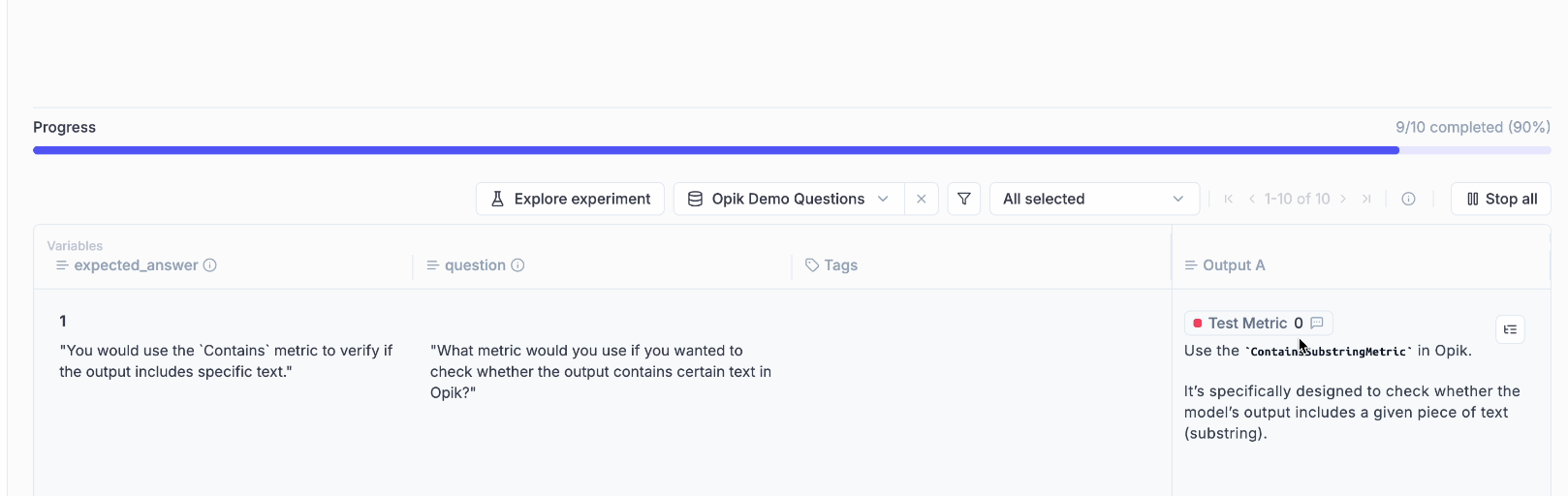

🧪 Better No-Code Experiment Capabilities in the Playground

We’ve introduced a series of improvements directly in the Playground to make experimentation easier and more powerful:

Key enhancements:

- Create or select datasets directly from the Playground

- Create or select online score rules - Choose which rules to apply on each run

- Ability to pass dataset items to online score rules - This enables reference-based experiments, where outputs are automatically compared to expected answers or ground truth, making objective evaluation simple

- One-click navigation to experiment results - From the Playground, users can now:

  - Jump into the Single Experiment View to inspect metrics and examples in detail, or

  - Go to the Compare Experiments View to benchmark multiple runs side-by-side

📊 On-Demand Online Evaluation on Existing Traces and Threads

We’ve added on-demand online evaluation in Opik, letting users run metrics on already logged traces and threads — perfect for evaluating historical data or backfilling new scores.

How it works

Select traces/threads, choose any online score rule (e.g., Moderation, Equals, Contains), and run evaluations directly from the UI — no code needed.

Results appear inline as feedback scores and are fully logged for traceability.

This enables:

- Fast, no-code evaluation of existing data

- Easy retroactive measurement of model and agent performance

- Historical data analysis without re-running traces

👉 Read more: Manual Evaluation

🤖 Agent Evaluation Guides

We’ve added two new comprehensive guides on evaluating agents:

1. Evaluating Agent Trajectories

This guide helps you verify that your agent makes the right tool calls before returning the final answer. It is fundamentally about evaluating and scoring what happens within a trace.

👉 Read the full guide: Evaluating Agent Trajectories

2. Evaluating Multi-Turn Agents

Evaluating chatbots is hard because you need to evaluate an entire conversation rather than a single LLM response. This guide walks you through using the new `opik.simulation.SimulatedUser` to create simulated threads for your agent.

👉 Read the full guide: Evaluating Multi-Turn Agents

These new docs significantly strengthen our agent evaluation feature-set and include diagrams to visualize how each evaluation strategy works.

📦 Import/Export Commands

Added new command-line commands for importing and exporting Opik data. You can now export all traces, spans, datasets, prompts, and evaluation rules from a project to local JSON or CSV files, and import data from local JSON files into an existing project.

Top use cases

- Migrate - Move data between projects or environments

- Backup - Create local backups of your project data

- Version control - Track changes to your prompts and evaluation rules

- Data portability - Easily transfer your Opik workspace data

Read the full docs: Import/Export Commands

And much more! 👉 See full commit log on GitHub

Releases: 1.8.83, 1.8.84, 1.8.85, 1.8.86, 1.8.87, 1.8.88, 1.8.89, 1.8.90, 1.8.91, 1.8.92, 1.8.93, 1.8.94, 1.8.95, 1.8.96, 1.8.97

Here are the most relevant improvements we’ve made since the last release:

🚨 Alerts

We’ve launched Alerts — a powerful way to get automated webhook notifications from your Opik workspace whenever important events happen (errors, feedback scores, prompt changes, and more). Opik now sends an HTTP POST to your endpoint with rich, structured event data you can route anywhere.

Now, you can make Opik a seamless part of your end-to-end workflows! With the new Alerts you can:

- Spot production errors in near-real time

- Track feedback scores to monitor model quality and user satisfaction

- Audit prompt changes across your workspace

- Funnel events into your existing workflows and CI/CD pipelines

And this is just v1.0! We’ll keep adding events, advanced filtering, thresholds, and more fine-grained control in future iterations, always based on community feedback.

Read the full docs here - Alerts Guide
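As a rough illustration of the receiving side, the sketch below accepts an HTTP POST and routes the event by type using only the Python standard library. The `event_type` field, the event names, and the destinations are assumptions made for the example; the actual payload schema is described in the Alerts Guide.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical mapping from event type to destination. The real event names
# and payload fields are defined by Opik, not by this sketch.
ROUTES = {
    "trace_error": "pager",
    "feedback_score": "quality-dashboard",
    "prompt_change": "audit-log",
}


def route_event(payload: dict) -> str:
    """Pick a destination from the (assumed) `event_type` field."""
    return ROUTES.get(payload.get("event_type", ""), "default-inbox")


class AlertHandler(BaseHTTPRequestHandler):
    def do_POST(self) -> None:
        length = int(self.headers.get("Content-Length", 0))
        try:
            payload = json.loads(self.rfile.read(length) or b"{}")
        except json.JSONDecodeError:
            payload = None
        if not isinstance(payload, dict):
            # Reject bodies that are not a JSON object.
            self.send_response(400)
            self.end_headers()
            return
        print(f"routing alert to {route_event(payload)}")
        self.send_response(204)  # acknowledge with an empty response
        self.end_headers()


def serve(port: int = 8080) -> None:
    """Listen for webhook calls until interrupted."""
    HTTPServer(("", port), AlertHandler).serve_forever()
```

Calling `serve()` starts the listener; keeping `route_event` as a plain function makes the routing logic easy to test without opening a socket.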

🖼️ Expanded Multimodal Image Support

We’ve added better image support across our platform!

What’s new?

1. Image Support in LLM as a Judge online Evaluations - LLM as a Judge evaluations now support images alongside text, enabling you to evaluate vision models and multimodal applications. Upload images and get comprehensive feedback on both text and visual content.

2. Enhanced Playground Experience - The playground now supports image inputs, allowing you to test prompts with images before running full evaluations. Perfect for experimenting with vision models and multimodal prompts.

3. Improved Data Display - Base64 image previews in data tables, better image handling in trace views, and enhanced pretty formatting for multimodal content.

Links to official docs: Evaluating traces with images and Using images in the Playground

Opik Optimizer Updates

1. Support Multi-Metric Optimization - Support for optimizing multiple metrics simultaneously with comprehensive frontend and backend changes. Read more

2. HRPO (Hierarchical Reflective Prompt Optimizer) - New optimizer with self-reflective capabilities. Read more about it here

Enhanced Feedback & Annotation experience

1. Improved Annotation Queue Export - Enhanced export functionality for annotation queues: export your annotated data seamlessly for further analysis.

2. Annotation Queue UX Enhancements

- Hotkeys Navigation - Improved keyboard navigation throughout the interface for a fast annotation experience

- Return to Annotation Queue Button - Easy navigation back to annotation queues

- Resume Functionality - Continue annotation work where you left off

- Queue Creation from Traces - Create annotation queues directly from trace tables

3. Inline Feedback Editing - Quickly edit user feedback directly in data tables with our new inline editing feature. Hover over feedback cells to reveal edit options, making annotation workflows faster and more intuitive.

Read more about our Annotation Queues

User Experience Enhancements

1. Dark Mode Refinements - Improved dark mode styling across UI components for better visual consistency and user experience.

2. Enhanced Prompt Readability - Better formatting and display of long prompts in the interface, making them easier to read and understand.

3. Improved Online Evaluation Page - Added search, filtering, and sorting capabilities to the online evaluation page for better data management.

4. Better token and cost control

- Thread Cost Display - Show cost information in thread sidebar headers

- Sum Statistics - Display sum statistics for cost and token columns in the traces table.

5. Filter-Aware Metric Aggregation - Better experiment item filtering in the experiments details tables for better data control.

6. Pretty Mode Enhancements - Improved the Pretty mode for Input/Output display with better formatting and readability across the product.

TypeScript SDK Updates

- Opik Configure Tool - New opik-ts configure tool with a guided developer experience and local flag support

- Prompt Management - Comprehensive prompt management implementation

- LangChain Integration - Aligned LangChain integration with Python architecture

Python SDK Improvements

- Context Managers - New context managers for span and trace creation

- Bedrock Integration - Enhanced Bedrock integration with invoke_model support

- Trace Updates - New update_trace() method for easier trace modifications

- Parallel Agent Support - Support for logging parallel agents in ADK integration

- Enhanced feedback score handling with better category support

Integration updates

1. OpenTelemetry Improvements

- Thread ID Support - Added support for thread_id in OpenTelemetry endpoint

- System Information in Telemetry - Enhanced telemetry with system information

2. Model Support Updates - Added support for Claude Haiku 4.5 and updated model pricing information across the platform.

And much more! 👉 See full commit log on GitHub

Releases: 1.8.63, 1.8.64, 1.8.65, 1.8.66, 1.8.67, 1.8.68, 1.8.69, 1.8.70, 1.8.71, 1.8.72, 1.8.73, 1.8.74, 1.8.75, 1.8.76, 1.8.77, 1.8.78, 1.8.79, 1.8.80, 1.8.81, 1.8.82, 1.8.83

Here are the most relevant improvements we’ve made since the last release:

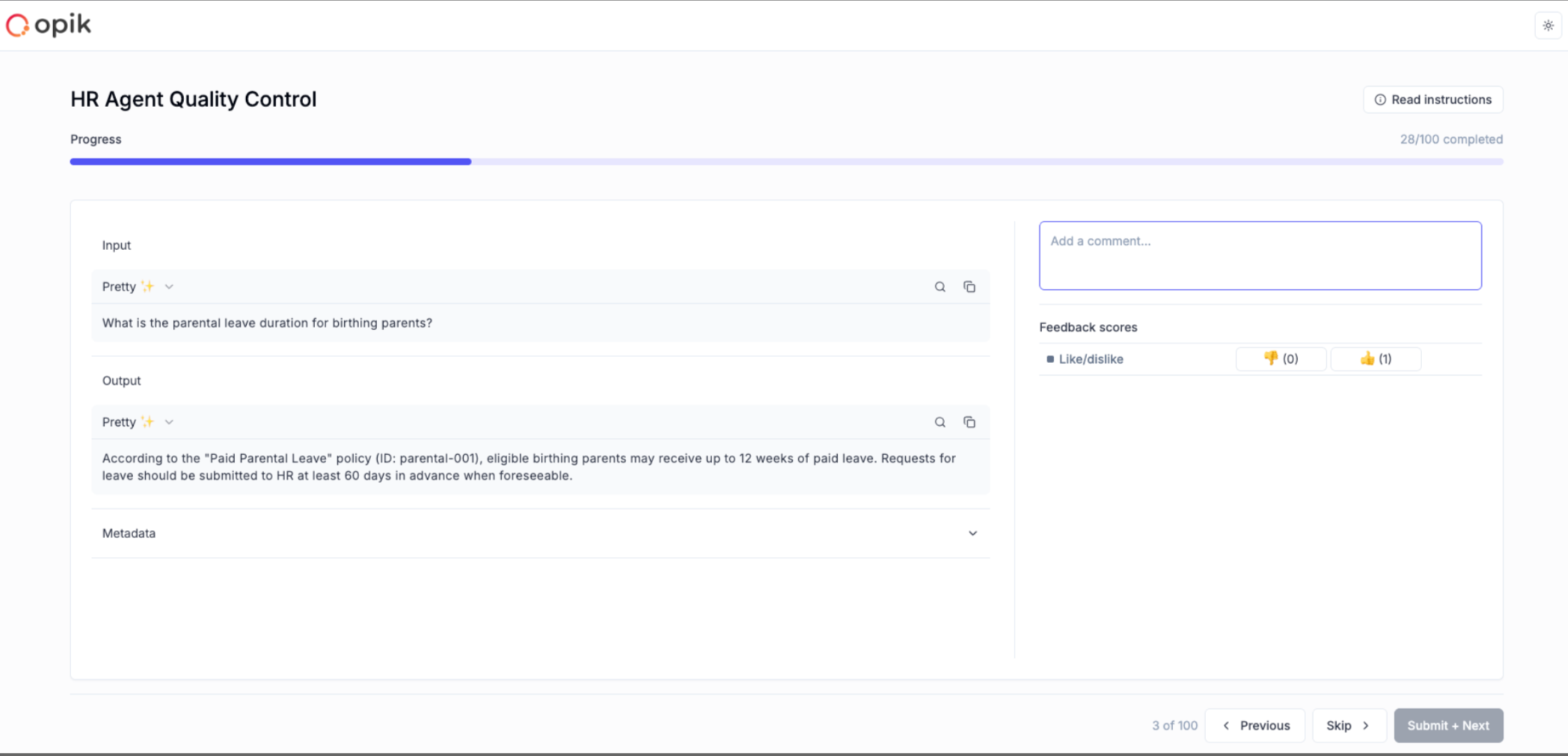

📝 Multi-Value Feedback Scores & Annotation Queues

We’re excited to announce major improvements to our evaluation and annotation capabilities!

What’s new?

1. Multi-Value Feedback Scores - Multiple users can now independently score the same trace or thread. No more overwriting each other’s input: every reviewer’s perspective is preserved and visible in the product. This enables richer, more reliable consensus-building during evaluation.

2. Annotation Queues - Create queues of traces or threads that need expert review. Share them with SMEs through simple links. Organize work systematically, track progress, and collect both structured and unstructured feedback at scale.

3. Simplified Annotation Experience - A clean, focused UI designed for non-technical reviewers. Support for clear instructions, predefined feedback metrics, and progress indicators. Lightweight and distraction-free, so SMEs can concentrate on providing high-quality feedback.

Full Documentation: Annotation Queues

🚀 Opik Optimizer - GEPA Algorithm & MCP Tool Optimization

What’s new?

1. GEPA (Genetic-Pareto) Support - GEPA is a new prompt optimization algorithm from Stanford. It complements our existing optimizers and gives users more options.

2. MCP Tool Calling Optimization - You can now tune MCP servers (external tools used by LLMs). Our solution uses our existing algorithm (MetaPrompter), which relies on LLMs to tune how LLMs interact with an MCP tool. The final output is a new tool signature which you can commit back to your code.

Full Documentation: Tool Optimization | GEPA Optimizer

🔍 Dataset & Search Enhancements

- Added dataset search and dataset items download functionality

🐍 Python SDK Improvements

- Implement granular support for choosing dataset items in experiments

- Better project name setting and onboarding

- Implement calculation of mean/min/max/std for each metric in experiments

- Update CrewAI to support CrewAI flows
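For readers who want to see what the per-metric statistics listed above amount to, here is a minimal standalone sketch using the standard library. It is not the SDK’s implementation: `summarize` is a hypothetical helper, and it assumes the population standard deviation, which may differ from what Opik reports.

```python
from statistics import mean, pstdev


def summarize(scores_by_metric: dict[str, list[float]]) -> dict[str, dict[str, float]]:
    """Compute mean/min/max/std for each metric's list of scores."""
    summary: dict[str, dict[str, float]] = {}
    for metric, scores in scores_by_metric.items():
        if not scores:
            continue  # no scores recorded for this metric
        summary[metric] = {
            "mean": mean(scores),
            "min": min(scores),
            "max": max(scores),
            # Assumption: population standard deviation.
            "std": pstdev(scores),
        }
    return summary


stats = summarize({"accuracy": [1.0, 0.0, 1.0, 0.0], "unused": []})
```

Here `stats["accuracy"]` holds a mean of 0.5, a minimum of 0.0, a maximum of 1.0, and a standard deviation of 0.5, while the empty `unused` metric is left out.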

🎨 UX Enhancements

- Add clickable links in trace metadata

- Add description field to feedback definitions

And much more! 👉 See full commit log on GitHub

Releases: 1.8.43, 1.8.44, 1.8.45, 1.8.46, 1.8.47, 1.8.48, 1.8.49, 1.8.50, 1.8.51, 1.8.52, 1.8.53, 1.8.54, 1.8.55, 1.8.56, 1.8.57, 1.8.58, 1.8.59, 1.8.60, 1.8.61, 1.8.62

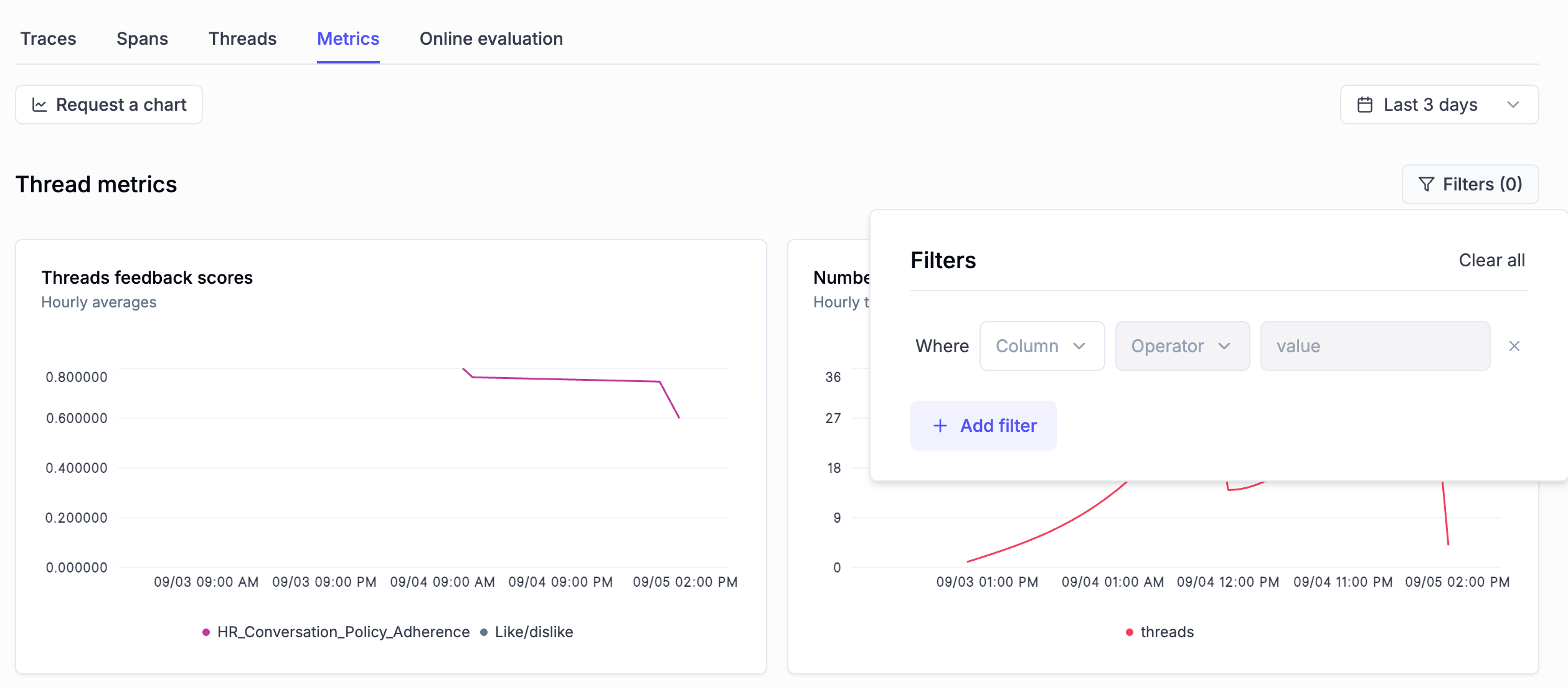

Here are the most relevant improvements we’ve made since the last release:

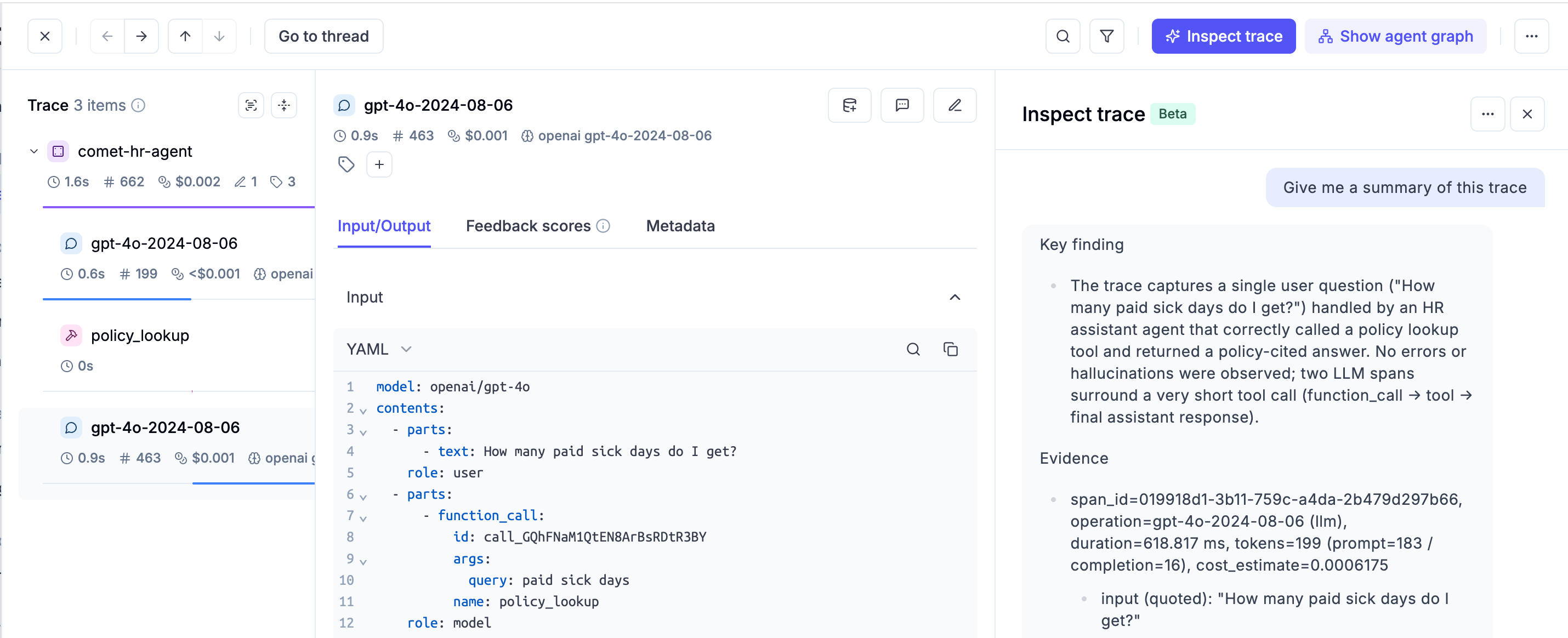

🔍 Opik Trace Analyzer Beta is Live!

We’re excited to announce the launch of Opik Trace Analyzer on Opik Cloud!

What this means: faster debugging & analysis!

Our users can now easily understand, analyze, and debug their development and production traces.

Want to give it a try? All you need to do is go to one of your traces and click on “Inspect trace” to start getting valuable insights.

✨ Features and Improvements

- We’ve finally added dark mode support! This feature has been requested many times by our community members. You can now switch your theme in your account settings.

- You can now filter the widgets in the metrics tab by trace and thread attributes.

- Annotating tons of threads? We’ve added the ability to export feedback score comments for threads to CSV for easier analysis in external tools.

- We have also improved the discoverability of the experiment comparison feature.

- Added new filter operators to the Experiments table

- Adding assets as part of your experiment’s metadata? We now display clickable links in the experiment config tab for easier navigation.

📚 Documentation

- We’ve released Opik University! This is a new section of the docs full of video guides explaining the product.

🔌 SDK & Integration Improvements

- Enhanced LangChain integration with comprehensive tests and build fixes

- Implemented new search_prompts method in the Python SDK

- Added documentation for models, providers, and frameworks supported for cost tracking

- Enhanced Google ADK integration to log error information to corresponding spans and traces

And much more! 👉 See full commit log on GitHub

Releases: 1.8.34, 1.8.35, 1.8.36, 1.8.37, 1.8.38, 1.8.39, 1.8.40, 1.8.41, 1.8.42