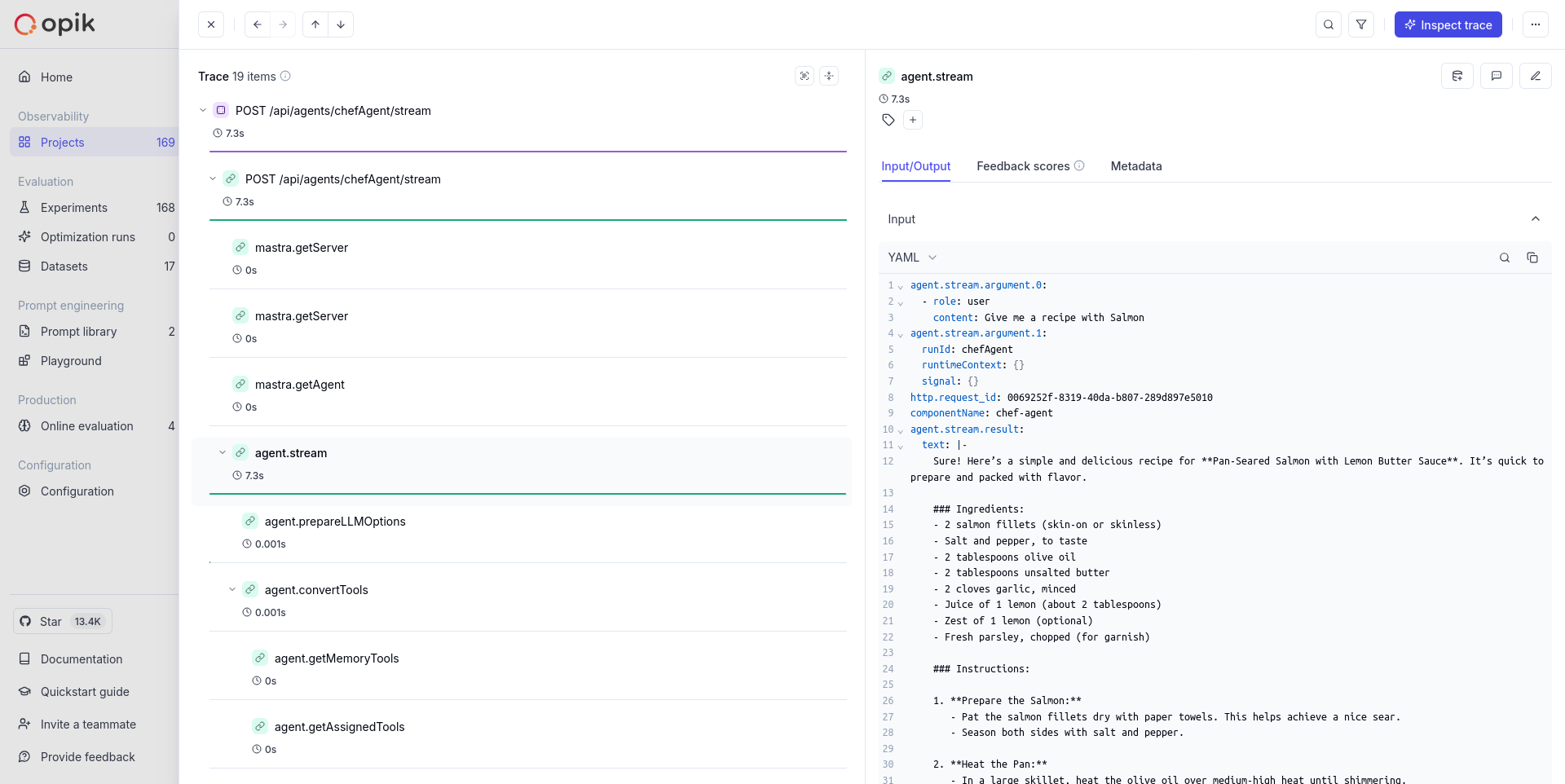

Mastra Integration via OpenTelemetry

Mastra is a TypeScript agent framework that provides the core primitives for building AI applications. It lets developers create AI agents with memory and tool calling, implement deterministic LLM workflows, and use retrieval-augmented generation (RAG) for knowledge integration.

For this integration, the key feature is Mastra’s built-in telemetry support. It automatically captures agent interactions, LLM calls, and workflow executions and can export them over OpenTelemetry, which makes AI applications easier to monitor and debug in Opik.

Getting started

Create a Mastra project

If you don’t have a Mastra project yet, create one with the Mastra CLI, for example by running `npx create-mastra@latest`.

Install required packages

Install the dependencies for Mastra and the AI SDK. For example, if you use OpenAI models, run `npm install @mastra/core @ai-sdk/openai`.

Add environment variables

Create or update your `.env` file with the OpenTelemetry exporter settings for your Opik deployment. The values differ depending on whether you use Opik Cloud, an enterprise deployment, or a self-hosted instance.
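The exact values depend on your deployment. The sketch below makes several assumptions that are not stated above:

- It uses the standard OpenTelemetry variables `OTEL_EXPORTER_OTLP_ENDPOINT` and `OTEL_EXPORTER_OTLP_HEADERS`.
- It sends the Opik API key as an `Authorization` header alongside `Comet-Workspace`.
- The endpoint URLs in the comments need to be checked against the Opik documentation for your deployment type.

The hypothetical `parseOtlpHeaders` and `missingOpikHeaders` helpers are not part of Mastra or Opik. They read the comma-separated `key=value` format of `OTEL_EXPORTER_OTLP_HEADERS` and report missing keys, which can catch a malformed `.env` before you start the server.

```typescript
// Example .env contents (placeholders; endpoint URLs are assumptions, verify in the Opik docs):
//   Opik Cloud:  OTEL_EXPORTER_OTLP_ENDPOINT=https://www.comet.com/opik/api/v1/private/otel
//   Enterprise:  OTEL_EXPORTER_OTLP_ENDPOINT=https://<comet-deployment-url>/opik/api/v1/private/otel
//   Self-hosted: OTEL_EXPORTER_OTLP_ENDPOINT=http://localhost:5173/api/v1/private/otel
//   OTEL_EXPORTER_OTLP_HEADERS='Authorization=<your-api-key>,Comet-Workspace=<your-workspace-name>'
// A self-hosted instance may not need the headers at all.

// Hypothetical helper: splits a header string of comma-separated
// key=value pairs into a map of header names to values.
function parseOtlpHeaders(raw: string): Record<string, string> {
  const headers: Record<string, string> = {};
  for (const entry of raw.split(",")) {
    const trimmed = entry.trim();
    if (trimmed === "") continue; // tolerate stray or trailing commas
    const eq = trimmed.indexOf("=");
    if (eq <= 0) {
      throw new Error(`Malformed header entry: "${trimmed}"`);
    }
    // Split on the first "=" only, so values may themselves contain "=".
    headers[trimmed.slice(0, eq).trim()] = trimmed.slice(eq + 1).trim();
  }
  return headers;
}

// Hypothetical helper: returns the expected header keys that are absent.
// The default list assumes an Opik Cloud or enterprise deployment.
function missingOpikHeaders(
  raw: string,
  required: readonly string[] = ["Authorization", "Comet-Workspace"],
): string[] {
  const headers = parseOtlpHeaders(raw);
  return required.filter(
    (key) => !Object.prototype.hasOwnProperty.call(headers, key),
  );
}
```

For instance, `missingOpikHeaders("Comet-Workspace=my-workspace")` returns `["Authorization"]`, a sign that the API key was left out.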

To log traces to a specific project, add the `projectName` parameter to the `OTEL_EXPORTER_OTLP_HEADERS` environment variable.

You can also change the `Comet-Workspace` parameter to log the data to a different workspace.
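The header value can be assembled from its parts. This hypothetical `buildOtlpHeaders` helper is not part of Mastra or Opik. It assumes the API key travels as an `Authorization` header (Opik Cloud or enterprise) and appends `projectName` only when one is given. Its output is what you would place after `OTEL_EXPORTER_OTLP_HEADERS=` in `.env`.

```typescript
// Options for the hypothetical helper below.
interface OpikHeaderOptions {
  apiKey: string;       // assumed to be sent as the Authorization header
  workspace: string;    // Comet-Workspace: the workspace that receives the data
  projectName?: string; // optional: the Opik project to log traces to
}

// Joins the parameters into the comma-separated key=value list
// expected in OTEL_EXPORTER_OTLP_HEADERS.
function buildOtlpHeaders(opts: OpikHeaderOptions): string {
  const pairs: Array<[string, string]> = [
    ["Authorization", opts.apiKey],
    ["Comet-Workspace", opts.workspace],
  ];
  if (opts.projectName) {
    pairs.push(["projectName", opts.projectName]);
  }
  for (const [key, value] of pairs) {
    // A comma inside a value would be read as a separator, so reject it.
    if (value.includes(",")) {
      throw new Error(`${key} must not contain a comma`);
    }
  }
  return pairs.map(([key, value]) => `${key}=${value}`).join(",");
}
```

Calling `buildOtlpHeaders({ apiKey: "<your-api-key>", workspace: "<your-workspace-name>", projectName: "<your-project-name>" })` returns `Authorization=<your-api-key>,Comet-Workspace=<your-workspace-name>,projectName=<your-project-name>`.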

Set up an agent

Create an agent in your project and register it with your Mastra instance, for example in `src/mastra/index.ts`.
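A minimal sketch of `src/mastra/index.ts` might look like the following. It rests on these assumptions:

- It uses the OpenAI provider from the AI SDK (`@ai-sdk/openai`), which reads `OPENAI_API_KEY` from the environment, so that key would also go in `.env`.
- It targets a Mastra version whose `Mastra` constructor accepts a `telemetry` option with an OTLP export. Newer Mastra releases may configure tracing differently, so check the Mastra documentation for your version.

The agent name, instructions, model, and service name are placeholders.

```typescript
import { Mastra } from "@mastra/core/mastra";
import { Agent } from "@mastra/core/agent";
import { openai } from "@ai-sdk/openai";

// Placeholder agent: name, instructions, and model are illustrative only.
export const assistantAgent = new Agent({
  name: "assistant",
  instructions: "You are a helpful assistant. Answer concisely.",
  model: openai("gpt-4o-mini"), // assumes OPENAI_API_KEY is set in .env
});

// Register the agent and enable Mastra's built-in telemetry.
export const mastra = new Mastra({
  agents: { assistantAgent },
  telemetry: {
    serviceName: "mastra-opik-demo", // placeholder service name
    enabled: true,
    export: {
      // Export spans over OTLP. The endpoint and headers are assumed to be
      // read from OTEL_EXPORTER_OTLP_ENDPOINT and OTEL_EXPORTER_OTLP_HEADERS.
      type: "otlp",
    },
  },
});
```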

Run Mastra development server

Start the Mastra development server, for example with `npm run dev` or `npx mastra dev`.

Open the developer playground at the URL printed in your terminal and start chatting with your agent. Each interaction should then appear as a trace in Opik.

What gets traced

With this setup, your Mastra application will automatically trace:

- Agent interactions: Complete conversation flows with agents

- LLM calls: Model requests, responses, and token usage

- Tool executions: Function calls and their results

- Workflow steps: Individual steps in complex workflows

- Memory operations: Context and memory updates

Further improvements

If you have any questions or suggestions for improving the Mastra integration, please open an issue on our GitHub repository.