Cohere

Cohere provides state-of-the-art large language models that excel at text generation, summarization, classification, and retrieval-augmented generation.

This guide explains how to integrate Opik with Cohere using Cohere's OpenAI SDK Compatibility API. Wrap an OpenAI client that points at Cohere's compatibility endpoint with Opik's track_openai method, and you can track and evaluate your Cohere API calls within your Opik projects. Opik automatically logs the input prompt, model used, token usage, and generated response.

Getting Started

Configuring Opik

To start tracking your Cohere LLM calls, you'll need both the opik and openai packages installed. You can install them with pip by running pip install --upgrade opik openai.

In addition, you can configure Opik by running the opik configure command (or by calling opik.configure() from Python). It will prompt you for your local server address or, if you are using the Cloud platform, your API key.

Configuring Cohere

You'll need to make your Cohere API key available as an environment variable.
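One way to do this from Python is sketched below. It assumes the variable is named COHERE_API_KEY, which is a convention rather than a requirement, because the key is passed to the client explicitly. The helper ensure_cohere_api_key is hypothetical. It prompts for the key only when the variable is unset, and getpass keeps the key from being echoed to the terminal.

```python
import getpass
import os


def ensure_cohere_api_key() -> str:
    """Return COHERE_API_KEY, prompting for it (without echo) if it is unset.

    Hypothetical helper; the variable name COHERE_API_KEY is an assumption.
    """
    if not os.environ.get("COHERE_API_KEY"):
        os.environ["COHERE_API_KEY"] = getpass.getpass("Enter your Cohere API key: ")
    return os.environ["COHERE_API_KEY"]
```

In a shell you can get the same effect with export COHERE_API_KEY=<your-api-key> before starting Python.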

Tracking Cohere API calls

Use the OpenAI Compatibility API by setting the client's base URL to Cohere's compatibility endpoint when you initialize it, then wrap the client with track_openai.

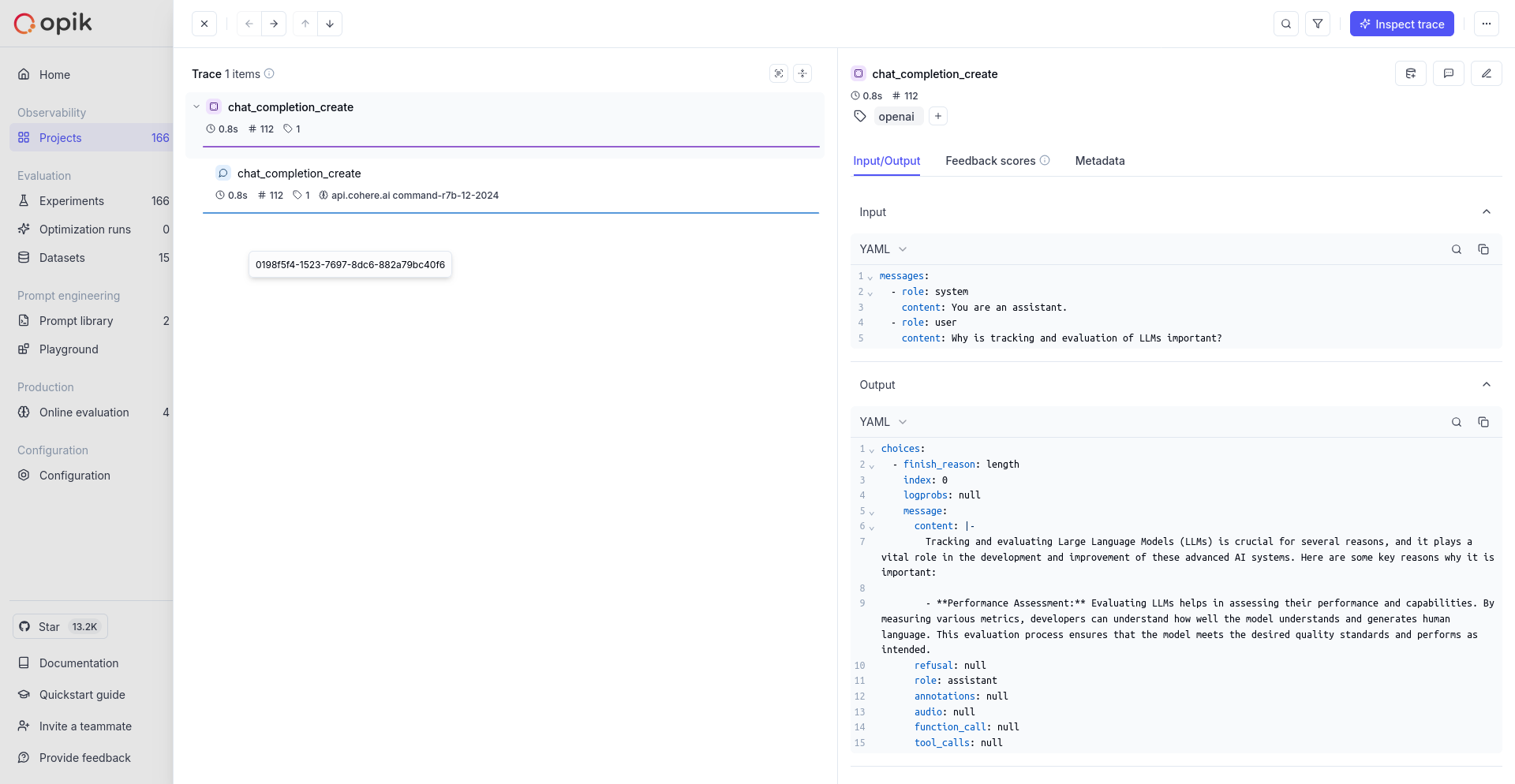

The track_openai wrapper automatically tracks and logs each API call, including the input prompt, model used, and generated response. You can view these logs in your Opik project dashboard.

Using Cohere within a tracked function

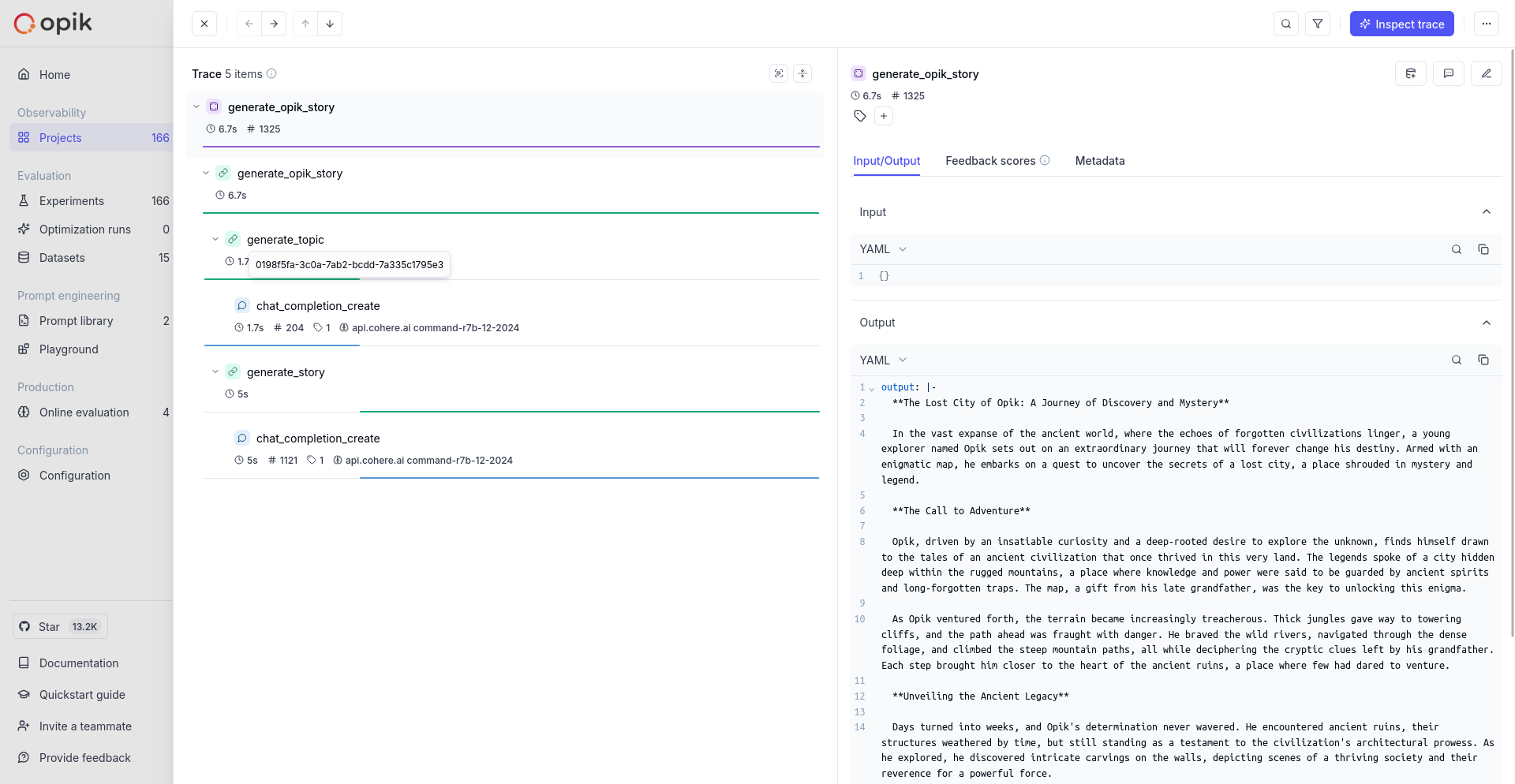

If you are calling Cohere from inside a function decorated with @track, you can use the tracked client as normal.

Supported Cohere models

The track_openai wrapper with Cohere’s compatibility API supports the following Cohere models:

- command-r7b-12-2024: Command R 7B model
- command-r-plus: Command R Plus model
- command-r: Command R model
- command-light: Command Light model
- command: Command model

Supported OpenAI methods

The track_openai wrapper supports the following OpenAI methods when used with Cohere:

- client.chat.completions.create(), including support for stream=True mode
- client.beta.chat.completions.parse()
- client.beta.chat.completions.stream()
- client.responses.create()
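Since stream=True is supported, here is a sketch of consuming a streamed completion. The function stream_reply is hypothetical. It accepts any OpenAI-style client, such as track_openai(OpenAI(api_key=..., base_url="https://api.cohere.ai/compatibility/v1")), where that endpoint is an assumption to check against Cohere's docs. It prints text deltas as they arrive and returns the assembled reply. Chunks with no choices or an empty delta are skipped.

```python
def stream_reply(client, prompt: str, model: str = "command-r7b-12-2024") -> str:
    """Stream a chat completion, echoing deltas, and return the full text (hypothetical helper)."""
    stream = client.chat.completions.create(
        model=model,
        messages=[{"role": "user", "content": prompt}],
        stream=True,
    )
    parts = []
    for chunk in stream:
        # Some chunks may carry no choices, or a delta without content.
        if not chunk.choices:
            continue
        delta = chunk.choices[0].delta.content
        if delta:
            print(delta, end="", flush=True)
            parts.append(delta)
    print()
    return "".join(parts)
```

Consuming the whole stream before returning keeps the caller's code simple. If you need tokens incrementally elsewhere, yield each delta instead of collecting it.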

If you would like to track another OpenAI method, please let us know by opening an issue on GitHub.