BeeAI Integration via OpenTelemetry

BeeAI is an agent framework that aims to make AI agents simple to build without sacrificing performance. It provides a clean API with built-in support for tool usage and conversation management, along with an extensible architecture.

BeeAI’s primary advantage is its lightweight design, which makes it easy to create and deploy AI agents without unnecessary complexity while retaining the capabilities needed for production use.

Getting started

To use the BeeAI integration with Opik, you will need to have BeeAI and the required OpenTelemetry packages installed:
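One way to confirm that the packages are present is to check that their modules can be found. The sketch below is only illustrative: the pip package names and module paths in ASSUMED_MODULES (beeai-framework, openinference-instrumentation-beeai, and the OpenTelemetry SDK and OTLP HTTP exporter) are assumptions that are not stated on this page, so adjust the mapping to match the packages you actually install.

```python
import importlib.util

# Assumed mapping of importable module -> pip package name.
# These names are assumptions for illustration; verify them for your setup.
ASSUMED_MODULES = {
    "beeai_framework": "beeai-framework",
    "openinference.instrumentation.beeai": "openinference-instrumentation-beeai",
    "opentelemetry.sdk": "opentelemetry-sdk",
    "opentelemetry.exporter.otlp.proto.http": "opentelemetry-exporter-otlp-proto-http",
}


def missing_packages(modules=ASSUMED_MODULES):
    """Return the pip names of packages whose modules cannot be found."""
    missing = []
    for module_name, pip_name in modules.items():
        try:
            found = importlib.util.find_spec(module_name) is not None
        except (ImportError, ValueError):
            # Raised when a parent package of a dotted name is absent.
            found = False
        if not found:
            missing.append(pip_name)
    return missing


if __name__ == "__main__":
    not_installed = missing_packages()
    if not_installed:
        print("Install with: pip install " + " ".join(not_installed))
    else:
        print("All assumed packages are importable.")
```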

Environment configuration

Configure your environment variables based on your Opik deployment: Opik Cloud, an enterprise deployment, or a self-hosted instance.

If you are using Opik Cloud, you will need to set the following environment variables:
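As a minimal sketch, the variables can also be set from Python before any OpenTelemetry exporter is created. The endpoint URL below is an assumption about the Opik Cloud OTLP endpoint, and configure_opik_cloud is a hypothetical helper name; the Authorization key in the header string is likewise assumed, while Comet-Workspace comes from this page. Check the values against your Opik account before relying on them.

```python
import os

# Assumed Opik Cloud OTLP endpoint; confirm it in your Opik settings.
OPIK_CLOUD_OTLP_ENDPOINT = "https://www.comet.com/opik/api/v1/private/otel"


def configure_opik_cloud(api_key: str, workspace: str = "default") -> None:
    """Set the standard OTLP exporter variables for the current process.

    Hypothetical helper: the header keys follow the comma-separated
    key=value format that OTEL_EXPORTER_OTLP_HEADERS expects.
    """
    os.environ["OTEL_EXPORTER_OTLP_ENDPOINT"] = OPIK_CLOUD_OTLP_ENDPOINT
    os.environ["OTEL_EXPORTER_OTLP_HEADERS"] = (
        f"Authorization={api_key},Comet-Workspace={workspace}"
    )


if __name__ == "__main__":
    # Replace the placeholder with a real API key.
    configure_opik_cloud("<your-api-key>")
    print(os.environ["OTEL_EXPORTER_OTLP_HEADERS"])
```

Setting the variables in the shell before starting the process works equally well; the Python form is mainly convenient in notebooks and scripts.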

To log the traces to a specific project, you can add the projectName parameter to the OTEL_EXPORTER_OTLP_HEADERS environment variable.

You can also update the Comet-Workspace parameter to a different value if you would like to log the data to a different workspace.
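The header string with these optional parameters can be sketched as a small builder. build_otlp_headers is a hypothetical helper, and the Authorization key is an assumption; projectName and Comet-Workspace are the parameters named above.

```python
from typing import Optional


def build_otlp_headers(
    api_key: str,
    workspace: str = "default",
    project_name: Optional[str] = None,
) -> str:
    """Build a comma-separated key=value string for OTEL_EXPORTER_OTLP_HEADERS."""
    pairs = [("Authorization", api_key), ("Comet-Workspace", workspace)]
    if project_name:
        # Only add projectName when a specific project is requested.
        pairs.append(("projectName", project_name))
    return ",".join(f"{key}={value}" for key, value in pairs)


if __name__ == "__main__":
    print(build_otlp_headers("<your-api-key>", "my-workspace", "beeai-demo"))
```

The resulting string can be assigned to OTEL_EXPORTER_OTLP_HEADERS, either in the shell or through os.environ.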

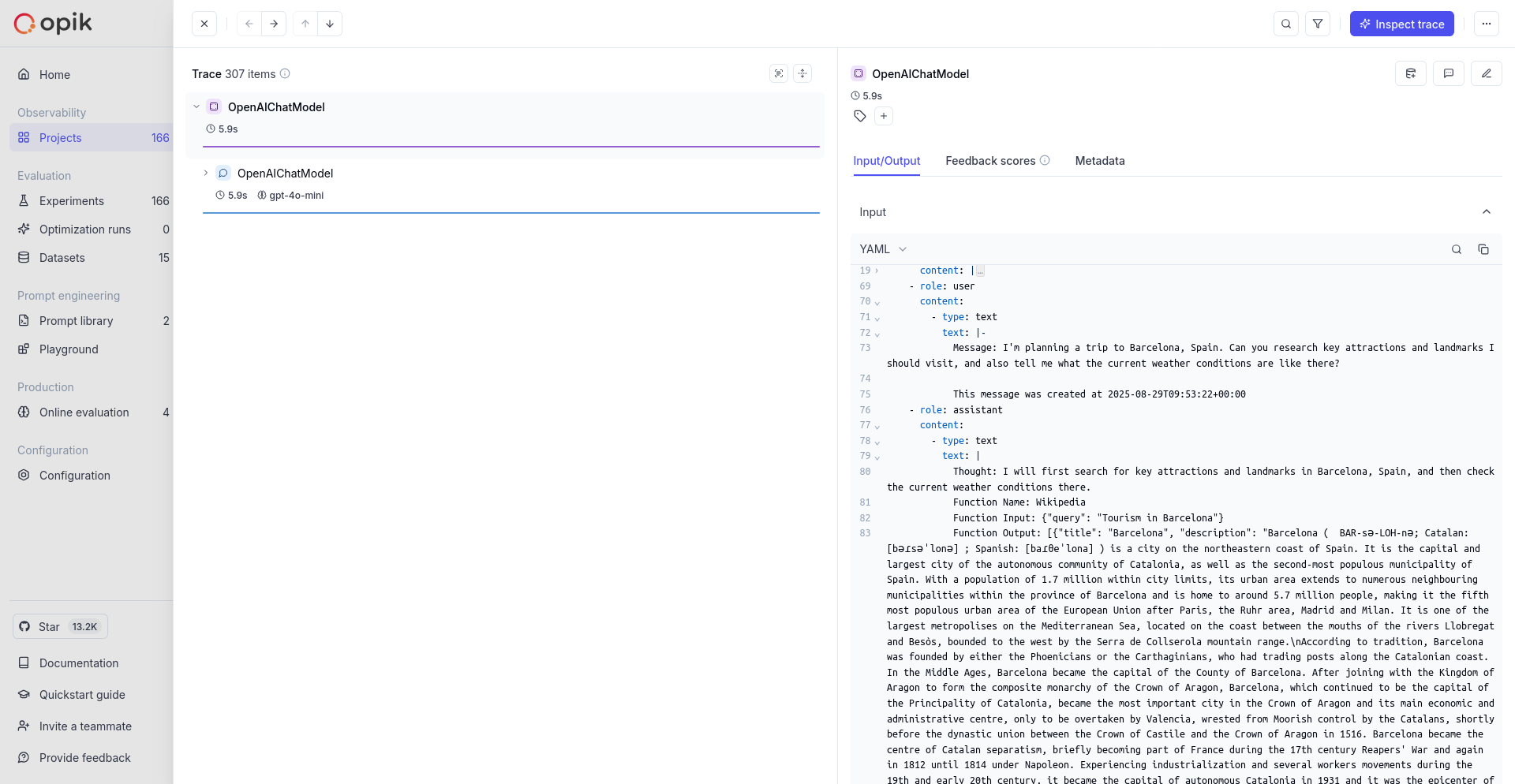

Using Opik with BeeAI

Set up OpenTelemetry instrumentation for BeeAI:
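A minimal sketch of such a setup follows. It assumes the OpenTelemetry SDK, the OTLP HTTP exporter, and the openinference-instrumentation-beeai package (which is assumed to provide BeeAIInstrumentor) are installed, and that the OTLP environment variables are already set; setup_beeai_tracing is a hypothetical function name. The third-party imports sit inside the function so that a missing package surfaces as an ImportError before any global state is changed.

```python
def setup_beeai_tracing():
    """Register an OTLP-exporting tracer provider and instrument BeeAI.

    Sketch under assumptions: the package names and BeeAIInstrumentor
    are not confirmed by this page; verify them for your installation.
    """
    # Third-party imports (assumed to be installed).
    from openinference.instrumentation.beeai import BeeAIInstrumentor
    from opentelemetry import trace as trace_api
    from opentelemetry.exporter.otlp.proto.http.trace_exporter import (
        OTLPSpanExporter,
    )
    from opentelemetry.sdk import trace as trace_sdk
    from opentelemetry.sdk.trace.export import SimpleSpanProcessor

    # OTLPSpanExporter reads OTEL_EXPORTER_OTLP_ENDPOINT and
    # OTEL_EXPORTER_OTLP_HEADERS from the environment.
    tracer_provider = trace_sdk.TracerProvider()
    tracer_provider.add_span_processor(SimpleSpanProcessor(OTLPSpanExporter()))
    trace_api.set_tracer_provider(tracer_provider)

    # Patch BeeAI so that agent runs emit spans to the provider above.
    BeeAIInstrumentor().instrument()
    return tracer_provider


if __name__ == "__main__":
    try:
        setup_beeai_tracing()
        print("BeeAI tracing configured.")
    except ImportError as exc:
        print(f"Missing dependency: {exc}")
```

Call setup_beeai_tracing() once at startup, before creating or running any BeeAI agent, so that the first agent run is already traced.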

Further improvements

If you have any questions or suggestions for improving the BeeAI integration, please open an issue on our GitHub repository.