Comet Resources

How to Scale Today’s Data Science Initiatives

Learn how to define your key business objectives, manage the way you scale your ML initiatives, organize your DS team, and build out your processes and infrastructure.

Standardizing the ML Experimentation Process

ML industry experts have identified the process and requirements for developing production-ready machine learning models. This eBook shows how a standard approach helps get more models into production.

Building Effective ML Teams

This eBook explains the three critical traits of successful ML teams (visibility, reproducibility, and collaboration) and the risks of missing any of them.

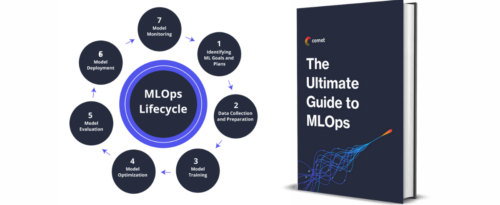

The Ultimate Guide to MLOps

MLOps provides massive returns when organizations develop a robust and efficient system. Learn how to create a stronger workflow with this ultimate guide.

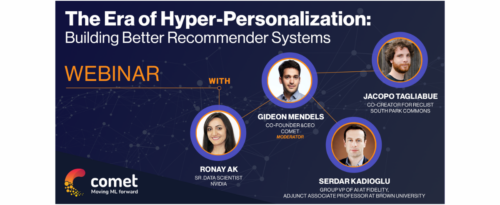

The Era of Hyper-Personalization: Building Better Recommender Systems

In this roundtable, Ronay Ak from Nvidia, Serdar Kadioglu from Fidelity Investments, and Jacopo Tagliabue discuss how to build better recommender systems. Our guest speakers share many lessons learned from building RecSys and from collaborating with others along the way.

Industry Panel: Defining the MLOps Stack

What is MLOps? What does it mean to you as a data scientist? Watch this on-demand webinar to learn the answers to these questions and more.

Customer Roundtable: Developing ML at Enterprise Scale

In this session, join the teams at Superb AI, AI Infrastructure Alliance, and Comet as we cover:

How a modular approach to the ML tech stack enables teams to choose the best tools for their use case, data, and business

Accelerating AI Value with Modular MLOps

In this session, join the teams at Superb AI, AI Infrastructure Alliance, and Comet as we cover:

How a modular approach to the ML tech stack enables teams to choose the best tools for their use case, data, and business

ML System Design for Continuous Experimentation

In this webinar, we will examine some naïve ML workflows that don't take the development-production feedback loop into account and explore why they break down.