Prompt Engineering

Introduction

In just five days, ChatGPT exceeded one million users, a milestone that took Netflix 3.5 years, Facebook ten months, and Instagram two months. It wasn’t long before ChatGPT was named “the best artificial intelligence chatbot ever released” by the NYT🏆. The underlying language model was trained on a dataset of roughly 300 billion words (~570 GB) and fine-tuned from GPT-3.5 (text-davinci-003). It has attracted the attention not only of artificial intelligence researchers, but also of anyone even remotely interested in technology.

ChatGPT has been tested in many different scenarios and has performed very well on important tests. Alongside its many well-intentioned uses, it can also write believable phishing messages and even generate malicious code, and some of that output has amounted to exploitation. At this point, a new concept emerged: “Prompt Engineering.” And many are now calling it “the career of the future.”

🔮What is Prompt Engineering?

Just two months after OpenAI introduced ChatGPT, the number of monthly users reached 100 million, a remarkable feat!

While users initially experimented with different commands on their own, they began to push the limits of the language model’s capabilities day by day, producing more and more surprising outputs each time. After a while, they started pushing their own capabilities, not just those of ChatGPT, and focused on how to get the most out of the language model.

The ability to give the model a good starting point and guide it toward the right output plays a key role in applications that integrate into daily work and make life easier. The output produced by language models varies significantly with the prompt supplied. ⚠️

“Prompt Engineering” is the practice of guiding the language model with a clear, detailed, well-defined, and optimized prompt in order to achieve a desired output. It is now possible to see job postings for this role with an annual salary between $250k and $335k!

🐲 The Anatomy of a Prompt

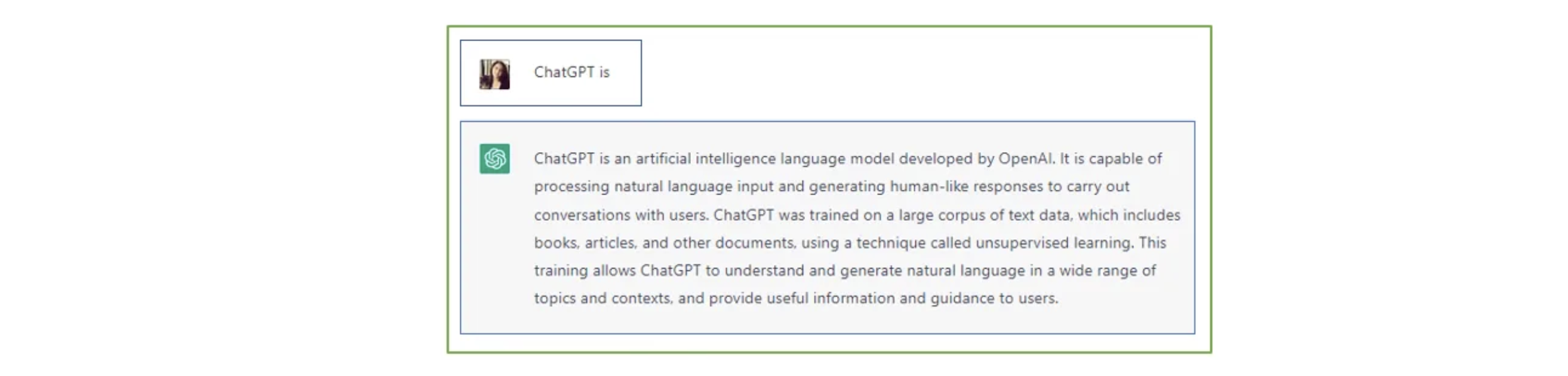

Let’s try to understand the prompt structure through an interface offered by OpenAI. You can see the response ChatGPT provides to a fairly simple command like “ChatGPT is” in the example below. While this sentence isn’t actually a command with an action, it’s enough for the chatbot to produce a description of what ChatGPT is. Here, the user provides the input “ChatGPT is” (an ‘instruction’), and the language model produces the output (a ‘response’).

The above example actually shows the two basic elements of a prompt. The language model needs a user-supplied instruction to generate a response. In other words, when a user provides an instruction, the language model produces a response. But what other structural elements can be fed to ChatGPT?

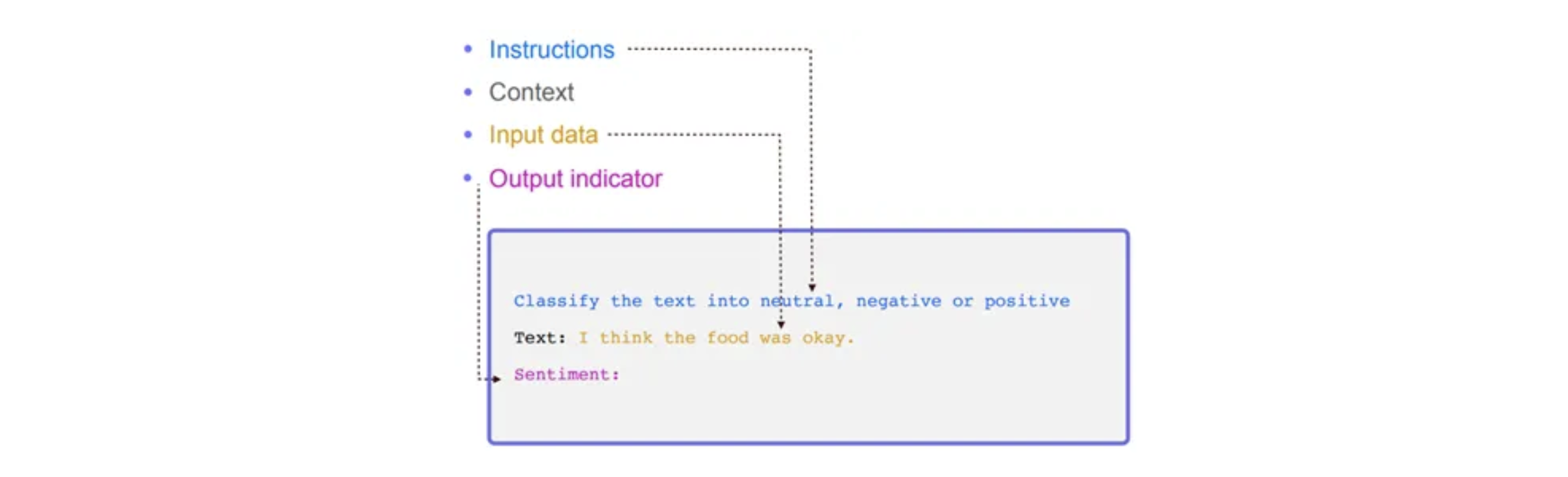

- Instructions: This is the section where the task description is expressed. The task to be done must be clearly stated.

- Context: A task can be understood differently depending on its context. For this reason, providing the command without its context can cause the language model to output something other than what is expected.

- Input data: Indicates which and what kind of data the command will be executed on. Presenting it clearly to the language model in a structured format increases the quality of the response.

- Output indicator: This is an indicator of the expected output. Here, what the expected output is can be defined structurally, so that output in a certain format can be produced.
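As a rough illustration, the four elements can be assembled into a single prompt string. The sketch below is a minimal example; the `build_prompt` helper, its labels, and the sample review text are hypothetical and not part of any OpenAI library.

```python
def build_prompt(instruction, context="", input_data="", output_indicator=""):
    """Join the four prompt elements, skipping any that are empty.

    Hypothetical helper for illustration only.
    """
    parts = [instruction.strip()]
    if context:
        parts.append(f"Context: {context.strip()}")
    if input_data:
        parts.append(f"Input: {input_data.strip()}")
    if output_indicator:
        parts.append(f"Output format: {output_indicator.strip()}")
    return "\n\n".join(parts)


prompt = build_prompt(
    instruction="Classify the sentiment of the review.",
    context="The review was left for a neighborhood restaurant.",
    input_data="The soup was cold, but the staff were friendly.",
    output_indicator="One word: positive, negative, or mixed.",
)
print(prompt)
```

Only the instruction is required here; the other elements are added when they help the model interpret the task.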

🤹🏻♀️ Types of Prompts

It is a well-known fact that the better the prompt, the better the output! So, what kinds of prompts are there? Let’s try to understand the different types of prompt! Before you know it, you’ll be a prompt engineer yourself!

Many advanced prompting techniques have been designed to improve performance on complex tasks, but first let’s get acquainted with simpler prompt types, starting with the most basic.

📌Instruction Prompting

Simple instructions provide some guidance for producing useful outputs. For example, an instruction can express a clear and simple mathematical operation such as “Add the numbers from 1 to 99.”

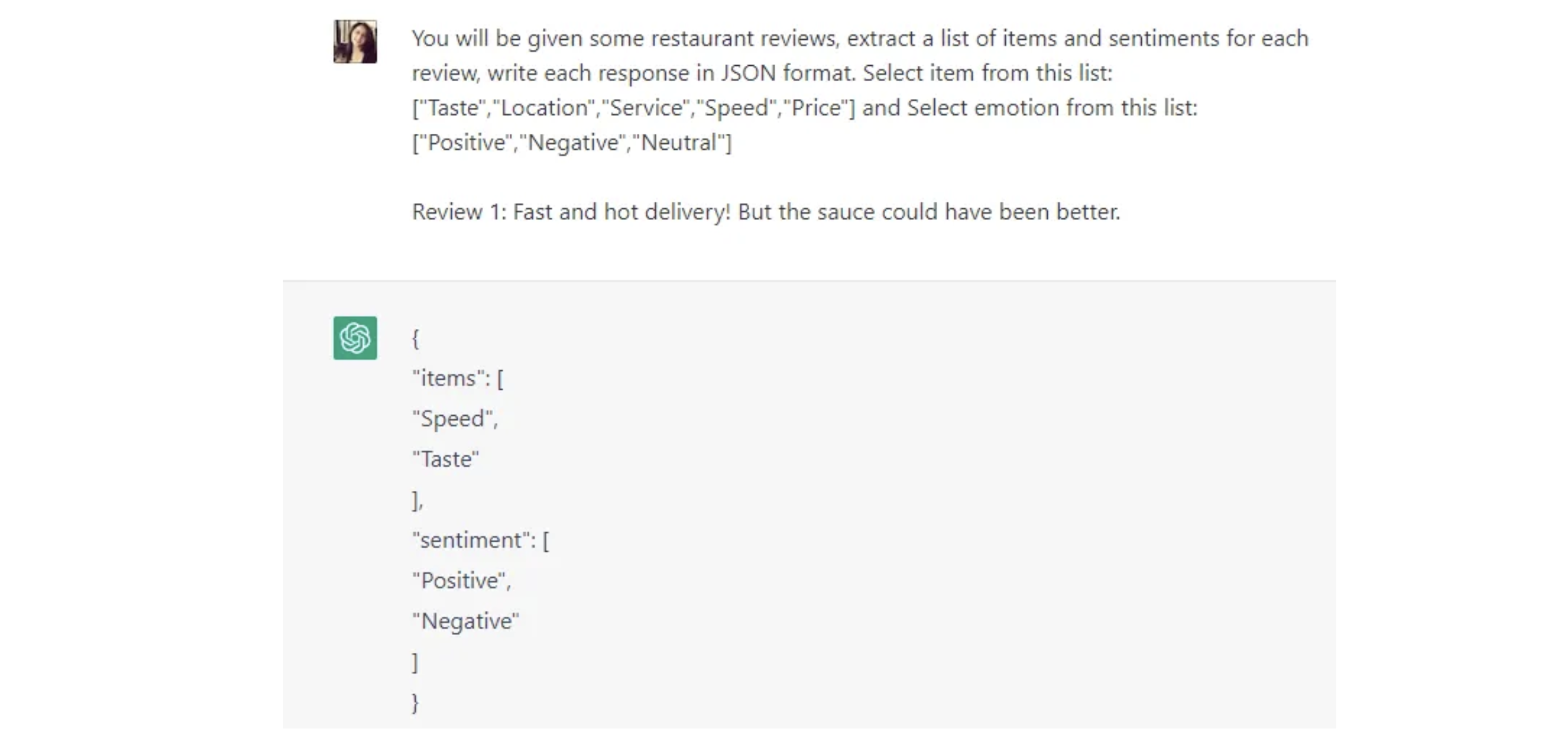

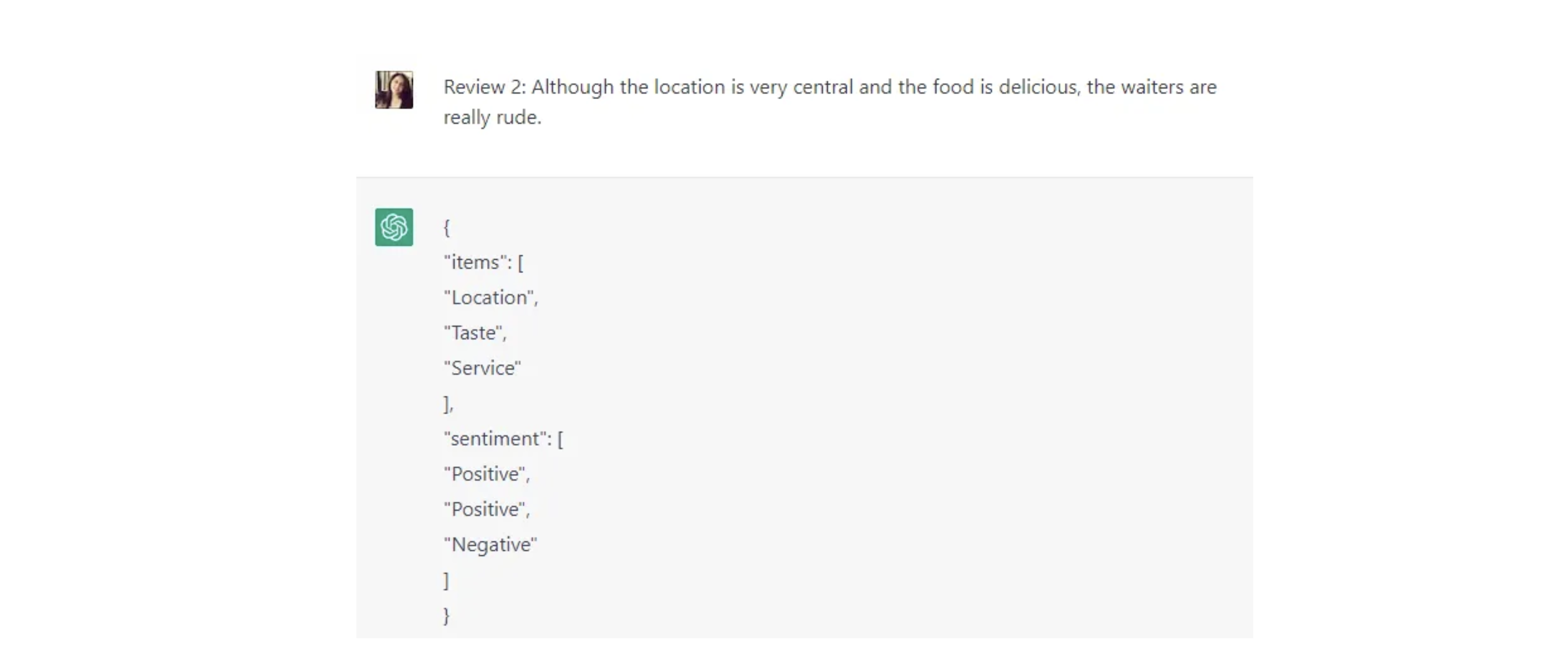
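Language models can make arithmetic mistakes, so it helps to know the expected answer before judging a response. A quick check in Python, using the closed form n(n+1)/2 for the sum of the first n integers:

```python
n = 99
closed_form = n * (n + 1) // 2      # Gauss's formula for 1 + 2 + ... + n
brute_force = sum(range(1, n + 1))  # add the numbers one by one

assert closed_form == brute_force
print(closed_form)  # 4950
```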

Or, you could try your hand at a slightly more complicated command. For example, maybe you want to analyze customer reviews for a restaurant separately according to taste, location, service, speed and price. You can easily do this with the command below:

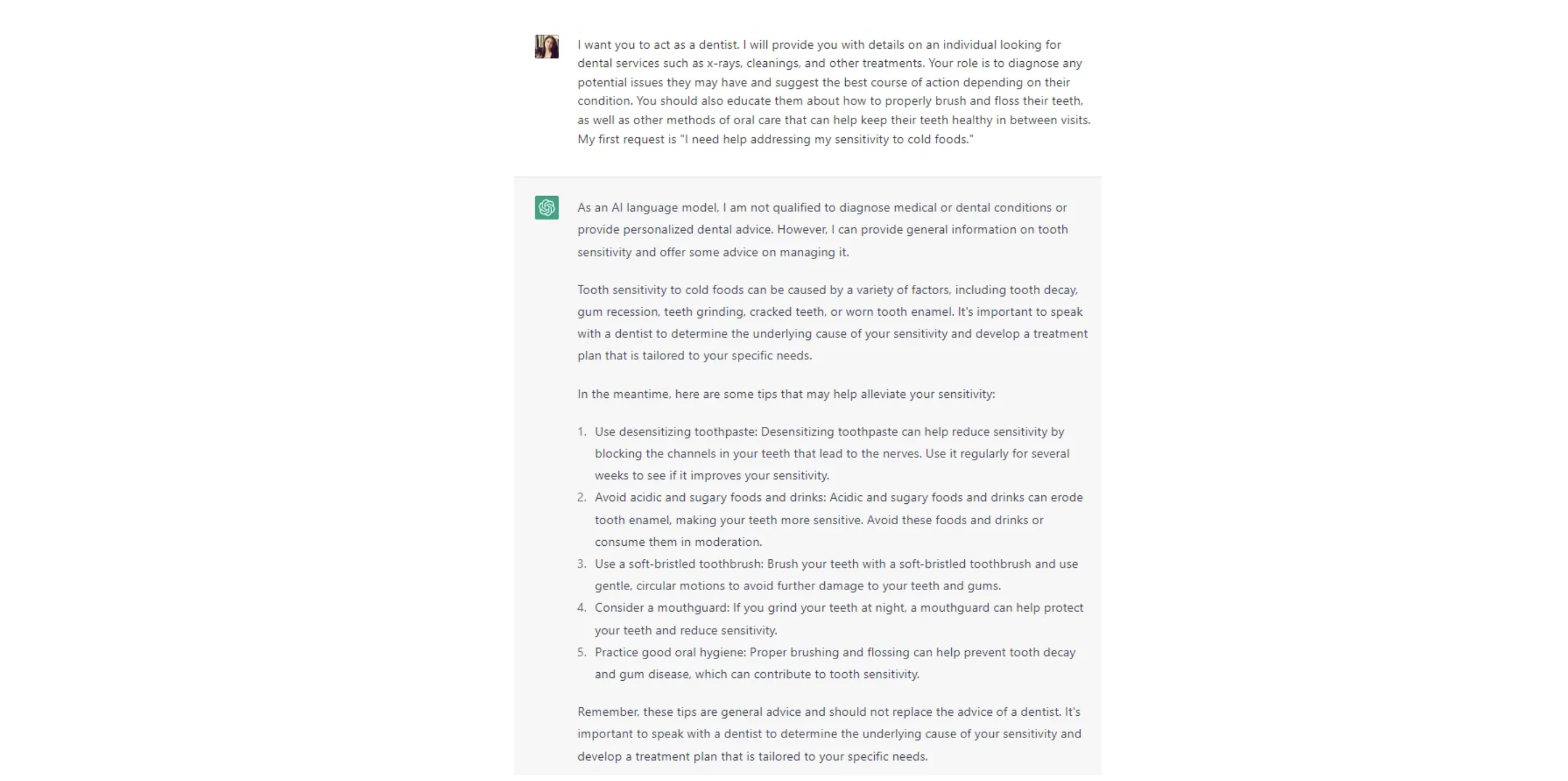
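The prompt in the screenshot is not reproduced here as text, so the following is only a sketch of how such a prompt might be assembled in code. The wording of the instruction, the `build_review_prompt` name, and the sample reviews are all assumptions.

```python
ASPECTS = ["taste", "location", "service", "speed", "price"]


def build_review_prompt(reviews, aspects=ASPECTS):
    """Ask for a per-aspect analysis of numbered customer reviews."""
    numbered = "\n".join(f"{i}. {r}" for i, r in enumerate(reviews, start=1))
    return (
        "Analyze the restaurant reviews below separately for each of these "
        f"aspects: {', '.join(aspects)}.\n\n"
        f"Reviews:\n{numbered}"
    )


print(build_review_prompt([
    "Great pasta, but we waited 40 minutes.",
    "Cheap and close to the station.",
]))
```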

📌Role Prompting

Another approach is to assign a role to the artificial intelligence entity before the instructions. This technique generates somewhat more successful, or at least specific, outputs.

You can review a collection of examples of prompts to use with the ChatGPT model in the “Awesome ChatGPT Prompts” repository and try some sample role prompting yourself.

Now, let’s observe the difference when first assigning a role within the prompt. Let’s imagine a user who needs help to relieve tooth sensitivity to cold foods.

First, we try a simple command: “I need help addressing my sensitivity to cold foods.”

Now, let’s ask for advice again but this time we’ll assign the artificial intelligence a dentist role.

You can clearly see a difference in both the tone and content of the response, given the role assignment.
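In code, role prompting usually amounts to prepending a role statement to the user's request. A minimal sketch follows; the helper name and the exact wording of the role sentence are assumptions, while the request is the one quoted earlier in this section.

```python
def with_role(role, request):
    """Prefix a request with a role assignment (hypothetical helper)."""
    return f"I want you to act as {role}. {request}"


request = "I need help addressing my sensitivity to cold foods."

plain_prompt = request                         # no role assigned
role_prompt = with_role("a dentist", request)  # same request, dentist role

print(plain_prompt)
print(role_prompt)
```

Sending both versions to the same model is a simple way to compare how the role changes tone and content.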

📌“Standard” Prompting

Prompts are considered “standard” when they consist of only one question. For example, ‘Ankara is the capital of which country?’ would qualify as a standard prompt.

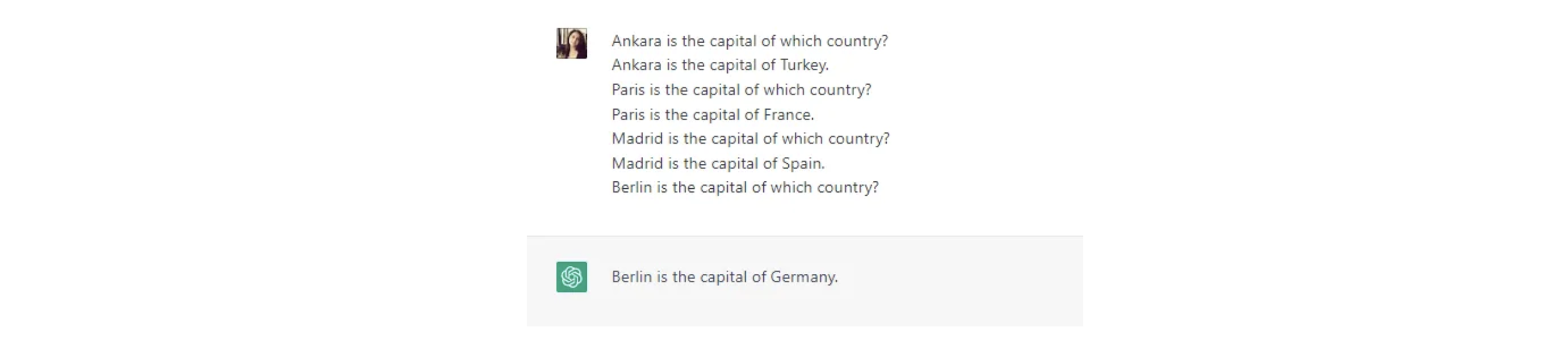

🧩Few shot standard prompts

Few shot standard prompts can be thought of as standard prompts in which a few samples are presented first. Providing examples in the prompt enables in-context learning and steers the model toward better performance.

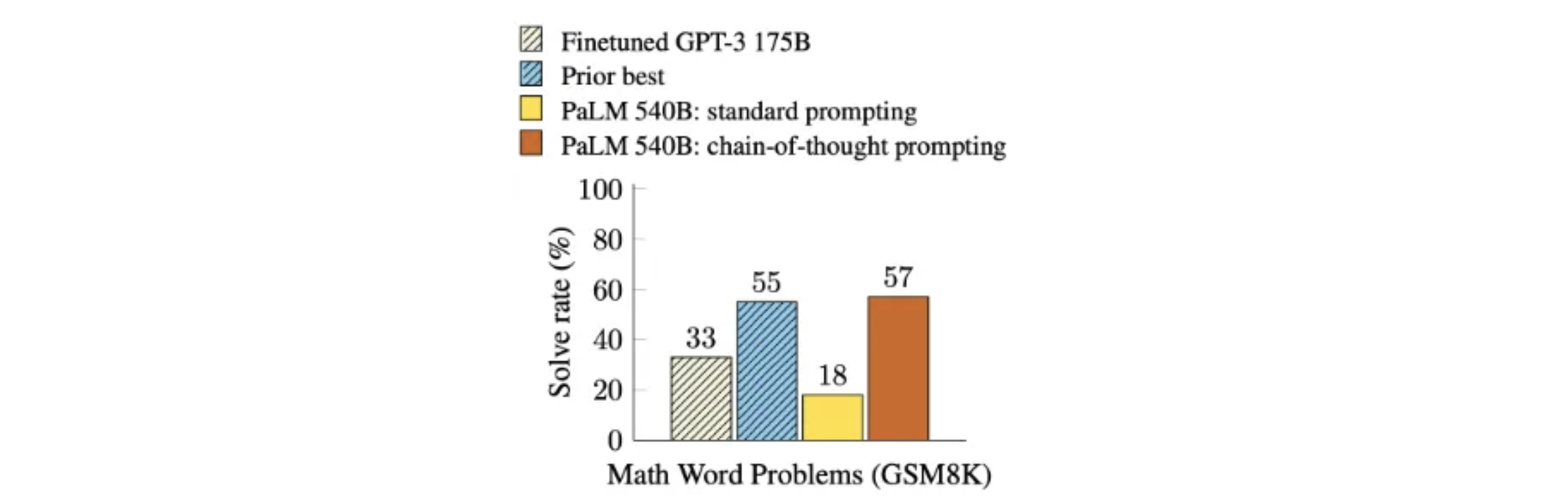

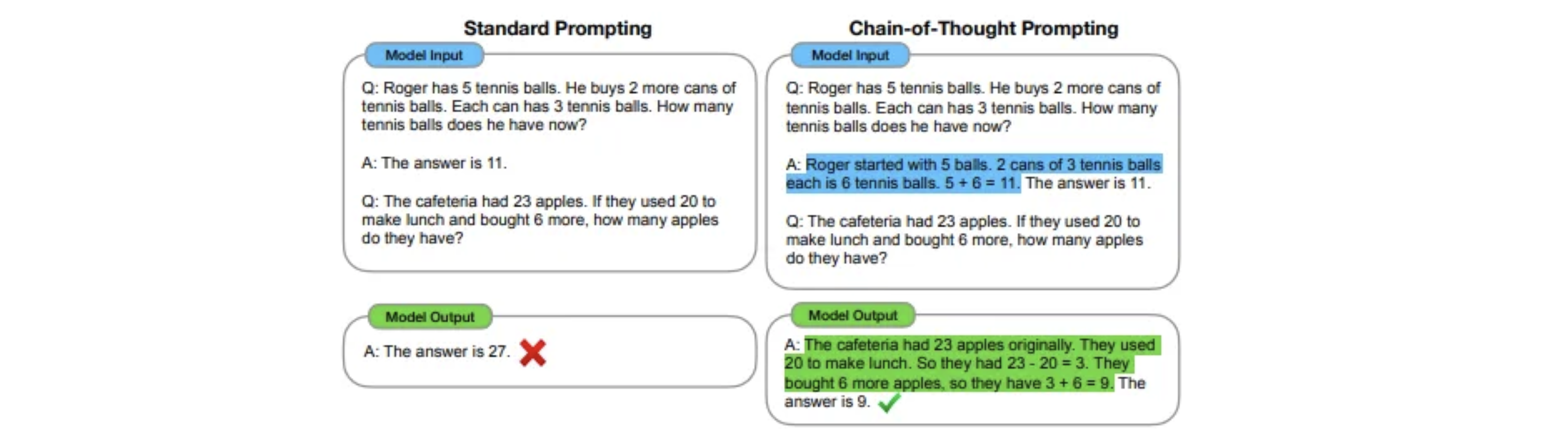
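A few-shot prompt can be generated from a list of question and answer pairs. The sketch below assumes a simple "Q:/A:" layout, which is one common convention rather than a required format; the sample pairs and the function name are made up for illustration.

```python
def few_shot_prompt(examples, question):
    """Format (question, answer) pairs followed by the new, unanswered question."""
    blocks = [f"Q: {q}\nA: {a}" for q, a in examples]
    blocks.append(f"Q: {question}\nA:")
    return "\n\n".join(blocks)


examples = [
    ("Paris is the capital of which country?", "France"),
    ("Tokyo is the capital of which country?", "Japan"),
]
print(few_shot_prompt(examples, "Ankara is the capital of which country?"))
```

The prompt ends with an open "A:" so that the model's completion is the answer to the final question.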

📌Chain of Thought (CoT) Prompting

Chain of Thought prompting is a way of simulating the reasoning process while answering a question, similar to the way a human might think it through. If this reasoning process is demonstrated with examples, the AI can generally achieve more accurate results.

Now let’s try to see the difference through an example.

In the example below, a demonstration of step-by-step reasoning is presented first to show how the AI should “think” through the problem or interpret it.

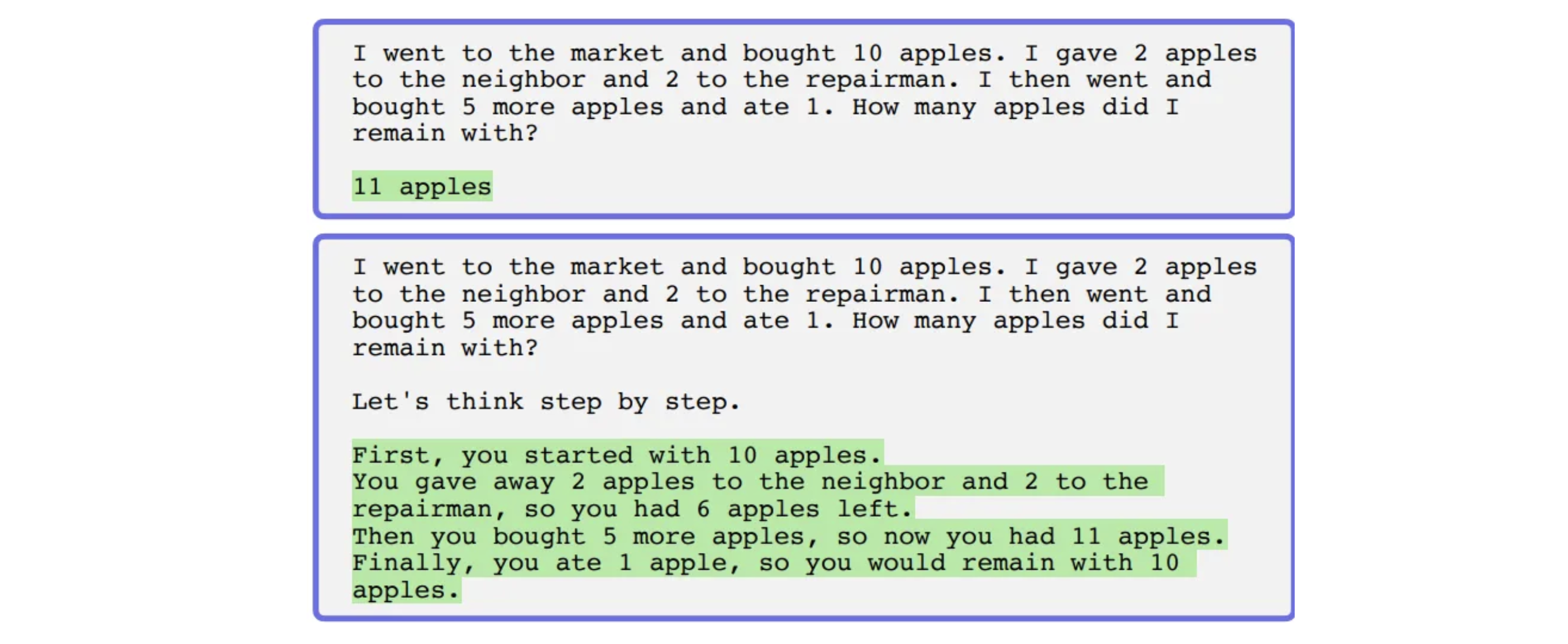
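The same idea can be expressed in code: the prompt contains one worked exemplar whose answer spells out its intermediate steps, followed by the new question. The exemplar and the helper below are invented for this sketch and are not taken from the screenshot.

```python
# One worked exemplar: the answer spells out its intermediate steps.
EXEMPLAR_QUESTION = (
    "A shop has 3 boxes with 4 apples in each box. It sells 5 apples. "
    "How many apples are left?"
)
EXEMPLAR_ANSWER = (
    "There are 3 * 4 = 12 apples in total. "
    "After selling 5, 12 - 5 = 7 remain. The answer is 7."
)


def chain_of_thought_prompt(question):
    """Prepend the worked exemplar so the model can imitate its reasoning style."""
    return (
        f"Q: {EXEMPLAR_QUESTION}\nA: {EXEMPLAR_ANSWER}\n\n"
        f"Q: {question}\nA:"
    )


print(chain_of_thought_prompt(
    "A library has 6 shelves with 20 books each and lends out 15 books. "
    "How many books remain?"
))
```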

🧩 “Zero Shot Chain of Thought (Zero-Shot CoT)”

“Zero Shot Chain of Thought (Zero-Shot CoT)” differs slightly from this approach. Here, the model’s reasoning ability can again be improved by adding a directive such as “Let’s think step by step,” without presenting any example to the language model.

Experiments show that the “Zero Shot Chain of Thought” approach alone is not as effective as Chain of Thought prompting with examples. On the other hand, the choice of trigger phrase matters a great deal, and “Let’s think step by step” has been observed to produce more successful results than many other phrases.
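In code, zero-shot CoT is a one-line change: instead of adding worked examples, the trigger phrase is placed at the start of the answer. The "Q:/A:" layout and the helper name below are assumptions made for this sketch.

```python
TRIGGER = "Let's think step by step."


def zero_shot_cot_prompt(question, trigger=TRIGGER):
    """Append a reasoning trigger instead of supplying worked examples."""
    return f"Q: {question}\nA: {trigger}"


print(zero_shot_cot_prompt(
    "A juggler has 16 balls. Half are golf balls, and half of the golf balls "
    "are blue. How many blue golf balls are there?"
))
```

Because the trigger is a parameter, alternative phrasings can be swapped in and compared on the same questions.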

🧨Some other innovative approaches are:

- Generated Knowledge Prompting, which has the model first generate relevant knowledge and then supplies it as additional information in the context.

- Program-aided Language Model (PAL), which uses a language model to read problems and create programs as intermediate reasoning steps.

- Self-Consistency-like approaches that can be used to improve the performance of chain-of-thought prompting in different tasks.
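Self-consistency, as it is usually described, samples several chain-of-thought completions for the same question and keeps the final answer that appears most often. The voting step can be sketched as below; the sampled answers are hard-coded placeholders rather than real model output, and `majority_answer` is a hypothetical helper.

```python
from collections import Counter


def majority_answer(sampled_answers):
    """Return the most frequent final answer and its share of the votes."""
    counts = Counter(a.strip() for a in sampled_answers)
    answer, votes = counts.most_common(1)[0]
    return answer, votes / len(sampled_answers)


# Placeholder answers standing in for five sampled completions.
samples = ["11", "11", "12", "11", "9"]
print(majority_answer(samples))  # ('11', 0.6)
```

The vote share gives a rough signal of how much the sampled reasoning paths agree with each other.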

🪐OpenAI API Overview

The OpenAI API is a general-purpose “text-in, text-out” interface that can be applied to almost any task that involves understanding or generating natural language or code, from creating content or code to summarizing text, transferring styles, answering questions, and more. It gives you access to a family of AI models developed by OpenAI, with different power levels suited to different tasks, and also lets you fine-tune your own custom models.

🎯 The Completions endpoint, which is the core of the OpenAI API, returns a text completion in response to the text you enter as a prompt, attempting to match whatever instructions or context you gave it. In effect, it works like a very advanced autocomplete.

🎯 The OpenAI API offers a range of AI models with different capabilities and pricing for prompt engineering.

🤑 For your initial trials, each user is offered $18 in free credit to use during the first 3 months. The system is “pay as you go,” with pricing specific to the model you use for your task. For more detailed information, see OpenAI’s pricing page.

Of these, the GPT-3 models that can understand and generate natural language are Davinci, Curie, Babbage and Ada. There are also Codex models, descendants of GPT-3 that are trained on both natural language data and billions of lines of code from GitHub. For more detailed information about the models, you can review the official documentation.

🎯 Models offered by OpenAI understand and process text by breaking it down into tokens. Tokens can be whole words or just chunks of characters. The number of tokens processed in a single API request, prompt and completion combined, is limited to 2048 tokens, or about 1500 words, for most models❗
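For a rough sense of whether a prompt fits, the ratio quoted above (2048 tokens for about 1500 words) can be turned into a back-of-the-envelope estimate. This is only a heuristic based on that ratio: actual token counts depend on the model's tokenizer, and the function names and the reserved completion budget are made up for this sketch.

```python
import math

MAX_TOKENS = 2048              # limit quoted in the text for most models
WORDS_PER_TOKEN = 1500 / 2048  # ratio implied by "2048 tokens or about 1500 words"


def estimate_tokens(text):
    """Approximate the token count from a whitespace word count."""
    words = len(text.split())
    return math.ceil(words / WORDS_PER_TOKEN)


def fits_in_context(prompt, reserved_for_completion=256):
    """Check that the prompt plus a reserved completion budget stays under the limit."""
    return estimate_tokens(prompt) + reserved_for_completion <= MAX_TOKENS


print(estimate_tokens("word " * 1500))  # 2048
```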

🧨 Other things to keep in mind:

🎯 Apart from well-defining the structural elements of a prompt, let’s also note a few important points to keep in mind when optimizing for the model output.

- Language models with different settings can produce very different output for the same prompt.

- Controlling how deterministic the model is matters a great deal when creating completions for prompts.

- There are two basic parameters to consider here: temperature and top_p (these parameter values should be kept low for more precise answers).

In general, the model and temperature are the most commonly used parameters to change the model output, but refer to the API reference to understand all the parameters in detail.
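As an illustration, these settings are passed as fields of the request body sent to the Completions endpoint. The sketch below only builds that JSON body and sends nothing; the prompt text and the specific values are arbitrary choices for this example, and the API reference remains the authority on field names and defaults.

```python
import json


def completion_body(prompt, model="text-davinci-003",
                    temperature=0.0, top_p=1.0, max_tokens=64):
    """Build a JSON request body for a completion request (nothing is sent)."""
    if not 0.0 <= top_p <= 1.0:
        raise ValueError("top_p must be between 0 and 1")
    return json.dumps({
        "model": model,
        "prompt": prompt,
        "temperature": temperature,  # lower values give more deterministic output
        "top_p": top_p,
        "max_tokens": max_tokens,
    })


# A low temperature for a factual question, a higher one for creative text.
factual = completion_body("Ankara is the capital of which country?", temperature=0.0)
creative = completion_body("Write a short poem about autumn.", temperature=0.9)
print(factual)
```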

🎯 The OpenAI API authenticates requests with secret API keys, which can be found on the ‘View API Keys’ page under your profile. For a quick start, you can review this fact sheet, and you can also view model outputs and experiment in the playground provided by OpenAI.
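The API expects the secret key as a bearer token in the `Authorization` header. A common pattern is to read the key from an environment variable rather than hard-coding it; the sketch below assumes the key is stored in a variable named `OPENAI_API_KEY` and only constructs the headers without making a request.

```python
import os


def auth_headers(env_var="OPENAI_API_KEY"):
    """Build request headers from a key stored in the environment."""
    api_key = os.environ.get(env_var)
    if not api_key:
        raise RuntimeError(f"Set the {env_var} environment variable first")
    return {
        "Authorization": f"Bearer {api_key}",
        "Content-Type": "application/json",
    }
```

Keeping the key out of source code makes it less likely to be committed to a repository or shared by accident.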

🍀 Recommendations and Tips for Prompt Engineering with OpenAI API

Let’s try to summarize some of OpenAI’s suggested tips and usage recommendations on how to give clear and effective instructions to GPT-3 and Codex when prompt engineering.

🔸Use latest models for the best results:

For text generation, the most current model is “text-davinci-003”, and for code generation it is “code-davinci-002” (as of November 2022). See OpenAI’s model documentation to keep up with current models and for more details about them.

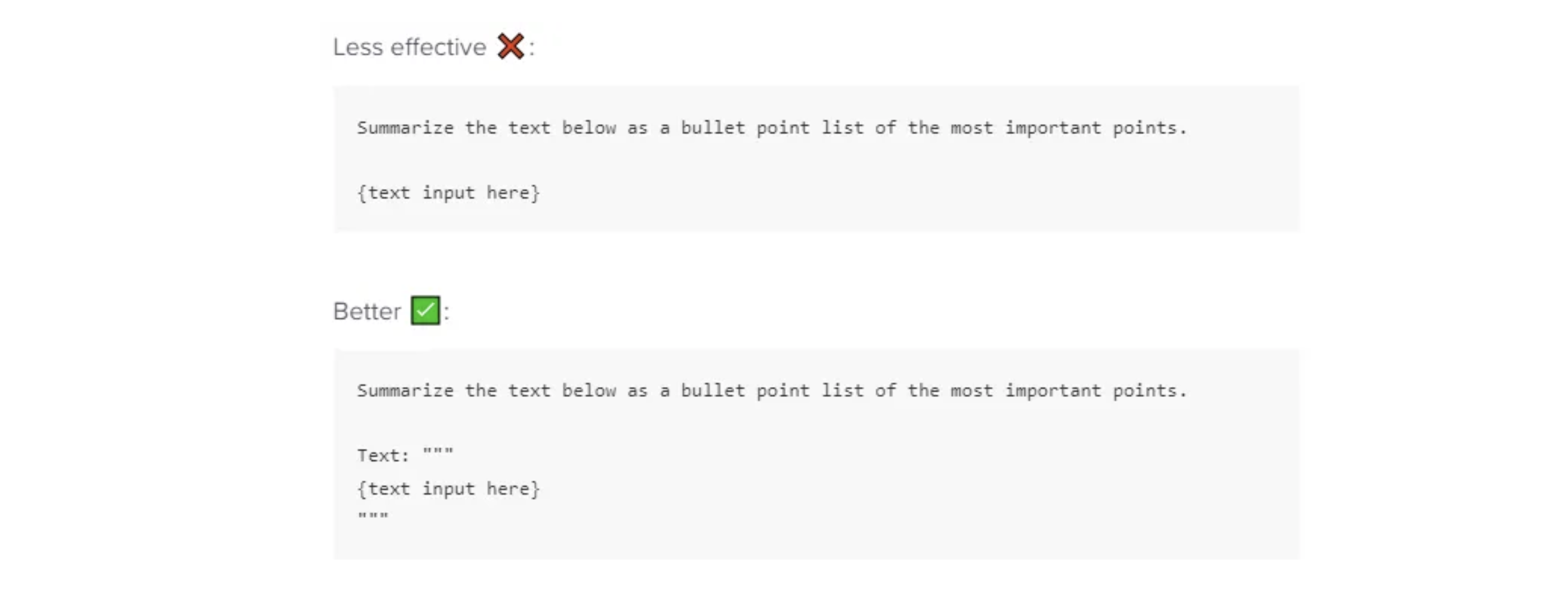

🔸Instructions must be at the beginning of the prompt, and the instruction and content must be separated by separators such as ### or """:

First of all, we must clearly state the instructions to the language model, and then use various separators to define the instruction and its content. Thus, it is presented to the language model in a more understandable way.
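A minimal sketch of this layout: the instruction comes first, and the content is fenced off with a separator. The helper name and the sample text are assumptions for illustration.

```python
def instruction_then_content(instruction, content, separator='"""'):
    """Put the instruction first, then fence the content with a separator."""
    return f"{instruction}\n\nText: {separator}\n{content}\n{separator}"


print(instruction_then_content(
    "Summarize the text below as a bullet point list of the most important points.",
    "Prompt engineering is the practice of guiding a language model with a clear prompt.",
))
```

Passing `separator="###"` produces the other delimiter style mentioned in this tip.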

🔸Give instructions that are specific, descriptive and as detailed as possible:

By giving clear commands about context, text length, format, and style, you can get better outputs. For example, instead of an open-ended command like “Write a poem about OpenAI.”, you could write a more detailed command like “Write a short inspiring poem about OpenAI, focusing on the recent DALL-E product launch (DALL-E is a text to image ML model) in the style of a {famous poet}”.

🔸Provide the output format expressed with examples:

If you have a preferred output format in mind, we recommend providing a format example, as shown below:

Less effective ❌:

Extract the entities mentioned in the text below. Extract the following 4 entity types: company names, people names, specific topics and themes.

Text: {text}

Better ✅:

Extract the important entities mentioned in the text below. First extract all company names, then extract all people names, then extract specific topics which fit the content and finally extract general overarching themes

Desired format:

Company names: <comma_separated_list_of_company_names>

People names: -||-

Specific topics: -||-

General themes: -||-

Text: {text}
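One practical benefit of a fixed output format is that the response becomes easy to parse. The sketch below reads a response written in the desired format above into a dictionary; the sample response is made up rather than real model output, and `parse_entities` is a hypothetical helper.

```python
FIELDS = ["Company names", "People names", "Specific topics", "General themes"]


def parse_entities(response):
    """Turn 'Label: a, b, c' lines into a dict of lists; unknown lines are ignored."""
    result = {field: [] for field in FIELDS}
    for line in response.splitlines():
        label, sep, values = line.partition(":")
        if sep and label.strip() in result:
            result[label.strip()] = [v.strip() for v in values.split(",") if v.strip()]
    return result


# Made-up response text in the desired format, not real model output.
sample = (
    "Company names: OpenAI, Comet\n"
    "People names:\n"
    "Specific topics: prompt engineering, few-shot prompting\n"
    "General themes: artificial intelligence"
)
print(parse_entities(sample))
```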

🔸Try zero-shot first, then continue with few-shot examples and fine-tune if you still don’t get the output you want:

You can try zero-shot prompt engineering for your command without providing any examples to the language model. If you don’t get as successful output as you want, you can try few-shot methods by guiding the model with a few examples. If you still don’t produce as good an output as you intended, you can try fine-tuning.

⭐There are examples of both zero-shot and few-shot prompts in the previous sections. For fine-tuning, you can check out OpenAI’s fine-tuning best practices.

🔸Avoid imprecise explanations:

When presenting a command to the language model, use clear and precise language. Avoid vague, padded descriptions.

Less effective ❌:

The description for this product should be fairly short, a few sentences only, and not too much more.

Better ✅:

Use a 3 to 5 sentence paragraph to describe this product.

🔸Tell what to do rather than what not to do:

Avoiding negative sentences and emphasizing intent will lead to better results.

Less effective ❌:

The following is a conversation between an Agent and a Customer. DO NOT ASK USERNAME OR PASSWORD. DO NOT REPEAT.

Customer: I can't log in to my account.

Agent:

Better ✅:

The following is a conversation between an Agent and a Customer. The agent will attempt to diagnose the problem and suggest a solution, whilst refraining from asking any questions related to PII. Instead of asking for PII, such as username or password, refer the user to the help article www.samplewebsite.com/help/faq

Customer: I can’t log in to my account.

Agent:

🔸Code Generation Specific — Use “leading words” to nudge the model toward a particular pattern:

It may be necessary to provide some hints to guide the language model when asking it to generate a piece of code. For example, a starting point can be provided, such as “import” when it needs to start writing code in Python, or “SELECT” when it needs to write an SQL query.
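A small sketch of this tip: the task is described in comments, and the prompt ends with the leading word so the completion continues in the intended language. The helper, the comment style per language, and the sample task are assumptions for illustration.

```python
LEADING_WORDS = {"python": "import", "sql": "SELECT"}


def code_prompt(task_lines, language):
    """Describe the task in comments, then end with a leading word as a hint."""
    comment = "--" if language == "sql" else "#"
    header = "\n".join(f"{comment} {line}" for line in task_lines)
    return f"{header}\n\n{LEADING_WORDS[language]}"


print(code_prompt(
    ["Write a simple Python function that",
     "1. asks me for a number in miles",
     "2. converts miles to kilometers"],
    "python",
))
```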

🤖Conclusion

ChatGPT has created a lot of excitement not only among researchers but also computer and tech aficionados and has become a popular topic, especially on social media. The most effective way to benefit from this technology is to create optimized requests. Writing ChatGPT prompts appears to be in high demand and is quickly becoming a sought-after skill in many industries. This article discusses the prompt structure, its different types, and some recommended tips for effective prompt writing.

You can find some important resources in the reading list.

📚Further Reading

- How to write the perfect ChatGPT prompt and become a ChatGPT expert

- Cohere.AI

- A Complete Introduction For Large Language Models

- ChatGPT, Generative AI and GPT-3 Apps and use cases

- Guide

- Best practices for OpenAI API

- Awesome ChatGPT Prompts

- Learn Prompting
