Organize Your Prompt Engineering with CometLLM

Introduction

Prompt engineering is arguably the most critical part of harnessing the power of Large Language Models (LLMs) like ChatGPT. Whether you’re building tools for content creation, question answering, translation, or coding assistance, well-designed prompts act as the roadmap for LLMs to generate accurate, ethical, and contextually relevant outputs. However, current prompt engineering workflows are tedious and cumbersome. Logging prompts and their outputs to .csv files and then visualizing them in Excel quickly becomes inefficient and confusing. Developers often experiment with hundreds, if not thousands, of prompts for a given use case and are in dire need of tools that scale well!

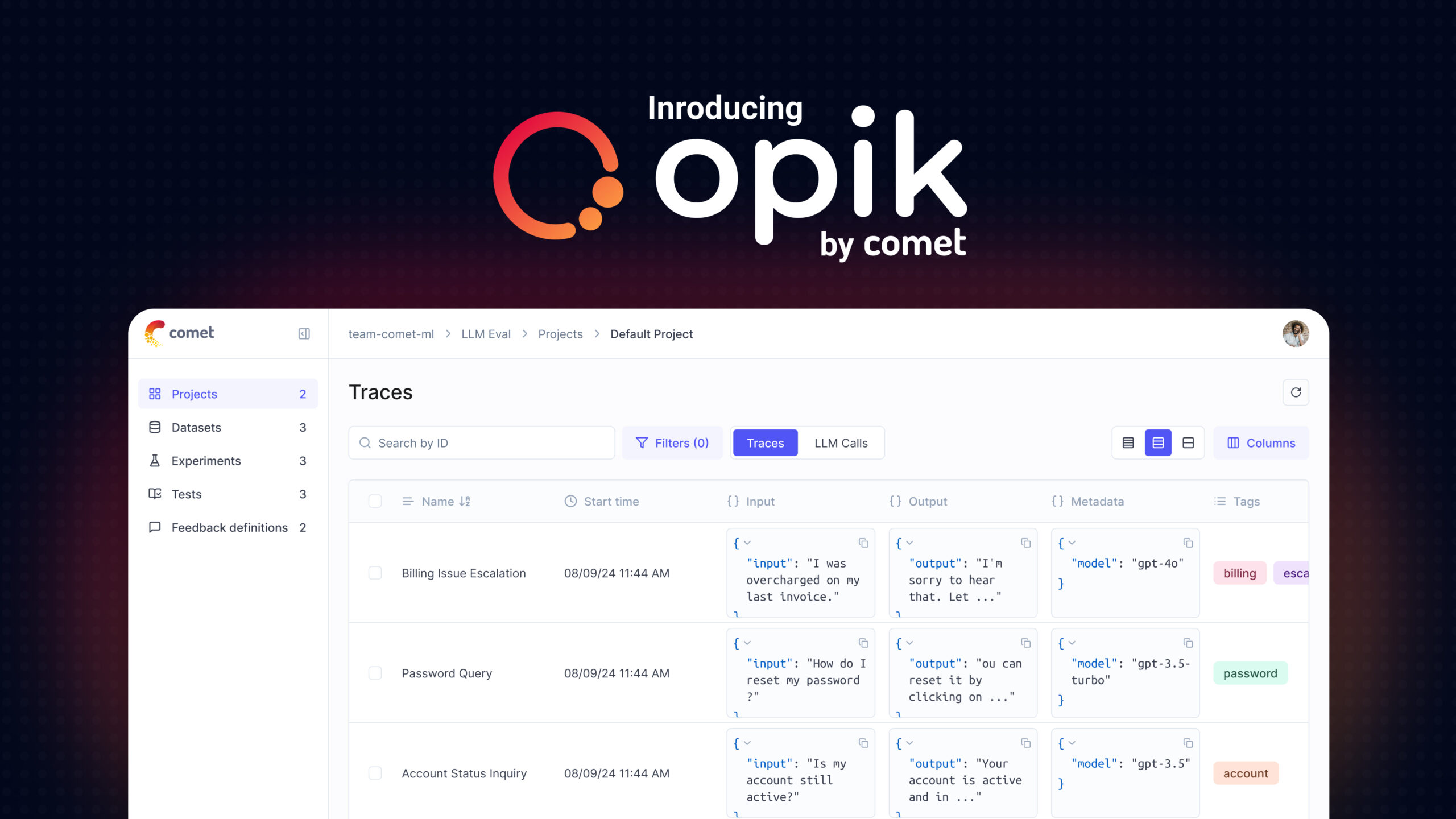

Comet is happy to announce a new solution that aims to make the entire prompt engineering process much easier. Use CometLLM to log and visualize all your prompts and chains and unleash the full potential of Large Language Models!

P.S. If you prefer to learn by code, check out our Colab notebook, where we show you how to log prompts from open-source Hugging Face models!

Get Started With CometLLM

Integrating CometLLM into your prompt experimentation workflows is seamless!

First, install the package via pip:

pip install comet_llm

Then, head over to Comet to create a free account and get your API key! Once you have that, you’re all set to start logging prompts and their outputs to Comet.

import comet_llm

comet_llm.log_prompt(
    prompt="What is your name?",
    output=" My name is Alex.",
    api_key="YOUR_COMET_API_KEY",
    project="YOUR_LLM_PROJECT",
)
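If you would rather not hardcode your API key in a script, one option is to read it from the environment before logging. The snippet below is a minimal sketch, not an official recipe: the helper names `comet_credentials` and `log_example_prompt` are hypothetical, and the environment variable names `COMET_API_KEY` and `COMET_PROJECT_NAME` are assumptions you should check against your own Comet setup.

```python
import os


def comet_credentials(env=os.environ):
    """Build the api_key/project keyword arguments for comet_llm.log_prompt.

    Hypothetical helper. The variable names COMET_API_KEY and
    COMET_PROJECT_NAME are assumptions made for this sketch.
    """
    api_key = env.get("COMET_API_KEY")
    if not api_key:
        raise RuntimeError("Set COMET_API_KEY before logging prompts.")
    return {
        "api_key": api_key,
        # Fall back to a placeholder project name when none is configured.
        "project": env.get("COMET_PROJECT_NAME", "YOUR_LLM_PROJECT"),
    }


def log_example_prompt():
    # Imported inside the function so the helper above can be used
    # (and tested) without the comet_llm package installed.
    import comet_llm

    comet_llm.log_prompt(
        prompt="What is your name?",
        output=" My name is Alex.",
        **comet_credentials(),
    )
```

Calling `log_example_prompt()` then logs the same prompt as above, with the key supplied by your shell instead of your source code.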

Add Token Usage to Prompt Metadata

Prompt usage tokens are the tokens in a language model’s input that are consumed by the prompt or instructions you provide. With OpenAI’s GPT models, the total number of tokens in a request affects its cost and response time, and whether it fits within the model’s limits. Log token usage as metadata on your prompts to make sure you are using the most cost-effective prompts for your use case!
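As a rough sketch of what that can look like in code, the helper below flattens a token-usage mapping (for example, the usage counts returned alongside a completion) into the dotted metadata keys used later in this post. The function names `usage_to_metadata` and `log_with_usage` are hypothetical, and the shape of the incoming `usage` dictionary is an assumption.

```python
def usage_to_metadata(usage):
    """Flatten token counts into dotted metadata keys.

    `usage` is assumed to be a mapping with prompt_tokens and
    completion_tokens, and optionally total_tokens.
    """
    prompt_tokens = int(usage.get("prompt_tokens", 0))
    completion_tokens = int(usage.get("completion_tokens", 0))
    # Derive the total when the provider did not report one.
    total_tokens = int(usage.get("total_tokens", prompt_tokens + completion_tokens))
    return {
        "usage.prompt_tokens": prompt_tokens,
        "usage.completion_tokens": completion_tokens,
        "usage.total_tokens": total_tokens,
    }


def log_with_usage(prompt, output, usage):
    # Imported lazily so usage_to_metadata works without comet_llm installed.
    import comet_llm

    comet_llm.log_prompt(
        prompt=prompt,
        output=output,
        metadata=usage_to_metadata(usage),
    )
```

Keeping the key names consistent across every logged prompt is what makes the token counts comparable later on.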

Score Prompt Outputs

Prompt feedback is crucial for improving the overall quality of Large Language Models. Use the Comet UI to give human feedback on a prompt, and your score will be recorded automatically!

Evaluate Prompt Templates

Prompt templates are predefined structures or formats that guide users in providing input to a language model. Templates help improve the clarity, consistency, and overall quality of LLM responses. Users can log the prompt template along with the prompt input and output to decide which templates produce better responses.

import comet_llm

comet_llm.log_prompt(
    # The fully rendered prompt that was sent to the model.
    prompt="Answer the question and if the question can't be answered, say \"I don't know\"\n\n---\n\nQuestion: What is your name?\nAnswer:",
    # The template and variables that produce the prompt above.
    prompt_template="Answer the question and if the question can't be answered, say \"I don't know\"\n\n---\n\nQuestion: {{question}}\nAnswer:",
    prompt_template_variables={"question": "What is your name?"},
    # Token usage, logged as metadata.
    metadata={
        "usage.prompt_tokens": 7,
        "usage.completion_tokens": 5,
        "usage.total_tokens": 12,
    },
    output=" My name is Alex.",
    duration=16.598,
)
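To keep the logged prompt and its template in sync, you can render the prompt from the template instead of typing both by hand. The sketch below assumes the `{{name}}` placeholder style shown in the example above; `render_template` and `log_templated_prompt` are hypothetical helpers written for this post, not part of the CometLLM SDK.

```python
import re

# Matches placeholders such as {{question}} or {{ question }}.
PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def render_template(template, variables):
    """Replace each {{name}} placeholder with its value from `variables`.

    Raises KeyError if the template uses a variable that was not supplied.
    """
    def substitute(match):
        return str(variables[match.group(1)])

    return PLACEHOLDER.sub(substitute, template)


def log_templated_prompt(template, variables, output):
    # Imported lazily so render_template works without comet_llm installed.
    import comet_llm

    comet_llm.log_prompt(
        prompt=render_template(template, variables),
        prompt_template=template,
        prompt_template_variables=variables,
        output=output,
    )
```

Because the prompt is always derived from the template, comparing templates in the UI reflects what was actually sent to the model.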

Search for Specific Prompts

Prompt engineering is an iterative process, and developers often try hundreds, if not thousands, of prompts. CometLLM makes it easy to find specific prompts with our search functionality. Search for a keyword within a prompt input, output, or template and quickly find the relevant prompts!

Compare and Contrast LLMs

There are many powerful LLMs out there (GPT-3.5, Llama 2, Falcon, Davinci)! But which one is the best for a particular use case? Tag your prompt responses with the LLM that generated them. Then use CometLLM’s group-by functionality to group prompts by model and decide which one is generating the highest quality responses!
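One way to do the tagging in code is to pass the model name when you log each response. This is a minimal sketch: `model_log_kwargs` and `log_model_responses` are hypothetical helpers, and recording the model name in both `tags` and `metadata` is an assumption made here so that either field can be used for grouping.

```python
def model_log_kwargs(model_name, prompt, output):
    """Build log_prompt keyword arguments that record which model answered."""
    return {
        "prompt": prompt,
        "output": output,
        # The model name is stored twice; use whichever field you group by.
        "tags": [model_name],
        "metadata": {"model": model_name},
    }


def log_model_responses(prompt, responses):
    """Log one prompt per model. `responses` maps model name to output text."""
    # Imported lazily so model_log_kwargs works without comet_llm installed.
    import comet_llm

    for model_name, output in responses.items():
        comet_llm.log_prompt(**model_log_kwargs(model_name, prompt, output))
```

For example, `log_model_responses("What is your name?", {"gpt-3.5-turbo": "...", "llama-2": "..."})` would log the same prompt once for each model.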

Visualize Chat History With Chains

Prompt chains are sequences of prompts used to guide a language model’s responses in a conversational or interactive setting. Instead of providing a single prompt, you send multiple prompts in succession to maintain context and guide the model’s behavior throughout a conversation. Log your interactions with chatbots like ChatGPT as chains to quickly track and debug an LLM’s train of thought.
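As a rough sketch of chain logging, the function below records a conversation as one chain with one span per turn, using the SDK's `start_chain`, `Span`, and `end_chain` calls. Several things here are assumptions: `reply_fn` is a hypothetical callable standing in for your chatbot, the `"chat-turn"` category and the input/output key names are made up for illustration, and credentials are expected to come from your Comet configuration.

```python
def turn_inputs(history, user_message):
    """Inputs for one chat turn: the new message plus the conversation so far."""
    # Copy the history so later turns do not mutate what was logged.
    return {"user_message": user_message, "history": list(history)}


def log_chat_as_chain(user_messages, reply_fn):
    """Log a conversation as a single chain, one span per turn.

    `reply_fn(message, history)` is a hypothetical callable that returns
    the chatbot's reply text for a message.
    """
    # Imported lazily so turn_inputs works without comet_llm installed.
    import comet_llm

    comet_llm.start_chain(inputs={"user_messages": list(user_messages)})
    history = []
    for message in user_messages:
        with comet_llm.Span(
            category="chat-turn",  # illustrative category name
            inputs=turn_inputs(history, message),
        ) as span:
            reply = reply_fn(message, history)
            span.set_outputs(outputs={"reply": reply})
        history.append({"user": message, "assistant": reply})
    final_reply = history[-1]["assistant"] if history else None
    comet_llm.end_chain(outputs={"final_reply": final_reply})
```

Logging each turn with the history it saw makes it easier to spot the point where a conversation went off track.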

Check Out the GitHub Repo

CometLLM’s SDK is completely open source and can be found on GitHub! Give the project a star and keep following the repository for exciting product updates!