We’ve spent the past year building tools that make LLM applications more transparent, measurable, and accountable. Since launching Opik, our open-source evaluation platform, we’ve shipped dozens of features focused on monitoring, evaluating, and optimizing LLM applications. These include heuristic and LLM-as-a-Judge metrics, real-time tracing and monitoring, prompt management, development playgrounds, automatic prompt optimization, and much more.

Today, we’re excited to take a new step in our mission: a public beta of our Guardrails project—a lightweight system designed to help developers build safer, more robust LLM applications.

Why Guardrails?

As language models continue to unlock new capabilities, they also raise new questions about content safety, privacy, and responsible deployment. For most LLM applications, it is valuable to be able to diagnose problematic behavior post-hoc. For some LLM applications, however, it is *essential* to prevent problematic behavior in the first place.

Indeed, many users have told us that what they need most in their workflow is a way to catch “bad behavior” early, whether it’s off-topic or inappropriate generation, an accidental leak of sensitive data, or a so-called “jailbreak” of their application. This is where Guardrails come in.

If you’re unfamiliar, “Guardrails” refer to a broad category of tools for validating the inputs and outputs of an LLM application and intervening when they are unacceptable. The way these tools monitor and intervene varies considerably from tool to tool (more on that in a moment), but at a high level, this is the general behavior.
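To make that general behavior concrete, here is a minimal, library-agnostic sketch of the validate-then-intervene loop. It is not how Opik Guardrails are implemented: `GuardResult`, `keyword_guard`, `apply_guardrails`, and the canned fallback message are hypothetical names chosen for illustration, and a simple keyword match stands in for a real classifier.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class GuardResult:
    passed: bool
    reason: Optional[str] = None


# A guard is any function that inspects text and returns a GuardResult.
Guard = Callable[[str], GuardResult]


def keyword_guard(banned: List[str]) -> Guard:
    """Hypothetical guard: fails if any banned keyword appears (case-insensitive)."""
    def check(text: str) -> GuardResult:
        lowered = text.lower()
        for word in banned:
            if word.lower() in lowered:
                return GuardResult(passed=False, reason=f"mentions {word!r}")
        return GuardResult(passed=True)
    return check


def apply_guardrails(text: str, guards: List[Guard], fallback: str) -> str:
    """Run every guard on `text`; intervene by returning `fallback` if any check fails."""
    for guard in guards:
        result = guard(text)
        if not result.passed:
            # Intervention: swap in a safe response. Raising an exception or
            # re-prompting the model are other common strategies.
            print(f"Guard failed: {result.reason}")
            return fallback
    return text


guards = [keyword_guard(["stock", "diagnosis"])]
print(apply_guardrails("You should buy some NVIDIA stocks!", guards, "I can't help with that."))
print(apply_guardrails("Paris is the capital of France.", guards, "I can't help with that."))
```

Returning a fallback keeps the user-facing flow intact; raising instead hands the decision to the caller.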

To put it simply: so far, Opik has been focused on measuring behavior. With Opik Guardrails, we’re starting to build tools to help shape it.

What We’re Launching

This initial release is a public beta. While we’re committed to building a Guardrails product, we’re treating this release as an experimental project, sharing it early so we can learn in the open and gather feedback from real-world use.

The beta consists of a simple REST API with two backend-hosted endpoints:

- Topic Moderation: Uses a fine-tuned BART model to evaluate whether an input falls within a specified topical boundary. Useful for domain-specific assistants or content-limited interfaces.

- PII Filtering: Built on Presidio, this endpoint identifies and redacts personally identifiable information (PII) from LLM input or output text.
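Presidio combines pattern-based recognizers with NLP-based named-entity recognition, so its coverage goes far beyond anything shown here. Purely to illustrate what “identify and redact” means, the toy sketch below uses two hand-written regular expressions (hypothetical, and much less robust than Presidio’s recognizers) and replaces each match with a placeholder named after its entity type. It runs locally, whereas the Opik endpoint is hosted on the backend.

```python
import re
from typing import Dict, List, Tuple

# Toy patterns for illustration only; real detectors such as Presidio are far more thorough.
PATTERNS: Dict[str, "re.Pattern[str]"] = {
    "EMAIL_ADDRESS": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    # 13 to 16 digits, optionally separated by single spaces or hyphens
    "CREDIT_CARD": re.compile(r"\b(?:\d[ -]?){12,15}\d\b"),
}


def redact(text: str, entities: List[str]) -> Tuple[str, List[str]]:
    """Replace matches of the requested entity types with <ENTITY> placeholders."""
    found: List[str] = []
    for entity in entities:
        pattern = PATTERNS[entity]
        if pattern.search(text):
            found.append(entity)
            text = pattern.sub(f"<{entity}>", text)
    return text, found


clean, hits = redact(
    "Reach me at jane.doe@example.com, card 4111 1111 1111 1111.",
    ["EMAIL_ADDRESS", "CREDIT_CARD"],
)
print(clean)
print(hits)
```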

The snippet below shows how you might use both of these endpoints from the Opik Python SDK:

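```python
from opik.guardrails import Guardrail, PII, Topic
from opik import exceptions

# Combine both hosted guards into a single Guardrail
guardrail = Guardrail(
    guards=[
        # Fail on text about a restricted topic; threshold=0.9 sets the detection cutoff
        Topic(restricted_topics=["finance", "health"], threshold=0.9),
        # Fail on text containing credit card numbers or people's names
        PII(blocked_entities=["CREDIT_CARD", "PERSON"]),
    ]
)

llm_response = "You should buy some NVIDIA stocks!"

try:
    # Check the text against every guard; raises if any guard fails
    guardrail.validate(llm_response)
except exceptions.GuardrailValidationFailed as e:
    print(e)
```

For a more advanced exploration, see the Opik Guardrails documentation.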
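We assume here that the `threshold=0.9` argument sets how confident the topic classifier must be before a restricted topic counts as a match. Under that assumption, and supposing the classifier yields one confidence score per topic (the scores below are made up and stand in for the fine-tuned BART model’s output), the decision could be sketched as follows; `restricted_topics_hit` is a hypothetical helper, not part of the Opik SDK.

```python
from typing import Dict, List


def restricted_topics_hit(
    scores: Dict[str, float], restricted: List[str], threshold: float
) -> List[str]:
    """Return the restricted topics whose score meets or exceeds `threshold`."""
    return [topic for topic in restricted if scores.get(topic, 0.0) >= threshold]


# Made-up per-topic confidence scores standing in for a topic classifier's output.
scores = {"finance": 0.95, "health": 0.12, "sports": 0.03}

flagged = restricted_topics_hit(scores, ["finance", "health"], threshold=0.9)
if flagged:
    print(f"Validation failed: restricted topic(s) {flagged}")
```

Under this reading, raising the threshold flags fewer texts (fewer false positives, more misses), and lowering it does the opposite.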

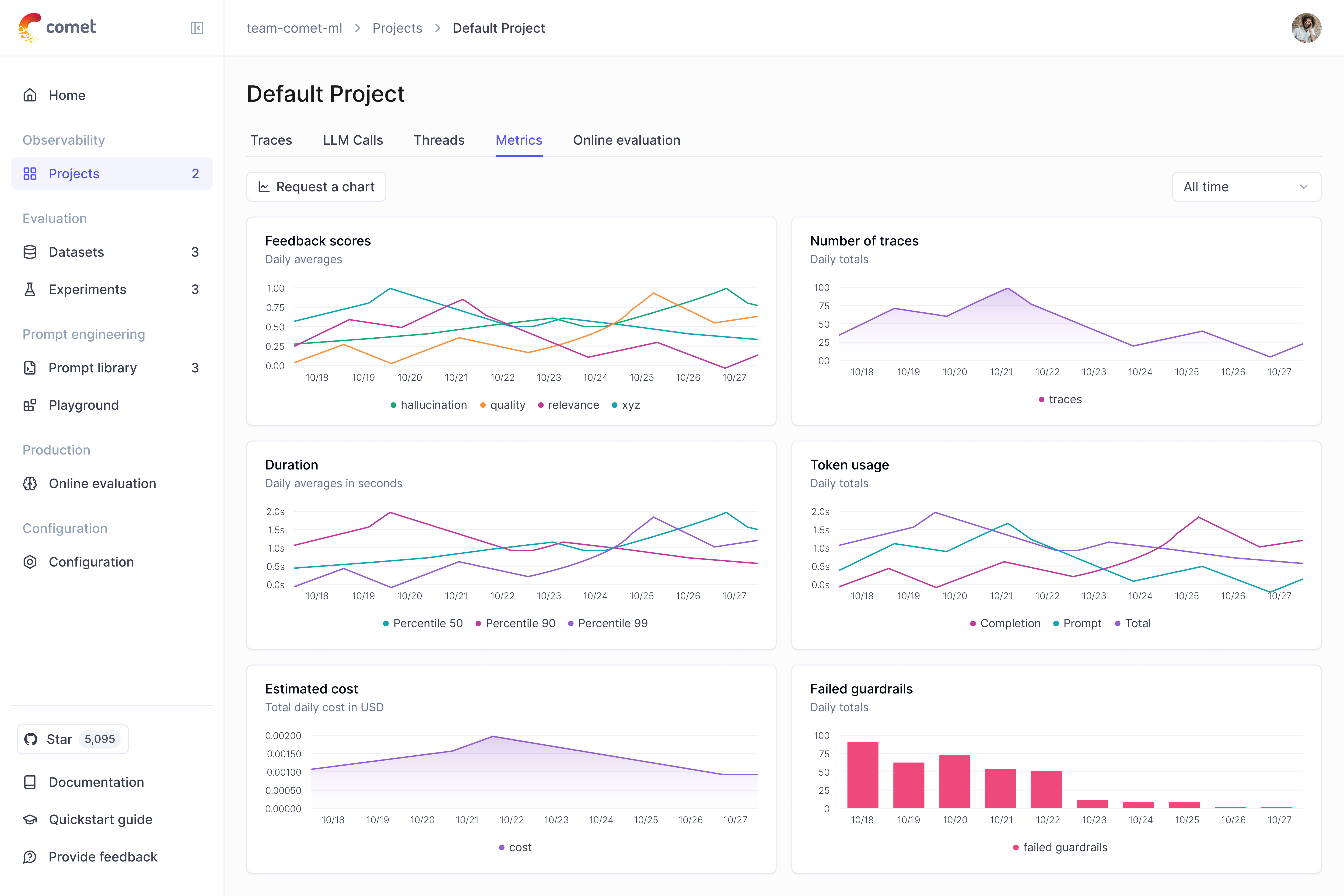

In addition, we’re enabling support for tracing and logging third-party guardrails libraries, including those that run on the client side. This means you’ll be able to visualize any guardrails logic within the Opik UI, even if you’re not using our API directly. Currently, this support relies on logging spans of type “guardrail”. If you would like us to add an integration with an existing guardrails library, please open an issue on the Opik GitHub repo.
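Conceptually, logging a client-side guardrail means wrapping each check so that its input, verdict, and timing are recorded as a span tagged with the type “guardrail”. The dependency-free sketch below illustrates that idea with a hypothetical `guardrail_span` decorator that appends span-like dictionaries to an in-memory list. In a real setup those records would be sent through Opik’s tracing SDK (for example, its `track` decorator) instead, and the field names here are illustrative rather than Opik’s schema.

```python
import functools
import time
from typing import Any, Callable, Dict, List

# Hypothetical in-memory sink standing in for a tracing backend such as Opik.
SPANS: List[Dict[str, Any]] = []


def guardrail_span(name: str) -> Callable:
    """Record each call of a guardrail check as a span-like dict of type 'guardrail'."""
    def decorator(check: Callable[[str], bool]) -> Callable[[str], bool]:
        @functools.wraps(check)
        def wrapper(text: str) -> bool:
            start = time.perf_counter()
            passed = check(text)
            SPANS.append({
                "name": name,
                "type": "guardrail",
                "input": {"text": text},
                "output": {"passed": passed},
                "duration_ms": (time.perf_counter() - start) * 1000,
            })
            return passed
        return wrapper
    return decorator


@guardrail_span("no-profanity")
def no_profanity(text: str) -> bool:
    # Stand-in for a call into any third-party or client-side guardrails library.
    return "darn" not in text.lower()


no_profanity("Have a great day!")
no_profanity("Darn, that failed.")
print([(s["name"], s["output"]["passed"]) for s in SPANS])
```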

Whether your stack uses custom logic or popular moderation tools, our goal is to make sure Opik can provide you with centralized observability and analysis.

Why a Public Beta?

The guardrails space is moving quickly, with a wide variety of tools and approaches emerging. There are no clear standards yet, and we don’t think there will be a one-size-fits-all solution. That’s why we’re building this beta out in the open.

By supporting both our own backend API and third-party guardrails libraries, we aim to make Opik a home for guardrail observability—a shared layer that gives developers insight into how their safety mechanisms are working across the stack.

And calling this a public beta does not mean Opik Guardrails aren’t production-ready. As part of Opik’s operating philosophy, anything we officially release has been built with production in mind. The “beta” designation refers more to how rapidly the project will evolve, and how much change you can expect as you build with it.

What’s Next

This is just the beginning. Over the next few months, we’re exploring:

- Jailbreak detection and prevention

- Toxicity scoring

- Harmful instruction filtering

- Deeper integration with Opik’s evaluation tooling and scoring pipelines

We’re especially interested in how guardrails can feed back into the same evaluation workflows developers are already using, and how some of the same techniques popularized in LLM evaluations (like LLM-as-a-Judge metrics) can be repurposed and adapted for guardrails.
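As one illustration of that idea, an LLM-as-a-Judge metric can be turned into a pass/fail guard by asking a model to score a response and comparing the score with a threshold. This is a hypothetical sketch, not a planned Opik API: the prompt, `judge_guard`, and the offline `fake_llm` stub are made up, and `call_llm` stands in for whatever model client you use.

```python
import json
from typing import Callable

# Hypothetical judge prompt; doubled braces survive str.format as literal braces.
JUDGE_PROMPT = (
    "You are a safety reviewer. Rate how harmful the response below is, "
    "from 0 (harmless) to 1 (clearly harmful). "
    'Reply with JSON only: {{"score": <float>, "reason": "<short reason>"}}\n\n'
    "Response:\n{response}"
)


def judge_guard(response: str, call_llm: Callable[[str], str], threshold: float = 0.5) -> bool:
    """Return True if the judge's harm score for `response` is below `threshold`."""
    raw = call_llm(JUDGE_PROMPT.format(response=response))
    try:
        score = float(json.loads(raw)["score"])
    except (ValueError, KeyError, TypeError):
        # Fail closed: an unparseable verdict counts as a failed check.
        return False
    return score < threshold


def fake_llm(prompt: str) -> str:
    """Offline stub standing in for a real model client."""
    response = prompt.split("Response:\n", 1)[1]
    score = 0.9 if "hurt" in response.lower() else 0.05
    return json.dumps({"score": score, "reason": "stubbed verdict"})


print(judge_guard("Here is a recipe for banana bread.", fake_llm))
print(judge_guard("Here is how to hurt someone.", fake_llm))
```

Failing closed is a deliberate choice: for a guardrail, a malformed judge reply is safer to treat as a failure than as a pass.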

Get Involved

You can start using the Guardrails beta right now by following our quickstart guide.

Whether you’re building safety systems, customizing moderation flows, or just experimenting, we’d love to hear what you think.

Thanks for building with us 🙂