Hyperparameter Tuning for Optimizing ML Performance

Hyperparameter tuning is a key step in optimizing your machine learning model’s performance. Learn what it is and how to do it here!

The art of enhancing machine learning model performance through beginner-friendly hyperparameter tuning techniques

Table of Contents:

- Introduction

- Why Hyperparameter Tuning Matters

- Steps to Perform Hyperparameter Tuning

- Influence of Hyperparameters on Models

- Real-World Example: Customer Churn Prediction

- Automating Hyperparameter Tuning with Comet ML

- Conclusion

💡I write about Machine Learning on Medium || Github || Kaggle || Linkedin. 🔔 Follow “Nhi Yen” for future updates!

Introduction

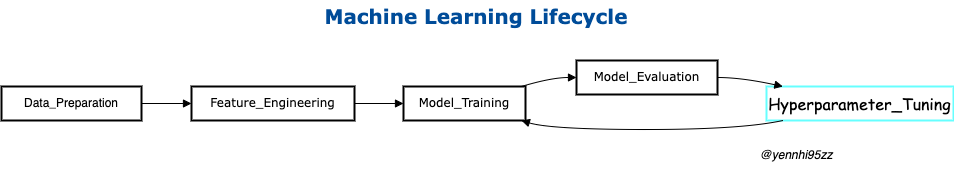

In the world of machine learning, where algorithms learn from data to make predictions, it’s important to get the best out of our models. But, how do we ensure that our models perform at their best? This is where hyperparameter tuning comes in. In this article, we will explore how to tune hyperparameters, making complex ideas easy to understand, especially for those just starting out in machine learning.

1. Why Hyperparameter Tuning Matters

Imagine that you are baking a cake and need to decide the baking temperature and time. Similarly, in machine learning, hyperparameters are the settings we choose before training a model. These settings significantly influence how the model learns and makes predictions. Choosing the right hyperparameters can turn an underperforming model into a superstar. This is why hyperparameter tuning is important: it is the process of finding the combination of settings that maximizes model performance.

2. Steps to Perform Hyperparameter Tuning

- Select Hyperparameters to Tune: Different algorithms have different hyperparameters. Determining the correct ones for the chosen algorithm is the first step.

- Choose a Search Space: This is the range of values each hyperparameter can take. The larger the search space, the more options the search will consider.

- Optimization Techniques: Several techniques are available, each with its own approach:

- Manual Search: Manually try different hyperparameter values. Simple, but time consuming.

- Random Search: Randomly sample combinations from the search space. Efficient, but may miss optimal values.

- Grid Search: Systematically explore all possible combinations. Complete, but computationally expensive.

- Bayesian Optimization: Use the results of previous evaluations to make informed decisions about where to look next. Efficient and effective.

- Genetic Algorithms: Inspired by natural selection, better sets of hyperparameters evolve over generations.

- Evaluate Performance: For each set of hyperparameters, measure the model’s performance on the validation dataset using metrics such as accuracy, precision, or recall.

- Select Best Hyperparameters: Choose the set of hyperparameters that leads to the best model performance.
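As a rough sketch of how these steps fit together, the example below runs a grid search and a random search over the same hyperparameter with scikit-learn. The synthetic dataset, the logistic regression model, and the search ranges are all assumptions chosen for illustration, not recommendations.

```python
from scipy.stats import loguniform
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score, precision_score, recall_score
from sklearn.model_selection import GridSearchCV, RandomizedSearchCV, train_test_split

# Synthetic stand-in data (an assumption; swap in your own X and y)
X, y = make_classification(n_samples=500, n_features=10, random_state=0)
X_train, X_val, y_train, y_val = train_test_split(X, y, test_size=0.25, random_state=0)

# Select the hyperparameter to tune (C) and choose its search space
grid_space = {"C": [0.01, 0.1, 1.0, 10.0, 100.0]}   # fixed list of candidates
random_space = {"C": loguniform(1e-3, 1e3)}          # distribution to sample from

# Two optimization techniques applied to the same model
grid = GridSearchCV(LogisticRegression(max_iter=1000), grid_space, cv=5)
rand = RandomizedSearchCV(
    LogisticRegression(max_iter=1000), random_space, n_iter=10, cv=5, random_state=0
)
grid.fit(X_train, y_train)
rand.fit(X_train, y_train)

# Evaluate the best setting found by each search on held-out validation data
for name, search in [("grid", grid), ("random", rand)]:
    pred = search.predict(X_val)
    print(
        name,
        search.best_params_,
        "accuracy=%.3f" % accuracy_score(y_val, pred),
        "precision=%.3f" % precision_score(y_val, pred),
        "recall=%.3f" % recall_score(y_val, pred),
    )
```

Grid search tries every listed value, while random search draws a fixed number of candidates (`n_iter`) from the distribution, so its cost stays the same however wide the range is.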


3. Influence of Hyperparameters on Models

Imagine a symphony orchestra tuning their instruments before a performance. Just as tuning each instrument affects the overall harmony, hyperparameters play a similar role in fine-tuning a machine learning model. Just as an out-of-tune violin can spoil the sound, poorly chosen hyperparameters can keep a model from performing well.

Let’s take a closer look at some essential hyperparameters and their influence on shaping the behavior of the model.

3.1. Train-Test Split

Before diving into the hyperparameters specific to machine learning algorithms, it’s important to discuss the first step: the train-test split. This is not a hyperparameter in the traditional sense, but it affects how the model is trained and evaluated. When we train a model, we need one portion of the data to fit it and another to test its performance. The train_test_split function splits our dataset into these two parts.

For example, using the train_test_split function, we can allocate 60% of our data for training and 40% for testing. The random_state parameter serves as the seed for the random number generator that shuffles the data, so the same split is produced on every run. Without it, each run evaluates the model on a different subset, which makes results hard to compare and reproduce.

from sklearn.model_selection import train_test_split

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.4, random_state=0)
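To see the effect of random_state in isolation, here is a minimal sketch on a made-up array of ten rows (the data is purely illustrative). Splitting twice with the same seed gives identical test sets.

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Ten toy samples with two features each (illustrative data only)
X = np.arange(20).reshape(10, 2)
y = np.arange(10)

# Split twice with the same seed
a_train, a_test, _, _ = train_test_split(X, y, test_size=0.4, random_state=0)
b_train, b_test, _, _ = train_test_split(X, y, test_size=0.4, random_state=0)

print(len(a_train), len(a_test))        # 6 4
print(np.array_equal(a_test, b_test))   # True
```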

3.2. Logistic Regression Classifier

When it comes to classification, a common go-to is the Logistic Regression classifier. It has a hyperparameter called C, which is the inverse of the regularization strength, λ (the Greek letter “lambda”).

Think of it as a car’s gas pedal and brake. Increasing C is like pushing the gas pedal harder while easing up on the brake: regularization gets weaker, so the model is free to fit the training data more closely. If you crank C up too much, the model may memorize the training data (overfitting); if you keep C too low, it may fail to capture the data’s patterns (underfitting). Finding the right C is like finding the sweet spot between driving fast and driving safe.

Mathematically: C = 1/λ

from sklearn.linear_model import LogisticRegression

logreg = LogisticRegression(C=1000.0, random_state=0)
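One way to watch C at work is to compare the size of the learned coefficients for a few values. The sketch below assumes a synthetic dataset and scikit-learn’s default L2 regularization; the specific C values are arbitrary. Smaller C means stronger regularization, which pulls the coefficients toward zero.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression

# Synthetic data used only for illustration
X, y = make_classification(n_samples=300, n_features=10, random_state=0)

norms = {}
for C in [0.01, 1.0, 100.0]:
    clf = LogisticRegression(C=C, max_iter=5000).fit(X, y)
    # The L2 norm of the coefficient vector shrinks as regularization gets stronger
    norms[C] = float(np.linalg.norm(clf.coef_))
    print(f"C={C}: coefficient norm = {norms[C]:.3f}")
```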

3.3. K-Nearest Neighbors (KNN) Classifier

The KNN algorithm relies on selecting the right number of neighbors and a power parameter p. The n_neighbors parameter determines how many data points are considered for making predictions. Additionally, the p parameter influences the distance metric used for calculating the neighbors. When p = 1, the Manhattan distance is used, while p = 2 corresponds to the Euclidean distance.

Mathematically:

- For p = 1: Manhattan Distance

- For p = 2: Euclidean Distance

from sklearn.neighbors import KNeighborsClassifier

knn = KNeighborsClassifier(n_neighbors=5, p=2, metric='minkowski')
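Both distances are special cases of the Minkowski distance, d(a, b) = (Σ |a_i − b_i|^p)^(1/p). The helper below is a hand-written sketch of that formula for illustration (minkowski_distance is not a scikit-learn function), applied to two made-up points.

```python
import numpy as np

def minkowski_distance(a, b, p):
    """Minkowski distance between two points: (sum |a_i - b_i|^p)^(1/p)."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    return float(np.sum(np.abs(a - b) ** p) ** (1.0 / p))

print(minkowski_distance([0, 0], [3, 4], p=1))  # 7.0 (Manhattan: 3 + 4)
print(minkowski_distance([0, 0], [3, 4], p=2))  # 5.0 (Euclidean: sqrt(9 + 16))
```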

These are just a few examples of how hyperparameters can shape the behavior of a machine learning model. Each parameter acts as a tuning knob, allowing you to fine-tune the model’s performance for your particular problem. As you explore different algorithms, remember that understanding these hyperparameters is like understanding the keys of a musical piece: each key contributes to the overall masterpiece.

4. Real-World Example: Customer Churn Prediction

Now, let’s put these ideas into practice with a real-life situation: predicting customer churn, which occurs when a customer stops using a service. Imagine a company that wants to keep its customers happy and engaged. We will be working with a Kaggle dataset called the “Telco Customer Churn” dataset. This data set is like a puzzle filled with information about customers and whether they left or stayed.

With the power of hyperparameter tuning, we can create a smart model that is really good at telling us which customers are likely to walk away. It’s like having a crystal ball for customer behavior! By using the right hyperparameters, we can tune this crystal ball to be super precise. This helps businesses take action and keep their valuable customers happy and loyal.

Although we won’t show the full code here, you can envision writing a few lines of Python to read the dataset, split it into training and testing sets, and then tune the hyperparameters to create this super smart crystal ball. The code could look something like this:

# Import the necessary libraries

import pandas as pd

from sklearn.model_selection import train_test_split

from sklearn.model_selection import GridSearchCV

from sklearn.ensemble import RandomForestClassifier

# Load the dataset

data = pd.read_csv("telco_churn_dataset.csv")

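# Split the data into features (X) and target (y)

# Random forests need numeric inputs, so drop the ID column and one-hot encode the text columns

# (assumes the Kaggle column name "customerID"; adjust for your copy of the data)

X = pd.get_dummies(data.drop(columns=["Churn", "customerID"], errors="ignore"))

y = data["Churn"]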

# Split data into training and testing sets

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# Set up hyperparameter options for tuning

param_grid = {

    "n_estimators": [50, 100, 200],

    "max_depth": [None, 10, 20],

    "min_samples_split": [2, 5, 10],

    "min_samples_leaf": [1, 2, 4]

}

# Create a model with hyperparameter tuning

model = GridSearchCV(RandomForestClassifier(random_state=42), param_grid, cv=5)

model.fit(X_train, y_train)

# Evaluate the model

accuracy = model.score(X_test, y_test)

print("Model accuracy:", accuracy)

Remember, this is just a simple example, and the actual code can become more complex depending on the dataset and algorithm you use. But it gives you an idea of how to tune hyperparameters: it’s like having a wizard adjust your model’s settings to make it perform as well as possible!
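Real churn data mixes text and numeric columns, so one common pattern is to put the encoding step and the model into a single pipeline and tune that. The sketch below uses a tiny made-up DataFrame; its column names and values are hypothetical stand-ins, not the actual Telco data, and the grid is kept small so it runs quickly.

```python
import pandas as pd
from sklearn.compose import ColumnTransformer
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import GridSearchCV
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import OneHotEncoder

# Tiny made-up frame standing in for the churn data (all values are hypothetical)
data = pd.DataFrame({
    "Contract": ["Month-to-month", "One year", "Two year", "Month-to-month"] * 10,
    "tenure": list(range(1, 41)),
    "Churn": ["Yes", "No", "No", "Yes"] * 10,
})
X = data.drop(columns=["Churn"])
y = data["Churn"]

# One-hot encode the text column and pass the numeric column through unchanged
preprocess = ColumnTransformer(
    [("onehot", OneHotEncoder(handle_unknown="ignore"), ["Contract"])],
    remainder="passthrough",
)
pipeline = Pipeline([
    ("preprocess", preprocess),
    ("forest", RandomForestClassifier(random_state=42)),
])

# Pipeline hyperparameters are addressed as <step name>__<parameter name>
param_grid = {
    "forest__n_estimators": [10, 50],
    "forest__max_depth": [None, 3],
}
search = GridSearchCV(pipeline, param_grid, cv=5)
search.fit(X, y)

print("Best hyperparameters:", search.best_params_)
print("Best cross-validated accuracy:", search.best_score_)
```

Because the encoder sits inside the pipeline, it is refit on each cross-validation training fold, which keeps information from the validation fold out of the preprocessing.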

5. Automating Hyperparameter Tuning with Comet ML

To streamline the hyperparameter tuning process, tools like Comet ML come into play. Comet ML provides a platform for experiment tracking and hyperparameter optimization. By using Comet ML, you can automate the process of testing different hyperparameters and monitor their impact on model performance, saving time and effort.

Here’s a step-by-step guide to automating hyperparameter tuning with Comet ML and Optuna:

Step 1: Create a Comet ML Account

First, you need to create an account on the Comet ML platform. Once registered, you’ll obtain an API key, which you’ll use to authenticate your Python scripts and log experiments to your Comet project.

Step 2: Install Required Libraries

Ensure you have the necessary libraries installed. You’ll need Optuna for hyperparameter optimization and Comet ML for experiment tracking. You can install them using pip:

pip install optuna comet_ml

Step 3: Initialize Comet ML and Optuna

Initialize Comet ML by providing your API key and project name. This allows you to track and visualize the results of your experiments on the Comet ML platform. Import Optuna alongside it; the study object itself is created in Step 5, just before the optimization starts.

import optuna

import comet_ml

# Set your Comet.ml API key and project name

comet_api_key = 'YOUR_API_KEY'

comet_project_name = 'YOUR_PROJECT_NAME'

# Initialize Comet.ml

comet_experiment = comet_ml.Experiment(api_key=comet_api_key, project_name=comet_project_name)

Step 4: Define the Objective Function

In your Python script, define the objective function that represents the machine learning experiment you want to optimize. Within this function, you specify the hyperparameters you want to tune and your model training logic. Here’s an example of an objective function:

def objective(trial):

    # Define hyperparameters to optimize

    # (suggest_float with log=True replaces the deprecated suggest_loguniform)

    learning_rate = trial.suggest_float('learning_rate', 1e-5, 1e-1, log=True)

    batch_size = trial.suggest_categorical('batch_size', [16, 32, 64])

    num_hidden_units = trial.suggest_int('num_hidden_units', 16, 256)

    # Create your machine learning model and training code

    # (create_model and train_model are placeholders for your own functions)

    model = create_model(learning_rate, batch_size, num_hidden_units)

    loss, accuracy = train_model(model)

    # Log metrics to Comet ML, using the trial number as the step

    comet_experiment.log_metric('loss', loss, step=trial.number)

    comet_experiment.log_metric('accuracy', accuracy, step=trial.number)

    # Return the metric to optimize (e.g., minimize loss or maximize accuracy)

    return loss
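The learning rate above is sampled on a log scale rather than a linear one. The NumPy sketch below (independent of Optuna, with an arbitrary seed and sample size) shows why that matters for a range spanning several orders of magnitude: a plain uniform draw almost never lands on the small values, while a log-uniform draw spreads evenly across each power of ten.

```python
import numpy as np

rng = np.random.default_rng(0)
low, high = 1e-5, 1e-1  # same range as the learning rate in the objective function

# Plain uniform sampling over [low, high)
uniform_draws = rng.uniform(low, high, size=10_000)

# Log-uniform sampling: draw uniformly in log space, then exponentiate
log_draws = np.exp(rng.uniform(np.log(low), np.log(high), size=10_000))

share_uniform = float(np.mean(uniform_draws < 1e-3))
share_log = float(np.mean(log_draws < 1e-3))

print("Share of draws below 1e-3 (uniform):    ", share_uniform)
print("Share of draws below 1e-3 (log-uniform):", share_log)
```

With uniform sampling only about 1% of draws fall below 1e-3, whereas with log-uniform sampling roughly half do, because 1e-3 sits halfway between 1e-5 and 1e-1 in log space.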

Step 5: Start the Optimization Process

Invoke the study.optimize method to start the hyperparameter optimization process. This method runs a specified number of trials (e.g., 50) and searches for the best hyperparameters that minimize or maximize the objective function, depending on the optimization direction chosen.

# Create an Optuna study object

study = optuna.create_study(direction='minimize')

# Start the optimization process

study.optimize(objective, n_trials=50)

Step 6: Monitor and Visualize Results

As the optimization process runs, the log_metric calls in the objective function send each trial’s loss and accuracy to Comet ML. You can monitor the progress of your hyperparameter tuning experiments in real time through the Comet ML dashboard, which provides visualizations and insights into how different hyperparameters impact your model’s performance. Once the study finishes, you can also print the best result directly:

# Print the best hyperparameters and their corresponding loss

best_params = study.best_params

best_loss = study.best_value

print("Best Hyperparameters:", best_params)

print("Best Loss:", best_loss)

Step 7: End the Experiment

Finally, remember to end the Comet ML experiment once the hyperparameter tuning is complete. This ensures that all experiment data is logged and saved for future reference.

# End the Comet.ml experiment

comet_experiment.end()

👉 Check out “A Hands-on Project: Enhancing Customer Churn Prediction with Continuous Experiment Tracking in Machine Learning”, where I walk you through the hyperparameter tuning process step by step using Comet ML.

Conclusion

Hyperparameter tuning may seem like a complicated puzzle, but it is a puzzle worth solving. By finding the right combination of hyperparameters, you can turn a mediocre machine learning model into a powerful tool for making accurate predictions. As you begin your machine learning journey, remember that hyperparameter tuning is an essential skill in your toolkit, one that can take your models from good to great.