-

Release Highlights: Discover Opik Agent Optimizer, Guardrails, & New Integrations

As LLMs power more complex, multi-step agentic systems, the need for precise optimization and control is growing. In case you…

-

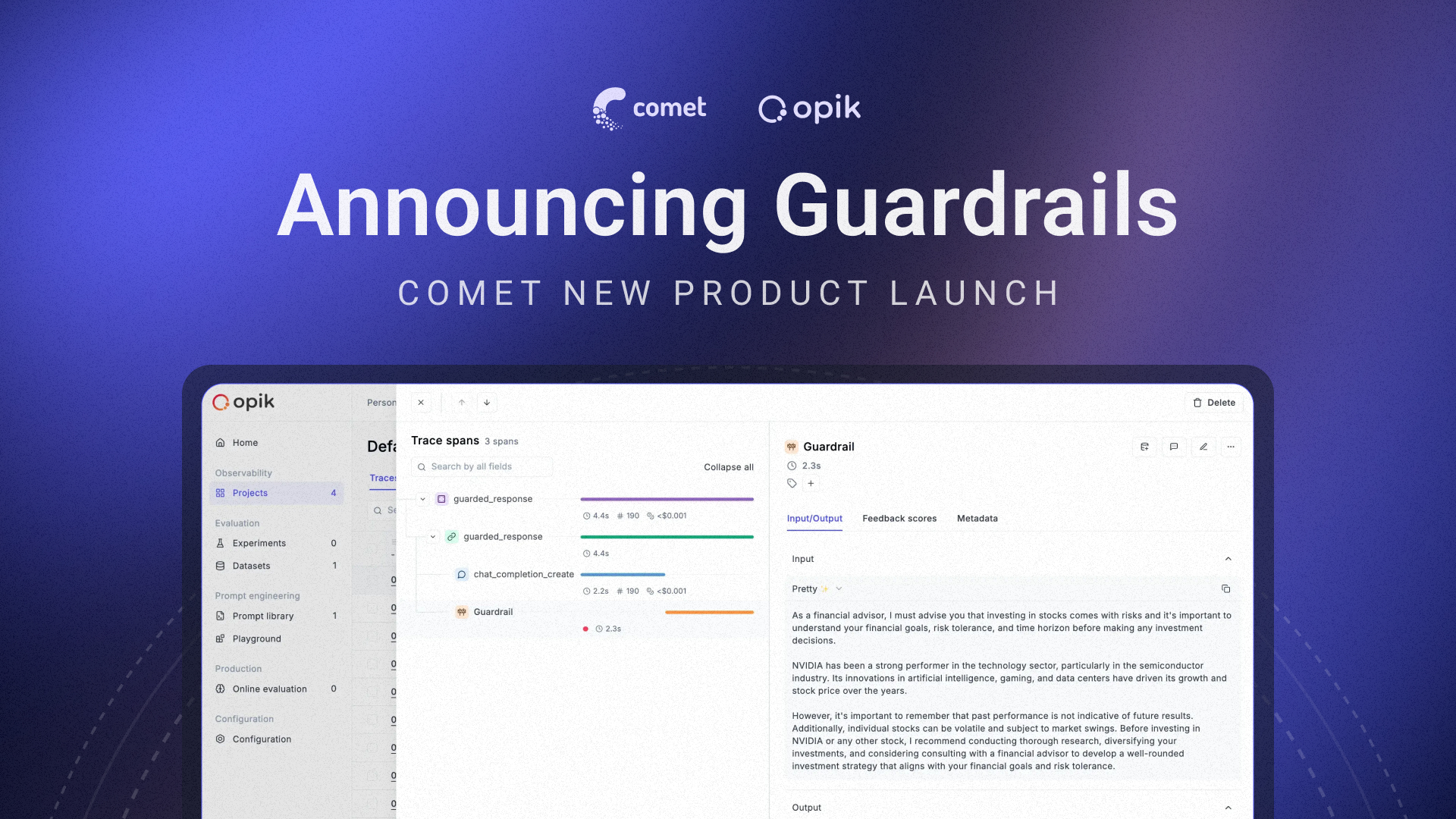

Announcing Opik’s Guardrails Beta: Moderate LLM Applications in Real-Time

We’ve spent the past year building tools that make LLM applications more transparent, measurable, and accountable. Since launching Opik, our…

-

Major Releases: MCP Server & Google Agent Dev Kit Support

We’ve just rolled out two major updates in Opik, Comet’s open-source LLM evaluation platform, that make it easier than ever…

-

How Contributing to Open Source Projects Helped Me Build My Dream Career in AI

Six years ago, I decided to open-source my Python code for a personal project I was working on, which led…

-

SelfCheckGPT for LLM Evaluation

Detecting hallucinations in language models is challenging. There are three general approaches: The problem with many LLM-as-a-Judge techniques is that…

-

LLM Hallucination Detection in App Development

Even ChatGPT knows it’s not always right. When prompted, “Are large language models (LLMs) always accurate?” ChatGPT says no and…

-

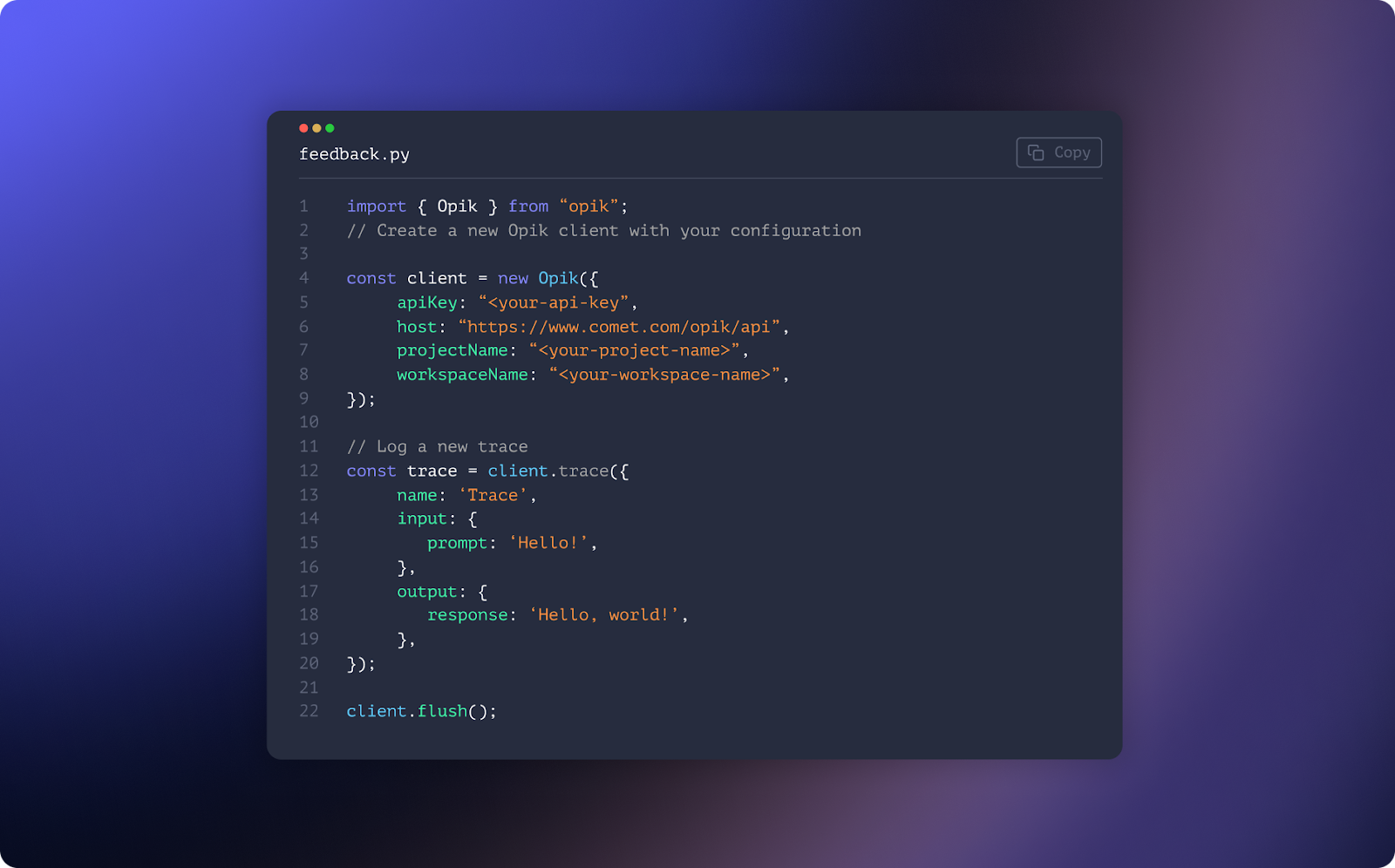

Major Releases: TypeScript for LLM Evals, Total Fidelity ML Metrics, & More

Spring is in the air, and we’re excited to bring you four fresh releases in the Comet platform to make…

-

LLM Evaluation Frameworks: Head-to-Head Comparison

As teams work on complex AI agents and expand what LLM-powered applications can achieve, a variety of LLM evaluation frameworks…

-

LLM Juries for Evaluation

Evaluating the correctness of generated responses is an inherently challenging task. LLM-as-a-Judge evaluators have gained popularity for their ability to…

Comet Blog

-

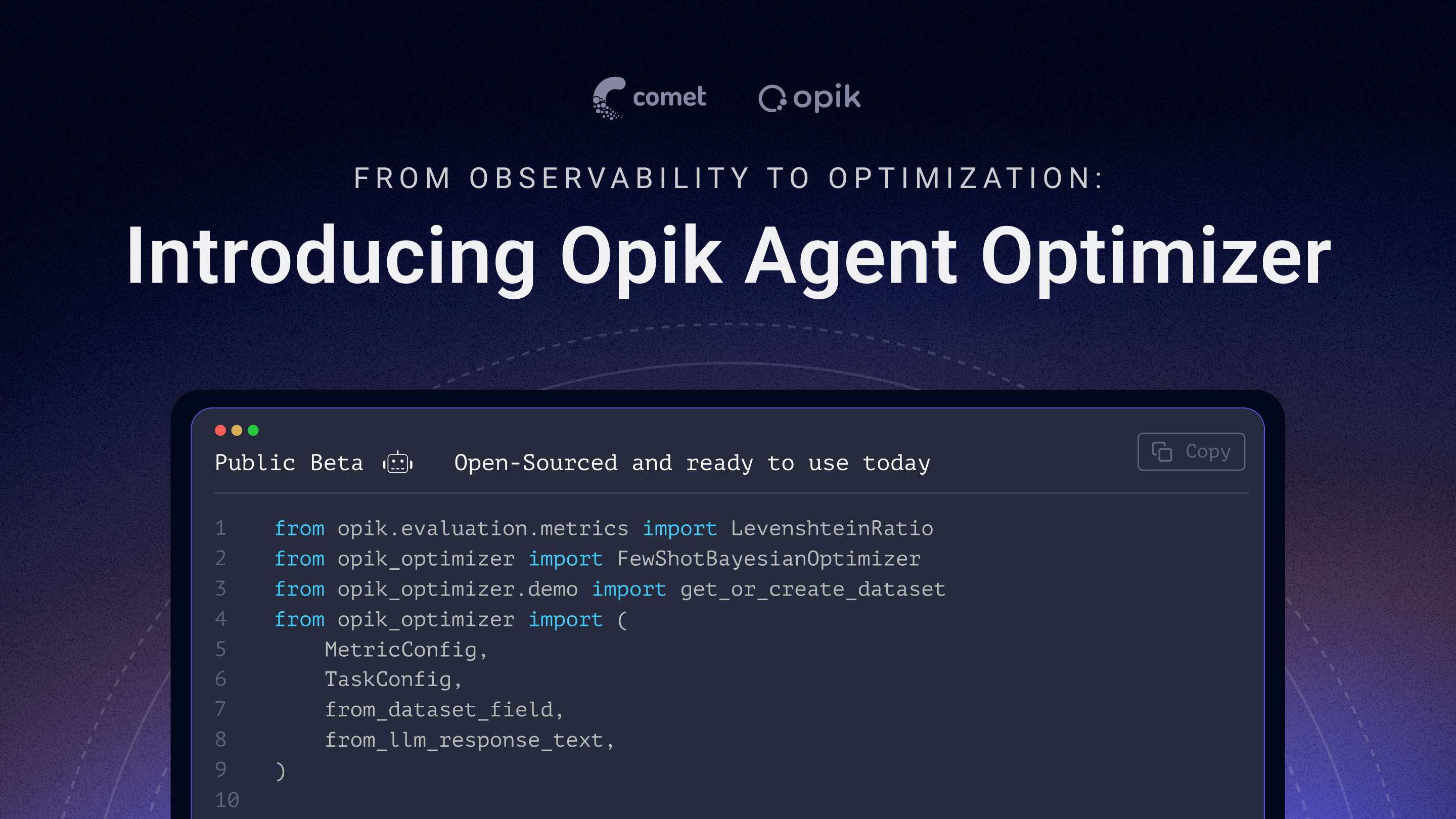

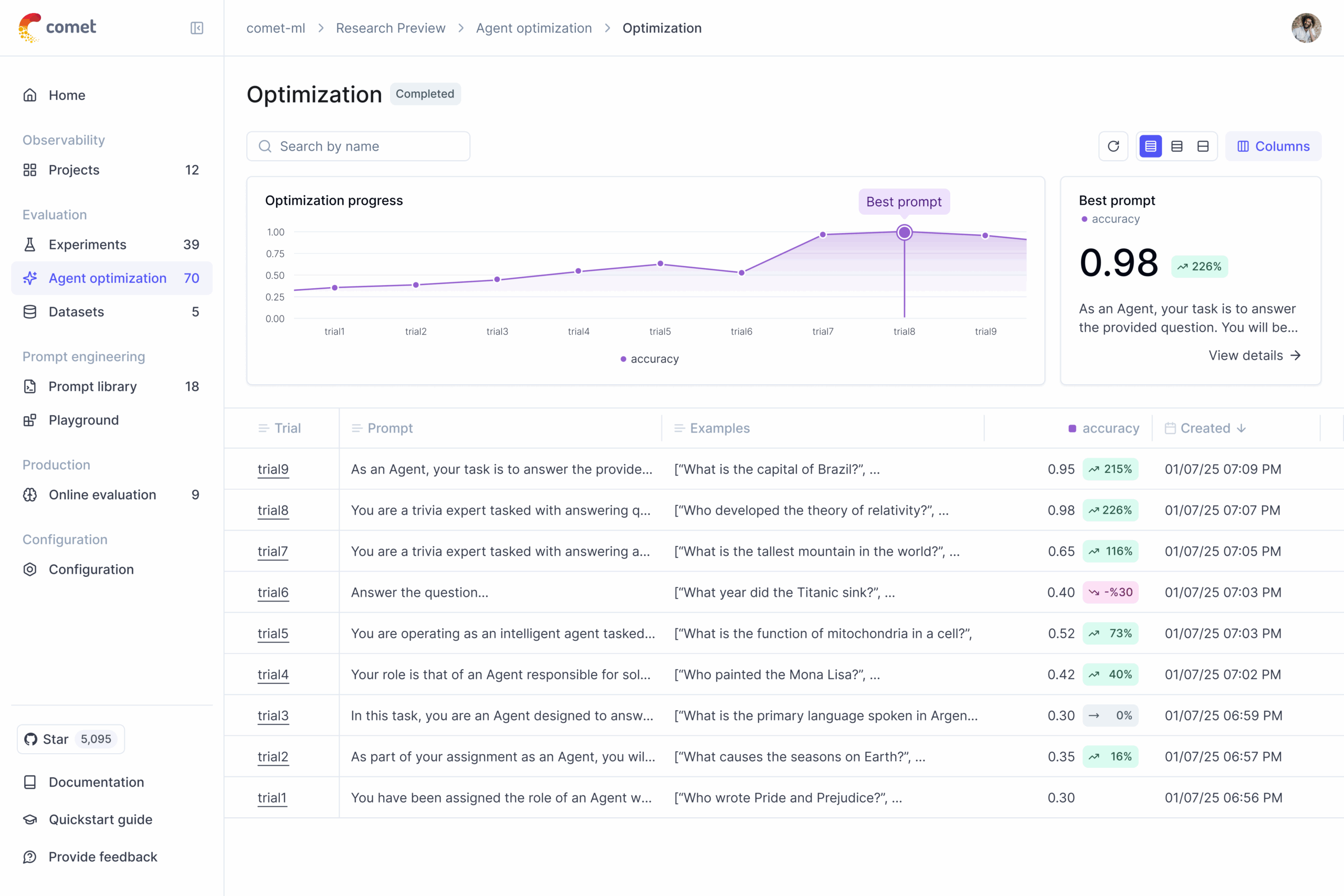

From Observability to Optimization: Announcing the Opik Agent Optimizer Public Beta

At Comet, we’re driven by a commitment to advance innovation in AI, particularly in the realm of LLM observability. Our…
