Observability for AG2 with Opik

AG2 is an open-source programming framework for building AI agents and facilitating cooperation among multiple agents to solve tasks.

AG2’s primary advantage is its multi-agent conversation patterns and autonomous workflows, which make it well suited to complex tasks that require collaboration between specialized agents with different roles and capabilities.

Getting started

To use the AG2 integration with Opik, you will need to have the following packages installed:
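As a rough guide, the integration relies on AG2 itself plus the OpenTelemetry SDK, an OTLP exporter, and an OpenAI instrumentation package. The sketch below checks whether those modules can be imported in the current environment. The module-to-package mapping (`ag2[openai]`, `opentelemetry-sdk`, `opentelemetry-exporter-otlp`, `opentelemetry-instrumentation-openai`) is an assumption based on common PyPI names, not a list confirmed by this page, and `is_importable` and `missing_packages` are hypothetical helper names.

```python
import importlib.util

# Assumed mapping of importable module -> PyPI package that provides it.
# These names are an assumption, not a list taken from the Opik documentation.
REQUIRED = {
    "autogen": "ag2[openai]",
    "opentelemetry.sdk": "opentelemetry-sdk",
    "opentelemetry.exporter.otlp": "opentelemetry-exporter-otlp",
    "opentelemetry.instrumentation.openai": "opentelemetry-instrumentation-openai",
}


def is_importable(module: str) -> bool:
    """Return True if `module` can be located without fully importing it."""
    try:
        return importlib.util.find_spec(module) is not None
    except ModuleNotFoundError:
        # Raised when a parent package of a dotted name is not installed.
        return False


def missing_packages(required=REQUIRED) -> list:
    """List the PyPI packages whose modules were not found."""
    return [pkg for mod, pkg in required.items() if not is_importable(mod)]
```

Calling `missing_packages()` returns the packages that still need to be installed, which can then be passed to `pip install -U`.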

In addition, you will need to set the following environment variables to configure the OpenTelemetry integration:

Opik Cloud

If you are using Opik Cloud, you will need to set the following environment variables:
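A minimal sketch of that configuration, set from Python rather than the shell. `OTEL_EXPORTER_OTLP_ENDPOINT` and `OTEL_EXPORTER_OTLP_HEADERS` are the standard OpenTelemetry exporter variables. The endpoint URL and the `Authorization` header name are assumptions that should be checked against your Opik account, and `configure_opik_cloud` is a hypothetical helper name.

```python
import os

# Assumed Opik Cloud OTLP endpoint; confirm it against your account settings.
OPIK_CLOUD_OTEL_ENDPOINT = "https://www.comet.com/opik/api/v1/private/otel"


def configure_opik_cloud(api_key: str, workspace: str = "default") -> None:
    """Point the OTLP exporter at Opik Cloud for the current process."""
    os.environ["OTEL_EXPORTER_OTLP_ENDPOINT"] = OPIK_CLOUD_OTEL_ENDPOINT
    # Headers are a comma-separated list of key=value pairs.
    os.environ["OTEL_EXPORTER_OTLP_HEADERS"] = (
        f"Authorization={api_key},Comet-Workspace={workspace}"
    )
```

The variables must be set before the OpenTelemetry exporter is created, because the exporter reads them at construction time.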

To log the traces to a specific project, you can add the projectName parameter to the OTEL_EXPORTER_OTLP_HEADERS environment variable:
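One way to build such a header string is sketched here. `add_project_name` is a hypothetical helper, and the existing header value in the usage note is a placeholder. Percent-encoding the project name assumes the exporter decodes header values, as the OpenTelemetry specification describes for this variable.

```python
from urllib.parse import quote


def add_project_name(headers: str, project_name: str) -> str:
    """Return `headers` with exactly one projectName=<name> entry appended."""
    # Drop empty entries and any previous projectName so it is not duplicated.
    pairs = [p.strip() for p in headers.split(",") if p.strip()]
    pairs = [p for p in pairs if not p.startswith("projectName=")]
    # Percent-encode so spaces or commas in the name cannot break the list.
    pairs.append(f"projectName={quote(project_name, safe='')}")
    return ",".join(pairs)
```

For example, `add_project_name("Authorization=<your-api-key>,Comet-Workspace=default", "ag2-demo")` yields a value that can be assigned to OTEL_EXPORTER_OTLP_HEADERS.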

You can also update the Comet-Workspace parameter to a different value if you would like to log the data to a different workspace.

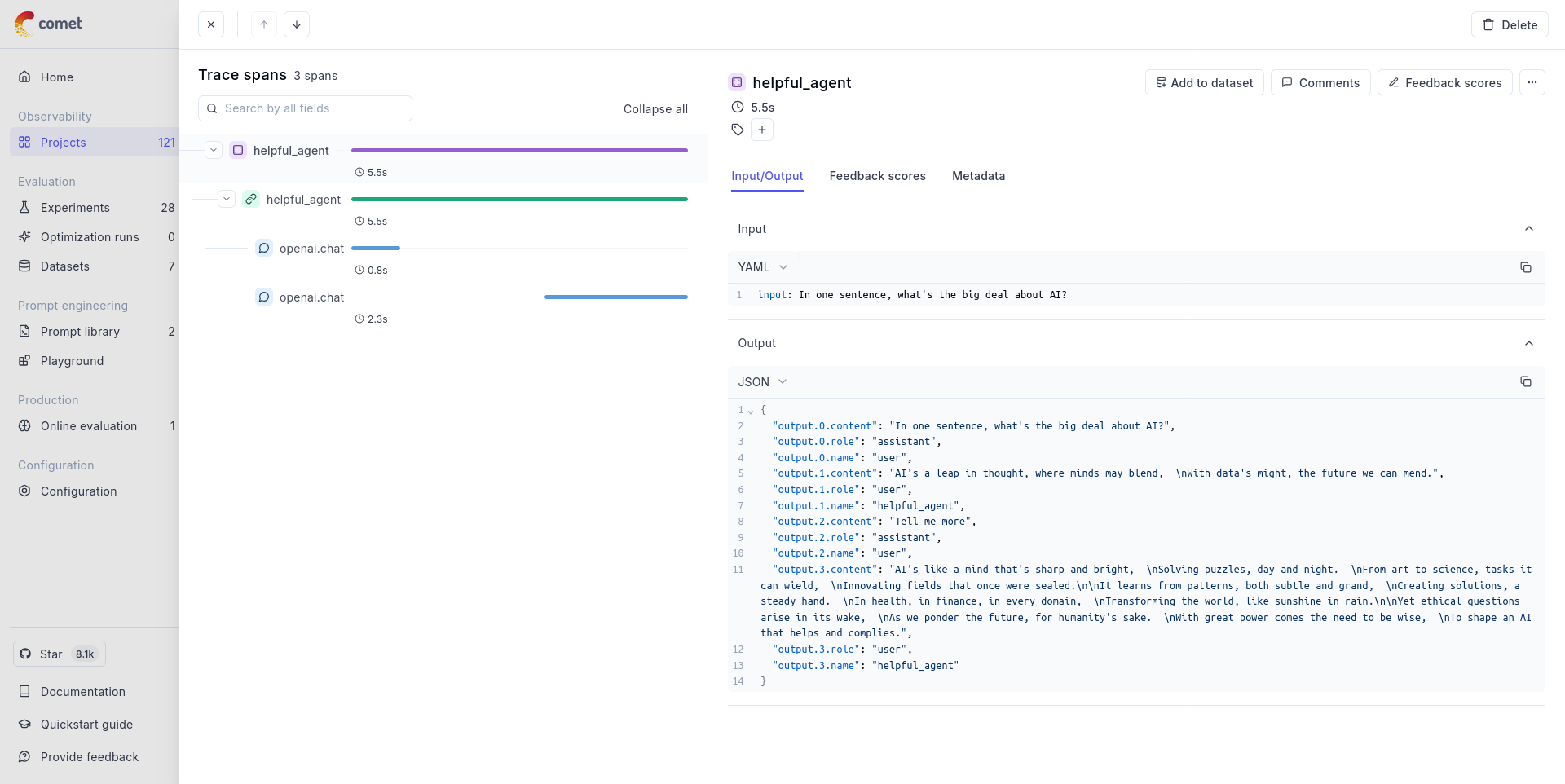

Using Opik with AG2

The example below shows how to use the AG2 integration with Opik:
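What follows is a hedged sketch rather than the official example. It assumes the `autogen` module from AG2, the OpenTelemetry SDK with the OTLP HTTP exporter, and the `opentelemetry-instrumentation-openai` package are installed, and that `OPENAI_API_KEY` and the OTEL_* variables are already set. The function names, the agent name, the model, and the prompt are illustrative choices.

```python
import os


def setup_telemetry(service_name: str = "ag2-demo") -> None:
    """Export OpenTelemetry spans to the endpoint configured via OTEL_* variables."""
    # Imported here so this file still loads where the optional packages are absent.
    from opentelemetry import trace
    from opentelemetry.exporter.otlp.proto.http.trace_exporter import OTLPSpanExporter
    from opentelemetry.instrumentation.openai import OpenAIInstrumentor
    from opentelemetry.sdk.resources import Resource
    from opentelemetry.sdk.trace import TracerProvider
    from opentelemetry.sdk.trace.export import BatchSpanProcessor

    provider = TracerProvider(resource=Resource.create({"service.name": service_name}))
    # OTLPSpanExporter() reads OTEL_EXPORTER_OTLP_ENDPOINT and OTEL_EXPORTER_OTLP_HEADERS.
    provider.add_span_processor(BatchSpanProcessor(OTLPSpanExporter()))
    trace.set_tracer_provider(provider)
    # Assumption: AG2 reaches the model through the OpenAI client, so
    # instrumenting that client captures the LLM calls as spans.
    OpenAIInstrumentor().instrument()


def build_llm_config(model: str, api_key: str) -> dict:
    """Build an AG2 llm_config dictionary with a single model entry."""
    return {"config_list": [{"model": model, "api_key": api_key}]}


def main() -> None:
    from autogen import ConversableAgent

    setup_telemetry()
    agent = ConversableAgent(
        name="assistant",
        system_message="You are a concise, helpful assistant.",
        llm_config=build_llm_config("gpt-4o-mini", os.environ["OPENAI_API_KEY"]),
        human_input_mode="NEVER",
    )
    reply = agent.generate_reply(
        messages=[{"role": "user", "content": "Say hello in one sentence."}]
    )
    print(reply)
```

Calling `main()` runs a single agent turn. If the exporter is configured correctly, the resulting spans should appear as a trace in the Opik project named in the headers.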

Further improvements

If you would like to see us improve this integration, simply open a new feature request on GitHub.