As LLM applications and agents scale, visibility and iteration speed matter more than ever. This month’s Opik updates close the loop between experimentation, evaluation, and monitoring with real-time alerts, advanced AI-powered prompt tooling, and no-code experimentation now built into the Playground. Together, these improvements bring faster feedback, deeper insight, and greater reproducibility to every stage of your workflow.

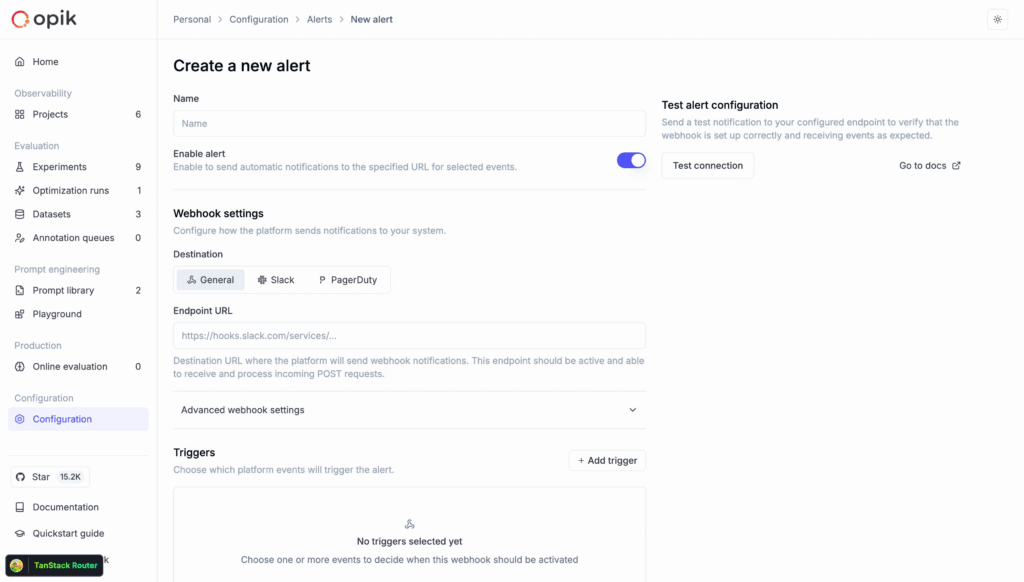

Real-Time Production Alerts

Opik now supports alerts, so you can stay informed the moment key events happen. Get real-time notifications for errors, feedback scores, and prompt changes, all delivered as structured event data to Slack, PagerDuty, or any other endpoint you choose. Use alerts to spot production issues as they happen, monitor model quality over time, audit prompt updates, and integrate notifications into your existing workflows and CI/CD pipelines.
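Because alerts arrive as structured event data, a receiving endpoint mainly needs to parse the payload and route it by event type. The minimal sketch below does that in plain Python. It assumes a hypothetical JSON body with `event_type` and `payload` fields and made-up event-type strings. Opik's actual alert schema is not described in this post, so treat every field name here as illustrative.

```python
import json

# Hypothetical event types and destinations; the real Opik alert schema may differ.
ROUTES = {
    "trace_error": "pagerduty",
    "feedback_score": "slack",
    "prompt_changed": "slack",
}


def route_alert(raw_body: str) -> tuple[str, str]:
    """Parse a JSON alert body and return (destination, one-line summary)."""
    event = json.loads(raw_body)
    event_type = event.get("event_type", "unknown")
    # Unknown event types fall back to plain logging instead of paging anyone.
    destination = ROUTES.get(event_type, "log")
    payload = event.get("payload", {})
    # Sort keys so the summary is deterministic regardless of payload order.
    details = ", ".join(f"{k}={v}" for k, v in sorted(payload.items()))
    return destination, f"[{event_type}] {details}"


if __name__ == "__main__":
    body = json.dumps({"event_type": "trace_error",
                       "payload": {"project": "demo", "error": "timeout"}})
    print(route_alert(body))
```

Keeping the routing table separate from the parsing logic lets you add or redirect event types without touching the handler itself.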

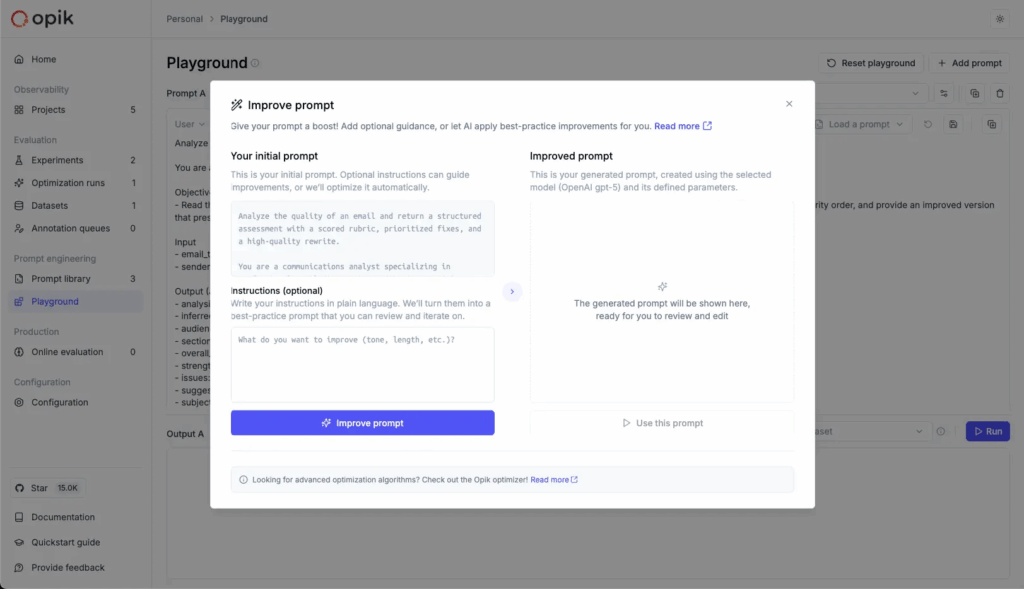

AI-Powered Prompt Generator & Improver

Two new AI-powered features are now live in Opik. The Prompt Generator and Prompt Improver apply prompt-engineering best practices from OpenAI, Anthropic, and Google, so you can design and refine prompts directly in the Playground without prior prompt-engineering expertise.

- Prompt Generator: Convert plain-language task descriptions into comprehensive system prompts that adhere to proven design principles.

- Prompt Improver: Enhance any existing prompt for greater clarity, specificity, and consistency.

Available in both the Playground and the Prompt Library, these features make prompt iteration faster, easier, and more accessible.

No-Code Experimentation in the Playground

The Prompt Playground now supports end-to-end, no-code experimentation, from setup to analysis, all in one place. You can select or create datasets directly in the Playground, choose which online scoring rules to apply during each run, and pass ground-truth answers for reference-based evaluation. Once a run completes, a single click takes you to the experiment results, where you can inspect metrics or compare runs side by side.

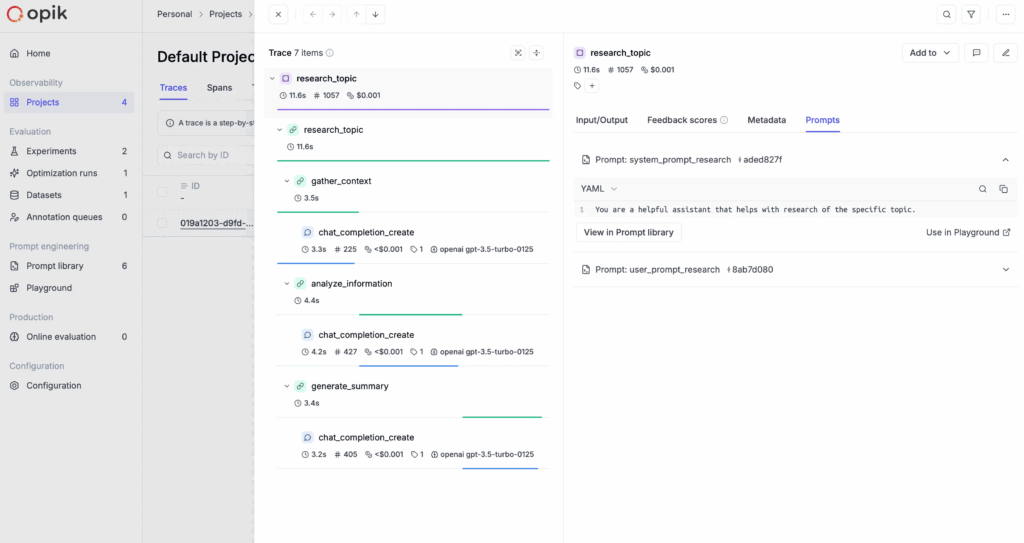

Advanced Prompt Integration in Spans & Traces

The new prompt integration across spans and traces connects the Prompt Library, traces, and the Playground. With the opik_context module, prompts can now be linked automatically to spans and functions, so you always know which prompt version produced a given result. You can audit prompts directly from traces, reproduce and improve them, and jump back to the Playground or Prompt Library in one click, giving your workflows complete prompt lineage and reproducibility.
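Conceptually, prompt lineage means every span carries a pointer to the exact prompt version it used. The sketch below models that idea in plain Python. It uses hypothetical `PromptVersion`, `Span`, and `link_prompt` names and derives a version ID from a hash of the prompt's content. It is not the opik_context API; take the real function names and parameters from the Opik SDK documentation.

```python
import hashlib
from dataclasses import dataclass, field


@dataclass(frozen=True)
class PromptVersion:
    """Hypothetical stand-in for a versioned prompt in a prompt library."""
    name: str
    template: str

    @property
    def commit(self) -> str:
        # Content hash: identical template text always yields the same version ID.
        return hashlib.sha256(self.template.encode("utf-8")).hexdigest()[:8]


@dataclass
class Span:
    """Hypothetical stand-in for a logged span with free-form metadata."""
    name: str
    metadata: dict = field(default_factory=dict)


def link_prompt(span: Span, prompt: PromptVersion) -> None:
    """Record which prompt name and version a span used (hypothetical helper)."""
    span.metadata.setdefault("prompts", []).append(
        {"name": prompt.name, "commit": prompt.commit}
    )


if __name__ == "__main__":
    prompt = PromptVersion("summarizer", "Summarize the following text:\n{text}")
    span = Span("llm_call")
    link_prompt(span, prompt)
    print(span.metadata)
```

Hashing the template content means that any edit to the prompt produces a new version ID. As a result, two spans with the same ID are guaranteed to have used identical prompt text.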

On-Demand Evaluation for Existing Traces & Threads

On-Demand Online Evaluation lets you apply scoring rules retroactively to traces and threads already logged in Opik, with no code required. Simply select traces or threads in the UI, choose an online scoring rule such as ‘contains’, ‘equals’, or ‘moderation’, and view the results inline as logged feedback scores. It’s a fast, flexible way to assess historical data, test new scoring strategies, and measure model performance over time.
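To make the ‘contains’ and ‘equals’ rules concrete, here is one plausible definition of each, applied retroactively to a batch of already-logged traces. Several details are assumptions for illustration rather than Opik's implementation: the `Trace` record, the whitespace trimming in `equals`, and the case-insensitive default in `contains`. ‘Moderation’ is omitted because it typically relies on a model-based judge rather than a string comparison.

```python
from dataclasses import dataclass


@dataclass
class Trace:
    """Hypothetical minimal view of a logged trace."""
    id: str
    output: str
    expected: str  # ground-truth reference answer


def equals(output: str, reference: str) -> float:
    """1.0 if output matches the reference after trimming whitespace, else 0.0."""
    return 1.0 if output.strip() == reference.strip() else 0.0


def contains(output: str, reference: str, case_sensitive: bool = False) -> float:
    """1.0 if the reference string appears anywhere in the output, else 0.0."""
    if not case_sensitive:
        output, reference = output.lower(), reference.lower()
    return 1.0 if reference in output else 0.0


def score_traces(traces, metric) -> dict:
    """Apply one scoring rule retroactively to already-logged traces."""
    return {t.id: metric(t.output, t.expected) for t in traces}


if __name__ == "__main__":
    logged = [
        Trace("t1", "Paris", "Paris"),
        Trace("t2", "The capital is Paris.", "paris"),
    ]
    print(score_traces(logged, equals))    # {'t1': 1.0, 't2': 0.0}
    print(score_traces(logged, contains))  # {'t1': 1.0, 't2': 1.0}
```

Running both rules over the same traces shows why the choice of rule matters: a verbose but correct answer fails ‘equals’ yet passes ‘contains’.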

Connect & Learn with Fellow AI Developers

Join us online and in person, connect with other AI builders, and grow your skills at our upcoming hackathons and community events.

- Live Paper Reading – Demystifying Reinforcement Learning in Agentic Reasoning – Virtual, November 17, 4:00 p.m. EST

- AI By the Bay – Oakland, CA, November 17–19

- Webinar: Comet and AWS SageMaker Partner AI App: Building Reliable AI Agents – November 20, 1:00 p.m. EST

- AI Engineer Code Summit – New York City, November 20–22