In recent years, large language models (LLMs) have emerged as one of the most significant developments in AI. They now serve as transformative tools in domains ranging from content creation to problem-solving. Yet even after spending days building an LLM-powered application, you might not have any specific numbers about how the application is actually performing. Since LLMs are generative, they can produce different results for the same user input, and there is often more than one acceptable answer. This is one of the reasons why traditional machine learning (ML) performance metrics like precision, recall, and RMSE are hard to apply directly to open-ended generation.

One of the most intriguing and rapidly developing applications of these LLMs is their use as evaluators, a concept popularly referred to as LLM-as-a-Judge. In this approach, LLMs are employed to provide judgments on tasks, decisions, and creative outputs. While other evaluation metrics like BLEU, ROUGE, and BERTScore are available for most generation tasks, LLM-as-a-Judge is increasingly preferred because it can assess open-ended qualities that reference-matching metrics miss. Tasks such as evaluating the quality of an AI-written article, deciding which marketing campaign design is more effective, or judging between conflicting perspectives in a discussion are increasingly being handled by LLMs. Moreover, it is reshaping fields like content generation, education, and even ethical decision-making by producing evaluations that often come close to human judgment.

According to the paper Judging LLM-as-a-Judge with MT-Bench and Chatbot Arena, LLMs are mainly used in three different contexts to evaluate other LLM outputs:

- Single Output Scoring (without reference): The LLM evaluates a single output based on predefined criteria without comparing it to any reference or benchmark. In this context, the LLM provides scores and feedback purely by analyzing the output in isolation.

- Single Output Scoring (with reference): Sometimes you have reference results available; in those cases, the LLM evaluates an output by comparing it against a predefined reference or “gold standard.” In this approach, the LLM measures how closely the output aligns with the expected result.

- Pairwise Comparison: Finally, an LLM can compare two outputs to determine which one better meets a given set of criteria. This is commonly used in ranking or preference-based evaluations.
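To make the three contexts above more concrete, here is a minimal Python sketch of how the judge prompt might differ in each case. The function names, the 1-5 scale, and the wording of the instructions are all assumptions made for illustration; they are not taken from the paper.

```python
from typing import Optional


def build_single_output_prompt(question: str, answer: str, criteria: str,
                               reference: Optional[str] = None) -> str:
    """Build a single-output scoring prompt, with or without a reference."""
    parts = [
        "You are an impartial evaluator.",
        f"Criteria: {criteria}",
        f"Question: {question}",
        f"Answer to evaluate: {answer}",
    ]
    if reference is not None:
        # With-reference mode: the judge compares against a gold standard.
        parts.append(f"Reference answer: {reference}")
        parts.append("Rate how closely the answer matches the reference on a 1-5 scale.")
    else:
        # Without-reference mode: the judge relies on the criteria alone.
        parts.append("Rate the answer against the criteria on a 1-5 scale.")
    parts.append("Reply with the score and a short justification.")
    return "\n".join(parts)


def build_pairwise_prompt(question: str, answer_a: str, answer_b: str,
                          criteria: str) -> str:
    """Build a pairwise comparison prompt for two candidate answers."""
    return "\n".join([
        "You are an impartial evaluator.",
        f"Criteria: {criteria}",
        f"Question: {question}",
        f"Answer A: {answer_a}",
        f"Answer B: {answer_b}",
        "Reply with 'A', 'B', or 'tie' and a short justification.",
    ])


print(build_single_output_prompt("What is 2 + 2?", "4", "correctness", reference="4"))
```

The only structural difference between the two single-output modes is whether a reference is included, which is why one function can cover both.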

As the adoption of LLMs continues to grow, it presents exciting opportunities and challenges. In this article, you will learn about the complete concept of LLM-as-a-Judge, how it is reshaping judgment tasks, and what the future might hold for this innovative application.

The Mechanics of LLM-as-a-Judge

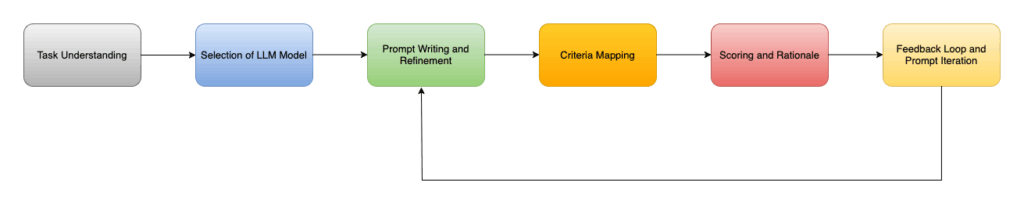

While the concept of LLM-as-a-Judge might sound a little complex, at its core, it is straightforward to implement. It follows a structured approach to evaluate various kinds of LLM tasks and make decisions. These steps make sure that evaluation is carried out systematically and reliably. Let’s check out each stage in detail:

- Task Understanding: This is a general step where users need to understand what kind of evaluation task they are dealing with. As LLMs are being deployed for a variety of use cases, understanding what exactly to measure in an LLM output is the most important task. For example, in a code review task (e.g., for Python code), what matters is the quality of the code in terms of readability, efficiency, and adherence to PEP 8. On the other hand, for AI-generated content such as a summary, the usual requirement is to evaluate accuracy, conciseness, and relevance to the original text.

- Selection of an LLM Model: Once the task is identified, you need to choose an LLM that can work effectively for your evaluation task. While there are multiple ways to select an LLM (based on memory usage, size, speed, cost, etc.), one effective way is to consult a public LLM leaderboard and shortlist a model from there.

- Prompt Writing and Refinement: This stage is considered the heart of LLM-as-a-Judge. This step involves the iterations of creating prompts to define the LLM’s evaluation task and criteria. The prompt is filled with all the necessary information, including the context of the task, criteria for evaluation, information to evaluate, and some other necessary instructions and background information (if needed).

- Criteria Mapping: This is an internal (abstract) process where the LLM maps the input content against the criteria specified in the prompt. Here, the LLM looks for the patterns, errors, or qualities related to the task. If you specify explicit instructions in the prompt, this is the stage where the LLM pays attention to them.

- Scoring and Rationale: Finally, the stage that we as GenAI developers anticipate the most, i.e., LLM scoring and justification. In this stage, the LLM generates a score or a categorical judgment as specified in the prompt. These scores or judgments are assigned based on the thresholds or rubrics provided in the prompt, which is why you should always use a logical scoring scale or set of criteria. However, a score by itself cannot show whether the generated output is of good quality, as it is quite common for LLMs to misunderstand some information. For this reason, you should always ask the LLM to produce a justification (rationale) for the score it assigns.

- Feedback Loop and Prompt Iteration: The last stage involves some human experts who evaluate how well LLM-as-a-Judge is performing. If the model performs well, then there is no further processing required. On the other hand, if the model does not perform well, then the prompts are revised to address gaps in clarity or increase the effectiveness of the evaluation.
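The stages above can be sketched as a small Python pipeline: a prompt that states the task and criteria, a call to the judge model, and a parser that insists on both a score and a rationale. This is only an illustration under stated assumptions. The `call_llm` argument is a hypothetical callable standing in for whichever model client you use, the JSON reply format and the 1-5 scale are choices made for this example, and `fake_llm` is a stub that returns a canned reply so the snippet runs without any API.

```python
import json
from typing import Callable, Dict

# Prompt with task context, criteria, scale, and required output format.
PROMPT_TEMPLATE = """You are evaluating a summary.
Criteria: accuracy, conciseness, relevance to the original text.
Score from 1 (poor) to 5 (excellent).
Original text: {source}
Summary: {summary}
Respond only with JSON: {{"score": <int>, "rationale": "<one or two sentences>"}}"""


def judge(source: str, summary: str,
          call_llm: Callable[[str], str]) -> Dict[str, object]:
    """Run one evaluation and validate the judge's reply."""
    prompt = PROMPT_TEMPLATE.format(source=source, summary=summary)
    raw_reply = call_llm(prompt)  # hypothetical model call
    result = json.loads(raw_reply)

    score = int(result["score"])
    if not 1 <= score <= 5:
        raise ValueError(f"score {score} is outside the 1-5 scale")

    rationale = str(result.get("rationale", "")).strip()
    if not rationale:
        # A bare score is not enough; the rationale is what humans review.
        raise ValueError("judge returned no rationale")

    return {"score": score, "rationale": rationale}


def fake_llm(prompt: str) -> str:
    """Stub judge used only for this example; replace with a real client."""
    return '{"score": 4, "rationale": "Accurate and concise, but omits one key detail."}'


print(judge("Long original text...", "Short summary.", fake_llm))
```

Rejecting replies without a rationale keeps the human review step in the feedback loop meaningful, since reviewers can see why a score was given and revise the prompt accordingly.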

Note: You might find different stages described for LLM-as-a-Judge in different articles. Do not let that confuse you. While all of them are valid, the steps in this article are the concrete, high-level steps involved in LLM evaluation using LLM-as-a-Judge.

Metrics for LLM-as-a-Judge

On an abstract level, you know that LLM-as-a-Judge can assess the performance of any kind of LLM application. But, the first question that should come to your mind is “What exactly does LLM-as-a-Judge measure, and how does it evaluate any arbitrary task?”. The answer to this question is the use of various evaluation metrics. These metrics serve as measurable standards to assess how well the LLM is performing in evaluating tasks, resolving disputes, or scoring outputs. The LLM-generated text has a lot of dimensions that need to be evaluated, for example, syntax and semantic accuracy of the text, fairness in thoughts, explainability of concepts, etc. This is the reason why LLM-as-a-Judge becomes dependent on a set of metrics. Let’s have a look at the different types of metrics used by LLM-as-a-Judge that increase its credibility and usability.

Accuracy Metrics

Accuracy metrics measure how often the LLM’s judgments align with human judgments or predefined standards. They are critical in validating whether the LLM-produced results meet expectations, standards, or expert opinions. Accuracy here does not only mean factual correctness; it also covers other task-specific requirements. For example, while grading an essay, checking the grammar is not the only requirement. The judge should also consider the style, argument strength, and emotional impact of the essay.
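One simple way to put a number on alignment with human judgments is to compare the judge’s labels against human labels for the same items. The sketch below computes the raw agreement rate and, as an additional option not mentioned above, Cohen’s kappa, which corrects for agreement expected by chance. The label lists are made-up example data.

```python
from collections import Counter
from typing import Sequence


def agreement_rate(judge_labels: Sequence[str], human_labels: Sequence[str]) -> float:
    """Fraction of items where the judge and the human gave the same label."""
    if len(judge_labels) != len(human_labels) or not judge_labels:
        raise ValueError("label lists must be non-empty and the same length")
    matches = sum(j == h for j, h in zip(judge_labels, human_labels))
    return matches / len(judge_labels)


def cohens_kappa(judge_labels: Sequence[str], human_labels: Sequence[str]) -> float:
    """Chance-corrected agreement between two sets of labels."""
    n = len(judge_labels)
    observed = agreement_rate(judge_labels, human_labels)
    judge_counts = Counter(judge_labels)
    human_counts = Counter(human_labels)
    # Agreement expected if both raters labeled independently at their own rates.
    expected = sum(judge_counts[label] * human_counts[label]
                   for label in set(judge_counts) | set(human_counts)) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used a single identical label throughout
    return (observed - expected) / (1 - expected)


# Made-up labels for six outputs.
judge_labels = ["pass", "pass", "fail", "pass", "fail", "fail"]
human_labels = ["pass", "fail", "fail", "pass", "fail", "pass"]

print(round(agreement_rate(judge_labels, human_labels), 3))
print(round(cohens_kappa(judge_labels, human_labels), 3))
```

Raw agreement can look high when one label dominates, which is why a chance-corrected figure is often reported alongside it.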

Fairness Metrics

Fairness metrics evaluate the LLM’s ability to deliver unbiased, impartial, and equitable judgments. These metrics play a critical role in applications where biased decisions can lead to significant ethical and social concerns. With LLM-as-a-Judge, you must be able to analyze how the judge performs across various demographics and changing data distributions. Fairness is not only about avoiding explicit bias; it is also about tackling the subtle biases that may emerge from the training data. For example, if an LLM frequently scores certain writing styles lower, it may reflect an inherent bias.
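A basic fairness check is to compare the judge’s average score across groups, such as writing styles. The following sketch assumes you already have (group, score) pairs; the group names and scores are invented for illustration. A large gap is a signal to investigate, not proof of bias, because the underlying quality may genuinely differ between groups.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, Iterable, Tuple


def mean_score_by_group(records: Iterable[Tuple[str, float]]) -> Dict[str, float]:
    """Average judge score for each group label."""
    grouped = defaultdict(list)
    for group, score in records:
        grouped[group].append(score)
    return {group: mean(scores) for group, scores in grouped.items()}


def max_group_gap(records: Iterable[Tuple[str, float]]) -> float:
    """Difference between the highest and lowest group averages."""
    means = mean_score_by_group(list(records))
    return max(means.values()) - min(means.values())


# Invented example: the same judge scoring two writing styles.
records = [("formal", 4), ("formal", 5), ("informal", 3), ("informal", 2)]

print(mean_score_by_group(records))  # {'formal': 4.5, 'informal': 2.5}
print(max_group_gap(records))        # 2.0
```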

Explainability Metrics

Explainability metrics focus on how transparently and coherently an LLM can justify its decisions. If LLM-as-a-Judge only produces a score, it is hard to trust or audit the evaluation. Users should understand why a model provided a specific judgment to build trust and ensure accountability for its results. Explainability is crucial in domains like legal or ethical review, where every decision made by an LLM must be justified in terms humans can easily understand. The good news is that this area is advancing quickly. In practice, the most accessible explanation is the written rationale the judge produces alongside its score; when model internals are available, interpretability techniques such as attention heatmaps, saliency maps, or token-level importance scores can offer additional insight.

Consistency Metrics

These metrics help evaluate whether the LLM provides stable and reproducible judgments for identical and slightly modified inputs. Consistency is essential for LLM-as-a-Judge to maintain credibility, especially in repetitive and high-stakes tasks. For example, if the same essay is submitted twice but receives different grades, users will not fully trust the judgments of the LLM.
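Consistency can be checked by running the judge several times on the same input and summarizing how much the scores vary. The sketch below reports the most common score, the share of runs that agree with it, and the standard deviation. The list of scores is made-up data standing in for repeated judge runs.

```python
from collections import Counter
from statistics import pstdev
from typing import Dict, Sequence


def consistency_report(scores: Sequence[int]) -> Dict[str, float]:
    """Summarize how stable repeated judgments of one input are."""
    if not scores:
        raise ValueError("need at least one score")
    modal_score, modal_count = Counter(scores).most_common(1)[0]
    return {
        "modal_score": modal_score,
        # Share of runs that returned the most common score.
        "modal_agreement": modal_count / len(scores),
        # Population standard deviation; 0.0 means perfectly stable.
        "std_dev": pstdev(scores),
    }


# Made-up scores from grading the same essay five times.
repeated_scores = [4, 4, 5, 4, 3]
print(consistency_report(repeated_scores))
```

The same report can be computed on lightly paraphrased versions of an input to see whether small wording changes move the score.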

Generalization Metrics

Maintaining a separate LLM judge for every single type of task is impractical. Generalization metrics measure the LLM’s ability to perform equally well across a diverse range of domains, tasks, and contexts without requiring task-specific retraining. For example, a robust LLM-as-a-Judge should work seamlessly whether it is evaluating essays, moderating online disputes, or assessing creative designs.

As you might have guessed, you need to state the qualities you care about in the prompt itself so that the judging LLM understands where to focus during the evaluation.

The Case for LLM-as-a-Judge

Using an LLM to evaluate another LLM’s output seems a little confusing and unreliable, don’t you think? For example, if you use GPT-3 to generate a paragraph and then ask GPT-3 to evaluate it, it is highly likely that GPT-3 will rate the output as top quality, since it draws on the same knowledge for generation and evaluation. So, how can LLM-as-a-Judge be a good evaluator for LLM tasks? In this section, you will see the answer to this question, and you will also see why people rely heavily on it even when other automated metrics like BLEU and ROUGE are available.

Growing Reliance on LLMs for Content Evaluation

The evaluation of LLM outputs started with human experts, where a team of reviewers evaluated the generated outputs by hand. This process was tedious, slow, and costly. That problem was the key driver behind automated metrics like BLEU, ROUGE, and BERTScore. While these metrics are fast, BLEU and ROUGE work by measuring word and n-gram overlap between the generated text and a reference, so they reward surface similarity rather than meaning. BERTScore compares contextual embeddings and captures more of the semantics, but it still needs a reference text and cannot check task-specific criteria. This compelled researchers to look for options that can evaluate both the form and the meaning of the generated text. Then, the idea of using an LLM with different prompts and configurations (one for generation and the other for evaluation) was tried out, and guess what? It worked.
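To see why overlap-based metrics struggle with meaning, consider a simplified unigram-overlap score. This is a rough stand-in written for this example, in the spirit of ROUGE-1, and not the official implementation of any metric. The sentences are invented.

```python
from collections import Counter


def unigram_f1(candidate: str, reference: str) -> float:
    """F1 score over shared words, ignoring order and meaning."""
    candidate_tokens = candidate.lower().split()
    reference_tokens = reference.lower().split()
    if not candidate_tokens or not reference_tokens:
        return 0.0
    # Counter intersection keeps the minimum count of each shared word.
    overlap = sum((Counter(candidate_tokens) & Counter(reference_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(candidate_tokens)
    recall = overlap / len(reference_tokens)
    return 2 * precision * recall / (precision + recall)


reference = "the cat sat on the mat"
verbatim = "the cat sat on the mat"
paraphrase = "a feline rested upon the rug"

print(unigram_f1(verbatim, reference))              # 1.0
print(round(unigram_f1(paraphrase, reference), 3))  # 0.167
```

The paraphrase says nearly the same thing as the reference but shares only one word with it, so the overlap score is low. A judge that reads for meaning can recognize the two as close.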

The key principle behind using LLMs for evaluation is that you need to use different LLMs and prompts for the generation and evaluation tasks. When you give the model a prompt that is mainly focused on evaluation, you ask it to do a different job from generation. You are simply asking an LLM to provide its opinion on another LLM’s generated text using a fine-grained prompt. So, the judge does not need to refine the answer that the other LLM generated; it just needs to provide a score and a justification for that score.

Need for Scalability in Subjective Evaluations

Tasks that require subjective evaluation, such as grading essays, traditionally depend on human expertise and can be resource-intensive and difficult to scale. Using LLMs for evaluation is a quick solution that can scale effectively while largely preserving quality. One reasonable objection is that LLM-based evaluation is not 100% correct. That is true, but the same applies to human evaluation. Human judgments are also sometimes influenced by bias, fatigue, community support, etc., which introduces subjectivity into the evaluation. So, a small margin of error should be acceptable when you use LLMs for evaluation, just as it is with human graders.

Augmenting Human Judgment in Complex or Time-Intensive Scenarios

You must understand that LLM-as-a-Judge is not a one-stop solution for all your evaluation needs. Some tasks need human verification even after using LLMs for evaluation. In complex scenarios, where human judgment is essential but time is limited, LLMs can serve as an augmentation tool. Common examples include legal reviews, ethical assessments, and even medical research reviews. LLMs can work as initial filters that provide the primary analysis so that experts can focus on high-level decision-making. This approach can improve the overall efficiency of any time-consuming review process.
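Using the LLM as an initial filter can be sketched as a triage step: clear cases are handled automatically, and borderline ones are routed to a human expert. The thresholds, field names, and sample judgments below are assumptions chosen for illustration; in a real review workflow you would tune them with domain experts.

```python
from typing import Dict, List


def triage(judgments: List[Dict], low: int = 2, high: int = 4) -> Dict[str, List[str]]:
    """Split judged items into auto-handled and human-review buckets."""
    buckets: Dict[str, List[str]] = {
        "auto_accept": [],
        "auto_reject": [],
        "needs_human": [],
    }
    for item in judgments:
        if item["score"] >= high:
            buckets["auto_accept"].append(item["id"])
        elif item["score"] <= low:
            buckets["auto_reject"].append(item["id"])
        else:
            # Borderline scores go to an expert along with the rationale.
            buckets["needs_human"].append(item["id"])
    return buckets


# Invented judgments on a 1-5 scale.
judgments = [
    {"id": "doc-a", "score": 5, "rationale": "Meets every criterion."},
    {"id": "doc-b", "score": 1, "rationale": "Misses the main requirement."},
    {"id": "doc-c", "score": 3, "rationale": "Partly correct, unclear in places."},
]

print(triage(judgments))
```

For high-stakes domains you might route everything to a human and use the judge’s output only to prioritize the queue.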

Advantages to Implementing LLM-as-a-Judge

LLM-as-a-Judge systems, since their inception, have introduced several advantages, making them an appealing choice for tasks that require evaluation or judgment. They offer numerous benefits compared to human evaluation or other automated metrics. Let’s take a look at the four major benefits of LLM-as-a-Judge that make it an effective choice for the evaluation task.

Scalability

The primary requirement for any LLM evaluation task is scalability, i.e., the ability to evaluate huge numbers of predictions without performance lag. LLM-as-a-Judge excels in this area, as it can evaluate thousands or even millions of inputs in a fraction of the time humans would need for the same task. Without an LLM, the workload is usually divided among a team of graders, which significantly increases time and resource demands. With LLMs, evaluations are not only faster, but spikes in demand can also be absorbed far more easily, so feedback stays timely.
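Because each judgment is independent, evaluations can run in parallel. Here is a minimal sketch using Python’s standard `concurrent.futures` module. The `fake_judge` function is a placeholder invented for this example so the snippet runs offline; with a real model API you would also need to respect rate limits and handle failed calls.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List


def evaluate_batch(outputs: List[str], judge_fn: Callable[[str], int],
                   max_workers: int = 8) -> List[int]:
    """Score many outputs concurrently, preserving input order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map returns results in the same order as the inputs.
        return list(pool.map(judge_fn, outputs))


def fake_judge(text: str) -> int:
    """Placeholder scorer: longer answers score higher, capped at 5."""
    return min(5, 1 + len(text.split()))


outputs = ["a", "a b", "a b c d e f"]
print(evaluate_batch(outputs, fake_judge))  # [2, 3, 5]
```

Threads suit this workload because a real judge call spends most of its time waiting on the network rather than computing.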

Consistency

As you know, human judgments are often influenced by factors like mood, fatigue, or unconscious bias, leading to inconsistencies in evaluations. LLMs, on the other hand, apply the same predefined instructions to every input, which makes results far more uniform across tasks, especially when the scoring rubric is explicit. For example, while evaluating a customer chatbot’s outputs, an LLM can be instructed to apply the same scoring rubric uniformly across all entries. This way, it can avoid the variability that arises from different human interpretations. Fields such as performance reviews, legal document analysis, and content quality checks treat consistency as a crucial component of evaluation.

Objectivity

LLM-as-a-Judge helps counter subjectivity, as it brings a degree of objectivity to tasks that might otherwise be influenced by human biases. Since an LLM judge applies the evaluation parameters it is given, it can reduce subjective favoritism. For example, in recruitment processes, an LLM can evaluate candidates based solely on their qualifications, skills, and test results, while ignoring factors such as age, gender, or ethnicity that might influence a human evaluator. But you must understand that LLMs are not completely free from bias, especially if bias is present in the training data of that LLM. Even so, they offer a practical way to reduce subjectivity.

Cost-Efficiency

This one is quite an obvious advantage of LLM-as-a-Judge. Traditional judgment tasks, like peer reviews, content evaluations, or application screenings, require significant human effort and often lead to higher costs for organizations. Implementing LLM-as-a-Judge reduces the human evaluation dependency to a great extent and lowers the operational expenses over time. Moreover, if you use an open-source LLM for judgment tasks, upfront investment in training or fine-tuning that LLM often pays off in long-term savings due to reduced reliance on manual processes and paid LLM APIs.
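The cost argument can be made concrete with simple arithmetic: the total is the number of evaluated items times the per-item token cost. The sketch below shows the calculation only. Every number in it, including the per-token prices, is a placeholder invented for illustration and not real vendor pricing, so substitute your provider’s actual rates.

```python
def estimate_judge_cost(num_items: int,
                        input_tokens_per_item: int,
                        output_tokens_per_item: int,
                        price_in_per_1k: float,
                        price_out_per_1k: float) -> float:
    """Estimated API cost of judging num_items outputs."""
    per_item = ((input_tokens_per_item / 1000) * price_in_per_1k
                + (output_tokens_per_item / 1000) * price_out_per_1k)
    return num_items * per_item


# Placeholder figures: 10,000 items, 800 prompt tokens and 100 reply tokens each,
# with hypothetical prices per 1,000 tokens.
total = estimate_judge_cost(
    num_items=10_000,
    input_tokens_per_item=800,
    output_tokens_per_item=100,
    price_in_per_1k=0.001,
    price_out_per_1k=0.002,
)
print(round(total, 2))  # 10.0
```

Running the same formula with your own volumes makes it easier to compare a paid API against the upfront cost of hosting an open-source judge.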

Challenges Associated With LLM-as-a-Judge

While LLM-as-a-Judge offers a lot of advantages, it also comes with notable limitations that can impact its reliability and effectiveness. Understanding these drawbacks is quite necessary to ensure the responsible development and deployment of such systems. Let’s have a look at some of the major issues of LLM-as-a-Judge for evaluation.

Bias and Fairness Issues

While LLMs used as judges promise objectivity, they can reproduce and sometimes amplify biases present in their training data. If the data used for training an LLM contains biases related to gender, race, culture, or socioeconomic factors, it may produce unfair judgments. Addressing these biases is a complex task, as they are often buried in very large datasets and are difficult to detect and correct.

Lack of Contextual Understanding

LLMs rely on the patterns in the data rather than true text comprehension, which leads to potential errors in context-sensitive situations. One great example of this scenario is the evaluation of a legal argument, where an LLM might misinterpret information related to jurisdiction-specific laws or human intent. This lack of deep contextual understanding surely affects the reliability of LLMs in areas where detailed interpretation is critical.

Explainability Challenges

Explainability is another major area of concern for LLM-as-a-Judge, as LLMs operate as black boxes and make it difficult to understand how a specific decision or judgment was reached. The black-box nature of LLMs can erode users’ trust in the system, particularly in high-stakes scenarios such as legal judgments and hiring processes.

Ethical Concerns

The use of LLMs also raises some ethical concerns, particularly regarding accountability and the potential for misuse. Who is responsible if an LLM makes an incorrect or harmful decision? For example, if an LLM is used to determine parole eligibility and makes an unfair judgment, it could have severe consequences for individuals’ lives. Moreover, issues like prompt injection can lead to the exposure of confidential information.

Cultural Variations

Cultural variations can further complicate the use of LLM-as-a-Judge, as what is accepted in one culture might not be acceptable in others. For example, an LLM evaluating a speech might favor direct and assertive arguments, a style common in Western contexts. However, in many Asian cultures, indirect or harmonious approaches are more culturally appropriate. If the LLMs used for evaluation are not trained on culturally diverse datasets, they are likely to misjudge individuals from underrepresented groups, so you should avoid such models for these tasks.

Lack of Universal Standards

Since the field of LLM evaluation is not fully mature yet, there is a lack of universal standards in many evaluation tasks right now. This absence of standards creates inconsistencies in how LLMs are trained and deployed as judges. For example, when assessing the quality of a customer service chatbot, one company might focus on response speed, while another might emphasize empathy and personalization. Without a shared framework to define these metrics, an LLM judge might produce evaluation results that align with only one company’s priorities.

Due to all the challenges mentioned above, users may struggle to trust LLM-as-a-Judge systems. Building trust requires not only addressing technical limitations but also ensuring that users understand the system’s capabilities and limitations. Since these are significant challenges, organizations are doing serious work to resolve them. Approaches being used to address these shortcomings include incorporating human-in-the-loop feedback to refine LLM evaluations, training models on diverse, representative datasets, and collaborating with domain experts to define and adopt clear, standardized criteria.

Why LLM-as-a-Judge Matters Moving Forward

After reading this article, you now know that LLM-as-a-Judge is a groundbreaking approach to automate evaluations while offering scalability, consistency, and cost-efficiency across various domains. You have seen that while these systems can enhance fairness and reduce human biases in evaluation, they are not without challenges, including biases in training data, a lack of contextual understanding, and ethical concerns. Moreover, even after fully automating the evaluations, these systems require humans in the loop for verification.

LLM-as-a-Judge stands as one of the most practical options for now due to its scalability and consistency in the evaluation process, and it is transforming how developers evaluate and refine their AI applications. To get started implementing LLM-as-a-Judge in your LLM evaluations, try Opik, Comet’s LLM evaluation framework. Opik comes equipped with built-in LLM-as-a-Judge evaluation features alongside a full suite of other LLM observability and evaluation features that enable you to ship measurable improvements within your AI apps. Opik is open-source, easy to install, and backed by a community of developers pushing the boundaries of LLM evaluation.