Issue 5: Comet + Gradio, AI Microchip Floor Planning, Reinforcement Learning for Android

Using Gradio to run ML models in the Comet UI, AI beating humans on microchip floorplanning, reinforcement learning for Android, and more

Welcome to issue #5 of The Comet Newsletter!

This week, we take a closer look at a GPT-3-like model that reportedly achieves similar performance to the original, along with a new way to run and test ML models in the Comet UI with Gradio.

Additionally, you might enjoy learning about an AI system that outperforms humans in designing microchip floorplans, as well as a Reinforcement Learning environment on Android that promises more customized user experiences.

Like what you’re reading? Subscribe here.

And be sure to follow us on Twitter and LinkedIn — drop us a note if you have something we should cover in an upcoming issue!

Happy Reading,

Austin

Head of Community, Comet

INDUSTRY | WHAT WE’RE READING | PROJECTS | OPINION

EleutherAI claims new NLP model approaches GPT-3-level performance

EleutherAI is a decentralized, grassroots collective of volunteer researchers, engineers, and developers focused on AI alignment, scaling, and open source AI research.

Their flagship project is the GPT-Neo family of models, which aim to replicate the functionality of GPT-3. Last week, the group released GPT-J-6B (GPT-J), a model they claim achieves performance on par with an equivalent-sized GPT-3 model on various tasks.

GPT-J is a 6-billion-parameter model that took roughly 5 weeks to train on The Pile, an 835 GB collection of 22 datasets. The dataset comprises 400 billion tokens, drawn from sources ranging from academic texts on arXiv and PubMed to code repositories on GitHub.

EleutherAI claims to have performed “extensive bias analysis” on The Pile and made “tough editorial decisions” to exclude datasets they felt were “unacceptably negatively biased” toward certain groups or views.

GPT-J’s code and the trained model are open-sourced under the Apache 2.0 license and can be used for free via EleutherAI’s website.

Read Kyle Wiggers’ full article here.

AI system outperforms humans in designing floorplans for microchips

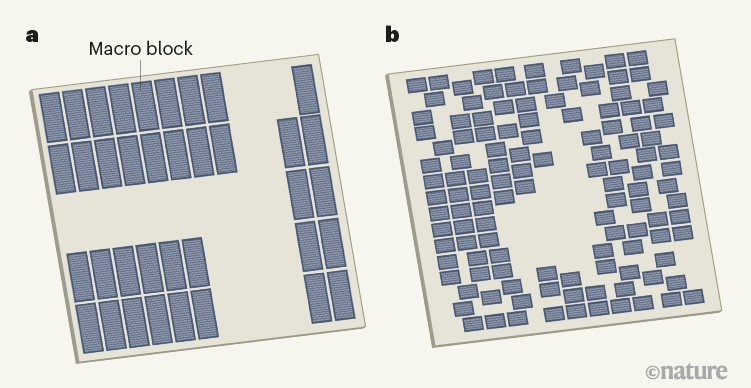

Modern chips are a miracle of technology and economics, with billions of transistors laid out and interconnected on a piece of silicon the size of a fingernail. Each chip can contain tens of millions of logic gates, called standard cells, along with thousands of memory blocks, known as macro blocks, or macros.

Macro blocks can be thousands or even millions of times larger than standard cells, and placing cells and blocks simultaneously is extremely challenging. Modern chip-design methods therefore place the macro blocks first, in a step called floorplanning.

Success or failure in designing microchips depends heavily on these floorplanning steps, which determine where memory and logic elements are located on a chip. The locations, in turn, strongly affect whether the completed chip design can satisfy operational requirements such as processing speed and power efficiency. Currently, this task is performed iteratively and painstakingly, over weeks or months, by expert human engineers.

Until recently, optimizing the placement of these components had been beyond the scope of automation. Researchers at Google, led by Azalia Mirhoseini (@Azaliamirh), have now demonstrated that their Reinforcement Learning approach to floorplanning can produce, in under 6 hours, placements that are superior to those developed by human experts for existing chips. More interestingly, the designs their system proposes look very different from the ones created by humans.

Advancements in the automation of chip placement help keep the trajectory of Moore’s Law alive. The most telling revelation of Mirhoseini’s paper is that these designs are already being incorporated into Google’s next-gen AI processors, which means that they’re good enough for mass production. Expect to see other chip manufacturers partnering with Google or developing their own automated solutions based on these learnings.

Read the full article in Nature.

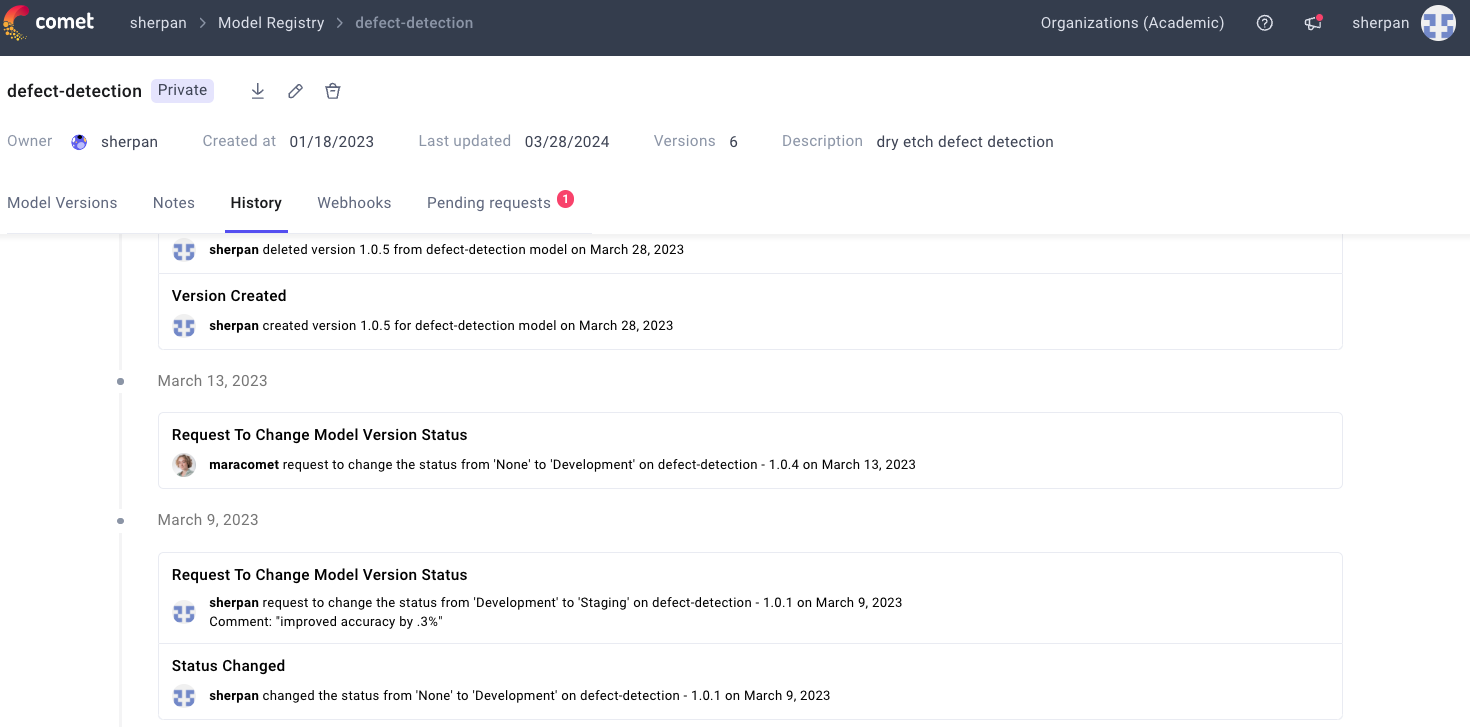

New Comet Integration: Gradio

Gradio is an excellent open-source Python library (with more than 2,600 GitHub stars) that lets ML engineers and teams create demos and GUIs for their models with just a few lines of Python.
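To give a sense of what "a few lines of Python" can look like, here is a minimal sketch. The `word_count` function is a hypothetical stand-in for a real model's prediction function, and `build_demo` is an illustrative helper name, not part of Gradio; the only Gradio call used is `gr.Interface` with the string shortcuts `"text"` for input and output.

```python
def word_count(text: str) -> str:
    """Hypothetical stand-in for a model's predict function."""
    n = len(text.split())
    return f"{n} word{'s' if n != 1 else ''}"


def build_demo():
    """Wrap the function above in a simple text-in, text-out web UI."""
    # Imported here so word_count can be used without Gradio installed.
    import gradio as gr  # third-party: pip install gradio

    return gr.Interface(fn=word_count, inputs="text", outputs="text")
```

Calling `build_demo().launch()` should start a local web server with a text box wired to the function. Swapping `word_count` for a function that calls your own model is the usual next step.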

Gradio’s focus on increasing visibility and collaboration within the ML lifecycle makes it a perfect addition to Comet’s experiment management platform, which is centered on creating a system of record for experimentation pipelines.

In this overview of the new integration, Gradio’s Co-founder and CEO Abubakar Abid explores how well Gradio and Comet work together, giving you even more ways to visualize and understand your ML experiments.

Read Abubakar’s full overview here.

Running PyTorch Hub Models in Your Browser with Gradio

Gradio’s integration with Comet isn’t the only news to report this week: Gradio is also now integrated directly into PyTorch’s Model Hub, so you can easily try out a model before using it in your own projects. And with the new Comet integration, you can try out and visualize these models directly in the Comet UI.

Read the full blog post by Gradio’s Abubakar Abid.

AndroidEnv: The Android Reinforcement Learning Environment

AndroidEnv is a Python library from DeepMind that exposes an Android device as a Reinforcement Learning (RL) environment. The library provides a flexible platform for defining custom tasks on top of the Android OS, including any Android application. Agents interact with the device through a universal action interface.
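For readers new to RL environments, the sketch below shows the general reset/step loop that an agent runs against an environment. It does not use AndroidEnv itself: `ToyScreenEnv`, `TouchAction`, and both policies are hypothetical stand-ins, and representing an action as a gesture type plus a normalized screen position is an assumption made for illustration only.

```python
import random
from dataclasses import dataclass


@dataclass
class TouchAction:
    """Hypothetical action: a gesture type plus a normalized screen position."""
    kind: str  # "touch" or "lift"
    x: float   # 0.0 (left) to 1.0 (right)
    y: float   # 0.0 (top) to 1.0 (bottom)


class ToyScreenEnv:
    """Toy stand-in for a device: reward 1.0 when the agent touches a target."""

    def __init__(self, target=(0.5, 0.5), radius=0.1, max_steps=20):
        self.target = target
        self.radius = radius
        self.max_steps = max_steps
        self.steps = 0

    def reset(self):
        self.steps = 0
        return {"target": self.target}

    def step(self, action):
        self.steps += 1
        tx, ty = self.target
        hit = (
            action.kind == "touch"
            and abs(action.x - tx) <= self.radius
            and abs(action.y - ty) <= self.radius
        )
        reward = 1.0 if hit else 0.0
        done = hit or self.steps >= self.max_steps
        return {"target": self.target}, reward, done


def run_episode(env, policy):
    """Run one episode and return the total reward collected."""
    obs = env.reset()
    total, done = 0.0, False
    while not done:
        obs, reward, done = env.step(policy(obs))
        total += reward
    return total


def random_policy(obs):
    """Touch a uniformly random point on the screen."""
    return TouchAction("touch", random.random(), random.random())


def oracle_policy(obs):
    """Touch the target directly (only possible because the toy env reveals it)."""
    x, y = obs["target"]
    return TouchAction("touch", x, y)
```

A learning agent would sit between these two extremes, starting close to `random_policy` and improving from the rewards it observes.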

The release of AndroidEnv points to a future where personal learning agents run continuously on a user’s smartphone data, as a means of understanding behaviors and executing complex actions that are unique to individual users. For instance, your personal agent would be able to learn the common actions you perform in the morning and adapt to changes in these routines over time.

Biden’s AI czar focuses on societal risks, preventing harm

Last week, the Biden administration announced that it is appointing Dr. Lynne Parker as the Director of the National AI Initiative Office. Her job will entail establishing U.S. government partnerships with small businesses, research universities, and international allies on efforts in artificial intelligence.

Dr. Parker’s focus is to enact policy that “[ensures the] responsible use of AI so that we’re not disadvantaging certain people, we’re not achieving biased outcomes.” She points to the policies introduced in Europe, which aim to regulate risky applications of the technology, as a source of guidance.

“All of those efforts are very much about like-minded countries coming together to demonstrate how we can use AI in an appropriate way, not violate the human rights that has been demonstrated in China and other authoritarian countries.”

Dr. Parker has a long history of work in cooperative robotics. She earned her Ph.D. in computer science from the Massachusetts Institute of Technology, and her dissertation research (1994) on ALLIANCE, a distributed architecture for multi-robot cooperation, was the first Ph.D. dissertation worldwide on the topic of multi-robot systems and is considered a pioneering work in the field.

Additionally, Dr. Parker is a leading international researcher in the field of cooperative multi-robot systems, and has performed research in the areas of mobile robot cooperation, human-robot cooperation, robotic learning, intelligent agent architectures, and robot navigation.

Before being appointed Director of the National AI Initiative Office, she served as an Associate Dean and Professor in the Tickle College of Engineering at the University of Tennessee-Knoxville (UTK).

Read the full article from The AP here.

Comet CEO Gideon Mendels on The Inference Podcast

And last but not least, check out the newest episode of The Inference Podcast, in which host Merve Noyan (@mervenoyann) chats with Comet CEO and Co-founder Gideon Mendels about key differences between MLOps and DevOps, MLOps best practices, and much more.