-

AI Assisted Coding with Cursor AI and Opik

How AI can help you move beyond vibe coding and become an effective AI engineer faster than you think. Dear…

-

Release Highlights: Discover Opik Agent Optimizer, Guardrails, & New Integrations

As LLMs power more complex, multi-step agentic systems, the need for precise optimization and control is growing. In case you…

-

Announcing Opik’s Guardrails Beta: Moderate LLM Applications in Real-Time

We’ve spent the past year building tools that make LLM applications more transparent, measurable, and accountable. Since launching Opik, our…

-

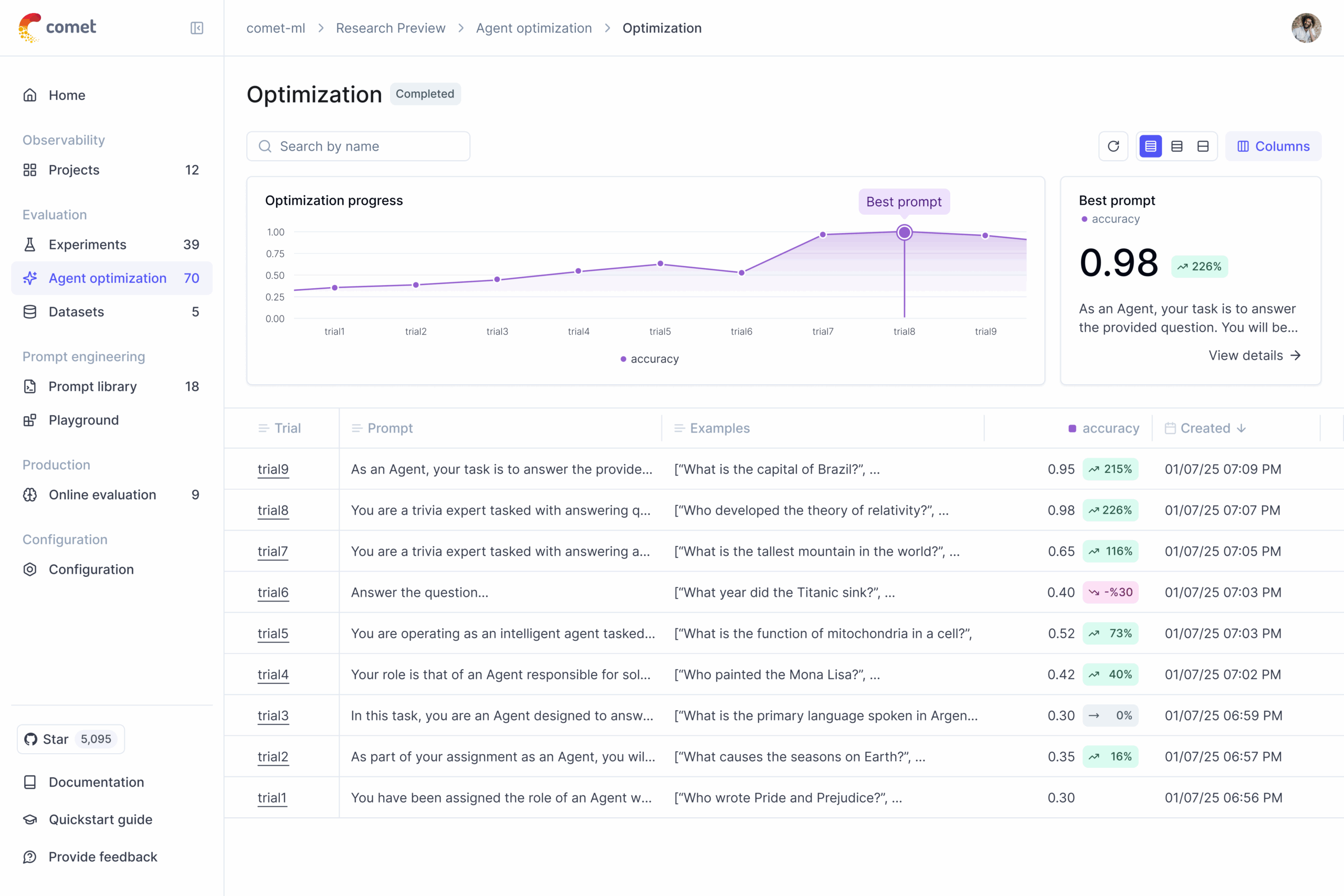

From Observability to Optimization: Announcing the Opik Agent Optimizer Public Beta

At Comet, we’re driven by a commitment to advance innovation in AI, particularly in the realm of LLM observability. Our…

-

Major Releases: MCP Server & Google Agent Dev Kit Support

We’ve just rolled out two major updates in Opik, Comet’s open-source LLM evaluation platform, that make it easier than ever…

-

SelfCheckGPT for LLM Evaluation

Detecting hallucinations in language models is challenging. There are three general approaches: The problem with many LLM-as-a-Judge techniques is that…

-

LLM Hallucination Detection in App Development

Even ChatGPT knows it’s not always right. When prompted, “Are large language models (LLMs) always accurate?” ChatGPT says no and…

-

LLM Evaluation Frameworks: Head-to-Head Comparison

As teams work on complex AI agents and expand what LLM-powered applications can achieve, a variety of LLM evaluation frameworks…

-

LLM Juries for Evaluation

Evaluating the correctness of generated responses is an inherently challenging task. LLM-as-a-Judge evaluators have gained popularity for their ability to…

-

A Simple Recipe for LLM Observability

So, you’re building an AI application on top of an LLM, and you’re planning on setting it live in production.…

Category: LLMOps