At Comet, we’re driven by a commitment to advance innovation in AI, particularly in the realm of LLM observability. Our journey with Opik began with providing tools to make LLM applications more transparent, measurable, and accountable. As one of the fastest-growing open-source solutions in this space, our team has been deeply researching “what’s next.” We’ve seen compelling early work in prompt and agent optimization, and we believe Opik is uniquely positioned to pioneer this frontier. Our thesis is simple: optimizing your prompts and agents is the logical next step after understanding their behavior through traces and evaluations.

Today, we’re excited to translate this vision into reality with the public beta of Opik Agent Optimizer, a new suite of tools designed to automate and elevate your prompt and agent optimization workflows.

The Challenge: Why Manual Prompt Engineering Isn’t Scaling

The power of Large Language Models is undeniable, but unlocking their full potential often hinges on the art and science of prompt engineering. As many development teams know, this can be a painstaking, manual process: a constant cycle of tweaking prompts, running experiments, reviewing results, and repeating. This “manual grind” consumes valuable development time, a pain point we have heard about from our customers and felt ourselves when working with LLMs.

Furthermore, the LLM landscape is evolving at breakneck speed. New models are released daily, and what worked yesterday might not work tomorrow. Re-engineering prompts for each new model or even minor version updates is a significant hurdle, making scalability and adaptability a constant concern. This is why we asked ourselves: “Does prompt engineering have to be this manual?” Our answer is Opik Agent Optimizer.

The Solution: Simple, Flexible, and Framework-Agnostic Optimization

We believe the current paradigm for LLM optimization needs a shift. Existing libraries often tie optimization to specific orchestration frameworks, forcing developers to rebuild their applications from scratch. This is a fundamental flaw. The vast majority of developers already have a preferred framework or choose to work without one; they shouldn’t be penalized for it.

Opik Agent Optimizer is built on a different philosophy:

- Optimize Your Way: We provide a simple, powerful SDK that allows you to optimize agents and prompts regardless of how they’re built, from a simple `prompt in -> prompt out` function to a complex agent. No framework lock-in.

- Model Agnostic: Our optimizers are designed to be compatible with a wide array of models, leveraging LiteLLM for broad compatibility (OpenAI, Azure, Anthropic, Google, local Ollama instances, and more). You’re not bound to specific model providers.

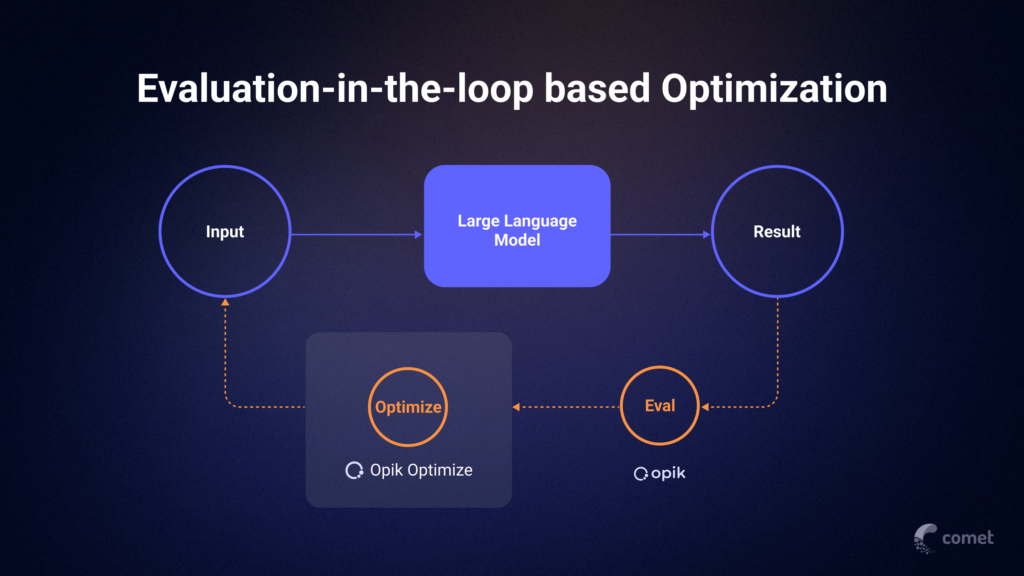

- Building on a Foundation of Insight: Opik’s roots in observability mean we understand the importance of evaluation. Optimization is a natural extension of the insights gained from traces and rigorous evaluation. One can’t optimize without a solid feedback loop of evaluation.

We’re launching this beta with a suite of optimizers, including MIPRO (Multiprompt Instruction Proposal Optimizer, which works with and without tools), which we chose as a starting point because its readily available library implementation allowed for quick adaptation. Alongside MIPRO, our research team has been developing three novel optimization algorithms (papers pending) that explore new approaches to enhancing prompt and agent performance:

- Meta Prompter Optimizer: A recursive LLM-as-an-optimizer approach in which the LLM acts as a prompt engineer. It uses reasoning models to critique and iteratively refine an initial instruction prompt.

- Bayesian Few-Shot Optimizer: Specifically for chat models, this optimizer uses Bayesian optimization to find the optimal number and combination of few-shot examples (demonstrations) to accompany a system prompt.

- Evolutionary Optimizer: Employs genetic algorithms to evolve a population of prompts, using LLMs to mutate prompts in each round. It can discover novel prompt structures and supports multi-objective optimization (e.g., score vs. length).
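To make the evolutionary idea concrete, here is a minimal, self-contained sketch of a genetic loop over prompts. It is not the Opik implementation: `PHRASES`, `score_prompt`, `mutate`, and `evolve` are hypothetical names, the fitness function is a keyword heuristic standing in for a real dataset evaluation, and the mutation is a random phrase edit standing in for an LLM-driven rewrite. Score and length are folded into one weighted number here, which is a simplification of true multi-objective optimization.

```python
import random

Prompt = tuple[str, ...]

# Hypothetical building blocks that a mutation may add to a prompt.
PHRASES = [
    "Answer the question.",
    "Be concise.",
    "Think step by step.",
    "Cite the supporting fact.",
    "Reply with the answer only.",
]


def render(prompt: Prompt) -> str:
    """Join the phrases into the prompt text that would be sent to a model."""
    return " ".join(prompt)


def score_prompt(prompt: Prompt) -> float:
    """Stand-in fitness: reward two 'useful' phrases, penalize length."""
    useful = {"Be concise.", "Reply with the answer only."}
    hits = sum(1 for phrase in prompt if phrase in useful)
    return hits - 0.001 * len(render(prompt))


def mutate(prompt: Prompt, rng: random.Random) -> Prompt:
    """Stand-in mutation: add a missing phrase or drop an existing one."""
    phrases = list(prompt)
    missing = [p for p in PHRASES if p not in phrases]
    if missing and (len(phrases) <= 1 or rng.random() < 0.5):
        phrases.append(rng.choice(missing))
    elif len(phrases) > 1:
        phrases.pop(rng.randrange(len(phrases)))
    return tuple(phrases)


def evolve(seed_prompt: Prompt, generations: int = 20,
           population_size: int = 8, seed: int = 0) -> Prompt:
    """Evolve a population of prompts and return the best one found."""
    rng = random.Random(seed)
    population = [seed_prompt] * population_size
    for _ in range(generations):
        # Every current member produces one mutated child.
        children = [mutate(p, rng) for p in population]
        # Deduplicate while preserving order, then keep the fittest.
        pool = list(dict.fromkeys(population + children))
        pool.sort(key=score_prompt, reverse=True)
        population = pool[:population_size]
    return population[0]


if __name__ == "__main__":
    start = ("Answer the question.",)
    best = evolve(start)
    print(render(best), score_prompt(best))
```

Because parents compete with their children for survival, the best score in the population never decreases from one generation to the next.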

Seeing Is Believing: Opik Agent Optimizer In The UI

Although Opik Agent Optimizer ships as a Python SDK package, all traces and experiments logged during an optimization run are sent to Opik. You can follow the optimization process in the Opik UI today, including each iteration (trial), the specific improvements, the changes to prompts, and other run details.

Running With a Few Lines of Code: In Practice

Here’s a brief look at how you might use the SDK’s `FewShotBayesianOptimizer`, one of the optimizer algorithms we have available:

```python
from opik.evaluation.metrics import LevenshteinRatio
from opik_optimizer import FewShotBayesianOptimizer
from opik_optimizer.demo import get_or_create_dataset
from opik_optimizer import (
    MetricConfig,
    TaskConfig,
    from_dataset_field,
    from_llm_response_text,
)

# Get or create a test dataset
hot_pot_dataset = get_or_create_dataset("hotpot-300")
project_name = "optimize-few-shot-bayesian-hotpot"

# Define the initial prompt to optimize, intentionally vague
prompt_instruction = """
Answer the question.
"""

# Initialize the optimizer
optimizer = FewShotBayesianOptimizer(
    model="gpt-4o-mini",
    project_name=project_name,
    min_examples=3,
    max_examples=8,
    n_threads=16,
    seed=42,  # reproducibility
)

# Configure the metric for evaluation
metric_config = MetricConfig(
    metric=LevenshteinRatio(project_name=project_name),
    inputs={
        "output": from_llm_response_text(),
        "reference": from_dataset_field(name="answer"),
    },
)

# Configure the task details, i.e. the dataset mapping
task_config = TaskConfig(
    instruction_prompt=prompt_instruction,
    input_dataset_fields=["question"],
    output_dataset_field="answer",
    use_chat_prompt=True,
)

# Run the optimization
result = optimizer.optimize_prompt(
    dataset=hot_pot_dataset,
    metric_config=metric_config,
    task_config=task_config,
    n_trials=10,
    n_samples=150,
)

# Display the results, including the best prompt found
result.display()
```
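The example scores each response with `LevenshteinRatio`. For a rough intuition of what an edit-distance similarity measures, the sketch below computes a normalized Levenshtein similarity in plain Python. The function names and the normalization (one minus the distance divided by the longer string's length) are assumptions chosen for illustration; Opik's metric may normalize differently, so treat the numbers as indicative only.

```python
def levenshtein_distance(a: str, b: str) -> int:
    """Minimum number of single-character insertions, deletions, or substitutions."""
    previous = list(range(len(b) + 1))
    for i, char_a in enumerate(a, start=1):
        current = [i]
        for j, char_b in enumerate(b, start=1):
            cost = 0 if char_a == char_b else 1
            current.append(min(
                previous[j] + 1,         # delete char_a
                current[j - 1] + 1,      # insert char_b
                previous[j - 1] + cost,  # substitute, or match at no cost
            ))
        previous = current
    return previous[-1]


def similarity(output: str, reference: str) -> float:
    """Similarity in [0, 1]; 1.0 means the strings are identical."""
    longest = max(len(output), len(reference))
    if longest == 0:
        return 1.0
    return 1.0 - levenshtein_distance(output, reference) / longest


if __name__ == "__main__":
    print(similarity("Paris", "Paris"))
    print(similarity("The capital is Paris.", "Paris"))
```

Because the comparison is character-based, a correct but differently worded answer can score low, which is worth keeping in mind when choosing a metric for your own task.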

Embedding Auto-Optimization Into Your Workflow

Opik Agent Optimizer is designed to integrate seamlessly into your LLM development lifecycle and make it a more agile, data-driven, and efficient process. We see it as a pivotal component in how you evolve from classic prompt engineering to evaluation-in-the-loop thinking, guiding you from initial concept to continuously optimized production systems:

- Foundation & Rapid Prototyping (Conception): Begin by quickly developing a functional baseline version of your LLM application that handles core tasks. This provides the initial scaffold for iteration and improvement. Alternatively, take an existing system and establish a baseline evaluation.

- Fueling Optimization (Curate Examples): Assemble a comprehensive and diverse dataset of high-quality examples that accurately reflect the tasks your application needs to master. This dataset becomes the bedrock for effective automated optimization. Where curating human-labeled datasets is difficult, we have developed an example that uses synthetic generation based on your Opik traces.

- Insight through Observation (Experimentation & Tracing): Before full automation, leverage Opik’s observability tools. Run initial experiments, then dive deep into traces and results to gain critical insights. This stage is crucial for uncovering edge cases and identifying areas for improvement that might extend beyond prompt engineering, especially when developing sophisticated, multi-step agents.

- Systematic Refinement (Optimization Run): With a solid understanding and a curated dataset, unlock the power of Opik Agent Optimizer. It will systematically explore the vast possibility space, rigorously testing and evaluating different prompt structures and agent configurations to pinpoint those that deliver peak performance against your defined metrics.

- Optimization Development Lifecycle (Continuous Adaptation): Extend optimization into your production environment. By implementing automated nightly or weekly optimization routines that learn from live production data, your LLM application can dynamically adapt to evolving user behaviors, new data patterns, and concept drift. This ensures sustained performance and opens avenues for advanced strategies, such as tailoring differently optimized agents for distinct user segments or automatically re-validating against new foundation models.
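The final step above can be sketched as a simple promotion gate: a scheduled job scores the current production prompt and a freshly optimized candidate on recent examples, and promotes the candidate only if it wins by a margin. Everything here is hypothetical scaffolding (`evaluate`, `should_promote`, the toy `fake_model`, the exact-match metric, and the 0.02 margin); in practice the model call and the metric would come from your own application and evaluation setup.

```python
from typing import Callable, Sequence

Example = dict[str, str]
Model = Callable[[str, str], str]  # (prompt, question) -> answer


def evaluate(prompt: str, examples: Sequence[Example], model: Model) -> float:
    """Fraction of examples answered exactly right (a stand-in metric)."""
    if not examples:
        return 0.0
    correct = sum(
        1
        for ex in examples
        if model(prompt, ex["question"]).strip().lower()
        == ex["answer"].strip().lower()
    )
    return correct / len(examples)


def should_promote(current: str, candidate: str, examples: Sequence[Example],
                   model: Model, min_gain: float = 0.02) -> bool:
    """Promote the candidate only if it beats the current prompt by min_gain."""
    baseline = evaluate(current, examples, model)
    challenger = evaluate(candidate, examples, model)
    return challenger - baseline >= min_gain


def fake_model(prompt: str, question: str) -> str:
    """Toy model: answers tersely only when the prompt asks it to."""
    answers = {"Capital of France?": "Paris", "2 + 2?": "4"}
    answer = answers.get(question, "unknown")
    if "answer only" in prompt.lower():
        return answer
    return f"The answer is {answer}."


examples = [
    {"question": "Capital of France?", "answer": "Paris"},
    {"question": "2 + 2?", "answer": "4"},
]

if __name__ == "__main__":
    current = "Answer the question."
    candidate = "Reply with the answer only."
    print(should_promote(current, candidate, examples, fake_model))
```

Requiring a minimum gain rather than any improvement is one way to avoid swapping prompts on evaluation noise; the right threshold depends on your dataset size and metric.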

What’s Next with Opik Agent Optimizer

This public beta is just the beginning, and we’re already charting an ambitious course for Opik Agent Optimizer. Our roadmap is focused on expanding its capabilities, intelligence, and integration within the broader LLM development ecosystem:

- Broadening Optimization Horizons:

  - Beyond Prompts: We’re working to extend optimization capabilities to encompass other crucial parameters of your LLM systems (like temperature), offering more holistic performance tuning including tool use and selection for agents.

  - Multi-Modal Support: As AI applications increasingly handle diverse data types, we plan to introduce support for optimizing multi-modal systems (text, images, audio, and more).

- Advancing Optimizer Intelligence and Automation:

  - Smarter Algorithms: We will continuously expand our arsenal of optimization techniques, incorporating more established methods like prompt gradients and pioneering novel approaches.

  - Automated Adaptation: Imagine your system automatically testing its optimized prompts and agents against newly released foundation models, triggering re-optimization when beneficial. This level of intelligent adaptation is a key area of our research.

- Deepening Ecosystem Synergy:

  - Seamless Evaluation Feedback Loops: We are committed to creating even tighter, more intuitive integrations between Opik Agent Optimizer and Opik’s comprehensive evaluation suites. This will enable a powerful, continuous cycle of evaluation, insight, and optimization.

  - SDK Ease of Use: This includes refining our API design (which currently utilizes `TaskConfig` and `MetricConfig` objects) to be even more intuitive and powerful.

We welcome suggestions, pull requests, and feedback on any of the preview items and the future roadmap for Opik Agent Optimizer.

Get Involved!

Opik Agent Optimizer is here to empower you to build better, more efficient, and more adaptable LLM applications. We’re building this in the open and value your feedback immensely.