As AI agents and LLM applications grow more powerful and complex, this month’s Opik updates are built to help you maximize performance and simplify your development workflows along the way.

With two new optimization algorithms in the Opik Agent Optimizer for refining prompts and tool use, new AI-powered features that accelerate debugging and expand test coverage, and simplified workflows for collaborating with subject matter experts, these updates free you to spend less time on manual overhead and more time building reliable, production-ready AI systems.

Opik Optimizer Version 2.1

New releases in the Opik Agent Optimizer SDK bring the total number of optimization algorithms to six, giving you greater flexibility to perfect system prompts, tool calling, orchestration steps, and more without tedious manual drafting and testing.

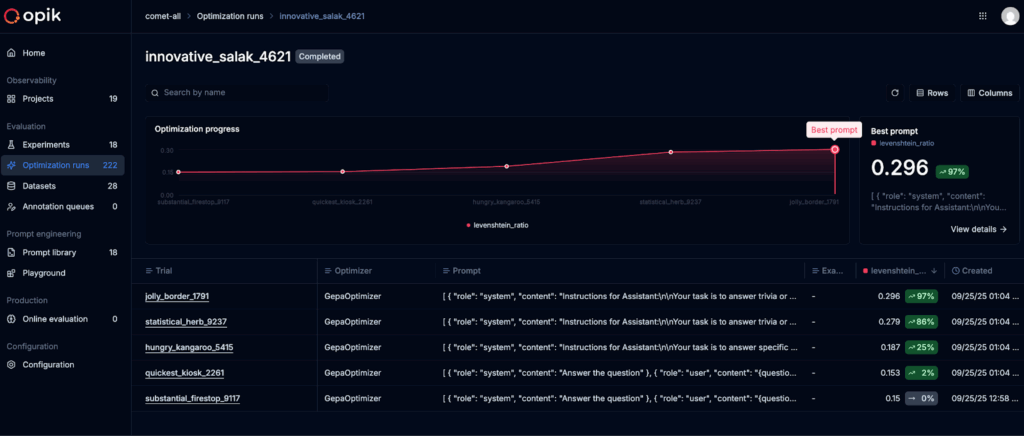

- GEPA Optimizer: Opik Optimizer now supports GEPA, a reflection-driven optimizer that guides LLMs to automatically refine single-turn system prompts using evaluation feedback. The GEPA optimizer integrates with Opik’s datasets and metrics and uses separate task and reflection models to iterate on prompts and agents, producing high-quality prompts without manual trial and error.

- Tool Optimization (MCP & Function Calling): The new MCP tool-calling feature applies Opik’s MetaPrompt Optimizer to agentic prompts, allowing you to fine-tune how your models interact with external tools and enabling you to commit improved signatures directly back to your code.
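To make the idea of reflection-driven optimization concrete, here is a minimal sketch of a greedy evaluate-reflect-retry loop. It is not GEPA's actual algorithm and it does not use the Opik SDK: `optimize_prompt`, `toy_task_model`, and `toy_reflection_model` are hypothetical names, and the two "models" are plain Python stand-ins for LLM calls so the example runs offline.

```python
from typing import Callable, List, Tuple

Dataset = List[Tuple[str, str]]  # (input, expected output) pairs


def evaluate(prompt: str, task_model: Callable[[str, str], str], dataset: Dataset) -> float:
    """Return the fraction of items the task model gets right under this prompt."""
    hits = sum(task_model(prompt, question) == expected for question, expected in dataset)
    return hits / len(dataset)


def optimize_prompt(
    seed_prompt: str,
    task_model: Callable[[str, str], str],
    reflection_model: Callable[[str, List[str]], str],
    dataset: Dataset,
    max_rounds: int = 5,
) -> Tuple[str, float]:
    """Greedy loop: keep a rewritten prompt only if it scores higher than the best so far."""
    best_prompt = seed_prompt
    best_score = evaluate(best_prompt, task_model, dataset)
    for _ in range(max_rounds):
        if best_score == 1.0:
            break  # nothing left to fix
        # Collect the inputs the current best prompt still gets wrong.
        failures = [q for q, expected in dataset if task_model(best_prompt, q) != expected]
        # Ask the reflection model for a rewrite based on those failures.
        candidate = reflection_model(best_prompt, failures)
        score = evaluate(candidate, task_model, dataset)
        if score > best_score:
            best_prompt, best_score = candidate, score
    return best_prompt, best_score


# Hypothetical stand-ins for real LLM calls (assumptions, not Opik APIs).
def toy_task_model(prompt: str, question: str) -> str:
    # "Follows" the instruction only if the prompt mentions uppercase.
    return question.upper() if "uppercase" in prompt.lower() else question


def toy_reflection_model(prompt: str, failures: List[str]) -> str:
    # A real reflection model would read the failures and propose a targeted rewrite.
    return prompt + " Always answer in uppercase." if failures else prompt


dataset = [("cat", "CAT"), ("dog", "DOG")]
best_prompt, best_score = optimize_prompt(
    "Repeat the word.", toy_task_model, toy_reflection_model, dataset
)
print(best_prompt, best_score)
```

In a real setup, the task model and reflection model would be LLM calls and the dataset and metric would come from your evaluation platform; the point of the sketch is only the feedback loop between scoring and rewriting.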

With expanded test coverage and SDK improvements, Opik Optimizer is quickly becoming the tool of choice for automated agent and prompt optimization.

Opik Assist: AI-Powered Trace Analysis

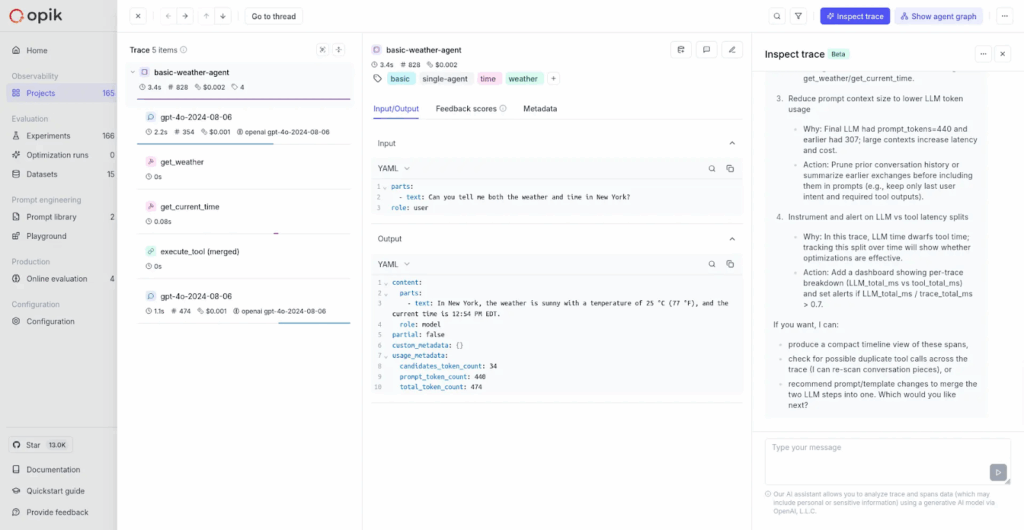

Spot-checking traces gives you a sense of overall application performance, but it can be cumbersome to comb through many rows in context and identify what worked, what didn’t, and why. Instead, just click on Inspect Trace to take advantage of AI summarization and analysis right inside the platform. Opik Assist surfaces key insights, identifies performance patterns, and flags issues with latency, token usage, error frequency, and output quality, so you can debug faster than ever.

AI-Powered Data Expansion for Agent Simulation

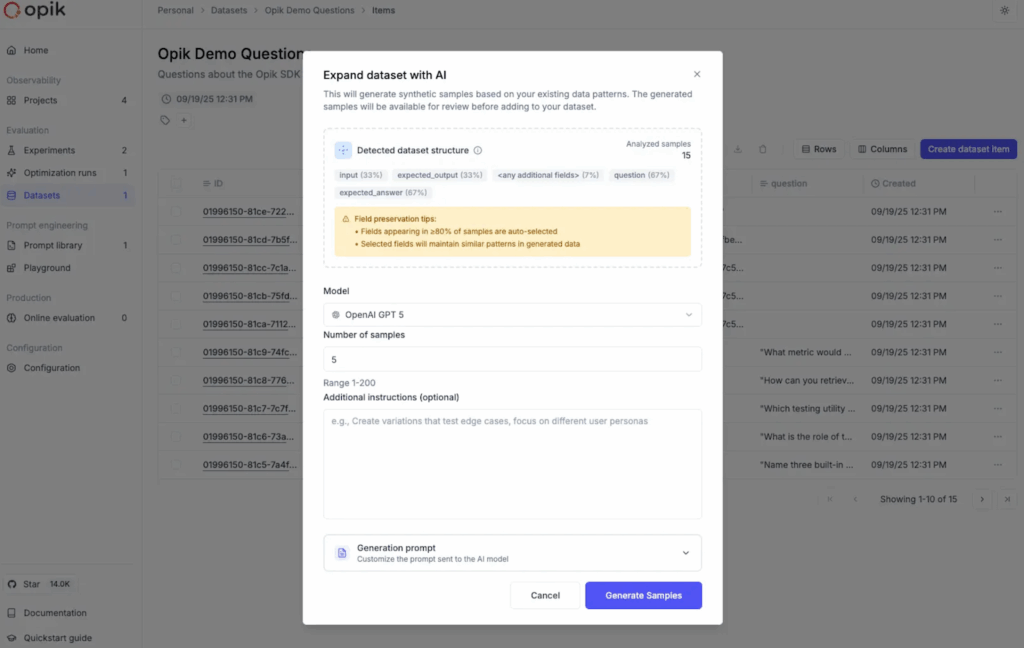

To run effective evaluations and accurately score an LLM application’s performance, you need a wide range of test data. Opik’s new AI-powered data expansion automates dataset creation by analyzing your existing datasets to generate additional test cases, edge cases, and evaluation scenarios. The generated variations broaden coverage while staying relevant to your application, so you can build more robust evaluation suites without manual overhead.

Expanded Cross-Functional Collaboration & Human Review Workflows

Opik’s newest human feedback features make it simple to involve subject matter experts (SMEs) and capture high-quality insights in your workflows.

- Multi-Value Feedback Scores: Multiple reviewers can independently score the same trace or thread, preserving multiple perspectives and enabling richer consensus-building during evaluation.

- Annotation Queue: Create and share queues of traces or threads that need expert review, so you can organize SME work, track progress, and collect feedback at scale.

- Simplified Annotation Experience: A new distraction-free UI built for non-technical reviewers provides clear instructions, predefined metrics, and progress indicators.
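As an illustration of why keeping each reviewer's score matters, the sketch below groups independent scores by trace and metric, then reports the mean and the spread so that disagreements stand out. The `FeedbackScore` record, the `summarize` helper, and the 0.5 disagreement threshold are assumptions made for this example; they are not Opik's data model or API.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, List, NamedTuple, Tuple


class FeedbackScore(NamedTuple):
    """Hypothetical record: one reviewer's score for one metric on one trace."""
    trace_id: str
    reviewer: str
    metric: str
    value: float


def summarize(
    scores: List[FeedbackScore], disagreement_threshold: float = 0.5
) -> Dict[Tuple[str, str], dict]:
    """Aggregate per (trace, metric) while keeping a signal of reviewer disagreement."""
    grouped: Dict[Tuple[str, str], List[float]] = defaultdict(list)
    for score in scores:
        grouped[(score.trace_id, score.metric)].append(score.value)

    summary = {}
    for key, values in grouped.items():
        spread = max(values) - min(values)
        summary[key] = {
            "mean": mean(values),
            "spread": spread,
            "reviewers": len(values),
            # Flag items where reviewers are far apart and may need a discussion.
            "needs_discussion": spread > disagreement_threshold,
        }
    return summary


scores = [
    FeedbackScore("trace-1", "alice", "helpfulness", 1.0),
    FeedbackScore("trace-1", "bob", "helpfulness", 0.0),
    FeedbackScore("trace-2", "alice", "helpfulness", 1.0),
    FeedbackScore("trace-2", "bob", "helpfulness", 1.0),
]
summary = summarize(scores)
for key, stats in summary.items():
    print(key, stats)
```

Overwriting one reviewer's score with another's would hide the split on `trace-1`; storing both values is what makes this kind of consensus check possible.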

Opik University: On-Demand Setup Tutorials

Get guided onboarding from our Customer Success team, now available on demand in our new Opik University video series. This learning hub helps you get the most out of Opik’s LLM observability and evaluation tools through structured, hands-on lessons, interactive tutorials, real-world use cases, and practical exercises that take you from basic trace logging to advanced evaluation and optimization strategies.

Connect & Learn with Fellow AI & ML Developers

Join us online and in person to connect with other AI builders and grow your skills at our upcoming hackathons and community events.

Webinar: Making LLM Agents Observable & Debuggable – October 16th, 7:00 PM EDT

NeurIPS 2025 – San Diego, CA, December 2nd-7th, 2025

Comet Event Calendar on Luma – Join our developer advocates for hands-on tech talks and networking events, both virtual and in-person.