This month’s Opik releases strengthen the connection between experimentation, evaluation, and measurable performance with the launch of the Optimization Studio, enhanced custom dashboard capabilities, and expanded model provider and integration support. Whether you’re refining prompts or shipping agents to production, these updates help you automate workflows, run more structured experiments, and see clearly what is driving improvement.

Read more about each new release below.

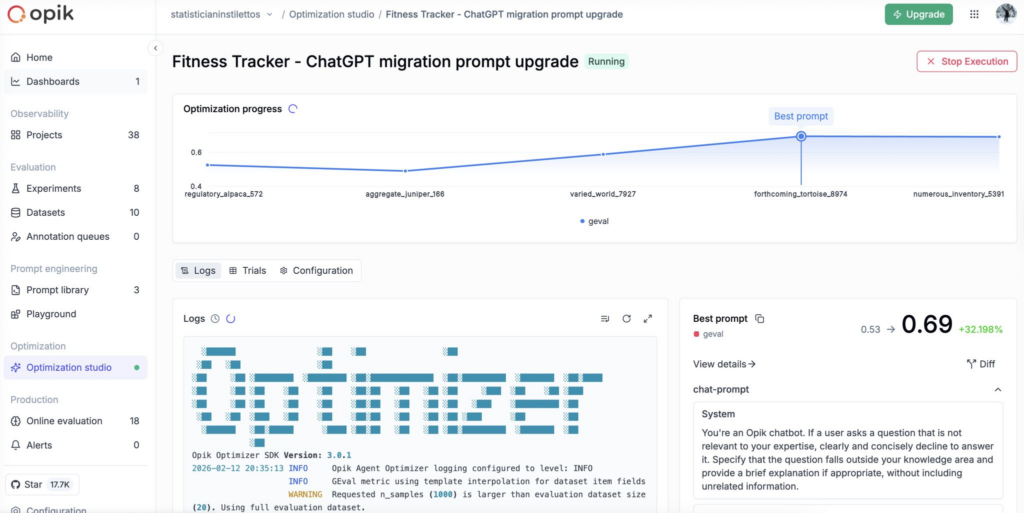

Optimization Studio

You can now refine prompts directly within the Opik UI by defining your own evaluation criteria and running structured optimization against your chosen data. Choose from optimization strategies such as GEPA for single-turn prompts and quick improvements, or HRPO for deeper analysis of why your prompt is failing. You can apply strict or model-graded scoring, monitor performance trends as runs progress, and compare runs side by side to easily identify which criteria improved your results.
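To make the idea of strict scoring concrete, here is a minimal, self-contained sketch in plain Python. It does not use the Opik SDK or reflect how the Optimization Studio scores runs internally; the function names (`strict_score`, `score_run`) and the sample data are hypothetical, chosen only to show how an exact-match criterion can separate a baseline prompt from a candidate prompt.

```python
from statistics import mean


def strict_score(output: str, expected: str) -> float:
    """Strict scoring (illustrative): 1.0 only on an exact match
    after trimming whitespace and lowercasing, otherwise 0.0."""
    return 1.0 if output.strip().lower() == expected.strip().lower() else 0.0


def score_run(outputs: list[str], expected: list[str]) -> float:
    """Average the strict score over every item in a run."""
    return mean(strict_score(o, e) for o, e in zip(outputs, expected))


# Hypothetical evaluation data: expected answers and two runs' outputs.
expected = ["paris", "4", "blue"]
baseline_outputs = ["Paris", "five", "Blue "]
candidate_outputs = ["paris", "4", "blue"]

baseline = score_run(baseline_outputs, expected)
candidate = score_run(candidate_outputs, expected)

print(f"baseline:  {baseline:.2f}")
print(f"candidate: {candidate:.2f}")
```

A model-graded criterion would replace `strict_score` with a call to a judge model that returns a score, which is useful when several differently worded outputs can all be correct.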

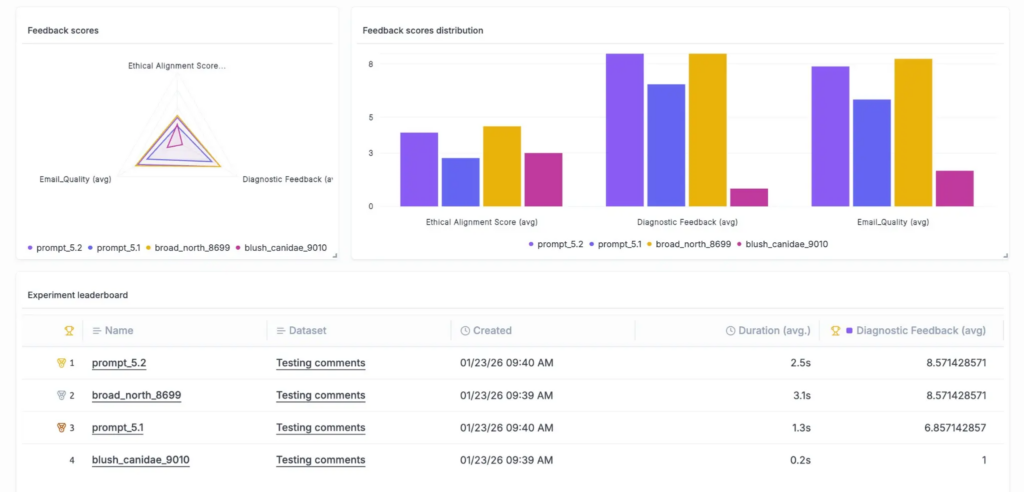

Custom Dashboard Improvements

Three new features in Opik’s custom dashboards make it easier for you to compare and analyze experiment performance at a glance.

- Experiment Leaderboard Widget: Rank experiments side by side to quickly identify your top-performing configurations.

- Grouped By Metrics Widget: Break down metrics by your choice of model, dataset, or version to analyze performance.

- Span-Level Metrics Charts: Visualize granular, end-to-end behavior with span-level metrics to understand how individual steps impact overall results.
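The leaderboard and grouped-metrics views described above boil down to two simple operations: sorting experiments by a metric, and averaging a metric within groups. The sketch below illustrates both in plain Python. It is not Opik code; the experiment records, field names, and helper functions (`leaderboard`, `group_mean`) are assumptions made for illustration.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical experiment results; the names and fields are illustrative only.
experiments = [
    {"name": "exp-a", "model": "model-x", "accuracy": 0.82},
    {"name": "exp-b", "model": "model-y", "accuracy": 0.91},
    {"name": "exp-c", "model": "model-x", "accuracy": 0.78},
]


def leaderboard(rows: list[dict], metric: str) -> list[dict]:
    """Rank experiments from best to worst on a single metric."""
    return sorted(rows, key=lambda row: row[metric], reverse=True)


def group_mean(rows: list[dict], key: str, metric: str) -> dict[str, float]:
    """Average a metric within each group, e.g. per model or per dataset."""
    groups: dict[str, list[float]] = defaultdict(list)
    for row in rows:
        groups[row[key]].append(row[metric])
    return {group: mean(values) for group, values in groups.items()}


ranked = leaderboard(experiments, "accuracy")
by_model = group_mean(experiments, "model", "accuracy")

for position, row in enumerate(ranked, start=1):
    print(position, row["name"], row["accuracy"])
print(by_model)
```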

Python & TypeScript SDK Improvements

The Python and TypeScript SDKs have received a round of improvements to make your evaluation workflows more reproducible and easier to automate.

Python & TypeScript

- Annotation Queue Support: Manage your human-in-the-loop workflows programmatically to gain more control over evaluation and feedback loops directly from your code.

- Dataset Versioning: You can now run reproducible experiments with full dataset version control, so you can track exactly how data changes affect your results.
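To show why pinning a dataset version makes experiments reproducible, here is a small conceptual sketch in plain Python. It is not the Opik SDK and does not describe how Opik stores versions; it assumes a hypothetical scheme in which a version identifier is derived from a hash of the dataset contents, so any change to the data yields a new identifier that can be recorded alongside an experiment.

```python
import hashlib
import json


def dataset_version(items: list[dict]) -> str:
    """Derive a short version ID from dataset contents (illustrative scheme).

    Items are serialized with sorted keys so the same data always
    produces the same ID, regardless of key order within an item.
    """
    canonical = json.dumps(items, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:12]


# Hypothetical dataset before and after adding one item.
v1_items = [{"input": "2+2", "expected": "4"}]
v2_items = v1_items + [{"input": "3+3", "expected": "6"}]

v1 = dataset_version(v1_items)
v2 = dataset_version(v2_items)

# Recording the version with the experiment ties each result to exact data.
experiment_record = {"experiment": "exp-a", "dataset_version": v1}

print(v1, v2, experiment_record)
```

With the version recorded, a later change in scores can be attributed either to the prompt or to the data, rather than to an unknown mix of both.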

TypeScript

- Opik Query Language: Use a SQL-like interface to search and filter prompts, traces, and threads with precision, making it easier to analyze the data you care about.

LLM Provider & Integrations

New providers and integrations expand Opik’s functionality, enabling you to evaluate and trace your AI applications without additional setup.

- Ollama Support: Opik now provides native Ollama support in the UI and playground, with automatic model discovery and compatibility across local, remote, and Docker instances.

- Claude Opus 4.6 Support: Opik now supports Claude Opus 4.6, Anthropic’s newest model, for evaluation and optimization.

- OpenAI Sora Integration: Log and track video generation outputs from OpenAI’s Sora model directly in Opik.

- Google Veo Integration: Full support for Google’s Veo video generation API, including automatic logging of video outputs and metadata.

- LangChain Tool Descriptions: Tool descriptions are now automatically captured within spans, providing you with clearer visibility into how your agents select and use tools.

Connect & Learn with Fellow AI Developers

Join us online and in person, connect with other AI builders, and grow your skills at our upcoming hackathons and community events.

- Global AI Agents Hackathon Final, February 20th – Virtual

- Lightning Session: The Agentic Lifecycle with Opik: Trace, Evaluate, Iterate, February 26th – Virtual

- Opik Virtual Learning Series: Live Paper Reading, March 19th – Virtual