The following is an adaptation of two talks I recently gave at the O’Reilly AI Conference and DroidCon in London. Slides are available at the end of this post.

Earlier this year, researchers at NVIDIA announced MegatronLM, a massive transformer model with 8.3 billion parameters (24 times larger than BERT) that achieved state-of-the-art performance on a variety of language tasks. While this was an undoubtedly impressive technical achievement, I couldn’t help but ask myself: is deep learning going in the right direction?

The parameters alone weigh in at just over 33 GB on disk. Training the final model took 512 V100 GPUs running continuously for 9.2 days. Given the power requirements per card, a back of the envelope estimate put the amount of energy used to train this model at over 3X the yearly energy consumption of the average American.

I don’t mean to single out this particular project. There are many examples of massive models being trained to achieve ever-so-slightly higher accuracy on various benchmarks. Despite being 24X larger than BERT, MegatronLM is only 34% better at its language modeling task. As a one-off experiment to demonstrate the performance of new hardware, there isn’t much harm here. But in the long term, this trend is going to cause a few problems.

First, it hinders democratization. If we believe in a world where millions of engineers are going to use deep learning to make every application and device better, we won’t get there with massive models that take large amounts of time and money to train.

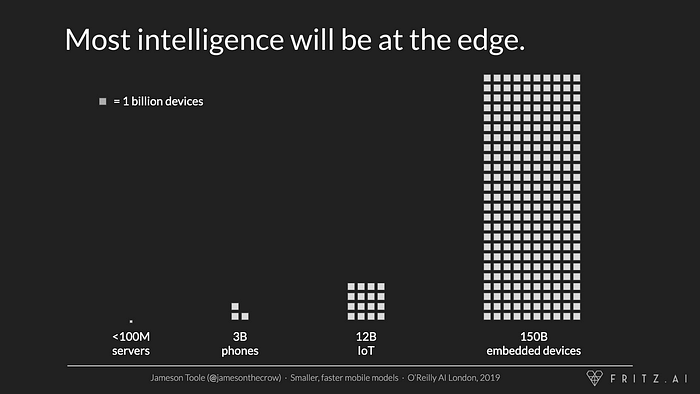

Second, it restricts scale. There are probably less than 100 million processors in every public and private cloud in the world. But there are already 3 billion mobile phones, 12 billion IoT devices, and 150 billion micro-controllers out there. In the long term, it’s these small, low power devices that will consume the most deep learning, and massive models simply won’t be an option.

To make sure deep learning lives up to its promise, we need to re-orient research away from state-of-the-art accuracy and towards state-of-the-art efficiency. We need to ask if models enable the largest number of people to iterate as fast as possible using the fewest amount of resources on the most devices.

The good news is that work is being done to make deep learning models smaller, faster, and more efficient. Early returns are incredible. Take, for example, one result from a 2015 paper by Han et al.

“On the ImageNet dataset, our method reduced the storage required by AlexNet by 35x, from 240MB to 6.9MB, without loss of accuracy. Our method reduced the size of VGG-16 by 49x from 552MB to 11.3MB, again with no loss of accuracy.”

To achieve results like this, we have to consider the entire machine learning lifecycle—from model selection to training to deployment. For the rest of this article, we’ll dive into those phases and look at ways to make smaller, faster, more efficient models.

Model Selection

The best way to end up with a smaller, more efficient model is to start with one. The graph above plots the rough size (in megabytes) of various model architectures. I’ve overlaid lines denoting the typical size of mobile applications (code and assets included), as well as the amount of SRAM that might be available in an embedded device.

The logarithmic scale on the Y-axis softens the visual blow, but the unfortunate truth is that the majority of model architectures are orders of magnitude too large for deployment anywhere but the larger corners of a datacenter.

Incredibly, the smaller architectures to the right don’t perform much worse than the large ones to the left. An architecture like VGG-16 (300–500MB) performs about as well as a MobileNet (20MB) model, despite being nearly 25X smaller.

What makes smaller architectures like MobileNet and SqueezeNet so efficient? Based on experiments by Iandola et al (SqueezeNet), Howard et al(MobileNetV3), and Chen et al (DeepLab V3), some answers lie in the macro- and micro-architectures of models.

Macro-architecture refers the types of layers used by a model and how they are arranged into modules and blocks. To produce efficient macro-architectures:

- Keep activation maps large by downsampling later or using atrous (dilated) convolutions

- Use more channels, but fewer layers

- Use skip connections and residual connections to improve accuracy and re-use parameters during calculation

- Replace standard convolutions with depthwise separable ones

A model’s micro-architecture is defined by choices related to individual layers. Best practices include:

- Making input and output blocks as efficient as possible, as they are typically 15–25% of a model’s computation cost

- Decreasing the size of convolution kernels

- Adding a width multiplier to control the number of channels per convolution with a hyperparameter, alpha

- Arranging layers so that parameters can be fused (e.g. bias and batch normalization)

Model Training

After a model architecture has been selected, there’s still a lot that can be done to shrink it and make it more efficient during training. In case it wasn’t already obvious, most neural networks are over-parameterized. Many trained weights have little impact on overall accuracy and can be removed. Frankle et al find that in many networks, 80–90% of network weights can be removed — along with most of the precision in those weights — with little loss in accuracy.

There are three main strategies for finding and removing these parameters: knowledge distillation, pruning, and quantization. They can be applied together or separately.

Knowledge Distillation

Knowledge distillation uses a larger “teacher” model to train a smaller “student” model. First conceived by Hinton et al in 2015, the keys to this technique are two loss terms: one for the hard predictions of the student model and a second based on the ability of the student to produce the same distribution of scores across all output classes.

Polino et al were able to achieve a 46X reduction in size for ResNet models trained on CIFAR10 with only 10% loss in accuracy, and a 2X reduction in size on ImageNet with only a 2% loss in accuracy. More recently, Jiao et al distilled BERT to create TinyBERT: 7.5X smaller, 9.4X faster, and only 3% less accurate. There are a few great open source libraries with implementations of distillation frameworks including Distiller and Distil* for transformers.

Pruning

The second technique to shrink models is pruning. Pruning involves assessing the importance of weights in a model and removing those that contribute the least to overall model accuracy. Pruning can be done at multiple scales in a network. The smallest models are achieved by pruning at the individual weight level. Weights with small magnitudes are set to zero. When models are compressed or stored in a sparse format, these zeros are very efficient to store.

Han et al use this approach to shrink common computer vision architectures by 9–13X with negligible changes in accuracy. Unfortunately, a lack of support for fast sparse matrix operations means that weight-level pruning doesn’t also increase runtime speeds.

To create models that are both smaller and faster, pruning needs to be done at filter or layer levels—for example, removing the filters of a convolution layer that contribute least to overall prediction accuracy. Models pruned at the filter level aren’t quite as small but are typically faster. Li et al were able to reduce the size and runtime of a VGG model by 34% with no loss in accuracy using this technique.

Finally, it’s worth noting that Liu et al have shown mixed results as to whether it’s better to start from a larger model and prune or train a smaller model from scratch.

Quantization

After a model has been trained, it needs to be prepared for deployment. Here, too, there are techniques to squeeze even more optimizations out of a model. Typically, the weights of a models are stored as 32-bit floating point numbers, but for most applications, this is far more precision than necessary. We can save space and (sometimes) time by quantizing these weights, again with minimal impact on accuracy.

Quantization maps each floating point weight to a fixed precision integer containing fewer bits than the original. While there are a number of quantization techniques, the two most important factors are the bit depth of the final model and whether weights are quantized during or after training (quantization-aware training and post-training quantization, respectively).

Finally, it’s important to quantize both weights and activations to speed up model runtime. Activation functions are mathematical operations that will naturally produce floating point numbers. If these functions aren’t modified to produce quantized outputs, models can even run slower due to the necessary conversion.

In a fantastic review, Krishnamoorthi tests a number of quantization schemes and configurations to provide a set of best practices:

Results:

- Post-training can generally be applied down to 8 bits, resulting in 4X smaller models with <2% accuracy loss

- Training-aware quantization allows a reduction of bit depth to 4 or 2 bits (8–16X smaller models) with minimal accuracy loss

- Quantizing weights and activations can result in a 2–3X speed increase on CPUs

Deployment

A common thread among these techniques is that they generate a continuum of models, each with different shapes, sizes, and accuracies. While this creates a bit of a management and organization problem, it maps nicely onto the wide variety of hardware and software conditions models will face in the wild.

The graph above shows the runtime speed of a MobileNetV2 model across various smartphones. There can be an 80X speed difference between the lowest and highest end devices. In order to deliver users a consistent experience, it’s important to put the right model on the right device. This means training multiple models and deploying them to different devices based on available resources.

Typically, the best on-device performance is achieved by:

- Using native formats and frameworks (e.g Core ML on iOS and TFLite on Android)

- Leveraging any available accelerators like GPUs or DSPs by using supported operations only

- Monitoring performance across devices, identifying model bottlenecks, and iterating architectures for specific hardware

Putting it all together

By applying these techniques, it’s possible to shrink and speed up most models by at least an order of magnitude. To quote just a few papers discussed thus far:

- “TinyBERT is empirically effective and achieves comparable results with BERT in GLUE datasets, while being 7.5x smaller and 9.4x faster on inference.” — Jiao et al

- “Our method reduced the size of VGG-16 by 49x from 552MB to 11.3MB, again with no loss of accuracy.” — Han et al

- “The model itself takes up less than 20KB of Flash storage space … and it only needs 30KB of RAM to operate.” — Peter Warden at TensorFlow Dev Summit 2019

To prove that it can be done by mere mortals, I took the liberty of creating a tiny 17KB style transfer model that contains just 11,686 parameters, yet still produces results that look as good as a 1.6 million parameter model.

I am consistently floored that results like this are easily achievable, yet aren’t done as a standard process in every paper. If we don’t change our practices, I worry we’ll waste time, money, and resources, while failing to bring deep learning to applications and devices that could benefit from it.

The good news, though, is that the marginal benefits of bigger models seem to be falling, and thanks to the techniques outlined here, we can make optimizations to size and speed that don’t sacrifice much accuracy. We can have our cake and eat it, too.

Some open questions about what’s next

Thus far, I believe we’ve only scratched the surface of what’s possible in terms of model optimization. With more research and experimentation, I think it’s possible to go even further. To that end, here are some areas that I think are ripe for additional work:

- Better framework support for quantized operations and quantized-aware training

- A more rigorous study of model optimization vs task complexity

- Additional work to determine the usefulness of platform-aware neural architecture search

- Continued investment in a multi-level intermediate representation (MLIR)

Additional Resources

- Distiller — A library for optimizing PyTorch models

- TensorFlow Model Optimization Toolkit

- Keras Tuner — Hyperparameter optimization for Keras

- TinyML — Group dedicated to embedded ML