Medical image analysis involves extracting valuable information from various imaging modalities like X-rays, CT scans, MRI, ultrasound, and PET scans. These images aid in disease diagnosis, treatment planning, and monitoring patient progress.

Deep learning automates and improves medical image analysis. Convolutional neural networks (CNNs), whose layered design is loosely inspired by the human visual system, can learn complex patterns and features from large datasets.

Fundamentals of Deep Learning in Medical Image Analysis

Deep learning systems automate the analysis of complex imaging data, often with high accuracy, and have reshaped medical image analysis.

Convolutional Neural Networks (CNNs)

Deep learning in medical image analysis relies heavily on CNNs. These networks are designed for high-dimensional image data and for learning complex features and patterns. By employing a series of convolutional, pooling, and fully connected layers, CNNs can automatically extract meaningful representations from medical images.

The convolutional layers of a CNN apply filters or kernels to the input image, convolving them across the image to capture local patterns. These local patterns combine to form more complex and abstract features in subsequent layers. Pooling layers reduce the spatial dimensions of the feature maps, allowing the network to focus on the most salient information. Finally, fully connected layers aggregate the learned features and perform classification or regression tasks.
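The convolution and pooling steps described above can be sketched in a few lines of plain Python. This is a minimal illustration rather than a production implementation: the 5x5 toy image, the `edge_kernel` values, and the function names `conv2d` and `max_pool2d` are assumptions made for the example, and a real pipeline would rely on a deep learning framework with learned filters.

```python
def conv2d(image, kernel):
    """Valid (no padding) 2D cross-correlation, the operation CNN layers call convolution."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


def max_pool2d(feature_map, size=2):
    """Non-overlapping max pooling; rows/columns that do not fill a window are dropped."""
    out_h = len(feature_map) // size
    out_w = len(feature_map[0]) // size
    return [
        [
            max(feature_map[r * size + i][c * size + j]
                for i in range(size) for j in range(size))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


# Toy 5x5 "image": dark (0) in the first two columns, bright (1) in the rest.
image = [[0, 0, 1, 1, 1] for _ in range(5)]

# Hypothetical 2x2 filter that responds to a dark-to-bright vertical edge.
edge_kernel = [[-1, 1],
               [-1, 1]]

feature_map = conv2d(image, edge_kernel)   # 4x4 map of local edge responses
pooled = max_pool2d(feature_map, size=2)   # 2x2 summary keeping the strongest responses
```

The feature map is nonzero only where the filter straddles the edge, and pooling keeps that response while halving each spatial dimension.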

Training and Learning from Data

Deep learning models are trained on large labeled datasets of medical images to learn the relationships between input images and their corresponding annotations. The training process involves forward propagation, where the input images pass through the network, and the predicted outputs are compared to the ground truth labels. Backpropagation is then used to compute gradients and update the network’s parameters, iteratively improving its performance.
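The training loop described above can be illustrated with the smallest possible model, a single logistic unit. The sketch below is an assumption-laden toy: the `features` and `labels` values are made up, each "image" is reduced to one scalar, and the learning rate and epoch count are arbitrary. It only shows the forward pass, the comparison with ground truth, and the gradient update.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


# Hypothetical toy data: one scalar feature per image and a binary ground-truth label.
features = [0.1, 0.3, 0.4, 0.6, 0.8, 0.9]
labels = [0, 0, 0, 1, 1, 1]

weight, bias = 0.0, 0.0
learning_rate = 0.5
losses = []

for epoch in range(200):
    grad_w = grad_b = total_loss = 0.0
    for x, y in zip(features, labels):
        # Forward propagation: compute the predicted probability.
        p = sigmoid(weight * x + bias)
        # Compare the prediction with the ground truth (binary cross-entropy).
        total_loss += -(y * math.log(p) + (1 - y) * math.log(1 - p))
        # Backpropagation: for this loss, d(loss)/d(pre-activation) = p - y.
        grad_w += (p - y) * x
        grad_b += (p - y)
    n = len(features)
    # Gradient descent step on the averaged gradients.
    weight -= learning_rate * grad_w / n
    bias -= learning_rate * grad_b / n
    losses.append(total_loss / n)
```

Inspecting `losses` shows the average loss falling from its starting value as the parameters are updated, which is the iterative improvement the paragraph refers to.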

Large labeled datasets have played a crucial role in training deep learning models for medical image analysis. These include disease-specific medical datasets as well as ImageNet, a publicly available natural-image dataset that is widely used for pretraining. Such large-scale datasets allow deep learning models to learn rich representations of global and local image information, contributing to accurate and robust results.

Transfer Learning and Pretrained Models

Transfer learning is a powerful technique in medical image analysis. Pre-trained models, which are deep learning models trained on large-scale datasets such as ImageNet, can be leveraged to initialize the weights of a model in the target medical imaging task. By utilizing the learned representations from general image features, transfer learning enables the practical adaptation of deep learning models to specific medical image analysis tasks, even with limited labeled medical image datasets.

Transfer learning offers several advantages, including reduced training time and improved performance, particularly when the target medical imaging task has limited labeled data. Fine-tuning, a process where pre-trained models are further trained on task-specific data, allows the model to adapt and refine its representations to the specific medical imaging domain.
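One common form of transfer learning keeps the pretrained layers frozen and trains only a new output layer on the target data. The sketch below illustrates that variant under heavy simplification: `PRETRAINED_FILTERS` is a made-up stand-in for a real pretrained backbone, the 4-pixel "images" and labels are hypothetical, and full fine-tuning (which would also update the backbone) is not shown.

```python
import math

# Stand-in for a pretrained backbone. The values are invented for illustration;
# in practice they would come from a network trained on a large dataset.
PRETRAINED_FILTERS = (
    (1.0, -1.0, 0.0, 0.0),
    (0.0, 0.0, 1.0, -1.0),
)


def extract_features(pixels):
    """Frozen backbone: linear filters followed by ReLU. Never updated below."""
    return [max(0.0, sum(w * p for w, p in zip(f, pixels)))
            for f in PRETRAINED_FILTERS]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


# Small hypothetical target dataset with binary labels.
target_images = [
    [1.0, 0.0, 0.0, 0.0],
    [0.9, 0.1, 0.0, 0.0],
    [0.0, 0.0, 1.0, 0.0],
    [0.0, 0.0, 0.8, 0.1],
]
target_labels = [1, 1, 0, 0]

# Only the new classification head is trainable.
head_weights = [0.0, 0.0]
head_bias = 0.0
learning_rate = 0.5

for _ in range(300):
    for pixels, label in zip(target_images, target_labels):
        feats = extract_features(pixels)
        prob = sigmoid(sum(w * f for w, f in zip(head_weights, feats)) + head_bias)
        error = prob - label  # gradient of cross-entropy w.r.t. the pre-activation
        head_weights = [w - learning_rate * error * f
                        for w, f in zip(head_weights, feats)]
        head_bias -= learning_rate * error


def predict(pixels):
    feats = extract_features(pixels)
    score = sum(w * f for w, f in zip(head_weights, feats)) + head_bias
    return 1 if score > 0 else 0


predictions = [predict(pixels) for pixels in target_images]
```

Because only three numbers are learned, four labeled examples are enough here, which mirrors why reusing pretrained features helps when labeled medical data is limited.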

Interpretability and Explainability

One challenge with deep learning models in medical image analysis is their black-box nature: it can be difficult to understand why a model made a particular prediction. Interpreting and explaining the decisions of deep learning models is crucial for building trust and confidence in their application.

Researchers are actively developing model interpretability and explainability techniques in medical image analysis. Methods such as attention mechanisms, saliency maps, and gradient-based visualization provide insights into which regions of the image contribute most to the model’s decision. Healthcare professionals can gain valuable insights and make informed decisions by understanding the reasoning behind the model’s predictions.
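To make the idea of a saliency map concrete, the sketch below uses occlusion sensitivity, a perturbation-based alternative to the gradient-based methods mentioned above: each region is masked in turn and the drop in the model's score is recorded. The function name `occlusion_saliency`, the `toy_score` stand-in model, and the 4x4 image are assumptions for illustration only.

```python
def occlusion_saliency(image, score_fn, patch=1, fill=0.0):
    """Score drop when each patch is replaced by `fill`; a larger drop marks a more influential region."""
    base_score = score_fn(image)
    height, width = len(image), len(image[0])
    saliency = [[0.0] * width for _ in range(height)]
    for top in range(0, height, patch):
        for left in range(0, width, patch):
            rows = range(top, min(top + patch, height))
            cols = range(left, min(left + patch, width))
            occluded = [row[:] for row in image]  # copy so the input is untouched
            for r in rows:
                for c in cols:
                    occluded[r][c] = fill
            drop = base_score - score_fn(occluded)
            for r in rows:
                for c in cols:
                    saliency[r][c] = drop
    return saliency


def toy_score(image):
    """Hypothetical stand-in model whose score depends only on the central 2x2 region."""
    return sum(image[i][j] for i in (1, 2) for j in (1, 2))


image = [[1.0] * 4 for _ in range(4)]
saliency = occlusion_saliency(image, toy_score)
```

The resulting map is nonzero only in the central region, which is exactly the part of the image the toy model uses. With a real network, `score_fn` would return the predicted probability for the class of interest.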

Current Trends in Deep Learning for Medical Image Analysis

Deep learning has reshaped medical image interpretation and analysis. This section discusses current deep learning trends in the field.

Disease Diagnosis and Classification

Deep learning models have demonstrated remarkable success in disease diagnosis and classification tasks. Learning from large annotated datasets allows these models to identify patterns and features indicative of specific diseases within medical images.

Deep learning algorithms have been shown to detect lung cancer nodules in CT scans, diabetic retinopathy in retinal images, and breast cancer in mammograms with high accuracy. Deep learning can automate and improve disease diagnosis, helping healthcare practitioners make better decisions.

Image Segmentation and Localization

Accurate image segmentation and localization are crucial in medical image analysis for precise delineation of organs, tumors, and abnormalities. Deep learning models, particularly fully convolutional networks (FCNs), have gained popularity in these tasks. FCNs leverage skip connections and upsampling layers to generate pixel-wise segmentation masks, providing detailed information about structures’ spatial extent and boundaries within medical images.

Deep learning applications in image segmentation include brain tumor segmentation in MRI scans, lung nodule segmentation in CT scans, and cardiac structure segmentation in echocardiography. These techniques enable improved visualization, surgical planning, and treatment response assessment.

Quantitative Image Analysis

Deep learning enables quantitative analysis of medical images, extracting meaningful measurements and biomarkers. For example, deep learning models can estimate tumor volume, measure disease progression over time, or assess treatment response by quantifying changes in medical images.
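As an example of such a measurement, tumor volume can be derived from a binary segmentation mask by counting voxels and multiplying by the physical voxel size. The sketch below assumes the mask has already been produced by a segmentation model. The masks, the voxel spacing, and the function name `mask_volume_ml` are hypothetical.

```python
def mask_volume_ml(mask, spacing_mm):
    """Volume of a binary 3D mask in millilitres.

    mask: nested lists indexed [slice][row][col] with 0/1 entries.
    spacing_mm: (dz, dy, dx) physical voxel size in millimetres.
    """
    voxel_count = sum(v for slice_ in mask for row in slice_ for v in row)
    dz, dy, dx = spacing_mm
    voxel_volume_mm3 = dz * dy * dx
    return voxel_count * voxel_volume_mm3 / 1000.0  # 1 mL = 1000 mm^3


# Hypothetical masks of the same lesion at two time points (2 slices of 3x3 voxels).
baseline_mask = [
    [[0, 1, 1], [1, 1, 1], [0, 1, 0]],
    [[0, 1, 0], [1, 1, 1], [0, 0, 0]],
]
followup_mask = [
    [[0, 1, 0], [1, 1, 1], [0, 0, 0]],
    [[0, 0, 0], [1, 1, 0], [0, 0, 0]],
]
spacing_mm = (5.0, 1.0, 1.0)  # assumed slice thickness and in-plane resolution

baseline_ml = mask_volume_ml(baseline_mask, spacing_mm)
followup_ml = mask_volume_ml(followup_mask, spacing_mm)
relative_change = (followup_ml - baseline_ml) / baseline_ml
```

Comparing the two volumes gives a simple, objective number for treatment response. Here the segmented volume shrinks by 40 percent between the two scans.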

This quantitative information assists clinicians in making objective assessments, monitoring disease progression, and tailoring treatment plans. Deep learning-based radiomics approaches also leverage extracted image features to predict patient outcomes, prognosis, and personalized treatment strategies.

Multimodal Fusion and Integration

Medical imaging often involves multiple modalities, such as combining CT and MRI scans or incorporating imaging data with clinical information. Deep learning facilitates the fusion and integration of multimodal data, enabling comprehensive analysis.

By leveraging deep neural networks, these approaches capture complementary information from different modalities, improving accuracy and providing a more holistic understanding of complex diseases. Multimodal fusion techniques find applications in areas such as neuroimaging, where combining structural and functional imaging data enhances the study of brain connectivity and neurodegenerative diseases.

Adversarial Learning and Generative Models

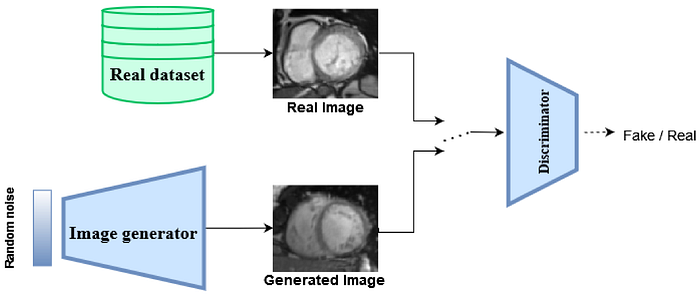

Adversarial learning, in the form of generative adversarial networks (GANs), has emerged as a powerful technique in medical image analysis. GANs can generate synthetic medical images that resemble real patient data, augmenting limited datasets and helping to preserve privacy.

GANs can also assist in data synthesis for rare conditions, training robust models, and simulating realistic medical imaging scenarios for algorithm development and validation.

Challenges and Limitations

Despite the tremendous progress made in deep learning for medical image analysis, several challenges and limitations persist. Recognizing and addressing these issues is essential to ensure the responsible and practical application of deep learning models in healthcare.

Data Scarcity and Quality Issues in Medical Imaging

One significant challenge in medical image analysis is the scarcity of labeled data, especially for rare diseases or specific patient populations. Deep learning models rely heavily on large annotated datasets to learn accurate representations and make reliable predictions. The limited availability of labeled medical images can hinder the development and generalizability of deep learning models.

Moreover, the quality and variability of medical imaging data pose additional challenges. Factors such as imaging protocols, equipment variations, noise, artifacts, and inter-observer variability can affect the performance and generalizability of deep learning models. Ensuring the collection of high-quality, diverse, and representative datasets remains a crucial area of focus to enhance the robustness and reliability of deep learning models in medical image analysis.

Interpretability and Explainability Challenges of Deep Learning Models

Deep learning models are often considered black boxes, which makes their decisions difficult to interpret and explain. This lack of transparency raises concerns in critical healthcare applications where understanding the reasoning behind a model’s predictions is crucial. Explaining the basis of deep learning models’ decisions is a complex task and an active area of research.

Efforts are being made to develop interpretability techniques that provide insights into deep learning models’ learned features and decision-making processes. By addressing the interpretability and explainability challenges, healthcare professionals can trust and validate the outputs of deep learning models, improving their acceptance and integration into clinical practice.

Ethical Considerations and Potential Biases in Medical Image Analysis

The ethical implications of deep learning in medical image analysis must be considered. In particular, potential biases in the training data and their impact on the model’s performance need careful examination. Biases in data collection, annotation, and patient populations can introduce disparities in the accuracy and fairness of deep learning models.

Ensuring diversity and inclusivity in training datasets and rigorous validation and testing across different patient populations are crucial steps to mitigate biases in medical image analysis. Additionally, ethical considerations concerning patient privacy, informed consent, data security, and regulatory compliance must be carefully addressed when deploying deep learning models in healthcare settings.
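Testing across patient populations can start with something as simple as reporting a metric per subgroup instead of one pooled number. The sketch below computes sensitivity (the fraction of true positives that the model detects) for each group. The group names, the records, and the function name `sensitivity_by_group` are invented for illustration.

```python
from collections import defaultdict


def sensitivity_by_group(records):
    """Per-group sensitivity, TP / (TP + FN), from (group, true_label, predicted_label) tuples.

    Labels are binary (1 = disease present). Groups without positive cases are omitted.
    """
    true_positives = defaultdict(int)
    positives = defaultdict(int)
    for group, y_true, y_pred in records:
        if y_true == 1:
            positives[group] += 1
            if y_pred == 1:
                true_positives[group] += 1
    return {group: true_positives[group] / positives[group] for group in positives}


# Hypothetical evaluation records from two acquisition sites.
records = [
    ("site_A", 1, 1), ("site_A", 1, 1), ("site_A", 1, 1), ("site_A", 1, 1),
    ("site_A", 0, 0), ("site_A", 0, 1),
    ("site_B", 1, 1), ("site_B", 1, 0), ("site_B", 1, 1), ("site_B", 1, 0),
    ("site_B", 0, 0), ("site_B", 0, 0),
]

per_group = sensitivity_by_group(records)
gap = max(per_group.values()) - min(per_group.values())
```

In this made-up data the model detects every positive case at one site but only half at the other, a disparity that a single pooled sensitivity of 0.75 would hide.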

Addressing these challenges and limitations requires collaborative efforts from researchers, healthcare professionals, policymakers, and regulatory bodies. By fostering transparency, promoting data-sharing initiatives, developing robust evaluation standards, and adhering to ethical guidelines, the potential of deep learning in medical image analysis can be harnessed responsibly and effectively.

Future Directions and Emerging Technologies in Deep Learning for Medical Image Analysis

As deep learning continues to evolve, several exciting future directions and emerging technologies hold immense promise for advancing medical image analysis. This section will explore some of these directions and technologies, highlighting their potential impact on the field.

Explainable AI and Interpretability

The decision-making process of deep learning models is often opaque, which makes it difficult to interpret their outputs on medical images. Researchers are working to make deep learning models more transparent and interpretable so healthcare providers can trust their predictions.

This includes the development of attention mechanisms, saliency maps, and visualization techniques that provide insights into the specific regions of interest within medical images that contribute to the model’s decision. Enhancing interpretability will facilitate clinical decision-making and help address regulatory and ethical considerations in adopting deep learning models in healthcare.

Federated Learning and Privacy Preservation

Privacy preservation is a critical concern when working with sensitive medical data. Federated learning addresses this concern by training deep learning models directly on local devices or data centers without sharing patient-specific data.

Instead of centralizing data, federated learning allows models to be trained collaboratively on distributed data sources, such as hospitals or research institutions. This decentralized approach maintains data privacy while leveraging the collective knowledge from diverse datasets. Federated learning has the potential to unlock the power of large-scale medical imaging datasets while preserving patient privacy, enabling robust and generalizable deep learning models.
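The aggregation step at the heart of this approach can be sketched with federated averaging (FedAvg), one widely used scheme; the text above does not name a specific algorithm, so this choice is an assumption. Each site trains locally and sends only its model parameters, which a coordinator averages weighted by local dataset size. The parameter vectors and dataset sizes below are made up.

```python
def federated_average(client_weights, client_sizes):
    """Average parameter vectors, weighting each client by its number of training samples."""
    total = sum(client_sizes)
    n_params = len(client_weights[0])
    return [
        sum(weights[k] * size for weights, size in zip(client_weights, client_sizes)) / total
        for k in range(n_params)
    ]


# Hypothetical parameters after one round of local training at three hospitals.
hospital_weights = [
    [1.0, 2.0],
    [3.0, 4.0],
    [5.0, 6.0],
]
hospital_sizes = [100, 100, 200]  # local dataset sizes; the images never leave each site

global_weights = federated_average(hospital_weights, hospital_sizes)
```

Only the parameter vectors cross institutional boundaries in this sketch. A real deployment would repeat the local-training and averaging rounds many times and would typically add further safeguards, since model updates can still leak information.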

Continual Learning and Lifelong Adaptation

Medical image analysis is a dynamic field where new imaging techniques, diseases, and clinical challenges continuously emerge. Continual learning aims to enable deep learning models to adapt and learn incrementally over time, incorporating new knowledge while preserving previously learned information.

Lifelong adaptation allows models to continuously update their representations and adapt to new imaging modalities, patient populations, and disease characteristics. By fostering lifelong learning capabilities, deep learning models can stay up-to-date with the latest advancements and provide accurate analysis in evolving healthcare scenarios.

Reinforcement Learning and Clinical Decision Support

Reinforcement learning, a branch of machine learning, can enhance clinical decision support systems in medical image analysis. By using reinforcement learning techniques, models can learn optimal decision-making policies based on rewards or feedback from healthcare professionals.

These models can assist in treatment planning, disease management, and personalized interventions. Reinforcement learning also allows for exploring adaptive imaging protocols, where imaging parameters can be dynamically adjusted based on patient-specific characteristics, optimizing image quality and reducing radiation exposure.

Integration with Electronic Health Records (EHR) and Clinical Data

Integrating deep learning models with electronic health records (EHR) and clinical data offers the opportunity to leverage comprehensive patient information for enhanced medical image analysis. By incorporating clinical data such as patient demographics, medical history, and laboratory results, deep learning models can account for individual patient characteristics and improve diagnostic accuracy. The fusion of imaging and clinical data holds the potential for more personalized and precise healthcare interventions, enabling tailored treatment strategies and improved patient outcomes.
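One simple way to combine the two sources is feature-level fusion: the image features produced by a network are concatenated with scaled clinical variables, and the joint vector is passed to a downstream classifier. The sketch below is illustrative only. The field names (`age_years`, `smoker`, `crp_mg_per_l`), the scaling ranges, and the function name `fuse_features` are assumptions, not part of any EHR standard.

```python
def min_max_scale(value, low, high):
    """Map a value from the assumed range [low, high] onto [0, 1]."""
    return (value - low) / (high - low)


def fuse_features(image_features, clinical):
    """Concatenate image-derived features with scaled clinical variables."""
    clinical_vector = [
        min_max_scale(clinical["age_years"], 0, 100),      # assumed age range
        1.0 if clinical["smoker"] else 0.0,                # binary history flag
        min_max_scale(clinical["crp_mg_per_l"], 0, 200),   # assumed lab value range
    ]
    return list(image_features) + clinical_vector


# Hypothetical inputs: two features from an imaging model plus one patient record.
image_features = [0.2, 0.7]
patient = {"age_years": 50, "smoker": True, "crp_mg_per_l": 20}

fused = fuse_features(image_features, patient)
```

Scaling the clinical values to a common range keeps any single variable from dominating the fused vector purely because of its units.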

Future advancements in deep learning for medical image analysis are expected to improve interpretability, preserve privacy, facilitate lifelong adaptation, enable clinical decision support, and integrate imaging data with clinical information. These developing technologies and directions could transform medical image analysis, improving patient care and healthcare delivery.

Deep learning in medical image analysis can improve healthcare outcomes, disease identification, treatment options, and personalized medicine, as discussed here. By leveraging the wealth of information contained within medical images, deep learning can empower healthcare professionals with powerful tools to enhance patient care and transform the field of medical imaging.

Unleashing the Power of Deep Learning: Transforming Medical Image Analysis for Personalized and Efficient Healthcare

Deep learning in medical image analysis represents a remarkable convergence of technology and healthcare, opening new frontiers in diagnosis, treatment planning, and patient management. The ongoing advancements and future directions discussed in this article provide a glimpse into the exciting possibilities.

By embracing these innovations, we can unlock the full potential of deep learning and shape a future where medical image analysis plays a pivotal role in delivering personalized, precise, and efficient healthcare.