Building and scaling GenAI applications involves numerous moving parts, from logging your first LLM trace to managing experiments across complex agentic systems. The latest Opik updates make that process faster and clearer with expanded model support, enhanced integrations, experiment grouping, new quick-start options, and powerful new search and filtering capabilities. Read on for a breakdown of each new feature and how you can use it in your projects.

Model Provider & Integration Updates

Opik now offers more extensive model support and enhanced integration features, providing the flexibility you need when building AI applications.

- Model Providers: 144 newly supported models, including OpenAI’s new GPT-5, Grok 4, DeepSeek v3, Qwen 3, Anthropic Claude Opus 4.1, and more

- LangChain Integration: Improved provider and model logging functionality

- Google ADK Integration: Improved graph-building capabilities

- Bedrock Integration: Addition of comprehensive cost tracking for ChatBedrock and ChatBedrockConverse

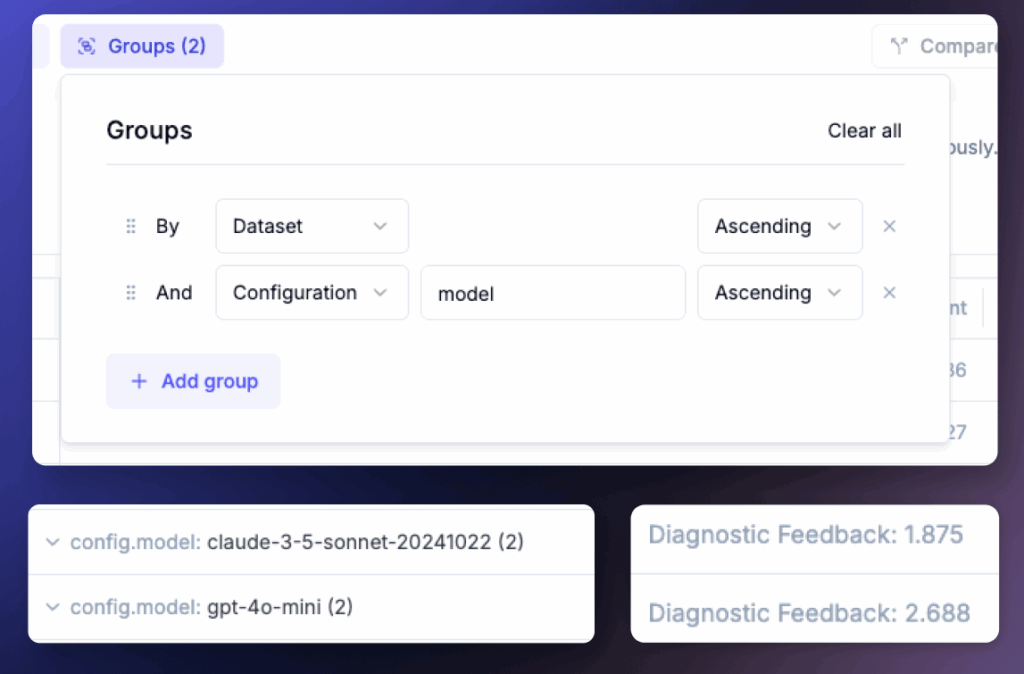

LLM Experiment Grouping

You can now identify patterns across hundreds of experiments without manual filtering or combing through spreadsheets. The new Group by feature instantly organizes results by your chosen metadata, such as model, provider, tool usage, or duration, and shows aggregated statistics for each group. You can quickly uncover your best model for a golden dataset, spot parameter combinations that increase inference time, and compare model providers to see which one yields the strongest results.

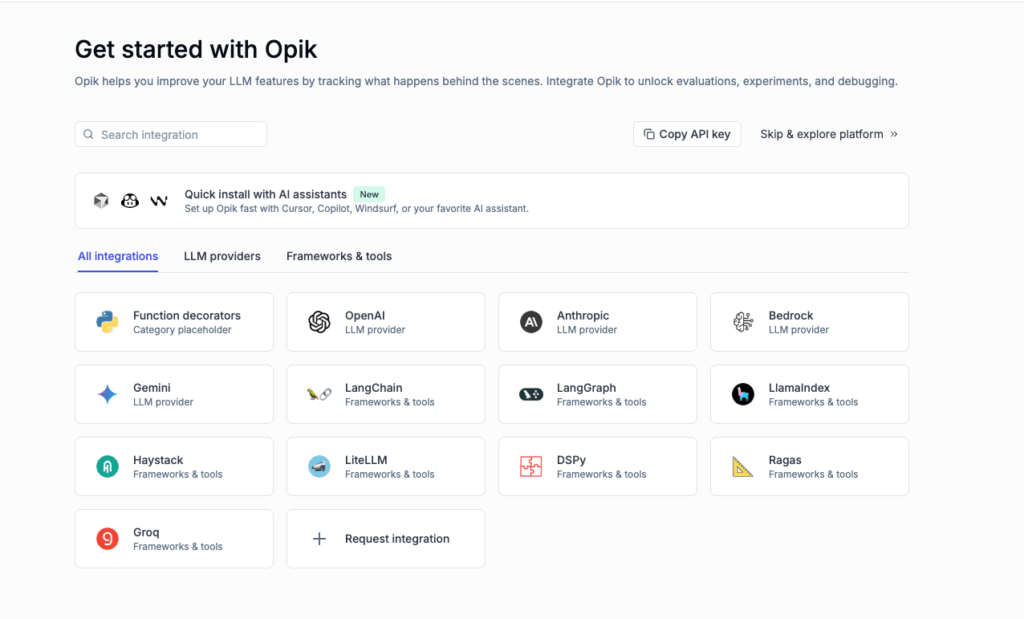
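To make the idea behind grouping concrete, here is a minimal sketch in plain Python of what "group by metadata, then aggregate" means. It does not use the Opik SDK or reflect Opik's internal schema; the record fields (`model`, `provider`, `accuracy`, `duration_s`), the sample values, and the helper `group_and_aggregate` are all hypothetical and exist only for illustration.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical experiment records; field names and values are illustrative,
# not Opik's actual schema or real results.
experiments = [
    {"name": "exp-1", "model": "model-a", "provider": "provider-x", "accuracy": 0.82, "duration_s": 12.0},
    {"name": "exp-2", "model": "model-a", "provider": "provider-x", "accuracy": 0.86, "duration_s": 14.0},
    {"name": "exp-3", "model": "model-b", "provider": "provider-y", "accuracy": 0.91, "duration_s": 20.0},
    {"name": "exp-4", "model": "model-b", "provider": "provider-y", "accuracy": 0.89, "duration_s": 22.0},
]


def group_and_aggregate(records, key, metrics):
    """Group records by one metadata key and average each metric per group."""
    groups = defaultdict(list)
    for record in records:
        groups[record[key]].append(record)
    return {
        group: {
            "count": len(members),
            **{metric: mean(m[metric] for m in members) for metric in metrics},
        }
        for group, members in groups.items()
    }


# Group by model and compare average accuracy and duration per group.
summary = group_and_aggregate(experiments, key="model", metrics=["accuracy", "duration_s"])

# Pick the group with the highest average accuracy.
best_model = max(summary, key=lambda group: summary[group]["accuracy"])

for group, stats in summary.items():
    print(group, stats)
print("best by accuracy:", best_model)
```

Changing `key` to `"provider"` would produce the same kind of summary per provider, which mirrors the comparison across model providers described above.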

Logging Your First Trace

Logging your first trace in Opik is now simpler than ever. Use the new integration and framework search to get one-click setup instructions for your model provider or integration. With the AI-assisted install, you can paste a prompt into tools like Cursor or Copilot to configure Opik automatically. You also get instant access to collaborate with teammates or request support via Slack.

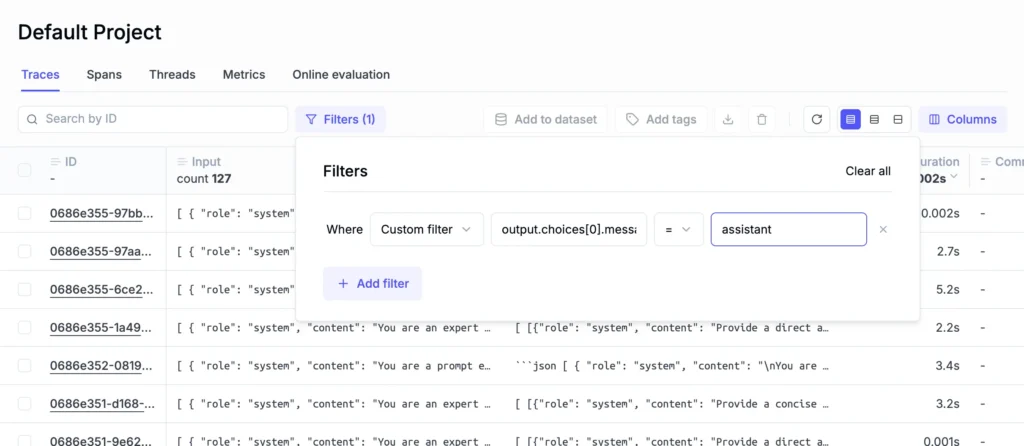

Advanced Filtering and Search Capabilities

You can now use an extensive range of search, sorting, and filtering capabilities that make it easier to find insights across Opik. Create custom filters on the input and output fields of traces and spans for precise data filtering. Search within code blocks for more relevant results. Filter your experiments by dataset or prompt ID, and avoid unwanted crashes with the crash filtering feature, which supports values containing special characters.
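As a rough illustration of filtering on input and output fields, the plain-Python sketch below matches a search value as a literal substring, so characters such as brackets or parentheses are treated as ordinary text. This is not Opik's filter API or implementation; the trace records, their field names, and the helper `filter_traces` are hypothetical.

```python
# Hypothetical trace records; field names are illustrative, not Opik's schema.
traces = [
    {"id": "t1", "input": "What is 2+2?", "output": "4"},
    {"id": "t2", "input": "Summarize the file app[1].txt", "output": "Log summary"},
    {"id": "t3", "input": "Translate 'hello'", "output": "hola (es)"},
]


def filter_traces(records, field, needle):
    """Keep records whose field contains needle as a literal, case-insensitive substring."""
    needle = needle.lower()
    return [r for r in records if needle in str(r.get(field, "")).lower()]


# Brackets and parentheses are matched literally, not interpreted as patterns.
matches = filter_traces(traces, "input", "app[1]")
print([t["id"] for t in matches])
```

Matching literally rather than with a pattern language is one simple way to keep values with special characters from causing errors in a filter.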

Insights From the Comet Team

Pretraining: Breaking Down the Modern LLM Training Pipeline

The line between pretraining and fine-tuning in LLMs is increasingly blurred, making it harder to define what “training” means today. Abigail Morgan, Developer Advocate at Comet, delves into the evolving methods, inconsistent terminology, and opaque pipelines that complicate understanding model behavior, while emphasizing the critical role of pretraining and data curation to scale LLMs responsibly.

Beyond Vibe Coding: AI-Assisted Coding with Cursor AI and Opik

Discover how a senior software engineer navigates the pros and cons of vibe coding, exploring how to integrate prompt engineering, evaluation, and trace logging with proven developer best practices. The result is a workflow that leverages the strengths of AI coding tools while maintaining human guidance for long-term scalability and maintainability.

Connect & Learn with Fellow GenAI & ML Developers

Join us live in the coming weeks at the following events for conferences, workshops, prizes, and opportunities to connect with fellow AI builders:

- AI Tinkerers Demo Day – NYC, August 26th 2025

- MLOps World GenAI Summit 2025 – Austin, TX, October 7th-9th 2025