-

Context Window: What It Is and Why It Matters for AI Agents

Your AI customer support agent successfully handles 47 steps of a complex return request, from retrieving order details, checking inventory,…

-

Announcing the Future of AI Engineering: Self-Optimizing Agents

When ChatGPT launched in 2022, generative AI seemed like magic. With a simple API call and prompt, any application could…

-

Agent Orchestration Explained

The moment an LLM can decide which tool to call next, you’ve crossed a threshold. You’ve moved from building a…

-

LLM Testing: A Complete Guide for Application Developers

In July 2025, tech founder Jason Lemkin watched in horror as an AI coding assistant deleted a live company database,…

-

AI Agents: The Definitive Guide to Agentic Systems and How to Build Them for Production

What if your AI system was more than a chatbot? What if it could book flights, debug code, or process…

-

Human-in-the-Loop Review Workflows for LLM Applications & Agents

You’ve been testing a new AI assistant. It sounds confident, reasons step-by-step, cites sources, and handles 90% of real user…

-

Best LLM Observability Tools of 2025: Top Platforms & Features

LLM applications are everywhere now, and they’re fundamentally different from traditional software. They’re non-deterministic. They hallucinate. They can fail in…

-

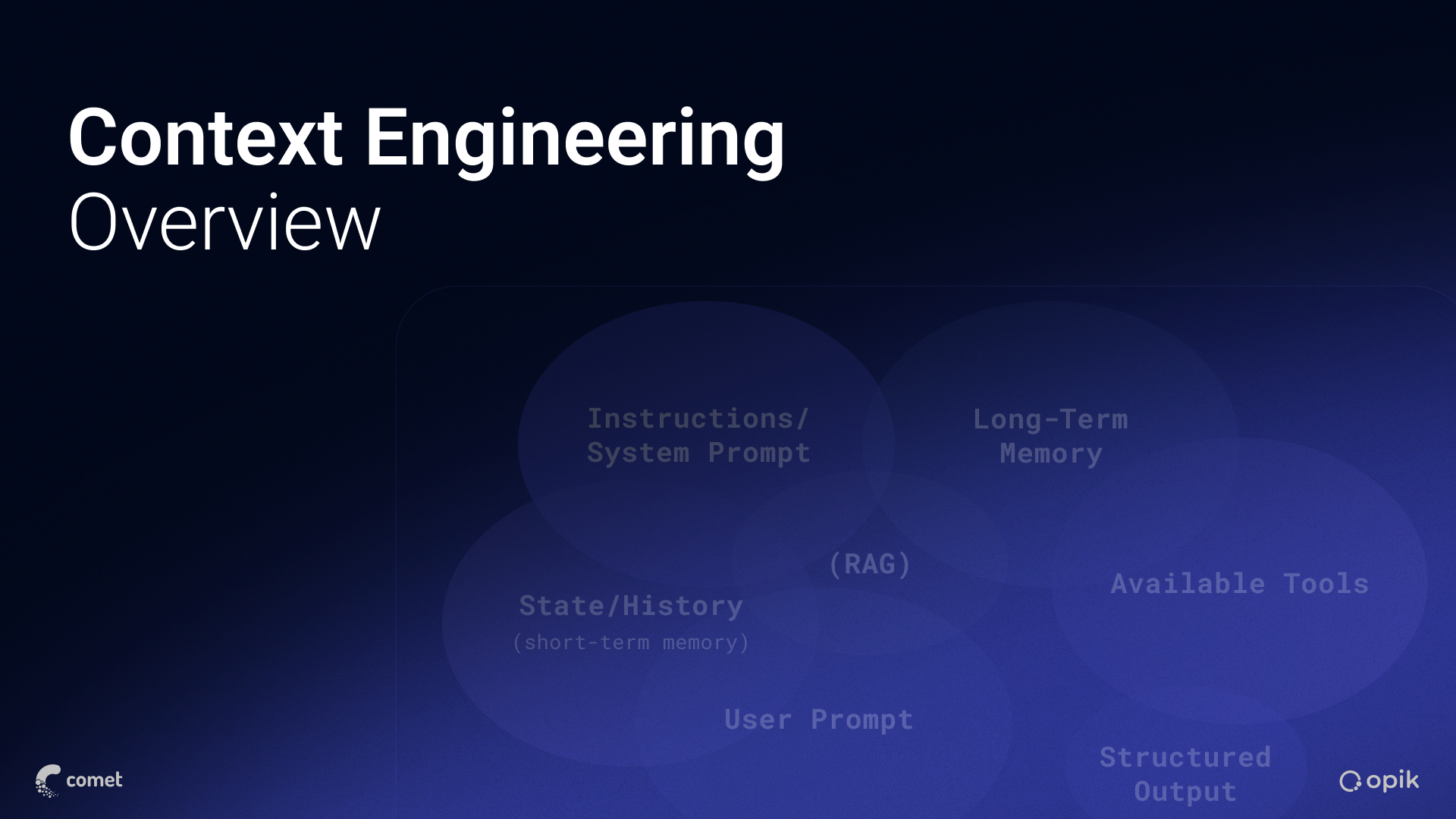

Context Engineering: The Discipline Behind Reliable LLM Applications & Agents

Teams cannot ship dependable LLM systems with prompt templates alone. Model outputs depend on the full set of instructions, facts,…

-

LLM Tracing: The Foundation of Reliable AI Applications

Your RAG pipeline works perfectly in testing. You’ve validated the retrieval logic, tuned the prompts, and confirmed the model returns…

-

LLM Monitoring: From Models to Agentic Systems

As software teams entrust a growing number of tasks to large language models (LLMs), LLM monitoring has become a vital…

Category: LLMOps
