-

Human-in-the-Loop Review Workflows for LLM Applications & Agents

You’ve been testing a new AI assistant. It sounds confident, reasons step-by-step, cites sources, and handles 90% of real user…

-

Best LLM Observability Tools of 2025: Top Platforms & Features

LLM applications are everywhere now, and they’re fundamentally different from traditional software. They’re non-deterministic. They hallucinate. They can fail in…

-

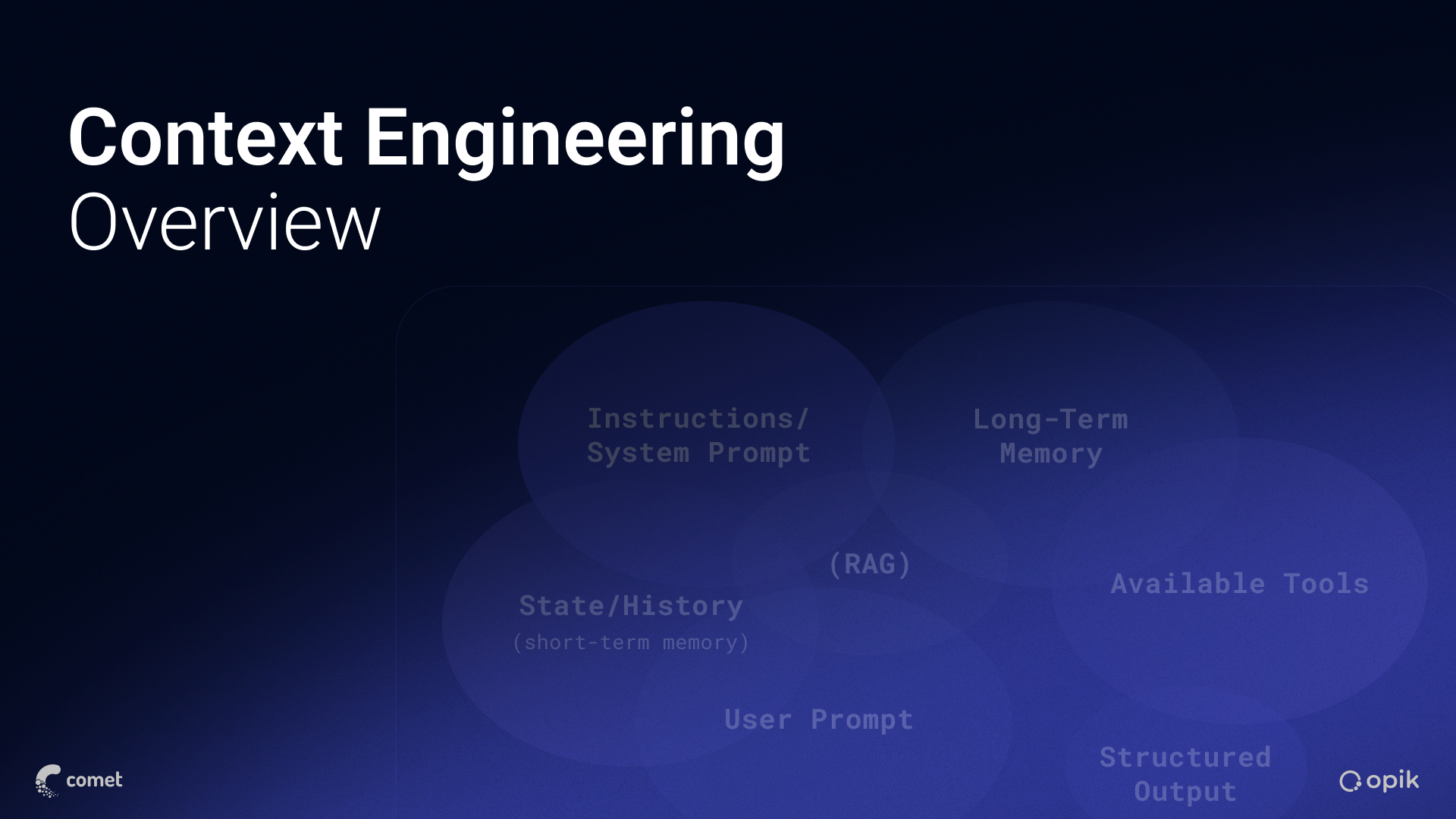

Context Engineering: The Discipline Behind Reliable LLM Applications & Agents

Teams cannot ship dependable LLM systems with prompt templates alone. Model outputs depend on the full set of instructions, facts,…

-

LLM Tracing: The Foundation of Reliable AI Applications

Your RAG pipeline works perfectly in testing. You’ve validated the retrieval logic, tuned the prompts, and confirmed the model returns…

-

LLM Monitoring: From Models to Agentic Systems

As software teams entrust a growing number of tasks to large language models (LLMs), LLM monitoring has become a vital…

-

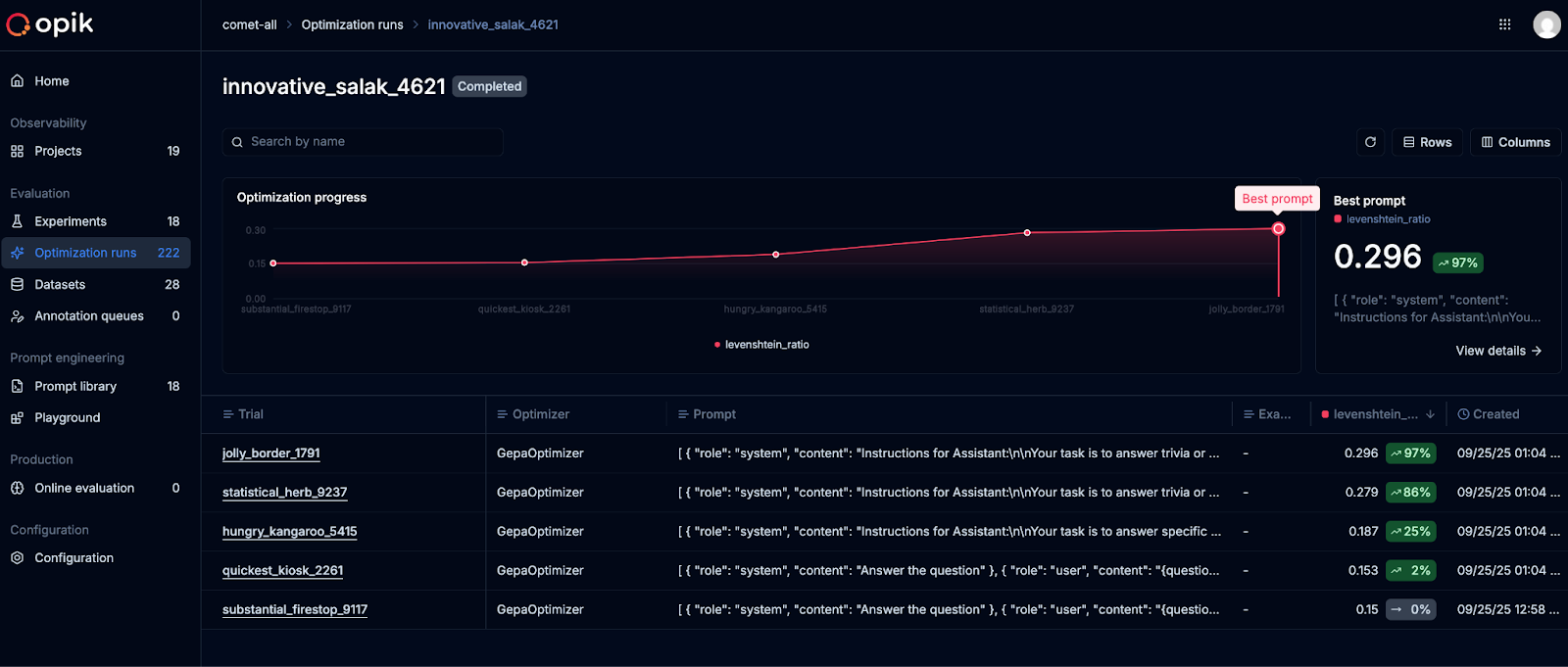

Opik Release Highlights: GEPA Agent Optimization, MCP Tool-Calling, and Automated Trace Analysis

As AI agents and LLM applications grow more powerful and complex, this month’s Opik updates integrate leading-edge technologies to help…

-

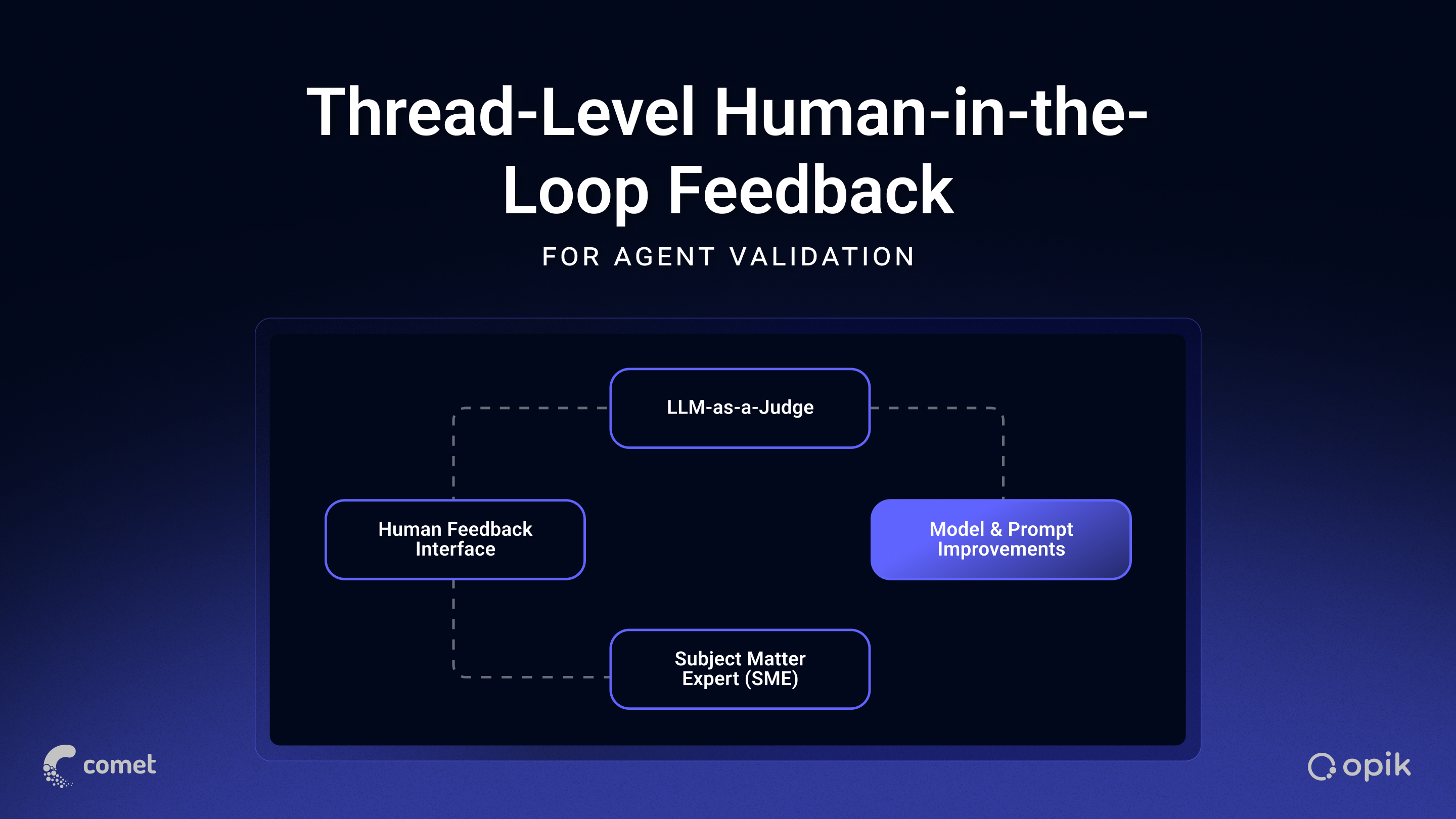

Thread-Level Human-in-the-Loop Feedback for Agent Validation

Imagine you are a developer building an agentic AI application or chatbot. You are probably not just coding a single…

-

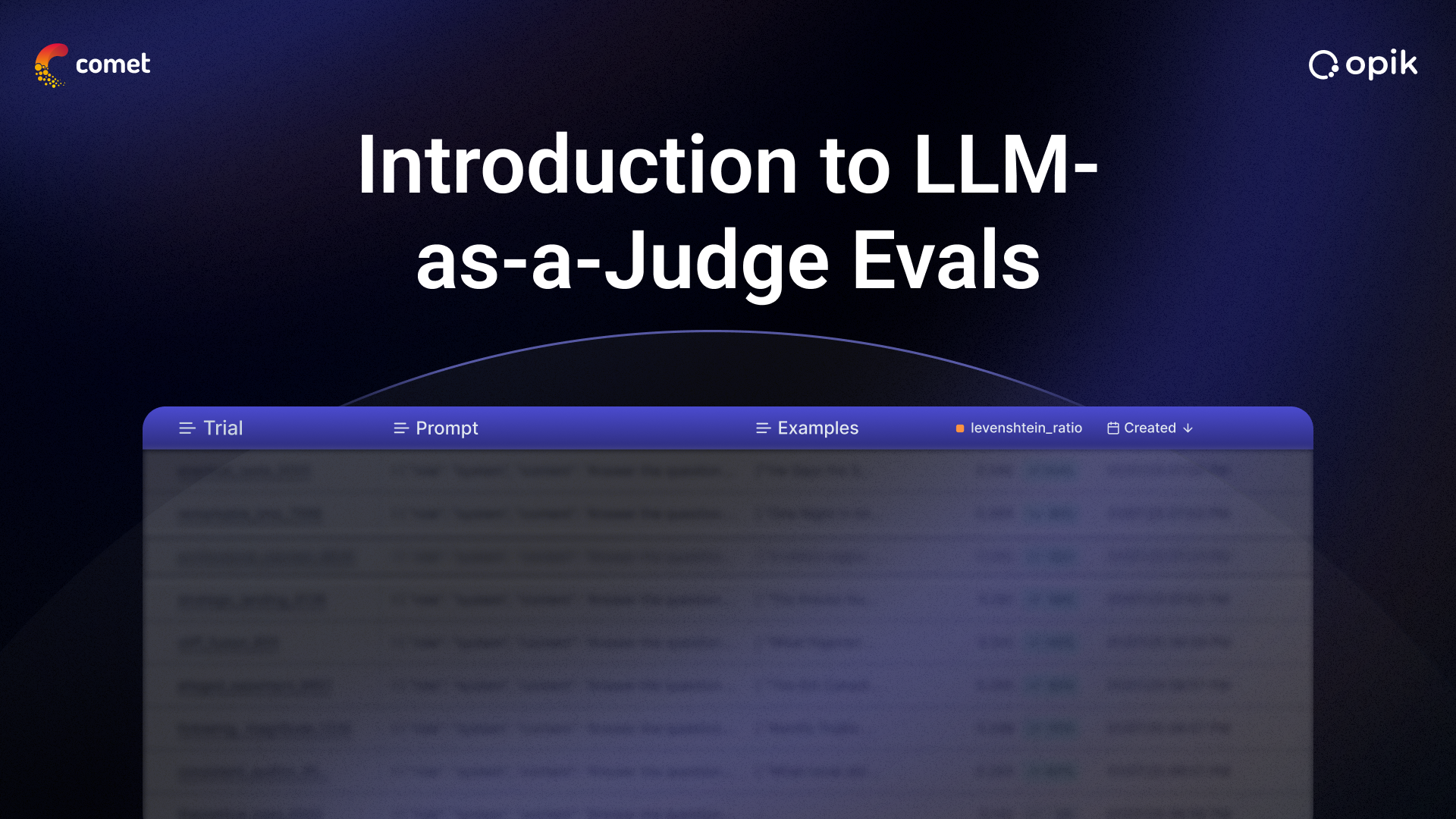

Introduction to LLM-as-a-Judge for Evals

In recent years, LLMs (large language models) have emerged as the most significant development in the AI space. They are…

-

LLM Evaluation: The Ultimate Guide to Metrics, Methods & Best Practices

The meteoric rise of large language models (LLMs) and their widespread use across more applications and user experiences raises an…

-

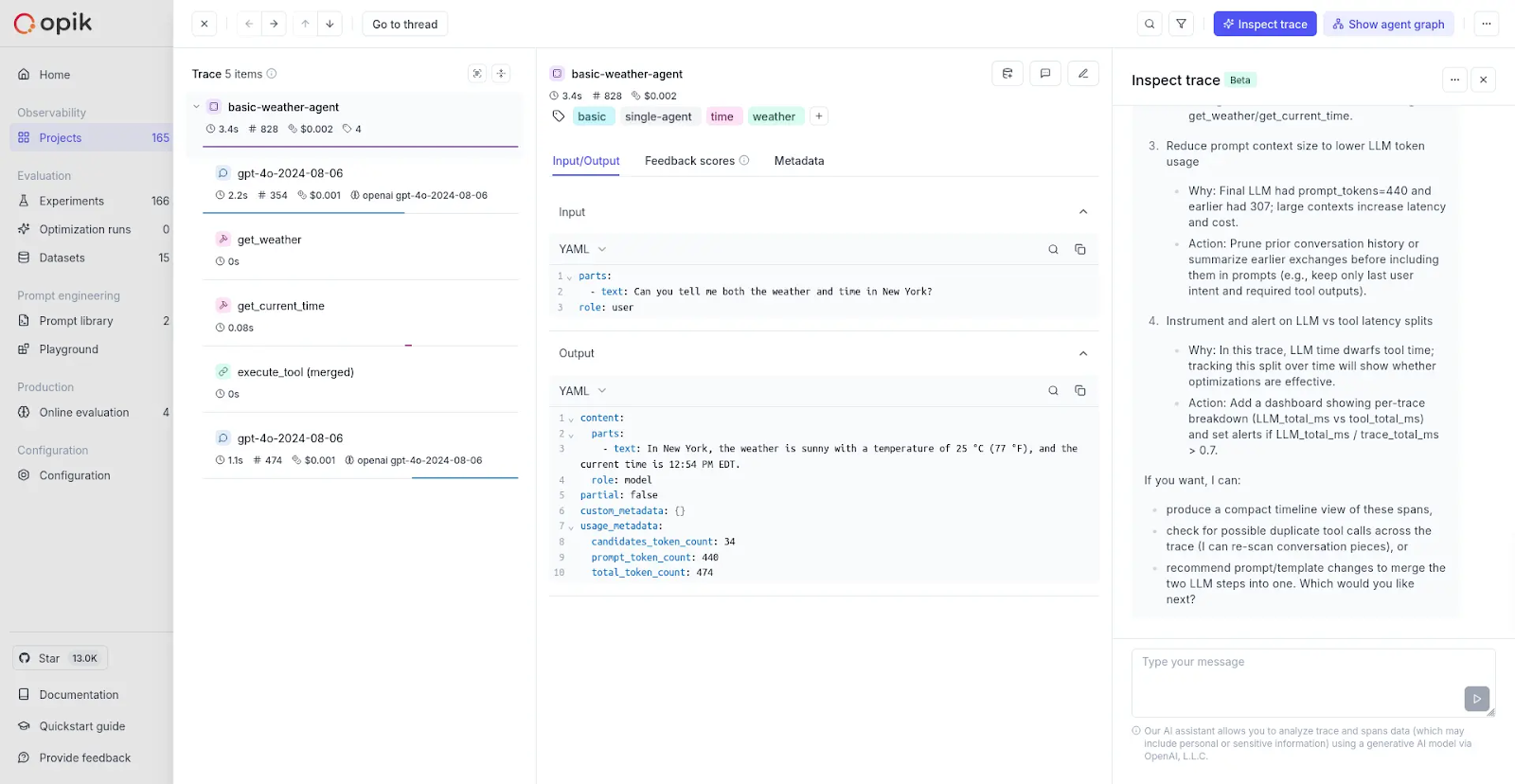

How We Used Opik to Build AI-Powered Trace Analysis

Within the GenAI development cycle, Opik does the often-overlooked yet essential work of logging, testing, comparing, and optimizing steps…

-

Announcing the Future of AI Engineering: Self-Optimizing Agents

When ChatGPT launched in 2022, generative AI seemed like magic. With a simple API call and prompt, any application could…
