Observability for Predibase with Opik

Predibase is a platform for fine-tuning and serving open-source Large Language Models (LLMs). It’s built on top of open-source LoRAX.

Account Setup

Comet provides a hosted version of the Opik platform. Simply create an account and grab your API key.

You can also run the Opik platform locally; see the installation guide for more information.

Tracking your LLM calls

Predibase can be used to serve open-source LLMs and is available as a model provider in LangChain. We will leverage the Opik integration with LangChain to track the LLM calls made using Predibase models.

Getting Started

Installation

To use the Opik integration with Predibase, you’ll need the opik, predibase and langchain packages installed. You can install them using pip, for example with pip install opik predibase langchain.

Configuring Opik

Configure the Opik Python SDK for your deployment type. See the Python SDK Configuration guide for detailed instructions on:

- CLI configuration: opik configure

- Code configuration: opik.configure()

- Self-hosted vs Cloud vs Enterprise setup

- Configuration files and environment variables
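As a rough illustration of the environment-variable route, the sketch below collects deployment settings into the variables we understand the Opik SDK to read (OPIK_API_KEY, OPIK_WORKSPACE and OPIK_URL_OVERRIDE). Treat those names as assumptions to verify against the Python SDK Configuration guide; the helper names opik_env_settings and apply_opik_env are hypothetical.

```python
import os


def opik_env_settings(api_key=None, workspace=None, url_override=None):
    """Map deployment settings to the env vars the Opik SDK is assumed to read."""
    candidates = {
        "OPIK_API_KEY": api_key,            # needed for the hosted platform
        "OPIK_WORKSPACE": workspace,        # workspace name on the hosted platform
        "OPIK_URL_OVERRIDE": url_override,  # base URL of a self-hosted instance
    }
    # Leave out anything that was not provided.
    return {name: value for name, value in candidates.items() if value}


def apply_opik_env(settings):
    """Export the settings, keeping any value already set in the shell."""
    for name, value in settings.items():
        os.environ.setdefault(name, value)
```

Calling apply_opik_env(opik_env_settings(api_key=..., workspace=...)) before importing opik is one way to configure a hosted setup without an interactive prompt; running opik configure or calling opik.configure() remains the documented path.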

Configuring Predibase

You will also need to set the PREDIBASE_API_TOKEN environment variable to your Predibase API token. You can either export it in your shell before starting Python or set it programmatically from within your code.
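A minimal sketch of the programmatic option, using only the standard library. The helper name ensure_predibase_token is hypothetical; it reuses a token that is already exported and otherwise prompts for one without echoing it.

```python
import getpass
import os


def ensure_predibase_token(prompt: str = "Predibase API token: ") -> str:
    """Return PREDIBASE_API_TOKEN, prompting for it once if it is unset."""
    token = os.environ.get("PREDIBASE_API_TOKEN")
    if not token:
        # getpass hides the input, which keeps the token out of notebook output.
        token = getpass.getpass(prompt)
        os.environ["PREDIBASE_API_TOKEN"] = token
    return token
```

Call ensure_predibase_token() once before creating the Predibase model so that later code can read the token from the environment.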

Logging LLM calls

To log LLM calls to Opik, pass the OpikTracer from the LangChain integration as a callback when invoking the Predibase model. Every call made with the tracer attached is logged to Opik.
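The sketch below shows one way this could look. It assumes the Predibase class is importable from langchain_community.llms (which may require installing langchain-community separately) and that "mistral-7b" is a model available in your account. The helper names traced_config and ask_predibase are hypothetical.

```python
import os


def traced_config(tracer) -> dict:
    """Build a LangChain run config that sends callbacks to the given tracer."""
    return {"callbacks": [tracer]}


def ask_predibase(prompt: str, model_name: str = "mistral-7b") -> str:
    """Send one prompt to a Predibase-served model with Opik tracing attached."""
    # Imported lazily: these need the opik, predibase and LangChain packages.
    from langchain_community.llms import Predibase
    from opik.integrations.langchain import OpikTracer

    model = Predibase(
        model=model_name,  # assumed name; use a model your account can serve
        predibase_api_key=os.environ["PREDIBASE_API_TOKEN"],
    )
    tracer = OpikTracer(tags=["predibase", model_name])
    return model.invoke(prompt, config=traced_config(tracer))
```

For example, ask_predibase("Can you recommend me a nice dry wine?") should produce a single trace tagged with the model name.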

In addition to passing the OpikTracer to the invoke method, you can also attach it when creating the Predibase object, so that you do not have to pass it on each call.
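One way to do this, sketched under the same assumptions as before (Predibase imported from langchain_community.llms, "mistral-7b" as a placeholder model name), is to bind the tracer with LangChain's with_config method right after constructing the model. The helper names tracer_tags and build_traced_model are hypothetical.

```python
import os


def tracer_tags(model_name: str) -> list:
    """Tags attached to every trace produced by the model."""
    return ["predibase", model_name]


def build_traced_model(model_name: str = "mistral-7b"):
    """Create a Predibase model whose calls are traced by Opik by default."""
    # Imported lazily: these need the opik, predibase and LangChain packages.
    from langchain_community.llms import Predibase
    from opik.integrations.langchain import OpikTracer

    model = Predibase(
        model=model_name,
        predibase_api_key=os.environ["PREDIBASE_API_TOKEN"],
    )
    # with_config returns a runnable that applies this config on each call.
    return model.with_config({"callbacks": [OpikTracer(tags=tracer_tags(model_name))]})
```

The returned object can then be invoked without passing a config, for example build_traced_model().invoke("Can you recommend me a nice dry wine?").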

You can learn more about the Opik integration with LangChain in our LangChain integration guide.

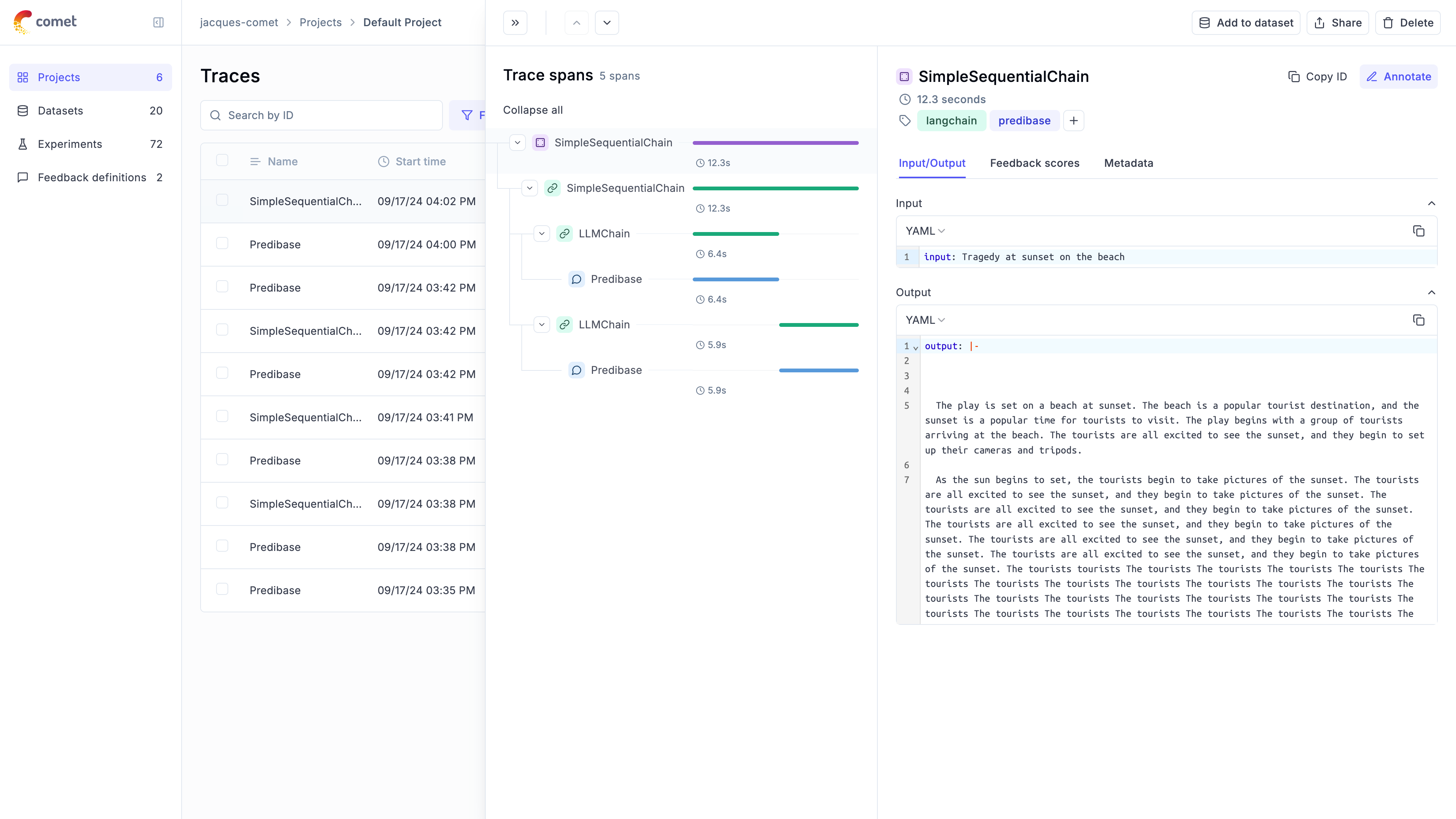

The trace will now be available in the Opik UI for further analysis.

Advanced Usage

SequentialChain Example

Now, let’s create a more complex chain and run it with Opik tracing:
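The following is a sketch rather than the original example. It composes two steps (write a synopsis, then review it) with LangChain's pipe syntax instead of the SequentialChain class named in the heading, on the assumption that pipe composition works across more LangChain versions. The prompt wording and the helper name build_review_chain are illustrative.

```python
SYNOPSIS_TEMPLATE = (
    "You are a playwright. Given the title of a play, write a synopsis for it.\n\n"
    "Title: {title}\nSynopsis:"
)
REVIEW_TEMPLATE = (
    "You are a theatre critic. Given the synopsis of a play, write a review of it.\n\n"
    "Synopsis: {synopsis}\nReview:"
)


def build_review_chain(model):
    """Chain two LLM calls: title -> synopsis -> review."""
    # Imported lazily: these need the langchain-core package.
    from langchain_core.output_parsers import StrOutputParser
    from langchain_core.prompts import PromptTemplate

    synopsis_chain = PromptTemplate.from_template(SYNOPSIS_TEMPLATE) | model | StrOutputParser()
    review_chain = PromptTemplate.from_template(REVIEW_TEMPLATE) | model | StrOutputParser()
    # The dict becomes a parallel step whose output fills {synopsis} in the review prompt.
    return {"synopsis": synopsis_chain} | review_chain
```

Given a Predibase model and an OpikTracer instance named opik_tracer, the chain can be run with build_review_chain(model).invoke({"title": "Tragedy at sunset on the beach"}, config={"callbacks": [opik_tracer]}), which should log both LLM calls under a single trace.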

Accessing Logged Traces

We can access the trace IDs collected by the Opik tracer:
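A small sketch of how this might look, assuming the tracer exposes a created_traces() method whose items carry an id attribute. The helper name trace_ids is hypothetical.

```python
def trace_ids(tracer) -> list:
    """Collect the IDs of the traces a tracer has created so far."""
    # Assumption: created_traces() returns objects with an `id` attribute.
    return [trace.id for trace in tracer.created_traces()]
```

After running a traced call, print(trace_ids(opik_tracer)) lists the IDs, which can then be used to look the traces up in the Opik UI.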

Fine-tuned LLM Example

Finally, let’s use a fine-tuned model with Opik tracing:

Note: To use a fine-tuned model, you need access to the model and the correct model ID. Calls will fail with a NotFoundError unless model and adapter_id are set to values that exist in your Predibase account.
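A hedged sketch of what this could look like. The values "my-base-LLM" and "my-finetuned-adapter-id" are placeholders that must be replaced with your own, and the helper names fine_tuned_kwargs and build_fine_tuned_model are hypothetical. It assumes the LangChain Predibase class accepts adapter_id and adapter_version arguments.

```python
import os


def fine_tuned_kwargs(model: str, adapter_id: str, adapter_version=None) -> dict:
    """Arguments that select a fine-tuned adapter on top of a base model."""
    kwargs = {"model": model, "adapter_id": adapter_id}
    if adapter_version is not None:
        kwargs["adapter_version"] = adapter_version
    return kwargs


def build_fine_tuned_model(
    model: str = "my-base-LLM",                  # placeholder base model
    adapter_id: str = "my-finetuned-adapter-id",  # placeholder adapter ID
    adapter_version=1,
):
    """Create a traced Predibase model that uses a fine-tuned adapter."""
    # Imported lazily: these need the opik, predibase and LangChain packages.
    from langchain_community.llms import Predibase
    from opik.integrations.langchain import OpikTracer

    llm = Predibase(
        predibase_api_key=os.environ["PREDIBASE_API_TOKEN"],
        **fine_tuned_kwargs(model, adapter_id, adapter_version),
    )
    return llm.with_config({"callbacks": [OpikTracer(tags=["predibase", "fine-tuned"])]})
```

With real identifiers filled in, build_fine_tuned_model(...).invoke("Can you help categorize the following emails into positive, negative, and neutral?") should be traced like any other call.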

Tracking your fine-tuning training runs

If you are using Predibase to fine-tune an LLM, we recommend using Predibase’s integration with Comet’s Experiment Management functionality. You can learn more about how to set this up in the Comet integration guide in the Predibase documentation. If you are already using another experiment tracking platform, it is worth checking whether it has an integration with Predibase.