Observability for Strands Agents with Opik

Strands Agents is a simple yet powerful SDK that takes a model-driven approach to building and running AI agents.

The framework’s primary advantage is its ability to scale from simple conversational assistants to complex autonomous workflows, supporting both local development and production deployment with built-in observability.

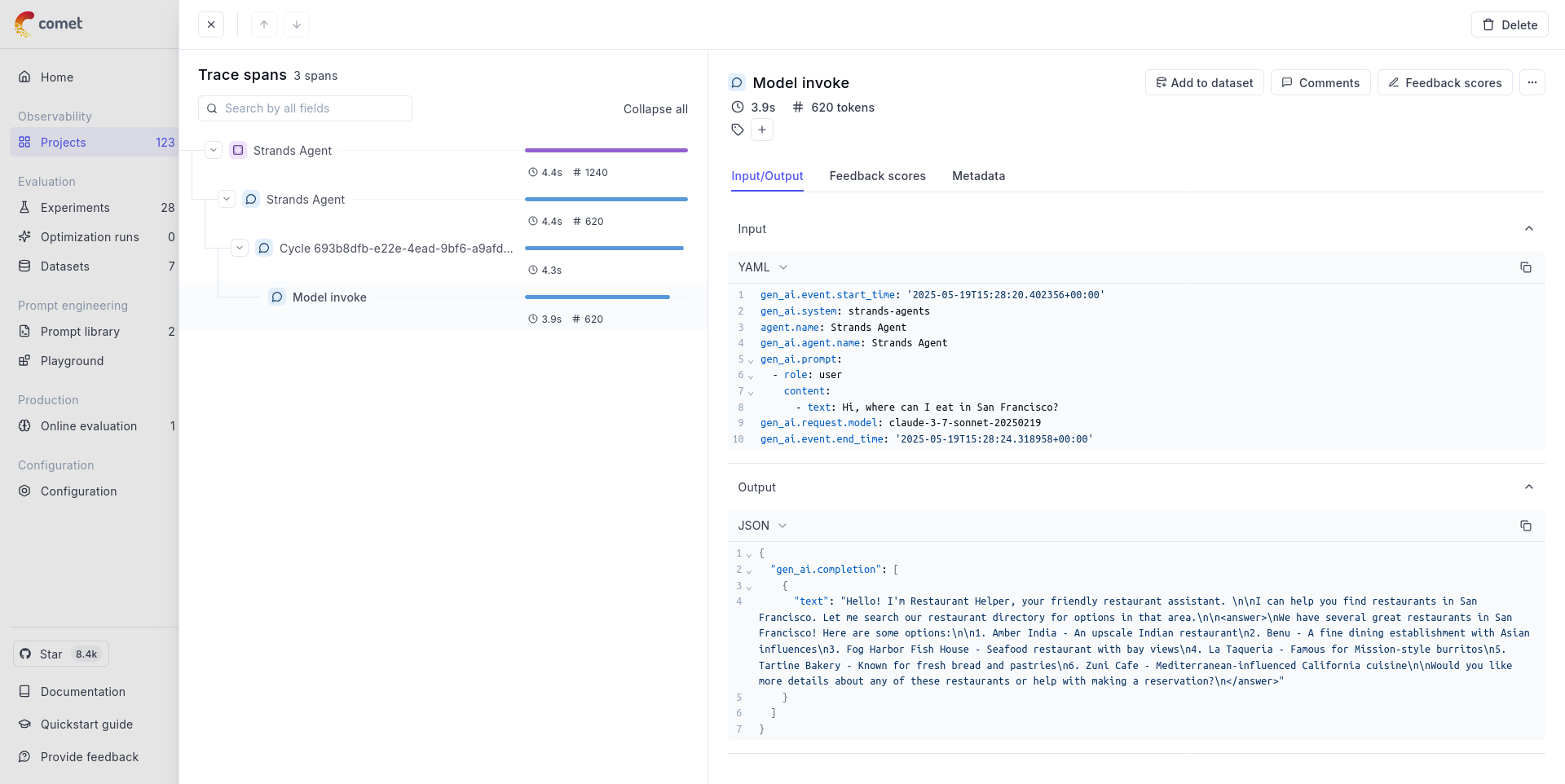

After running your Strands Agents workflow with the OpenTelemetry configuration, you’ll see detailed traces in the Opik UI showing agent interactions, model calls, and conversation flows as demonstrated in the screenshot above.

Getting started

To use the Strands Agents integration with Opik, you will need to have Strands Agents and the required OpenTelemetry packages installed:
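Once the packages are installed, a quick check like the following can confirm that Python can find them. This is a minimal sketch and is not part of the Opik or Strands tooling. The module names in `REQUIRED_MODULES` (`strands`, `opentelemetry.sdk`, `opentelemetry.exporter.otlp.proto.http`) are assumptions about how the installed distributions are laid out, so adjust the list to match your environment.

```python
import importlib.util
from typing import List

# Assumed module names for the packages this integration relies on;
# adjust them to match the distributions you actually installed.
REQUIRED_MODULES = [
    "strands",                                 # Strands Agents SDK
    "opentelemetry.sdk",                       # OpenTelemetry SDK
    "opentelemetry.exporter.otlp.proto.http",  # OTLP/HTTP exporter
]


def missing_modules(names: List[str]) -> List[str]:
    """Return the names whose modules cannot be located.

    Parent packages of dotted names are imported by find_spec,
    but the leaf module itself is not executed.
    """
    missing = []
    for name in names:
        try:
            # A missing parent package raises ModuleNotFoundError
            # instead of returning None, so both cases are handled.
            found = importlib.util.find_spec(name) is not None
        except (ImportError, ValueError):
            found = False
        if not found:
            missing.append(name)
    return missing


if __name__ == "__main__":
    absent = missing_modules(REQUIRED_MODULES)
    if absent:
        print("Missing modules:", ", ".join(absent))
    else:
        print("All required modules are importable.")
```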

In addition, you will need to set environment variables to configure the OpenTelemetry integration. The values depend on whether you use Opik Cloud, an enterprise deployment, or a self-hosted instance.

If you are using Opik Cloud, you will need to set the following environment variables:
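As a rough sketch, the two variables could be built and set from Python as shown below. This assumes the Opik Cloud OTLP endpoint is `https://www.comet.com/opik/api/v1/private/otel` and that the API key travels in an `Authorization` header. Both are assumptions to verify against the Opik documentation, and enterprise and self-hosted deployments use their own base URLs. The helpers `opik_cloud_otel_env` and `apply_env` are hypothetical. OpenTelemetry exporters generally read these variables when they are created, so set them before the agent and its tracing are initialized.

```python
import os
from typing import Dict

# Assumed Opik Cloud OTLP endpoint; confirm it in the Opik documentation.
OPIK_CLOUD_OTLP_ENDPOINT = "https://www.comet.com/opik/api/v1/private/otel"


def opik_cloud_otel_env(api_key: str, workspace: str) -> Dict[str, str]:
    """Build the OTLP exporter variables for Opik Cloud (hypothetical helper)."""
    return {
        "OTEL_EXPORTER_OTLP_ENDPOINT": OPIK_CLOUD_OTLP_ENDPOINT,
        # OTEL_EXPORTER_OTLP_HEADERS is a comma-separated list of key=value pairs;
        # the Authorization key name is an assumption.
        "OTEL_EXPORTER_OTLP_HEADERS": f"Authorization={api_key},Comet-Workspace={workspace}",
    }


def apply_env(values: Dict[str, str]) -> None:
    """Set the variables for the current process only (hypothetical helper)."""
    os.environ.update(values)


if __name__ == "__main__":
    # Placeholders: substitute your own API key and workspace name.
    apply_env(opik_cloud_otel_env("<your-api-key>", "<your-workspace>"))
    print(os.environ["OTEL_EXPORTER_OTLP_ENDPOINT"])
```

In a shell you would typically `export` the same two variables instead. Setting them through `os.environ` only affects the running Python process and any child processes it starts.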

To log the traces to a specific project, you can add the `projectName` parameter to the `OTEL_EXPORTER_OTLP_HEADERS` environment variable:

You can also change the `Comet-Workspace` parameter if you would like to log the data to a different workspace.
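To add a project or switch workspaces without retyping the whole header string, you could rewrite the existing value. The sketch below uses hypothetical helpers, `parse_otlp_headers` and `with_opik_target`, that split `OTEL_EXPORTER_OTLP_HEADERS` on commas, set `projectName` and/or `Comet-Workspace`, and join the pairs back together. It assumes no value contains a comma and keeps values verbatim (no percent-decoding).

```python
import os
from typing import Dict, Optional


def parse_otlp_headers(value: str) -> Dict[str, str]:
    """Split a 'key=value,key=value' header string into an insertion-ordered dict."""
    headers: Dict[str, str] = {}
    for pair in value.split(","):
        if not pair.strip():
            continue  # tolerate empty segments such as trailing commas
        # Split on the first '=' only, so values may themselves contain '='.
        key, _, val = pair.partition("=")
        headers[key.strip()] = val.strip()
    return headers


def with_opik_target(value: str, project: Optional[str] = None,
                     workspace: Optional[str] = None) -> str:
    """Return the header string with projectName and/or Comet-Workspace set."""
    headers = parse_otlp_headers(value)
    if workspace is not None:
        headers["Comet-Workspace"] = workspace  # overrides an existing entry or appends one
    if project is not None:
        headers["projectName"] = project  # overrides an existing entry or appends one
    return ",".join(f"{key}={val}" for key, val in headers.items())


if __name__ == "__main__":
    current = os.environ.get("OTEL_EXPORTER_OTLP_HEADERS", "")
    os.environ["OTEL_EXPORTER_OTLP_HEADERS"] = with_opik_target(
        current, project="<your-project-name>"
    )
    print(os.environ["OTEL_EXPORTER_OTLP_HEADERS"])
```

Because a dict preserves insertion order, entries such as the API key stay where they were and only the targeted keys change.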

Using Opik with Strands Agents

The example below shows how to use the Strands Agents integration with Opik:

Further improvements

If you would like to see us improve this integration, open a new feature request on GitHub.