Observability for Pydantic AI with Opik

Pydantic AI is a Python agent framework designed for building production-grade applications with Generative AI.

Pydantic AI’s primary advantage is its integration of Pydantic’s type-safe data validation, ensuring structured and reliable responses in AI applications.

Account Setup

Comet provides a hosted version of the Opik platform. Simply create an account and grab your API key.

You can also run the Opik platform locally; see the installation guide for more information.

Getting Started

Installation

To use the Pydantic AI integration with Opik, you will need to have Pydantic AI and logfire installed (the pydantic-ai and logfire packages on PyPI).
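As a quick sanity check after installing, the sketch below reports which of the two packages cannot be found in the current environment. The helper name missing_packages and the mapping it uses are illustrative, not part of Opik or Pydantic AI; the import names pydantic_ai and logfire are assumed to match the installed distributions.

```python
from importlib.util import find_spec

# Import name -> distribution name on PyPI (assumed mapping).
REQUIRED_PACKAGES = {"pydantic_ai": "pydantic-ai", "logfire": "logfire"}


def missing_packages(required: dict[str, str] = REQUIRED_PACKAGES) -> list[str]:
    """Return the distribution names whose top-level module cannot be located."""
    return [dist for module, dist in required.items() if find_spec(module) is None]


if __name__ == "__main__":
    missing = missing_packages()
    print("Missing packages:", ", ".join(missing) if missing else "none")
```

If the HTTP instrumentation later fails to load, the httpx extra of logfire may also need to be installed.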

Configuring Pydantic AI

To use Pydantic AI, you will need to configure your LLM provider API keys. This example uses OpenAI; you can find or create an API key on the API keys page of the OpenAI platform.

You can set them as environment variables in your shell (OPENAI_API_KEY for OpenAI), or set them programmatically from Python before creating an agent.
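A minimal sketch of the programmatic option, assuming the key should be requested interactively only when it is not already present in the environment. The helper name ensure_env_var is hypothetical; OPENAI_API_KEY is the variable the OpenAI client reads.

```python
import getpass
import os
from typing import Callable


def ensure_env_var(name: str, prompt: Callable[[str], str] = getpass.getpass) -> str:
    """Return the value of `name`, prompting for it only if it is not set yet."""
    if not os.environ.get(name):
        # getpass hides the typed value so the key does not end up on screen.
        os.environ[name] = prompt(f"Enter a value for {name}: ")
    return os.environ[name]
```

Calling ensure_env_var("OPENAI_API_KEY") once at startup, before any agent is created, is enough for this guide.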

Configuring OpenTelemetry

To make sure the data is logged to Opik, you will need to set the OpenTelemetry exporter environment variables. The values depend on your deployment: Opik Cloud, an Enterprise deployment, or a self-hosted instance.

If you are using Opik Cloud, you will need to set the OTEL_EXPORTER_OTLP_ENDPOINT and OTEL_EXPORTER_OTLP_HEADERS environment variables.
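For Opik Cloud, the configuration might look like the sketch below. The endpoint URL is an assumption based on the Opik Cloud OTLP endpoint and should be checked against the current Opik documentation; the API key is a placeholder and default is an illustrative workspace name.

```python
import os

# Assumption: OTLP endpoint for Opik Cloud; confirm the exact URL in the Opik docs.
os.environ["OTEL_EXPORTER_OTLP_ENDPOINT"] = "https://www.comet.com/opik/api/v1/private/otel"

# Comma-separated key=value pairs; replace the placeholder with your own API key.
os.environ["OTEL_EXPORTER_OTLP_HEADERS"] = (
    "Authorization=<your-api-key>,Comet-Workspace=default"
)
```

These variables need to be set before logfire is configured, since the exporter settings are read at configuration time.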

To log the traces to a specific project, you can add the projectName parameter to the OTEL_EXPORTER_OTLP_HEADERS environment variable. You can also update the Comet-Workspace parameter to a different value if you would like to log the data to a different workspace.
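The header value is a comma-separated list of key=value pairs, so the project and workspace can be assembled with a small helper. This is a sketch: the function name opik_otlp_headers, the workspace my-workspace, and the project pydantic-ai-demo are all hypothetical, and reading the key from OPIK_API_KEY is an assumption made for the example.

```python
import os
from typing import Optional


def opik_otlp_headers(
    api_key: str,
    workspace: str = "default",
    project_name: Optional[str] = None,
) -> str:
    """Build the key=value string expected in OTEL_EXPORTER_OTLP_HEADERS."""
    pairs = {"Authorization": api_key, "Comet-Workspace": workspace}
    if project_name:
        # projectName is optional; it routes traces to a specific project.
        pairs["projectName"] = project_name
    return ",".join(f"{key}={value}" for key, value in pairs.items())


os.environ["OTEL_EXPORTER_OTLP_HEADERS"] = opik_otlp_headers(
    api_key=os.environ.get("OPIK_API_KEY", "<your-api-key>"),
    workspace="my-workspace",
    project_name="pydantic-ai-demo",
)
```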

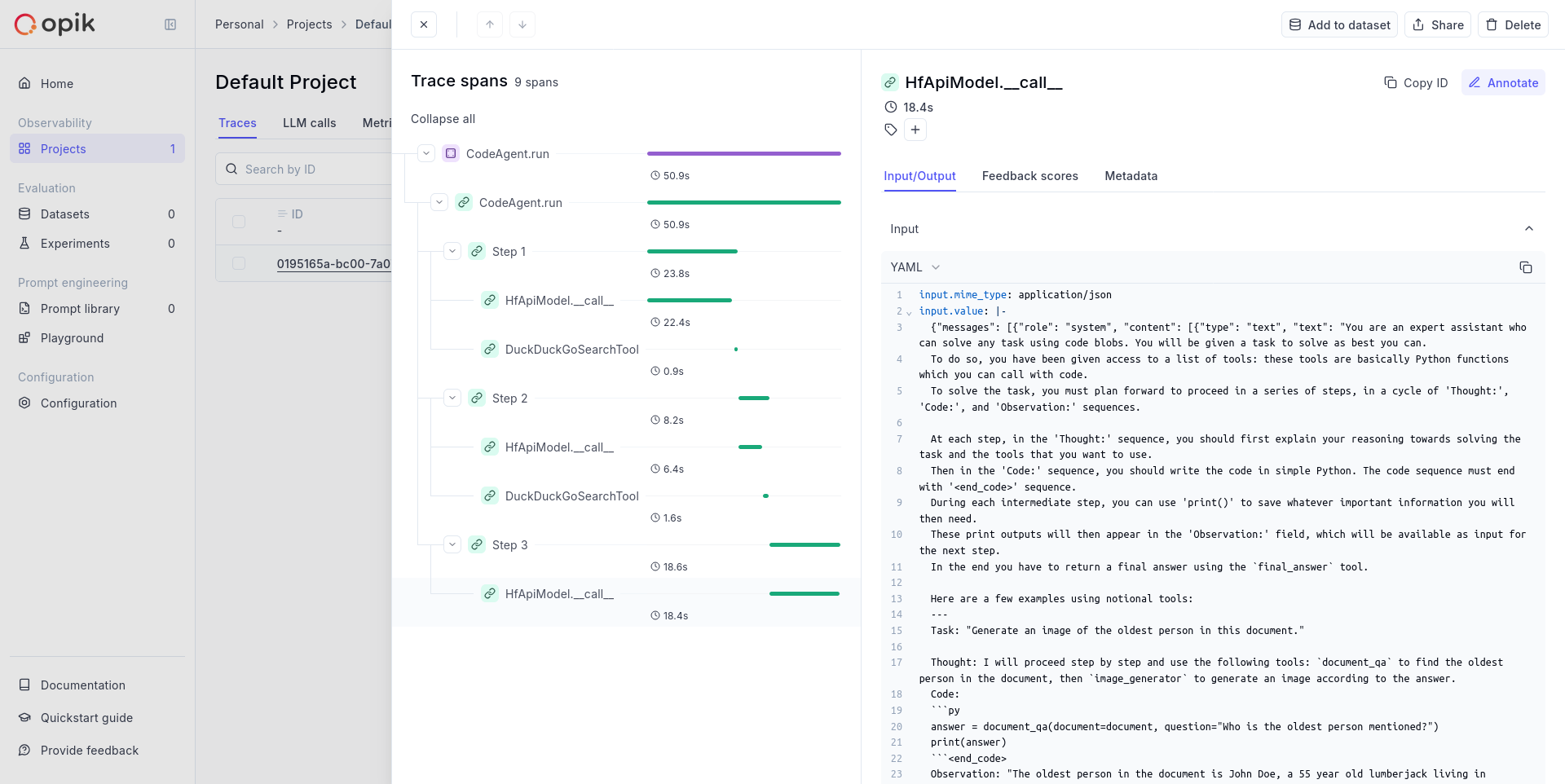

Using Opik with Pydantic AI

To track your Pydantic AI agents, you will need to configure logfire, as this is the library used by Pydantic AI to enable tracing.
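A minimal sketch of that configuration, assuming traces should go only to the OTLP endpoint defined in the environment and not to the Logfire backend. The wrapper name configure_tracing is hypothetical; logfire.configure and logfire.instrument_httpx are the logfire calls it relies on.

```python
def configure_tracing() -> None:
    """Configure logfire to export traces through the OTEL_* environment variables."""
    # Imported inside the function so tracing stays an optional dependency.
    import logfire

    # Do not send data to the Logfire platform; the OTLP exporter settings
    # from the environment decide where the traces go.
    logfire.configure(send_to_logfire=False)

    # Instrument HTTP calls made through httpx, including request and response details.
    logfire.instrument_httpx(capture_all=True)
```

Call configure_tracing() once at startup, after the OpenTelemetry environment variables have been set and before the first agent runs.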

Practical Example

Now that everything is configured, you can create and run Pydantic AI agents:
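For instance, a single-turn agent could look like the sketch below. The function name ask_agent, the model string openai:gpt-4o, and the system prompt are illustrative choices. It also assumes a recent Pydantic AI release in which the run result exposes the answer as output (older releases used data).

```python
def ask_agent(question: str, model: str = "openai:gpt-4o") -> str:
    """Run a single-turn Pydantic AI agent and return its text answer."""
    # Imported inside the function so the sketch loads without pydantic-ai installed.
    from pydantic_ai import Agent

    agent = Agent(model, system_prompt="Be concise, reply with one sentence.")

    # run_sync blocks until the model has answered; the underlying HTTP call
    # is what gets traced once logfire has been configured.
    result = agent.run_sync(question)

    # Assumption: recent releases name this attribute `output`.
    return result.output
```

With OPENAI_API_KEY set and tracing configured, print(ask_agent('Where does "hello world" come from?')) runs the agent, and the resulting trace should appear in the Opik project.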

Advanced usage

You can reduce the amount of data logged to Opik by setting capture_all to False when instrumenting httpx with logfire. When this parameter is set to False, the exact request made to the LLM provider is not logged.
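A sketch of the reduced-logging variant, under the same assumptions as the rest of this guide: logfire exports through the OTEL_* environment variables, and the wrapper name configure_reduced_tracing is hypothetical.

```python
def configure_reduced_tracing() -> None:
    """Configure logfire without capturing full request and response payloads."""
    # Imported inside the function so tracing stays an optional dependency.
    import logfire

    logfire.configure(send_to_logfire=False)

    # capture_all=False keeps the HTTP spans but leaves out the detailed
    # request and response data sent to the LLM provider.
    logfire.instrument_httpx(capture_all=False)
```

This can be useful when prompts contain sensitive data, at the cost of less detail in each trace.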

Further improvements

If you would like to see us improve this integration, simply open a new feature request on GitHub.