Observability for Ollama with Opik

Ollama allows users to run, interact with, and deploy AI models locally on their machines without the need for complex infrastructure or cloud dependencies.

There are multiple ways to interact with Ollama from Python, including the ollama Python package, LangChain, and the OpenAI library. This guide covers how to trace your LLM calls with each of these methods.

Account Setup

Comet provides a hosted version of the Opik platform. To use it, create an account and grab your API key.

You can also run the Opik platform locally, see the installation guide for more information.

Getting started

Configure Ollama

Before starting, you will need to have an Ollama instance running. You can install Ollama by following the quickstart guide, which automatically starts the Ollama API server. If the Ollama server is not running, you can start it with ollama serve.

Once Ollama is running, you can download the llama3.1 model by running ollama pull llama3.1. For a full list of models available on Ollama, please refer to the Ollama library.
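To confirm the setup from Python before tracing anything, a minimal sketch along the following lines can help. It assumes the server listens on Ollama's default address, http://localhost:11434, and queries the /api/tags endpoint, which lists locally available models. The helper name list_local_models is hypothetical and introduced here only for illustration.

```python
import json
import urllib.request

# Assumption: Ollama's default address; adjust if your server runs elsewhere.
OLLAMA_URL = "http://localhost:11434"


def list_local_models(base_url: str = OLLAMA_URL, timeout: float = 2.0):
    """Return the names of locally available models, or None if the server is unreachable."""
    try:
        with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as resp:
            payload = json.load(resp)
    except (OSError, ValueError):
        # Connection refused, timeout, HTTP error, or a reply that is not JSON.
        return None
    return [model["name"] for model in payload.get("models", [])]


if __name__ == "__main__":
    models = list_local_models()
    if models is None:
        print("Ollama is not reachable; try starting it with `ollama serve`.")
    elif not any(name.startswith("llama3.1") for name in models):
        print("Server is up, but llama3.1 is missing; run `ollama pull llama3.1`.")
    else:
        print("Ollama is running and llama3.1 is available.")
```

The check uses only the standard library, so it can run before any of the packages below are installed.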

Installation

You will also need to have Opik installed. You can install it by running pip install opik.

Configuring Opik

Configure the Opik Python SDK for your deployment type. See the Python SDK Configuration guide for detailed instructions on:

- CLI configuration: opik configure
- Code configuration: opik.configure()
- Self-hosted vs Cloud vs Enterprise setup
- Configuration files and environment variables

Tracking Ollama calls made with Ollama Python Package

To get started, install the Ollama Python package by running pip install ollama.

We will then utilize the track decorator to log all the traces to Opik:

The trace will now be displayed in the Opik platform.

Tracking Ollama calls made with OpenAI

Ollama is compatible with the OpenAI format and can be used with the OpenAI Python library. You can therefore leverage the Opik integration for OpenAI to trace your Ollama calls:

The local LLM call is now traced and logged to Opik.

Tracking Ollama calls made with LangChain

To trace Ollama calls made with LangChain, first install the langchain-ollama package by running pip install langchain-ollama.

You will now be able to use the OpikTracer class to log all your Ollama calls made with LangChain to Opik:

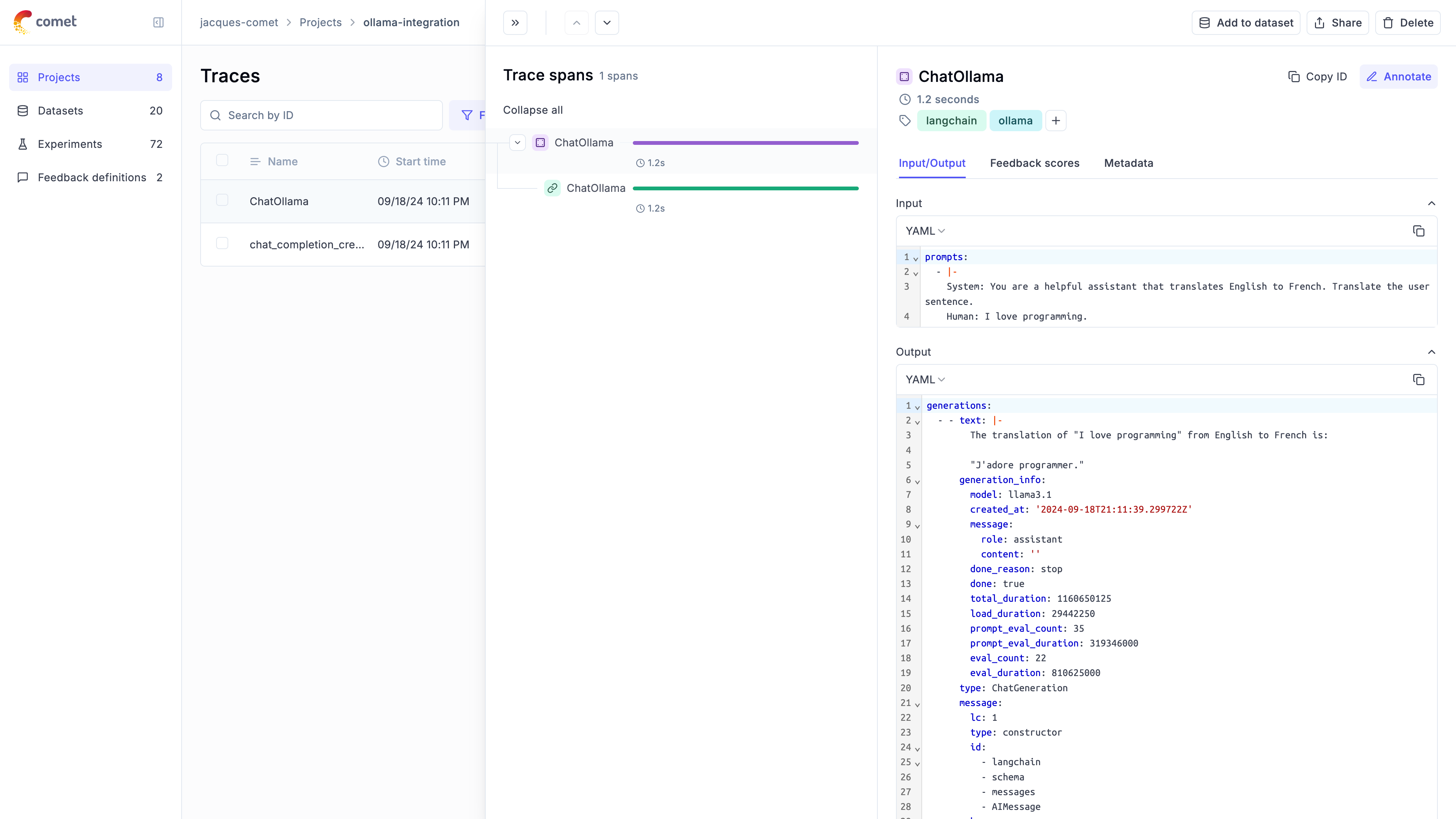

You can now go to the Opik app to see the trace: