Observability for LiveKit with Opik

LiveKit Agents is an open-source Python framework for building production-grade multimodal and voice AI agents. It provides a complete set of tools and abstractions for feeding realtime media through AI pipelines, supporting both high-performance STT-LLM-TTS voice pipelines and speech-to-speech models.

For observability, LiveKit Agents’ key feature is its built-in OpenTelemetry support, which makes it easy to monitor agent sessions, LLM calls, function tools, and TTS operations in real-time applications.

Getting started

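To use the LiveKit Agents integration with Opik, you need LiveKit Agents (the livekit-agents package, plus the plugin packages for the providers your pipeline uses) and the OpenTelemetry SDK and OTLP exporter packages (for example opentelemetry-sdk and opentelemetry-exporter-otlp) installed.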
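To confirm the packages are importable before wiring anything up, a small standard-library check can help. This is a minimal sketch: the module list assumes that livekit-agents provides livekit.agents and that the OTLP exporter package provides the OTLP/HTTP span exporter module, so adjust it to match what you actually installed.

```python
import importlib.util

# Modules this integration relies on (assumed mapping from pip packages to modules):
# livekit-agents -> livekit.agents, opentelemetry-sdk -> opentelemetry.sdk,
# opentelemetry-exporter-otlp -> the OTLP/HTTP trace exporter module.
REQUIRED_MODULES = (
    "livekit.agents",
    "opentelemetry.sdk.trace",
    "opentelemetry.exporter.otlp.proto.http.trace_exporter",
)


def missing_modules(names=REQUIRED_MODULES):
    """Return the module names that cannot be found in the current environment."""
    missing = []
    for name in names:
        try:
            found = importlib.util.find_spec(name) is not None
        except ImportError:
            # find_spec imports parent packages first; a missing parent raises here.
            found = False
        if not found:
            missing.append(name)
    return missing


if __name__ == "__main__":
    gaps = missing_modules()
    print("All packages found." if not gaps else f"Missing: {', '.join(gaps)}")
```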

Environment configuration

Configure your environment variables based on your Opik deployment: Opik Cloud, an enterprise deployment, or a self-hosted instance.

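If you are using Opik Cloud, you need to set OTEL_EXPORTER_OTLP_ENDPOINT to the Opik OTLP endpoint and OTEL_EXPORTER_OTLP_HEADERS to your API key and workspace; the OpenTelemetry OTLP exporter reads both variables when it is created.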
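As a sketch of what these variables contain for each deployment type, the helper below assembles them in Python. The endpoint URLs and the Authorization header are assumptions based on Opik's OpenTelemetry documentation rather than on this page, and opik_otel_env is a hypothetical helper name; check the values against your own deployment before relying on them.

```python
# Endpoint per deployment (assumed from Opik's OpenTelemetry docs; verify for your setup).
# "{host}" is filled in with your enterprise Comet deployment host.
OPIK_OTLP_ENDPOINTS = {
    "cloud": "https://www.comet.com/opik/api/v1/private/otel",
    "enterprise": "https://{host}/opik/api/v1/private/otel",
    "self-hosted": "http://localhost:5173/api/v1/private/otel",
}


def opik_otel_env(deployment="cloud", api_key=None, workspace="default", host=None):
    """Build the OTLP environment variables for one Opik deployment type (hypothetical helper)."""
    if deployment not in OPIK_OTLP_ENDPOINTS:
        raise ValueError(f"unknown deployment: {deployment!r}")
    if deployment == "enterprise":
        if not host:
            raise ValueError("enterprise deployments need a host")
        endpoint = OPIK_OTLP_ENDPOINTS[deployment].format(host=host)
    else:
        endpoint = OPIK_OTLP_ENDPOINTS[deployment]

    env = {"OTEL_EXPORTER_OTLP_ENDPOINT": endpoint}
    # Cloud and enterprise authenticate with an API key plus a workspace name;
    # a local self-hosted instance is assumed here to need no credentials.
    if deployment != "self-hosted":
        if not api_key:
            raise ValueError("an API key is required for this deployment")
        env["OTEL_EXPORTER_OTLP_HEADERS"] = (
            f"Authorization={api_key},Comet-Workspace={workspace}"
        )
    return env
```

You could apply the result with os.environ.update(opik_otel_env(api_key=...)) before the tracer provider is created, or export the same values from your shell or .env file.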

To log the traces to a specific project, add the projectName parameter to the OTEL_EXPORTER_OTLP_HEADERS environment variable.

You can also change the Comet-Workspace parameter if you would like to log the data to a different workspace.
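Because OTEL_EXPORTER_OTLP_HEADERS is a single comma-separated list of key=value pairs, adding projectName or changing Comet-Workspace amounts to editing one entry in that list. The hypothetical helper set_otlp_header below sketches this; it is not part of Opik or OpenTelemetry.

```python
def set_otlp_header(headers: str, key: str, value: str) -> str:
    """Add or replace one key=value pair in an OTEL_EXPORTER_OTLP_HEADERS string.

    Existing pairs keep their order; a pair whose key matches exactly
    (case-sensitive here) is overwritten in place, otherwise the pair is appended.
    """
    pairs = []
    replaced = False
    for item in headers.split(","):
        item = item.strip()
        if not item:
            continue  # tolerate empty strings and stray commas
        name = item.partition("=")[0].strip()
        if name == key:
            pairs.append(f"{key}={value}")
            replaced = True
        else:
            pairs.append(item)
    if not replaced:
        pairs.append(f"{key}={value}")
    return ",".join(pairs)
```

For example, os.environ["OTEL_EXPORTER_OTLP_HEADERS"] = set_otlp_header(os.environ.get("OTEL_EXPORTER_OTLP_HEADERS", ""), "projectName", "my-voice-agent") would send traces to a project called my-voice-agent (a placeholder name).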

Using Opik with LiveKit Agents

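LiveKit Agents includes built-in OpenTelemetry support. To enable telemetry, create an OpenTelemetry tracer provider that exports spans over OTLP and register it with LiveKit's set_tracer_provider function in your entrypoint function, before the agent session starts.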
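A minimal sketch of this setup follows. It assumes a recent livekit-agents 1.x release in which set_tracer_provider is importable from livekit.agents.telemetry, the Deepgram, OpenAI and Silero plugins (livekit-plugins-deepgram, livekit-plugins-openai, livekit-plugins-silero), and the OpenTelemetry SDK with the OTLP/HTTP exporter. The model name and agent instructions are placeholders. The exporter is created without arguments so that it picks up the endpoint and headers from the environment variables configured above.

```python
from __future__ import annotations


def setup_opik_tracing(metadata: dict | None = None):
    """Register an OTLP-exporting tracer provider with LiveKit Agents.

    OTLPSpanExporter() takes its endpoint and headers from OTEL_EXPORTER_OTLP_ENDPOINT
    and OTEL_EXPORTER_OTLP_HEADERS, so nothing Opik-specific is hard-coded here.
    """
    # Imported inside the function so the module can be loaded without these packages.
    from livekit.agents.telemetry import set_tracer_provider
    from opentelemetry.exporter.otlp.proto.http.trace_exporter import OTLPSpanExporter
    from opentelemetry.sdk.trace import TracerProvider
    from opentelemetry.sdk.trace.export import BatchSpanProcessor

    provider = TracerProvider()
    # Export spans in background batches rather than one request per span.
    provider.add_span_processor(BatchSpanProcessor(OTLPSpanExporter()))
    set_tracer_provider(provider, metadata=metadata)
    return provider


async def entrypoint(ctx) -> None:
    """LiveKit job entrypoint: enable tracing first, then start a voice session."""
    from livekit.agents import Agent, AgentSession
    from livekit.plugins import deepgram, openai, silero

    provider = setup_opik_tracing()

    async def flush_traces() -> None:
        # Push any spans still buffered in the batch processor before the job exits.
        provider.force_flush()

    ctx.add_shutdown_callback(flush_traces)

    await ctx.connect()
    session = AgentSession(
        vad=silero.VAD.load(),
        stt=deepgram.STT(),
        llm=openai.LLM(model="gpt-4o-mini"),  # placeholder model name
        tts=openai.TTS(),
    )
    await session.start(
        agent=Agent(instructions="You are a friendly voice assistant."),
        room=ctx.room,
    )
```

To launch it, the script would typically end with if __name__ == "__main__": cli.run_app(WorkerOptions(entrypoint_fnc=entrypoint)), using cli and WorkerOptions from livekit.agents. The shutdown callback matters because BatchSpanProcessor buffers spans, so the last turns of a session could otherwise be dropped if the job process exits before the next export.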

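Make sure to create a .env file containing the environment variables you configured above, as well as your LiveKit, Deepgram, and OpenAI API keys and credentials. For LiveKit these are usually LIVEKIT_URL, LIVEKIT_API_KEY, and LIVEKIT_API_SECRET; the Deepgram and OpenAI plugins read DEEPGRAM_API_KEY and OPENAI_API_KEY.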
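Before launching the agent, a quick check that every expected variable is set can save a confusing failure later. The names below are the ones the LiveKit, Deepgram and OpenAI integrations conventionally read, plus the two OTLP variables; treat the list as an assumption and trim it for your setup (a self-hosted Opik instance, for instance, may not need OTEL_EXPORTER_OTLP_HEADERS).

```python
import os

# Assumed variable names: LiveKit connection settings, provider API keys,
# and the two variables read by the OpenTelemetry OTLP exporter.
REQUIRED_VARS = (
    "LIVEKIT_URL",
    "LIVEKIT_API_KEY",
    "LIVEKIT_API_SECRET",
    "DEEPGRAM_API_KEY",
    "OPENAI_API_KEY",
    "OTEL_EXPORTER_OTLP_ENDPOINT",
    "OTEL_EXPORTER_OTLP_HEADERS",
)


def missing_env_vars(env=None, required=REQUIRED_VARS):
    """Return required names that are unset or empty in env (defaults to os.environ)."""
    env = os.environ if env is None else env
    return [name for name in required if not env.get(name)]
```

If you load the .env file with python-dotenv's load_dotenv(), run the check after that call; a non-empty result names the variables that are still missing.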

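Then, run your agent script with the LiveKit Agents CLI in console mode, for example python agent.py console, where agent.py stands for your own script's filename.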

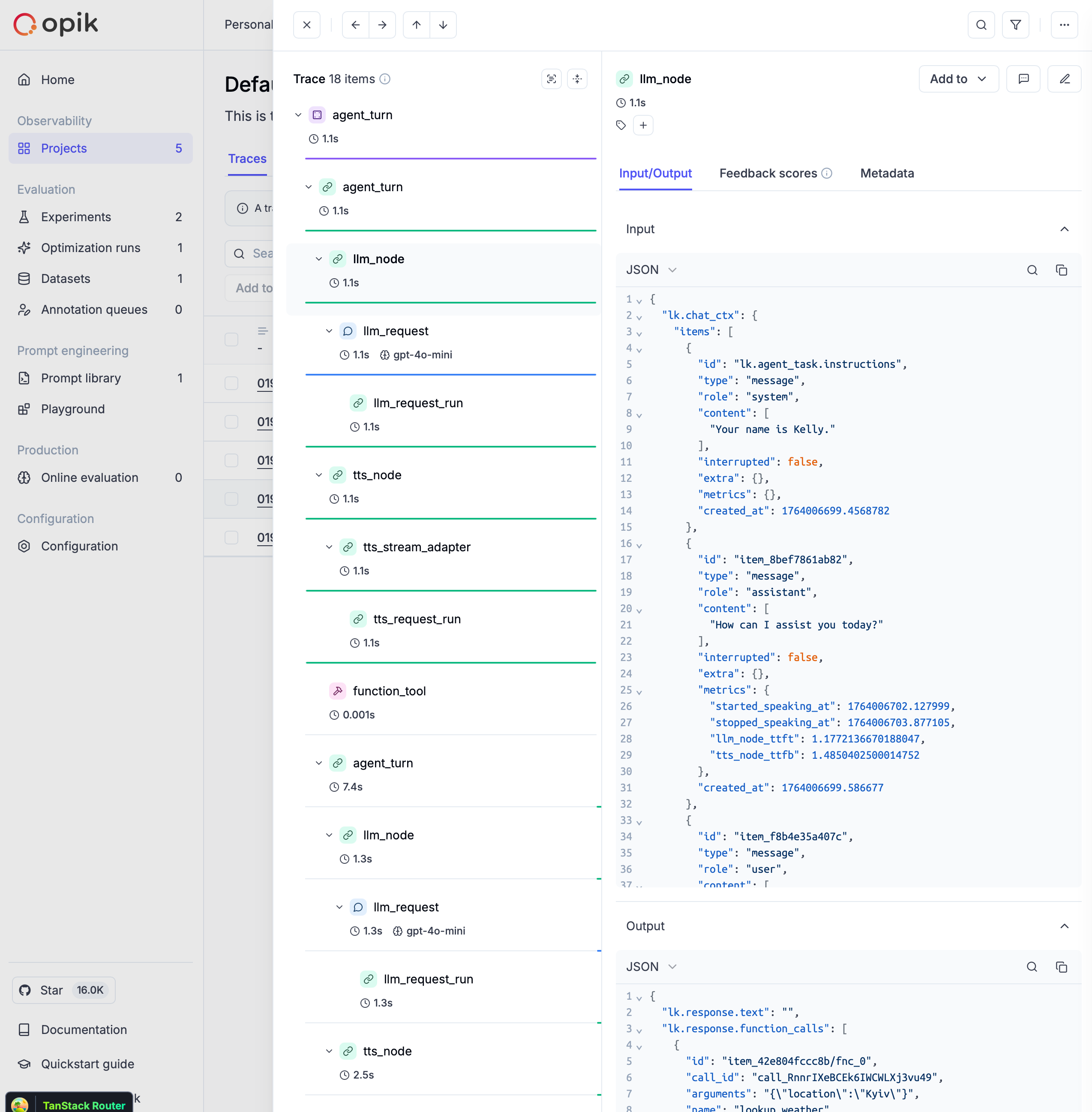

After a few seconds, you should see traces in Comet ML:

What gets traced

With this setup, your LiveKit agent will automatically trace:

- Session events: Session start and end with metadata

- Agent turns: Complete conversation turns with timing

- LLM operations: Model calls, prompts, responses, and token usage

- Function tools: Tool executions with inputs and outputs

- TTS operations: Text-to-speech conversions with audio metadata

- STT operations: Speech-to-text transcriptions

- End-of-turn detection: Conversation flow events

Further improvements

If you have any questions or suggestions for improving the LiveKit Agents integration, please open an issue on our GitHub repository.