Observability for Google Gemini (TypeScript) with Opik

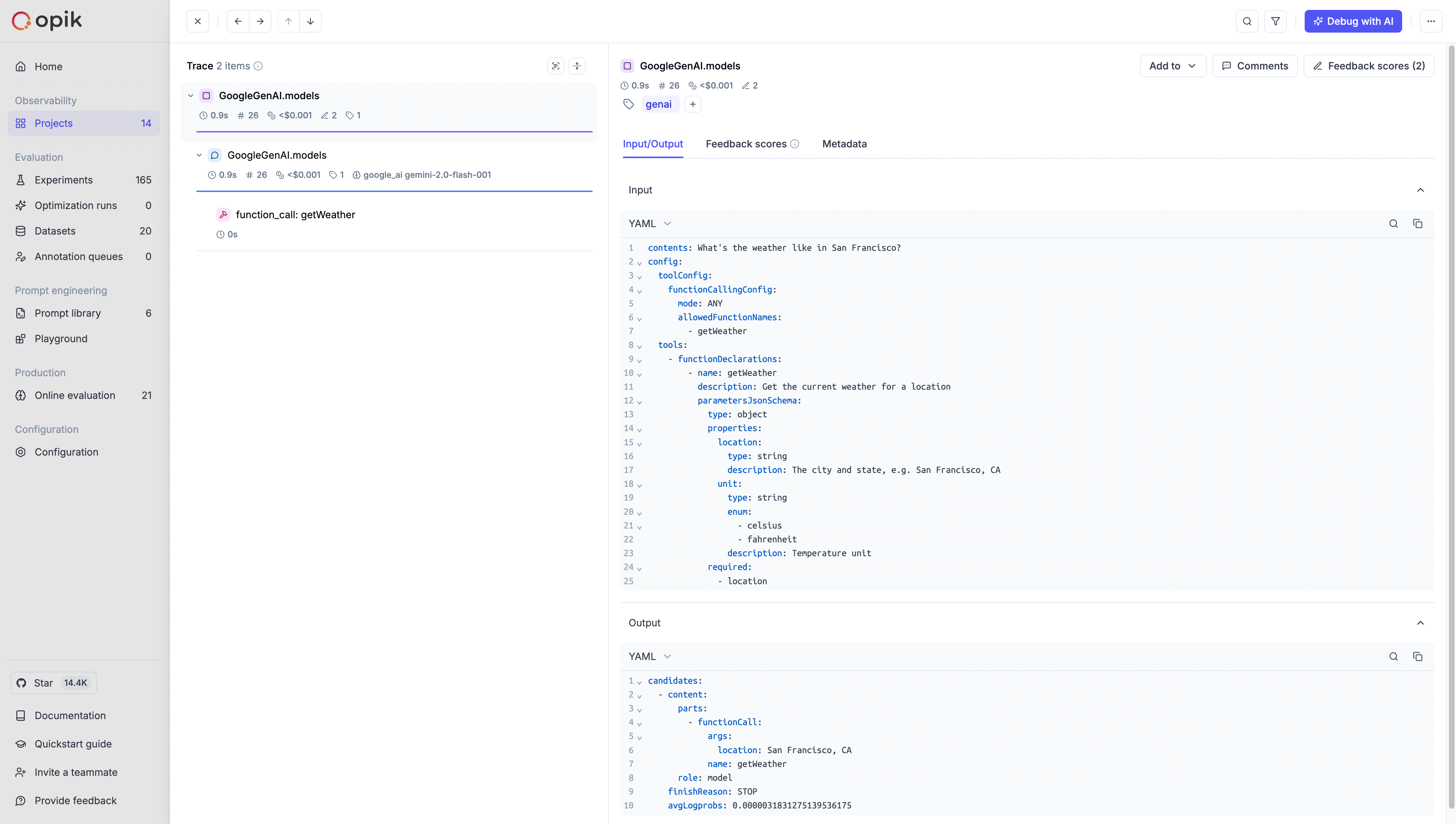

Opik provides seamless integration with the Google Generative AI Node.js SDK (@google/genai) through the opik-gemini package, allowing you to trace, monitor, and debug your Gemini API calls.

Features

- Comprehensive Tracing: Automatically trace Gemini API calls, including text generation, chat, and multimodal interactions

- Hierarchical Visualization: View your Gemini requests as structured traces with parent-child relationships

- Detailed Metadata Capture: Record model names, prompts, completions, token usage, and custom metadata

- Error Handling: Capture and visualize errors encountered during Gemini API interactions

- Custom Tagging: Add custom tags to organize and filter your traces

- Streaming Support: Full support for streamed responses with token-by-token tracing

- Vertex AI Support: Works with both Google AI Studio and Vertex AI endpoints

Installation

Option 1: Using npm

    npm install opik-gemini @google/genai

Option 2: Using yarn

    yarn add opik-gemini @google/genai

Requirements

- Node.js ≥ 18

- Google Generative AI SDK (@google/genai ≥ 1.0.0)

- Opik SDK (automatically installed as a dependency)

Note: The official Google GenAI SDK package is @google/genai (not @google/generative-ai). This is Google DeepMind’s unified SDK for both the Gemini Developer API and Vertex AI.

Basic Usage

Using with Google Generative AI Client

To trace your Gemini API calls, you need to wrap your Gemini client instance with the trackGemini function:
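A minimal sketch of this pattern follows. It assumes the wrapped client keeps the models.generateContent surface of GoogleGenAI and adds the flush() method mentioned under Troubleshooting. The TrackedGemini type, the generateTraced helper, and the model id are illustrative names, not part of either package. The client is passed in as a parameter so the sketch can be exercised without network access; the commented lines show how it would likely be constructed.

```typescript
// Structural stand-in for the wrapped client (assumed shape): the same
// models.generateContent surface as GoogleGenAI, plus flush() from Opik.
interface TrackedGemini {
  models: {
    generateContent(request: { model: string; contents: string }): Promise<{ text?: string }>;
  };
  flush(): Promise<void>;
}

// In an application the client would be built roughly like this (assumed wiring):
//   import { GoogleGenAI } from "@google/genai";
//   import { trackGemini } from "opik-gemini";
//   const genAI = new GoogleGenAI({ apiKey: process.env.GEMINI_API_KEY });
//   const trackedGenAI = trackGemini(genAI);

// Hypothetical helper: one traced text generation, then flush buffered traces.
async function generateTraced(client: TrackedGemini, prompt: string): Promise<string> {
  const response = await client.models.generateContent({
    model: "gemini-2.0-flash", // example model id; substitute the one you use
    contents: prompt,
  });
  await client.flush(); // send pending traces before the process can exit
  return response.text ?? "";
}
```

Calling generateTraced(trackedGenAI, "Hello, how can you help me today?") should then produce one trace per call in your Opik project.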

Using with Streaming Responses

The integration fully supports Gemini’s streaming responses:
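As a hedged sketch, the helper below drains a stream completely before flushing, which is what allows the full response to be recorded. It assumes the wrapped client exposes models.generateContentStream resolving to an async iterable of chunks with an optional text field, as @google/genai does. StreamingGemini and streamTraced are illustrative names.

```typescript
// Assumed shape: the wrapped client keeps generateContentStream, which resolves
// to an async iterable of chunks, as in @google/genai.
interface StreamingGemini {
  models: {
    generateContentStream(
      request: { model: string; contents: string },
    ): Promise<AsyncIterable<{ text?: string }>>;
  };
  flush(): Promise<void>;
}

// Hypothetical helper: consume every chunk, then flush the buffered traces.
async function streamTraced(
  client: StreamingGemini,
  prompt: string,
  onChunk: (text: string) => void = () => {},
): Promise<string> {
  const stream = await client.models.generateContentStream({
    model: "gemini-2.0-flash", // example model id
    contents: prompt,
  });
  let full = "";
  for await (const chunk of stream) {
    const piece = chunk.text ?? ""; // some chunks may carry no text
    onChunk(piece); // e.g. (t) => process.stdout.write(t)
    full += piece;
  }
  await client.flush();
  return full;
}
```

Breaking out of the loop early would leave the stream partly consumed, so the recorded output could be incomplete.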

Advanced Configuration

The trackGemini function accepts an optional configuration object to customize the integration:
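The sketch below builds such a configuration object. The option names traceMetadata, generationName, and client are assumptions modelled on Opik's other TypeScript integrations; only parent is named elsewhere in this guide, so check the opik-gemini typings before relying on them. buildTrackingOptions is a hypothetical helper.

```typescript
// Assumed option names; verify them against the opik-gemini type definitions.
interface TrackGeminiOptionsSketch {
  traceMetadata?: { tags?: string[]; [key: string]: unknown };
  generationName?: string;
  parent?: unknown; // an existing Opik trace or span
  client?: unknown; // a pre-configured Opik client
}

// Hypothetical helper that derives tags and a generation name per environment.
function buildTrackingOptions(environment: string, feature: string): TrackGeminiOptionsSketch {
  return {
    generationName: `${feature}-gemini-call`,
    traceMetadata: {
      tags: ["gemini", environment],
      environment,
      feature,
    },
  };
}

// Assumed usage:
//   const trackedGenAI = trackGemini(genAI, buildTrackingOptions("production", "search"));
```

Keeping the options in one helper makes it easier to apply the same tags to every wrapped client in a service.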

Using with Vertex AI

The integration also supports Google’s Vertex AI platform. Configure your Gemini client for Vertex AI and wrap it with trackGemini:
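A minimal sketch of the client options, assuming the vertexai, project, and location fields accepted by the GoogleGenAI constructor. The vertexOptionsFromEnv helper, the environment variable names, and the default region are illustrative assumptions.

```typescript
// Constructor options for new GoogleGenAI(...) in Vertex AI mode.
interface VertexClientOptions {
  vertexai: true;
  project: string;
  location: string;
}

// Hypothetical helper: read project settings from the environment and fail
// early when the project id is missing. Adjust variable names to your setup.
function vertexOptionsFromEnv(env: Record<string, string | undefined>): VertexClientOptions {
  const project = env["GOOGLE_CLOUD_PROJECT"];
  if (!project) {
    throw new Error("GOOGLE_CLOUD_PROJECT is not set");
  }
  return {
    vertexai: true,
    project,
    location: env["GOOGLE_CLOUD_LOCATION"] ?? "us-central1", // assumed default region
  };
}

// Assumed wiring:
//   const genAI = new GoogleGenAI(vertexOptionsFromEnv(process.env));
//   const trackedGenAI = trackGemini(genAI);
```

After wrapping, calls go through trackedGenAI exactly as with an API-key client.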

Chat Conversations

Track multi-turn chat conversations with Gemini:
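One way to sketch this is to keep the conversation history yourself and pass it as the contents array on each models.generateContent call, using Gemini's role and parts format. Whether the wrapper also traces the SDK's chat session helpers is not stated here, so this sketch avoids them. ChatTurn, ChatCapableGemini, and sendTurn are illustrative names.

```typescript
// One turn in Gemini's content format: role is "user" or "model".
interface ChatTurn {
  role: "user" | "model";
  parts: { text: string }[];
}

// Assumed shape of the wrapped client for this sketch.
interface ChatCapableGemini {
  models: {
    generateContent(request: { model: string; contents: ChatTurn[] }): Promise<{ text?: string }>;
  };
}

// Hypothetical helper: send the history plus a new user message and return
// the extended history. The input array is not mutated.
async function sendTurn(
  client: ChatCapableGemini,
  history: ChatTurn[],
  message: string,
): Promise<ChatTurn[]> {
  const contents: ChatTurn[] = [...history, { role: "user", parts: [{ text: message }] }];
  const response = await client.models.generateContent({
    model: "gemini-2.0-flash", // example model id
    contents,
  });
  return [...contents, { role: "model", parts: [{ text: response.text ?? "" }] }];
}
```

Each call to sendTurn is a separate generateContent request, so each turn would appear as its own traced call unless you group the turns under a shared parent trace.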

Troubleshooting

Missing Traces: Ensure your Gemini and Opik API keys are correct and that you’re calling await trackedGenAI.flush() before your application exits.
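A small sketch of one way to make the flush hard to forget: run the application logic inside try/finally so flush() is awaited even when the logic throws. It assumes only that the wrapped client exposes flush() as described above; withFlush is a hypothetical helper.

```typescript
// Anything with an async flush(), such as the client returned by trackGemini.
interface Flushable {
  flush(): Promise<void>;
}

// Hypothetical helper: run `work`, and flush traces whether it succeeds or throws.
async function withFlush<T>(client: Flushable, work: () => Promise<T>): Promise<T> {
  try {
    return await work();
  } finally {
    await client.flush();
  }
}

// Assumed usage:
//   await withFlush(trackedGenAI, () => main(trackedGenAI));
```

The original error still propagates to the caller after the flush completes.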

Incomplete Data: For streaming responses, make sure you’re consuming the entire stream before ending your application.

Hierarchical Traces: To create proper parent-child relationships, use the parent option in the configuration when you want Gemini calls to be children of another trace.
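The sketch below shows the intended order of operations: create the parent trace, wrap the client with that parent, make the Gemini call, end the parent, then flush. The trace({ name, input }) and end() calls reflect the Opik TypeScript client as best understood here, and passing client alongside parent is an assumption. All interface names and answerUnderParent are illustrative.

```typescript
// Structural stand-ins for the Opik objects this sketch touches (assumed shapes).
interface ParentTraceLike {
  end(): void;
}
interface OpikLike {
  trace(args: { name: string; input?: unknown }): ParentTraceLike;
}
interface ChildTracked {
  models: {
    generateContent(request: { model: string; contents: string }): Promise<{ text?: string }>;
  };
  flush(): Promise<void>;
}

// `wrap` stands in for: (parent) => trackGemini(genAI, { client: opik, parent })
async function answerUnderParent(
  opik: OpikLike,
  wrap: (parent: ParentTraceLike) => ChildTracked,
  question: string,
): Promise<string> {
  const parent = opik.trace({ name: "qa-pipeline", input: { question } });
  const tracked = wrap(parent);
  try {
    const response = await tracked.models.generateContent({
      model: "gemini-2.0-flash", // example model id
      contents: question,
    });
    return response.text ?? "";
  } finally {
    parent.end(); // close the parent before sending
    await tracked.flush();
  }
}
```

With this arrangement the Gemini call should appear nested under the qa-pipeline trace instead of as a separate top-level trace.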

Performance Impact: The Opik integration adds minimal overhead to your Gemini API calls.

Vertex AI Authentication: When using Vertex AI, ensure your Google Cloud credentials (for example, Application Default Credentials) are configured for the project you are targeting.