Active Contour Models (Snakes): Unraveling the Secrets of Image Segmentation and Boundary Detection

Imagine a world where computers can perceive and understand images! The field of computer vision has brought us a step closer to this reality by developing sophisticated algorithms that automatically extract meaningful information from visual data. Image segmentation, a fundamental task in computer vision, plays a crucial role in object recognition, scene understanding, and medical imaging.

One remarkable technique that has stood the test of time is the Active Contour Model, affectionately known as “snakes.” In this article, we delve into the depths of snakes and explore how they gracefully dance through images, seeking and capturing object boundaries with precision. We will also contrast them with formidable deep learning-based segmentation techniques, bringing out the unique strengths of each approach.

The Birth of Snakes: Origins and Motivation

In 1987, a group of visionaries (Michael Kass, Andrew Witkin, and Demetri Terzopoulos) presented a groundbreaking idea that would reshape image segmentation: the active contour model, introduced in their paper “Snakes: Active Contour Models.” The concept was inspired by the analogy of snakes actively exploring their surroundings. Just as snakes adapt their shape to the contours of their environment, these models aimed to adapt to the contours of objects in images.

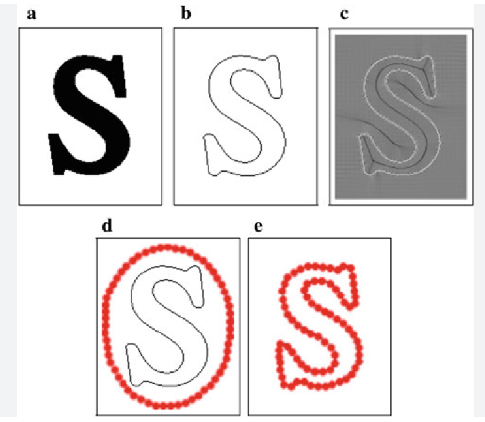

Setting the Stage: Snake Initialization

A snake’s success relies heavily on its initial placement on the image canvas, because it only settles into a nearby energy minimum rather than searching the whole image. But how do we start this dance? There are several ways to initialize the snake. One common option uses edge detection: the detected edges trace the object’s silhouette and show where to place the initial contour, and the dance begins from there.
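To make the edge-based option concrete, here is a minimal sketch in Python with NumPy, assuming a grayscale image stored as a 2D array. It computes a central-difference gradient magnitude, keeps the strongest edge pixels, and places a circle that encloses them. The function names, the 50% threshold, and the 3-pixel margin are illustrative choices, not a standard recipe.

```python
import numpy as np

def gradient_magnitude(image):
    """Central-difference gradient magnitude of a 2D image."""
    d_row, d_col = np.gradient(image.astype(float))
    return np.hypot(d_row, d_col)

def init_snake_from_edges(image, n_points=50, threshold=0.5, margin=3.0):
    """Circle enclosing every 'strong' edge pixel (one simple heuristic among many)."""
    mag = gradient_magnitude(image)
    rows, cols = np.nonzero(mag >= threshold * mag.max())  # strong-edge pixels
    center = np.array([rows.mean(), cols.mean()])
    # Distance to the farthest strong-edge pixel, plus a safety margin.
    radius = np.hypot(rows - center[0], cols - center[1]).max() + margin
    t = np.linspace(0.0, 2.0 * np.pi, n_points, endpoint=False)
    # Points are stored as (row, col) pairs.
    return center + radius * np.column_stack([np.sin(t), np.cos(t)])

# Usage on a synthetic image: a bright square on a dark background.
img = np.zeros((64, 64))
img[20:44, 20:44] = 1.0
snake0 = init_snake_from_edges(img)
print(snake0.shape)  # (50, 2)
```

Because the snake only searches locally, starting slightly outside the object is a common choice; the margin keeps the first contour from sitting exactly on the edge pixels.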

Another approach involves user interaction, where a user draws an approximate boundary around the object. The snake then gracefully takes over, refining the boundary with its smooth motion. This initialization allows the snake to showcase its skills while following the user’s guidance.
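When the user’s rough outline arrives as a handful of clicked vertices, it is usually resampled into evenly spaced points before the snake starts moving, since the internal energy is computed from neighboring points. A minimal NumPy sketch, assuming the clicks form an ordered, closed polygon with no repeated consecutive vertices (`resample_closed_polygon` is a hypothetical helper name):

```python
import numpy as np

def resample_closed_polygon(vertices, n_points):
    """Resample a closed polygon to n_points spaced evenly along its perimeter.

    Assumes consecutive vertices are distinct (np.interp needs increasing arc length).
    """
    pts = np.asarray(vertices, dtype=float)
    closed = np.vstack([pts, pts[:1]])                   # repeat the first vertex to close the loop
    seg_len = np.hypot(*np.diff(closed, axis=0).T)       # length of each polygon edge
    arc = np.concatenate([[0.0], np.cumsum(seg_len)])    # arc length at each vertex
    targets = np.linspace(0.0, arc[-1], n_points, endpoint=False)
    # Interpolate each coordinate as a function of arc length.
    return np.column_stack([np.interp(targets, arc, closed[:, k]) for k in range(2)])

# Four rough "clicks" around an object, resampled into an 8-point snake.
clicks = [(0, 0), (10, 0), (10, 10), (0, 10)]
snake0 = resample_closed_polygon(clicks, 8)
print(snake0.tolist())
# [[0.0, 0.0], [5.0, 0.0], [10.0, 0.0], [10.0, 5.0], [10.0, 10.0], [5.0, 10.0], [0.0, 10.0], [0.0, 5.0]]
```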

Optimization: Snake Convergence

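Rhythm is necessary for a beautiful performance. The snake, too, follows an iterative optimization process to converge towards the object’s actual boundary. Its moves are guided by an energy functional that balances internal energy, which keeps the contour smooth and continuous, against external energy, which pulls it toward image features such as edges. The snake seeks the shape with the lowest total energy.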
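For reference, the original formulation by Kass, Witkin, and Terzopoulos represents the snake as a parametric curve $\mathbf{v}(s) = (x(s), y(s))$ with $s \in [0, 1]$ and minimizes

$$
E_{\text{snake}}^{*} = \int_{0}^{1} \Big[ E_{\text{int}}\big(\mathbf{v}(s)\big) + E_{\text{image}}\big(\mathbf{v}(s)\big) + E_{\text{con}}\big(\mathbf{v}(s)\big) \Big] \, ds
$$

$$
E_{\text{int}} = \tfrac{1}{2}\Big( \alpha(s)\,\lvert \mathbf{v}_s(s) \rvert^{2} + \beta(s)\,\lvert \mathbf{v}_{ss}(s) \rvert^{2} \Big), \qquad E_{\text{edge}} = -\lvert \nabla I(x, y) \rvert^{2}
$$

Here $\alpha$ weights the first-derivative (tension) term, $\beta$ the second-derivative (rigidity) term, and $E_{\text{con}}$ holds optional user constraints. The edge functional is one of the image terms in the original paper (alongside line and termination terms); it is lowest where the image gradient is strongest, and in practice $I$ is often smoothed with a Gaussian first.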

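Using optimization algorithms such as gradient descent, the snake performs its intricate steps, adjusting its shape and position to lower its total energy. With each iteration, the snake moves closer to an energy minimum. That minimum is local, which is why a sensible initialization matters: started near the object, the snake settles onto its boundary, a testament to the elegance and versatility of active contour models.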
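The iteration can be sketched with the semi-implicit scheme from the original paper. After discretizing the closed contour into $n$ points, the internal terms become a pentadiagonal matrix $A$ (with wrap-around), and each step solves $(A + \gamma I)\,\mathbf{v}_t = \gamma\,\mathbf{v}_{t-1} - \nabla E_{\text{ext}}(\mathbf{v}_{t-1})$: a gradient-descent step of size $1/\gamma$ that treats the internal forces implicitly and the image forces explicitly. The NumPy sketch below assumes a contour stored as (row, col) points, uses the edge energy $-\lvert\nabla(G_\sigma * I)\rvert^2$ normalized to $[-1, 0]$, and picks `alpha`, `beta`, `gamma`, and `sigma` for this synthetic example only; they are not recommended defaults.

```python
import numpy as np

def gaussian_smooth(image, sigma):
    """Separable Gaussian blur with NumPy only (zero padding at the borders)."""
    half = int(3 * sigma)
    x = np.arange(-half, half + 1)
    kernel = np.exp(-x**2 / (2.0 * sigma**2))
    kernel /= kernel.sum()
    rows = np.apply_along_axis(np.convolve, 1, image, kernel, mode="same")
    return np.apply_along_axis(np.convolve, 0, rows, kernel, mode="same")

def bilinear(field, r, c):
    """Sample a 2D array at fractional (row, col) positions."""
    r = np.clip(r, 0, field.shape[0] - 1.001)
    c = np.clip(c, 0, field.shape[1] - 1.001)
    r0, c0 = np.floor(r).astype(int), np.floor(c).astype(int)
    dr, dc = r - r0, c - c0
    return (field[r0, c0] * (1 - dr) * (1 - dc) + field[r0 + 1, c0] * dr * (1 - dc)
            + field[r0, c0 + 1] * (1 - dr) * dc + field[r0 + 1, c0 + 1] * dr * dc)

def internal_matrix(n, alpha, beta):
    """Circulant pentadiagonal matrix of the discretized tension + rigidity terms."""
    coeffs = {-2: beta, -1: -alpha - 4 * beta, 0: 2 * alpha + 6 * beta,
              1: -alpha - 4 * beta, 2: beta}
    return sum(c * np.roll(np.eye(n), k, axis=1) for k, c in coeffs.items())

def evolve_snake(image, snake, alpha=0.5, beta=0.1, gamma=1.0, w_edge=1.0,
                 sigma=2.0, n_iter=500):
    """Iterate (A + gamma*I) v_t = gamma * v_{t-1} - grad E_ext(v_{t-1})."""
    grad_sq = np.sum(np.square(np.gradient(gaussian_smooth(image.astype(float), sigma))), axis=0)
    e_ext = -w_edge * grad_sq / grad_sq.max()    # lowest energy on the strongest edges
    g_row, g_col = np.gradient(e_ext)             # dE_ext/drow, dE_ext/dcol
    n = len(snake)
    step = np.linalg.inv(internal_matrix(n, alpha, beta) + gamma * np.eye(n))
    v = np.array(snake, dtype=float)
    for _ in range(n_iter):
        force = np.column_stack([bilinear(g_row, v[:, 0], v[:, 1]),
                                 bilinear(g_col, v[:, 0], v[:, 1])])
        v = step @ (gamma * v - force)   # implicit internal term, explicit image term
    return v

# Usage: a circle of radius 20 placed around a bright disk of radius 10.
rr, cc = np.mgrid[0:64, 0:64]
disk = ((rr - 32) ** 2 + (cc - 32) ** 2 <= 10 ** 2).astype(float)
t = np.linspace(0.0, 2.0 * np.pi, 60, endpoint=False)
init = np.column_stack([32 + 20 * np.sin(t), 32 + 20 * np.cos(t)])
final = evolve_snake(disk, init)
print(np.hypot(final[:, 0] - 32, final[:, 1] - 32).mean())
```

Far from the blurred edge the image force is essentially zero, so the tension term shrinks the circle; once the contour reaches the edge, the image term holds it there. With `alpha` set to zero, a contour started outside the edge’s reach would have nothing to pull it in, which is the initialization sensitivity described above.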

Active Contour Models: Mastering Object Boundary Detection

In the previous section, we introduced the enigmatic concept of active contour models, known as “snakes,” and explored their elegant dance toward accurate image segmentation. Now, we delve deeper into the intricacies of these models, understanding how they gracefully adapt to varying object boundaries and handle complex shapes.

Flexibility and Robustness

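One of the most remarkable features of active contour models is their ability to handle complex object shapes. Snakes can flex and bend, adapting themselves to the intricacies of the object’s contour. Whether it’s a smooth circle, an irregular polygon, or a winding serpentine, the snake gracefully glides along the edges, following their curves and twists. Deep, narrow concavities are the exception: the classic formulation has trouble pulling the contour into them, a limitation that motivated variants such as gradient vector flow (GVF) snakes.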

This flexibility allows active contour models to be applied in various domains, from medical image analysis, where they accurately delineate organs, to industrial inspections, where they identify intricate defects on complex surfaces. Active contour models demonstrate their prowess across diverse image segmentation tasks.

The Struggle with Unclear Boundaries

Despite their grace, active contour models face challenges when encountering objects with unclear or weak boundaries. The snake’s performance can falter without precise edges. The external energy term heavily relies on edge information, and the snake may struggle to converge accurately when edges are faint or ambiguous.

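However, researchers have devised various solutions to address this issue. Enriching the gradient information (for example, by smoothing the image before taking gradients, which widens the snake’s capture range), adding texture cues, or incorporating region-based information, as in the Chan-Vese “active contours without edges” model, which compares intensities inside and outside the contour instead of relying on edges, can help the snake navigate these challenging situations.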
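As one illustration of the region-based idea, the sketch below moves each snake point along its outward normal with a speed taken from the Chan-Vese fitting terms: positive where the pixel looks more like the object (mean intensity `c_in`) than the background (`c_out`), negative otherwise. It assumes NumPy, a contour stored as (row, col) points, and that `c_in` and `c_out` are known in advance; the Chan-Vese model itself re-estimates them from the current inside and outside regions and is usually implemented with level sets rather than points. The step size and smoothing weight are illustrative.

```python
import numpy as np

def bilinear(field, r, c):
    """Sample a 2D array at fractional (row, col) positions."""
    r = np.clip(r, 0, field.shape[0] - 1.001)
    c = np.clip(c, 0, field.shape[1] - 1.001)
    r0, c0 = np.floor(r).astype(int), np.floor(c).astype(int)
    dr, dc = r - r0, c - c0
    return (field[r0, c0] * (1 - dr) * (1 - dc) + field[r0 + 1, c0] * dr * (1 - dc)
            + field[r0, c0 + 1] * (1 - dr) * dc + field[r0 + 1, c0 + 1] * dr * dc)

def outward_normals(v):
    """Unit outward normals of a closed contour stored as (row, col) points."""
    tangent = np.roll(v, -1, axis=0) - np.roll(v, 1, axis=0)    # v[i+1] - v[i-1]
    normal = np.column_stack([tangent[:, 1], -tangent[:, 0]])
    # The sign of the shoelace area tells which way the contour is traversed.
    area = 0.5 * np.sum(v[:, 0] * np.roll(v[:, 1], -1) - np.roll(v[:, 0], -1) * v[:, 1])
    normal *= np.sign(area)
    return normal / np.linalg.norm(normal, axis=1, keepdims=True)

def region_snake(image, snake, c_in, c_out, step=0.5, smooth=0.2, n_iter=200):
    """Region-driven snake: points expand over object-like pixels, retreat from background."""
    v = np.array(snake, dtype=float)
    scale = (c_in - c_out) ** 2
    for _ in range(n_iter):
        intensity = bilinear(image, v[:, 0], v[:, 1])
        # > 0 where the pixel fits the object model better than the background model.
        speed = ((intensity - c_out) ** 2 - (intensity - c_in) ** 2) / scale
        v = v + step * speed[:, None] * outward_normals(v)
        # Light tension: nudge each point toward the midpoint of its two neighbors.
        v = v + smooth * (0.5 * (np.roll(v, 1, axis=0) + np.roll(v, -1, axis=0)) - v)
    return v

# A faint disk (contrast 0.1) and a small starting circle inside it.
rr, cc = np.mgrid[0:80, 0:80]
faint = np.where((rr - 40) ** 2 + (cc - 40) ** 2 <= 15 ** 2, 0.5, 0.4)
t = np.linspace(0.0, 2.0 * np.pi, 60, endpoint=False)
start = np.column_stack([40 + 5 * np.sin(t), 40 + 5 * np.cos(t)])
result = region_snake(faint, start, c_in=0.5, c_out=0.4)
print(np.hypot(result[:, 0] - 40, result[:, 1] - 40).mean())
```

The speed depends on which intensity model a pixel matches, not on how steep the edge is, which is why this kind of term helps where edges are weak.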

Active Contour Models in Real-Time

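One of the most appealing aspects of active contour models is their computational efficiency. A snake is only a few dozen to a few hundred points, and in a video each frame can start from the contour found in the previous frame, so only a few iterations are needed per frame. This makes real-time segmentation of live video streams feasible: snakes can smoothly and swiftly adapt to object movements, making them suitable for applications such as object tracking and human-computer interaction.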

Real-time segmentation using snakes has also been applied in robotics, helping robots navigate and interact with their environment autonomously. The ability to perform on-the-fly segmentation showcases the elegance and efficiency of active contour models.
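Warm starting is what keeps tracking cheap: each new frame starts from the contour found in the previous one. The sketch below assumes NumPy and a bright object (value 1) on a dark background (value 0) in every frame, and uses a simple region-driven update whose speed, 2I - 1, is positive on object pixels and negative on background. It runs 10 iterations per frame and resamples the points by arc length after each frame to keep them evenly spaced. The helper names and parameters are hypothetical, and a practical tracker would also have to cope with clutter, occlusion, and fast motion.

```python
import numpy as np

def bilinear(field, r, c):
    """Sample a 2D array at fractional (row, col) positions."""
    r = np.clip(r, 0, field.shape[0] - 1.001)
    c = np.clip(c, 0, field.shape[1] - 1.001)
    r0, c0 = np.floor(r).astype(int), np.floor(c).astype(int)
    dr, dc = r - r0, c - c0
    return (field[r0, c0] * (1 - dr) * (1 - dc) + field[r0 + 1, c0] * dr * (1 - dc)
            + field[r0, c0 + 1] * (1 - dr) * dc + field[r0 + 1, c0 + 1] * dr * dc)

def outward_normals(v):
    """Unit outward normals of a closed (row, col) contour, oriented via the shoelace area."""
    tangent = np.roll(v, -1, axis=0) - np.roll(v, 1, axis=0)
    normal = np.column_stack([tangent[:, 1], -tangent[:, 0]])
    area = 0.5 * np.sum(v[:, 0] * np.roll(v[:, 1], -1) - np.roll(v[:, 0], -1) * v[:, 1])
    normal *= np.sign(area)
    return normal / np.linalg.norm(normal, axis=1, keepdims=True)

def resample(v, n):
    """Redistribute contour points evenly by arc length."""
    closed = np.vstack([v, v[:1]])
    arc = np.concatenate([[0.0], np.cumsum(np.hypot(*np.diff(closed, axis=0).T))])
    targets = np.linspace(0.0, arc[-1], n, endpoint=False)
    return np.column_stack([np.interp(targets, arc, closed[:, k]) for k in range(2)])

def refine(frame, v, n_iter=10, step=0.5):
    """A few region-driven snake steps on one frame, then resampling."""
    for _ in range(n_iter):
        speed = 2.0 * bilinear(frame, v[:, 0], v[:, 1]) - 1.0   # +1 on object, -1 on background
        v = v + step * speed[:, None] * outward_normals(v)
    return resample(v, len(v))

# A disk of radius 12 moving 2 pixels to the right per frame.
rr, cc = np.mgrid[0:80, 0:120]
t = np.linspace(0.0, 2.0 * np.pi, 60, endpoint=False)
snake = np.column_stack([40 + 12 * np.sin(t), 30 + 12 * np.cos(t)])
for k in range(21):
    frame = ((rr - 40) ** 2 + (cc - (30 + 2 * k)) ** 2 <= 12 ** 2).astype(float)
    snake = refine(frame, snake)   # warm start: the previous contour seeds this frame
print(snake.mean(axis=0))
```

Each frame costs only a handful of vectorized updates over 60 points, because the contour never has to travel farther than the object moved since the last frame.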

Deep Learning Takes the Stage: A Symphony of Image Segmentation with Neural Networks

In the previous sections, we explored the captivating world of active contour models (snakes) and their approach to accurate image segmentation. Now, we venture into a new realm, where neural networks take the stage and tackle image segmentation and boundary detection by learning from data.

The Rise of Deep Learning in Computer Vision

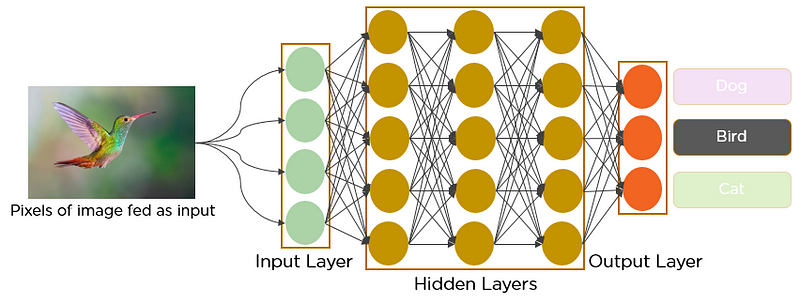

Deep learning has transformed computer vision during the past decade, reshaping how images are analyzed. Convolutional Neural Networks (CNNs) have enthralled audiences with their prodigious capacity to extract nuanced patterns and features from massive volumes of data. CNNs orchestrate a symphony of convolutions, pooling, and activations to create representations that reveal the essence of the visual world.

The Convolutional Ballet: From Pixels to Features

In deep learning, the pixels in an image take center stage as the raw notes. The CNN’s first act begins with convolutions, where learned filters glide across the image: early layers respond to edges and textures, and deeper layers combine them into shapes and object parts. These learned features provide abstract representations that encapsulate the essence of the objects.

This ballet of convolutions allows deep learning models to generalize across various objects and scenes. The power of CNNs lies in their ability to identify meaningful patterns, enabling them to detect objects of varying shapes and appearances, even in the presence of noise and clutter.
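A toy version of this first act can be written with NumPy. A hand-picked Sobel kernel stands in for a learned first-layer filter (in a trained CNN the weights come from data), followed by a ReLU activation and 2x2 max pooling. Like CNN frameworks, `conv2d_valid` computes a cross-correlation without flipping the kernel; the loop-based implementation favors clarity over speed.

```python
import numpy as np

def conv2d_valid(image, kernel):
    """'Valid' 2D cross-correlation, the operation a CNN convolution layer computes."""
    kh, kw = kernel.shape
    out = np.empty((image.shape[0] - kh + 1, image.shape[1] - kw + 1))
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

def relu(x):
    """Keep positive responses, zero out the rest."""
    return np.maximum(x, 0.0)

def max_pool2x2(x):
    """2x2 max pooling with stride 2 (assumes even height and width)."""
    h, w = x.shape
    return x.reshape(h // 2, 2, w // 2, 2).max(axis=(1, 3))

# A vertical step edge: dark on the left, bright on the right.
image = np.zeros((6, 6))
image[:, 3:] = 1.0
sobel_x = np.array([[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]], dtype=float)  # responds to vertical edges
features = relu(conv2d_valid(image, sobel_x))
pooled = max_pool2x2(features)
print(features[0])  # [0. 4. 4. 0.]
print(pooled)
```

The feature map lights up only where the window straddles the edge, and pooling halves the resolution while keeping the strongest response in each 2x2 block.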

U-Net: Embracing Semantic Segmentation

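One of the most enchanting acts in deep learning is U-Net, introduced by Ronneberger, Fischer, and Brox in 2015 for biomedical image segmentation. With its encoder-decoder design, U-Net gracefully navigates the complexities of semantic segmentation. The encoder compresses spatial information into increasingly abstract features, the decoder expands it back toward full resolution, and skip connections pass high-resolution encoder features directly to the matching decoder stage, revealing the fine details of the segmented objects.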

This waltz between the encoder and decoder is particularly captivating in medical image segmentation, where U-Net has shown remarkable performance in delineating structures like tumors, blood vessels, and organs. U-Net gracefully pairs spatial compression and expansion, creating detailed and precise segmentations.
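The original U-Net uses unpadded 3x3 convolutions, so every convolution trims a pixel from each border and the larger encoder maps must be cropped before they are concatenated through the skip connections. This short trace of the spatial sizes, assuming the four-level layout described in the original paper, reproduces its 572x572 input and 388x388 output tiles. Many modern implementations pad their convolutions instead, so input and output sizes match.

```python
def unet_sizes(input_size=572, depth=4):
    """Trace the spatial size through the original (unpadded) U-Net.

    Returns (output size, crop per border applied to each skip connection).
    """
    size, skips, crops = input_size, [], []
    for _ in range(depth):                 # contracting path (encoder)
        size -= 4                          # two unpadded 3x3 convolutions: -2 pixels each
        skips.append(size)                 # saved for the skip connection
        assert size % 2 == 0, "input size must keep every pooled map even"
        size //= 2                         # 2x2 max pooling, stride 2
    size -= 4                              # two 3x3 convolutions at the bottleneck
    for skip in reversed(skips):           # expanding path (decoder)
        size *= 2                          # 2x2 up-convolution doubles the size
        crops.append((skip - size) // 2)   # encoder map is larger: crop it on each border
        size -= 4                          # two 3x3 convolutions after concatenation
    return size, crops

print(unet_sizes())  # (388, [4, 16, 40, 88])
```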

The DeepLab Concerto: Harmonizing Global and Local Information

DeepLab is a conductor of global and local information in deep learning-based segmentation. It leverages dilated (atrous) convolutions, which spread a kernel’s taps apart to enlarge the receptive field without further downsampling the feature maps or adding parameters. This strikes a balance between capturing global context and preserving local details.

With DeepLab, deep learning models produce exquisite segmentations akin to a perfectly orchestrated harmony, combining context and detail. In applications like semantic image segmentation and scene parsing, DeepLab has enchanted the computer vision community, demonstrating its brilliance in synthesizing information across different scales.
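Dilation is easiest to see in one dimension. With rate r, a 3-tap kernel reads samples r apart, so it covers 2r + 1 inputs with the same three weights. Stacking stride-1 layers with rates 1, 2, and 4 grows the receptive field to 15 samples while every output keeps the input’s length. The doubling rate schedule is an illustrative assumption (DeepLab’s atrous spatial pyramid pooling instead applies several rates in parallel), and the NumPy code favors clarity over speed.

```python
import numpy as np

def dilated_conv1d(x, kernel, rate):
    """'Same'-padded 1D atrous (dilated) cross-correlation with stride 1."""
    k = len(kernel)
    span = (k - 1) * rate                               # distance from first to last tap
    padded = np.pad(x, (span // 2, span - span // 2))   # keeps output length == input length
    return np.array([sum(kernel[j] * padded[i + j * rate] for j in range(k))
                     for i in range(len(x))])

def receptive_field(kernel_size, rates):
    """Receptive field of stacked stride-1 dilated convolutions."""
    return 1 + sum((kernel_size - 1) * r for r in rates)

signal = np.zeros(31)
signal[15] = 1.0                                         # a single "pixel" impulse
out = signal
for rate in (1, 2, 4):
    out = dilated_conv1d(out, [1.0, 1.0, 1.0], rate)     # three 3-tap layers, no pooling
print(len(out), np.count_nonzero(out), receptive_field(3, [1, 2, 4]))  # 31 15 15
```

The impulse spreads over exactly as many samples as the receptive-field formula predicts, yet no resolution was lost along the way.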

Deep Learning and Large Datasets

Deep learning models thrive on extensive datasets, and the availability of vast labeled datasets has been instrumental in nurturing the capabilities of neural networks. From ImageNet to COCO, these datasets have acted as grand stages, allowing deep learning models to showcase their prowess.

However, the insatiable hunger for labeled data poses challenges in domains with limited or scarce data. Unlike classic snakes, which need no training data at all (only a reasonable initialization and a few tuned weights), deep learning models demand large datasets to perform their symphony effectively.

Active Contour Models and Deep Learning Unite

In the previous sections, we marveled at the elegance of active contour models (snakes) and the symphony of deep learning-based segmentation techniques. Now, we witness a harmonious duet: a fusion of their strengths that creates a more robust and versatile performance in image segmentation and boundary detection.

The Power of Fusion: Combining Snakes and CNNs

Active contour models and deep learning techniques complement each other, each compensating for the other’s limitations. Fusion techniques leverage the strengths of both approaches, enhancing their performance and expanding their applications to a broader range of scenarios.

Hybrid Approaches: Fusion

One common fusion technique involves employing active contour models to refine the segmentation results obtained from deep learning models. The deep learning model takes the lead, producing an initial segmentation. Then, the active contour model follows, fine-tuning the boundaries and correcting any inaccuracies.

This fusion ballet is particularly useful when dealing with objects with indistinct boundaries. The deep learning model captures global context, while the active contour model focuses on local details, resulting in more precise segmentations. This duet combines the strengths of both approaches, delivering high-quality performance.
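The hand-off between the two stages can be sketched as follows. A hypothetical network output (simulated here as a probability map) is thresholded, and rays cast from the foreground centroid turn the mask into an ordered list of points that can then initialize a snake. This ray-casting step assumes the object is star-shaped around its centroid; general shapes are better served by a contour tracer such as OpenCV’s `cv2.findContours` or scikit-image’s `skimage.measure.find_contours`. The snake refinement itself is omitted.

```python
import numpy as np

def mask_to_initial_contour(prob, n_points=64, threshold=0.5, step=0.5):
    """Turn a foreground-probability map into ordered (row, col) snake points.

    Keeps the farthest foreground sample on each ray from the centroid, so it
    assumes the object is star-shaped around that centroid.
    """
    mask = prob >= threshold
    rows, cols = np.nonzero(mask)
    r0, c0 = rows.mean(), cols.mean()
    radii = np.arange(0.0, np.hypot(*prob.shape), step)
    points = []
    for angle in np.linspace(0.0, 2.0 * np.pi, n_points, endpoint=False):
        rr = np.round(r0 + radii * np.sin(angle)).astype(int)
        cc = np.round(c0 + radii * np.cos(angle)).astype(int)
        valid = (rr >= 0) & (rr < prob.shape[0]) & (cc >= 0) & (cc < prob.shape[1])
        hit = np.zeros(len(radii), dtype=bool)
        hit[valid] = mask[rr[valid], cc[valid]]
        reach = radii[hit].max()                  # farthest foreground sample on this ray
        points.append((r0 + reach * np.sin(angle), c0 + reach * np.cos(angle)))
    return np.array(points)

# Stand-in for a CNN output: high probability inside a disk, low outside.
rr, cc = np.mgrid[0:80, 0:80]
prob = np.where((rr - 40) ** 2 + (cc - 40) ** 2 <= 15 ** 2, 0.9, 0.1)
init = mask_to_initial_contour(prob)
print(init.shape)  # (64, 2)
```

The resulting points hug the network’s boundary to within about a pixel, which is exactly the kind of close starting contour a snake needs.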

Transfer Learning

Transfer learning adds another dimension to this fusion of techniques. It allows deep learning models pre-trained on large datasets to be fine-tuned on smaller, domain-specific datasets.

For instance, a CNN trained on a vast dataset of natural images can be transferred and adapted to medical image segmentation. This process leverages the pre-learned features, saving time and computational resources, while the active contour model refines the segmentation for the specific medical domain. The outcome showcases the versatility of these fusion techniques.

Data Augmentation

Data augmentation injects diversity into the training data by transforming it (for example, with flips, rotations, scaling, and brightness changes), exposing the deep learning model to a broader range of object variations so that it generalizes better. Better initial segmentations from the network, in turn, give the active contour model a stronger starting point, making the fused pipeline more robust and accurate.
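A minimal NumPy sketch of paired augmentation for segmentation: rotations and flips are applied to the image and its label mask together, so the labels stay aligned, while brightness scaling touches only the image. The helper name and the 0.8 to 1.2 brightness range are illustrative.

```python
import numpy as np

def augment_pair(image, mask, rng):
    """Random paired augmentation: geometry to both arrays, photometry to the image only."""
    k = int(rng.integers(4))
    image, mask = np.rot90(image, k), np.rot90(mask, k)        # same 90-degree rotation
    if rng.random() < 0.5:
        image, mask = np.fliplr(image), np.fliplr(mask)        # same horizontal flip
    image = image * rng.uniform(0.8, 1.2)                      # brightness change: image only
    return image, mask

rng = np.random.default_rng(0)
image = np.zeros((32, 48))
image[5:15, 10:30] = 0.7                                       # an off-center rectangular object
mask = image > 0
pairs = [augment_pair(image, mask, rng) for _ in range(8)]
print([aug_img.shape for aug_img, _ in pairs])
```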

Beyond Image Segmentation

The relationship between active contour models and deep learning extends beyond image segmentation, showcasing their versatility in various computer vision tasks. Object tracking, semantic image understanding, and even generative tasks like image-to-image translation can be enriched by the fusion of these techniques.

For instance, combining active contour models with Generative Adversarial Networks (GANs) could open up new possibilities for image synthesis with precise object boundaries, further blurring the line between real and synthetic images.

Wrapping Up…

As we conclude our exploration, we celebrate the versatility and elegance of both active contour models and deep learning approaches. The efficiency and real-time performance of active contour models complement deep learning’s ability to learn intricate patterns and features.

Image segmentation and boundary detection will keep evolving with new techniques and will continue to captivate researchers and practitioners, inspiring breakthroughs and enriching the visual understanding of our ever-changing world. We eagerly await the next act in the enchanting realm of computer vision.