Use non-Comet optimizers

Comet provides its own hyperparameter optimizer, which is designed for experiment management and supports several search algorithms, including random, grid, and Bayesian (`bayes`) search.

Alternatively, you can use your own optimizer or any third-party one, and log the important optimization data (sweep ID, objective metric and its value, and the trial's parameters) to Comet with `Experiment.log_optimization`:

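```python
import comet_ml


def trial(learning_rate, n_layers):
    experiment = comet_ml.Experiment(...)  # your usual Experiment arguments

    # ... train your model with these hyperparameters and compute `loss` ...

    experiment.log_optimization(
        optimization_id=OPTIMIZATION_ID,  # Shared by all trials of the same sweep; use a new ID per sweep
        metric_name="loss",
        metric_value=loss,
        parameters={"learning_rate": learning_rate, "n_layers": n_layers},
        objective="minimize",
    )
```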
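The snippet above expects `OPTIMIZATION_ID` to be defined once per sweep and reused by every trial. Here is a minimal stdlib-only sketch of that pattern. It assumes a UUID is an acceptable sweep ID (any string that identifies the sweep should do). `fake_train` and `log_optimization_kwargs` are hypothetical names. The code only builds the keyword arguments a trial would pass to `log_optimization`; it never contacts Comet:

```python
import uuid

# Assumption: any string identifying the sweep works as the ID; a UUID is one
# convenient way to get a value that differs between sweeps.
# Generate it once, outside the trial loop, so every trial shares it.
OPTIMIZATION_ID = uuid.uuid4().hex


def fake_train(learning_rate, n_layers):
    """Hypothetical stand-in for real training; returns a made-up loss."""
    return abs(learning_rate - 0.05) + 0.01 * n_layers


def log_optimization_kwargs(learning_rate, n_layers):
    """Build the keyword arguments one trial would pass to log_optimization."""
    loss = fake_train(learning_rate, n_layers)
    return {
        "optimization_id": OPTIMIZATION_ID,  # same value for every trial
        "metric_name": "loss",
        "metric_value": loss,
        "parameters": {"learning_rate": learning_rate, "n_layers": n_layers},
        "objective": "minimize",
    }


# A tiny grid of four trials. In real code, each trial would create its own
# Experiment and call experiment.log_optimization(**kwargs).
trials = [
    log_optimization_kwargs(lr, n) for lr in (0.01, 0.1) for n in (2, 4)
]
```

Because the ID is created at module level rather than inside the trial function, every trial in this process reports the same sweep. If trials run in separate processes, pass the ID to each of them, for example through an environment variable or a command-line argument.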

Note: The `Experiment.log_optimization` method is available in version 3.33.10 and later of the Comet Python SDK.

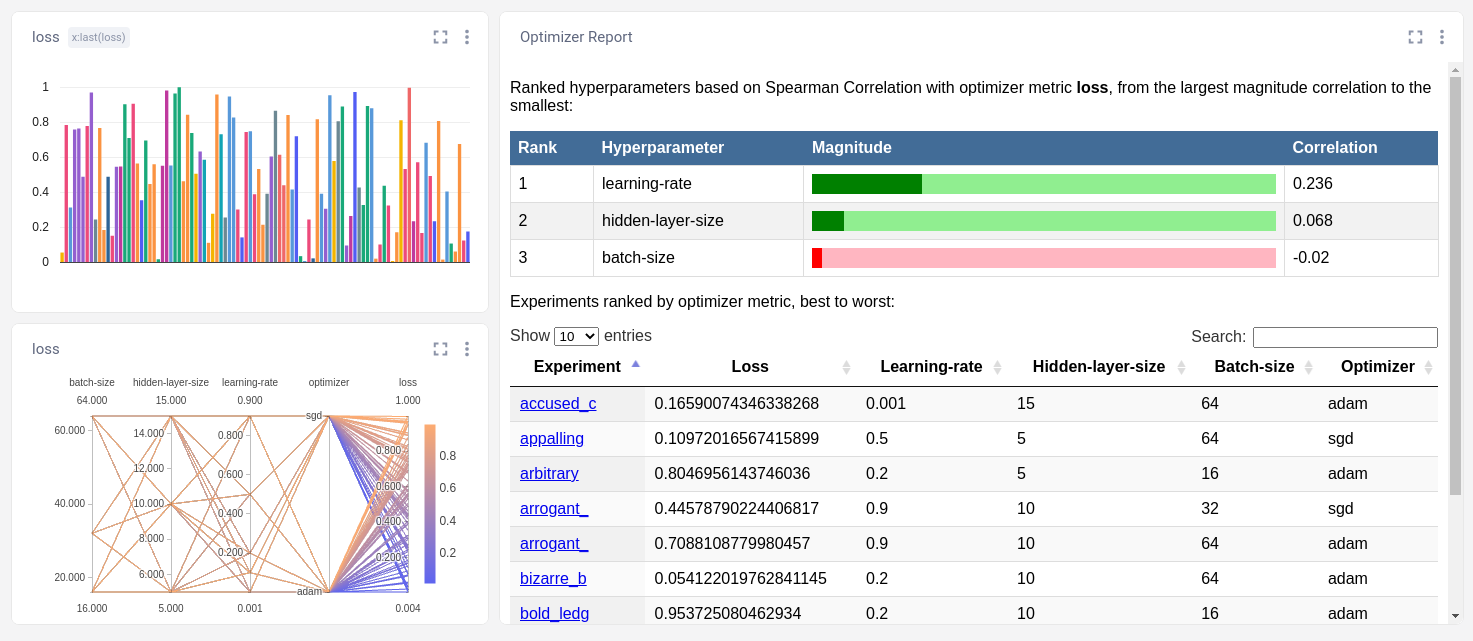

By using Comet's optimizer or logging optimization data with Experiment.log_optimization, you gain access to Comet's powerful built-in tools. These tools include the Optimizer Report panel and Parallel Coordinate Report:

Below is an example of a simple do-it-yourself (DIY) search algorithm. It has many limitations, including:

- When selecting combinations randomly, all combinations are held in memory at once, which can exhaust memory and crash your machine if the search space is large.

- If a training run doesn't complete (for example, it crashes), there is no mechanism to retry that combination.

- It runs only one trial for each combination.

- It can't be run in a distributed manner; that would require a central server to hand out combinations.

- It supports only random and grid search, not a more sophisticated Bayesian search.

You can substitute a more robust hyperparameter search for this simple version; Comet's Optimizer addresses all of the issues listed above.
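The retry limitation can be reduced by wrapping each training call in a retry loop. This is a minimal sketch that assumes your training function signals failure by raising an exception. `run_with_retries` is a hypothetical helper, not part of the Comet SDK. It cannot recover from a crash that kills the Python process itself, such as an out-of-memory kill:

```python
def run_with_retries(train_fn, parameters, max_attempts=3):
    """Call train_fn(**parameters), retrying when it raises an exception.

    Returns (metric_value, attempts_used). If every attempt fails, the
    exception from the last attempt is re-raised.
    """
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return train_fn(**parameters), attempt
        except Exception:
            # Simplification: catching Exception broadly. In practice, catch
            # only the errors your training code is expected to raise.
            if attempt == max_attempts:
                raise
```

In the DIY loop, `metric_value = train(**parameters)` could become `metric_value, attempts = run_with_retries(train, parameters)`. Logging `attempts` alongside the metric would make flaky combinations visible.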

```python
# A DIY Hyperparameter search

from comet_ml import Experiment

import itertools
import json
import os
import random


def get_parameters(params, shuffle=True):
    """
    Yield a dictionary of parameter settings for each combination
    of the possible values.

    Args:
        params: dict mapping each parameter name to a list of possible values
        shuffle: bool; if True, yield the combinations in random order
            (random search); otherwise, in a fixed order (grid search)

    Yields a dict of key/value pairs for each combination.
    """
    if shuffle:
        # Random search: build every combination up front, then shuffle.
        # This holds the entire search space in memory.
        combinations = [
            dict(zip(params, values))
            for values in itertools.product(*params.values())
        ]
        random.shuffle(combinations)
        yield from combinations
    else:
        # Grid search: generate combinations lazily, in a fixed order.
        for values in itertools.product(*params.values()):
            yield dict(zip(params, values))


hyperparameters = {
    "learning-rate": [0.001, 0.1, 0.2, 0.5, 0.9],
    "batch-size": [16, 32, 64],
    "hidden-layer-size": [5, 10, 15],
    "optimizer": ["adam", "sgd"],
}


def train(**hyperparams):
    # A dummy training function that returns a random "loss"
    return random.random()


count = 0
metric_name = "loss"
objective = "minimize"

for parameters in get_parameters(hyperparameters):
    count += 1
    experiment = Experiment(project_name="diy-search")
    experiment.log_parameters(parameters)
    metric_value = train(**parameters)
    experiment.log_metric(metric_name, metric_value, step=0)
    # Optimizer metadata, logged as "other" values on each experiment
    experiment.log_other("optimizer_metric", metric_name)
    experiment.log_other("optimizer_metric_value", metric_value)
    experiment.log_other("optimizer_version", "diy-1.0")
    experiment.log_other("optimizer_process", os.getpid())
    experiment.log_other("optimizer_count", count)
    experiment.log_other("optimizer_objective", objective)
    experiment.log_other("optimizer_parameters", json.dumps(parameters))
    experiment.log_other("optimizer_name", "diy-optimizer-001")
    # Finish this trial's experiment before starting the next one
    experiment.end()
```
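The memory limitation comes from `get_parameters(..., shuffle=True)` building the full list of combinations before shuffling. One way around it, sketched here under the assumption that you only need a random subset of `k` distinct combinations, is to sample flat indices and decode each index into a combination (mixed-radix decoding, in the same order as `itertools.product`). `combination_at` and `sample_parameters` are hypothetical helpers, and `math.prod` requires Python 3.8 or later:

```python
import math
import random


def combination_at(params, index):
    """Decode a flat index into one combination (mixed-radix decoding).

    The last key varies fastest, matching itertools.product order.
    """
    combo = {}
    for key in reversed(list(params)):
        values = params[key]
        index, position = divmod(index, len(values))
        combo[key] = values[position]
    # Restore the original key order for readability.
    return {key: combo[key] for key in params}


def sample_parameters(params, k, seed=None):
    """Yield k distinct random combinations without building the full product."""
    total = math.prod(len(values) for values in params.values())
    rng = random.Random(seed)
    # random.sample accepts a range, so for large search spaces only the
    # chosen indices are stored, not every combination.
    for index in rng.sample(range(total), k):
        yield combination_at(params, index)
```

For example, `for parameters in sample_parameters(hyperparameters, k=20):` could replace the `get_parameters` loop. `k` must not exceed the total number of combinations (90 for the `hyperparameters` dict above); otherwise `random.sample` raises `ValueError`.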