Prompt table

LLM Projects have been designed specifically with prompt engineering workflows in mind. They are under active development, so feel free to reach out by email at product@comet.com or raise an issue on the comet-llm GitHub repository with questions or suggestions.

Using LLM Projects

Given that LLM Projects have been designed with prompt engineering at their core, we have focused the functionality not only on analyzing prompt text and responses but also on prompt templates and variables. You can also log metadata to a prompt, which is particularly useful for keeping track of token usage.
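To make the relationship between a prompt, its template, its variables and its metadata concrete, here is a minimal sketch that assembles one prompt record. The `build_prompt_record` helper, the `{name}` placeholder syntax (Python's `str.format`) and the metadata keys (`model`, `prompt_tokens`, `completion_tokens`, `total_tokens`) are hypothetical choices for illustration, not a format Comet requires.

```python
from typing import Any, Dict


def build_prompt_record(
    template: str,
    variables: Dict[str, Any],
    output: str,
    prompt_tokens: int,
    completion_tokens: int,
    model: str,
) -> Dict[str, Any]:
    """Hypothetical helper: bundle a rendered prompt with its template,
    variables and token-usage metadata."""
    # Render the final prompt text by substituting the variables into the template.
    prompt = template.format(**variables)
    return {
        "prompt": prompt,
        "prompt_template": template,
        "prompt_template_variables": variables,
        "output": output,
        # Free-form metadata; token counts make it easy to track usage per prompt.
        "metadata": {
            "model": model,
            "prompt_tokens": prompt_tokens,
            "completion_tokens": completion_tokens,
            "total_tokens": prompt_tokens + completion_tokens,
        },
    }


record = build_prompt_record(
    template="Translate to {language}: {text}",
    variables={"language": "French", "text": "Hello"},
    output="Bonjour",
    prompt_tokens=12,        # placeholder token counts
    completion_tokens=3,
    model="example-model",   # placeholder model name
)
print(record["prompt"])  # Translate to French: Hello
```

The field names are meant to mirror the keyword arguments of the `comet_llm` SDK's `log_prompt` function as we understand them (`prompt`, `output`, `prompt_template`, `prompt_template_variables`, `metadata`); check the SDK reference for your installed version before passing such a record with `comet_llm.log_prompt(**record)`.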

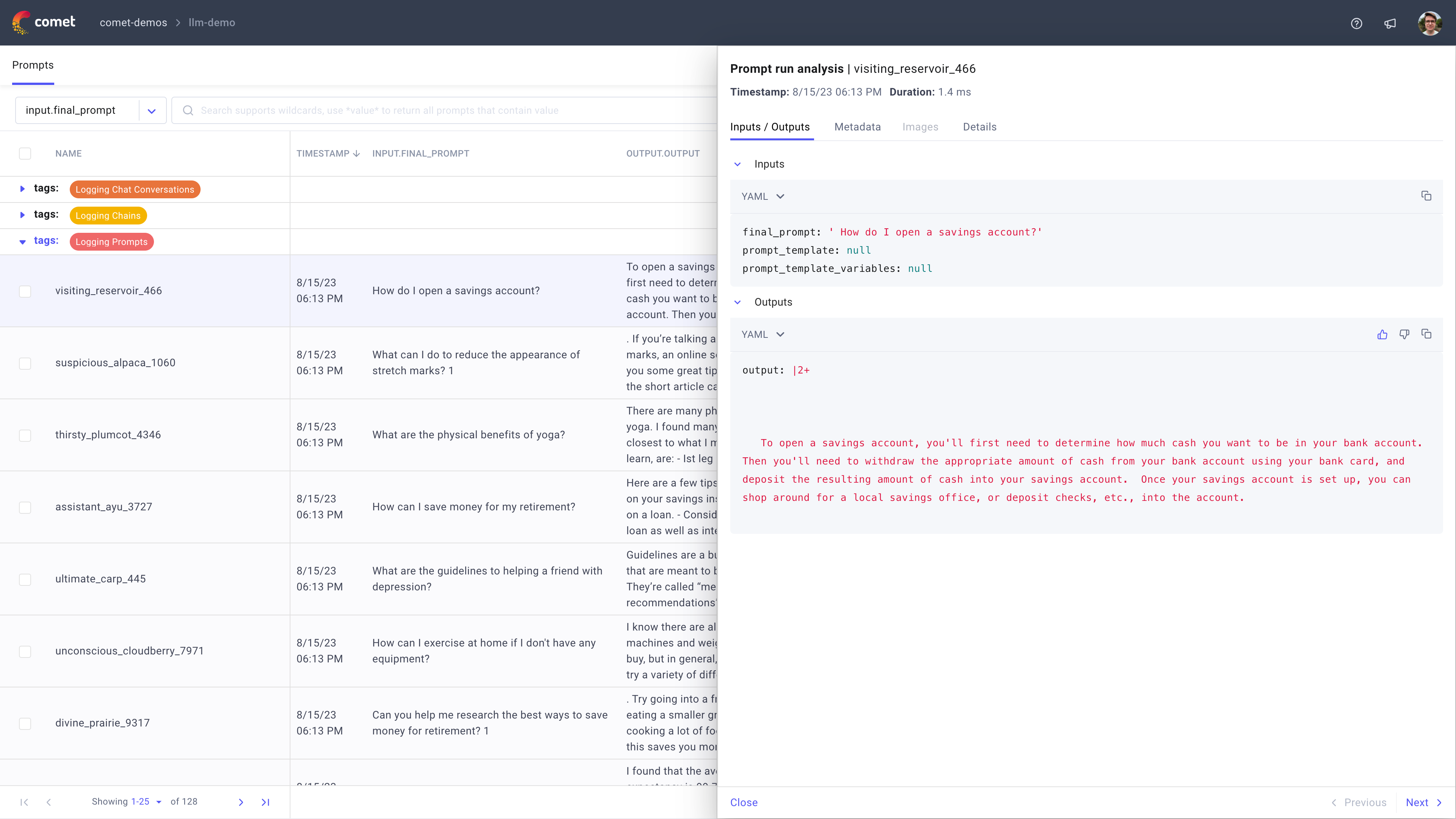

All the information logged to a prompt (including metadata, response, prompt template and variables) can be viewed in the table sidebar by clicking on a specific row.

You can view a demo project here.

Search prompts

To search for prompts in an LLM project, use the search box located above the table. First, select the column you want to search on. You can search the input prompt, the prompt template and variables, or any of the response objects.

After selecting the column, enter your search query. By default, Comet performs an approximate search and returns all prompts that contain the word or phrase you searched for.

In addition to approximate search, Comet also supports:

- Exact search: Wrapping your phrase in double quotes (`"`) will return only the prompts that match this phrase exactly.
- Wildcards: You can use the wildcard `*` to create more complex search patterns.

The search terms you enter will be highlighted both in the main table and in the sidebar of the table.
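The difference between the three search modes can be illustrated with a small local filter. This is a hypothetical approximation written for illustration only, not Comet's search implementation; details such as case sensitivity are assumptions (here approximate and wildcard matching ignore case, while exact matching does not).

```python
import re


def matches(query: str, text: str) -> bool:
    """Hypothetical local approximation of approximate, exact and wildcard search."""
    if len(query) >= 2 and query.startswith('"') and query.endswith('"'):
        # Exact search: the quoted phrase must appear verbatim (case-sensitive).
        return query[1:-1] in text
    if "*" in query:
        # Wildcard search: each '*' matches any run of characters; the rest is literal.
        pattern = ".*".join(re.escape(part) for part in query.split("*"))
        return re.search(pattern, text, flags=re.IGNORECASE | re.DOTALL) is not None
    # Approximate search: case-insensitive containment of the word or phrase.
    return query.lower() in text.lower()


prompts = [
    "Summarize the quarterly report",
    "Translate this sentence to French",
    "summarize: meeting notes",
]
print([p for p in prompts if matches('"Summarize the"', p)])
```

With these assumptions, `summarize` matches both summarization prompts, `"Summarize the"` matches only the first, and `trans*french` matches only the translation prompt.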

Configure the prompt table

You can update the columns displayed in the table using the Columns button. This allows you to add, remove and reorder the columns in the table.

You can also use the Group by button to organize your prompts based on any of the metadata fields logged to your prompts or chains. For example, if you logged a unique identifier for each prompt template you use, you could group by this identifier to better understand the performance of each template.

Note

For each group, you will see the average of the User Feedback and Duration columns, allowing you to easily compare groups with one another.

Prompt Sidebar

The prompt sidebar displays all the information logged to a prompt or chain.

The sidebar has 4 sections:

- Inputs / Outputs: Displays all the inputs and outputs logged to the prompt, chain or chain span in JSON or YAML format. You can add a feedback score using the thumbs-up or thumbs-down icons in the Outputs section.

- Metadata: Displays all the logged metadata fields. These can be used to track the model used, the number of tokens or any other information you want to record.

- Images: Displays images for this prompt, chain or chain span. Currently, images are only logged through the OpenAI integration. You can find an example project here.

- Details: Displays information logged by Comet, such as the prompt name, timestamps and the user who logged the prompt or chain.

Note

Pro tip: While the sidebar is open, you can quickly navigate between prompts either by clicking on a different row in the table or by clicking Previous / Next.

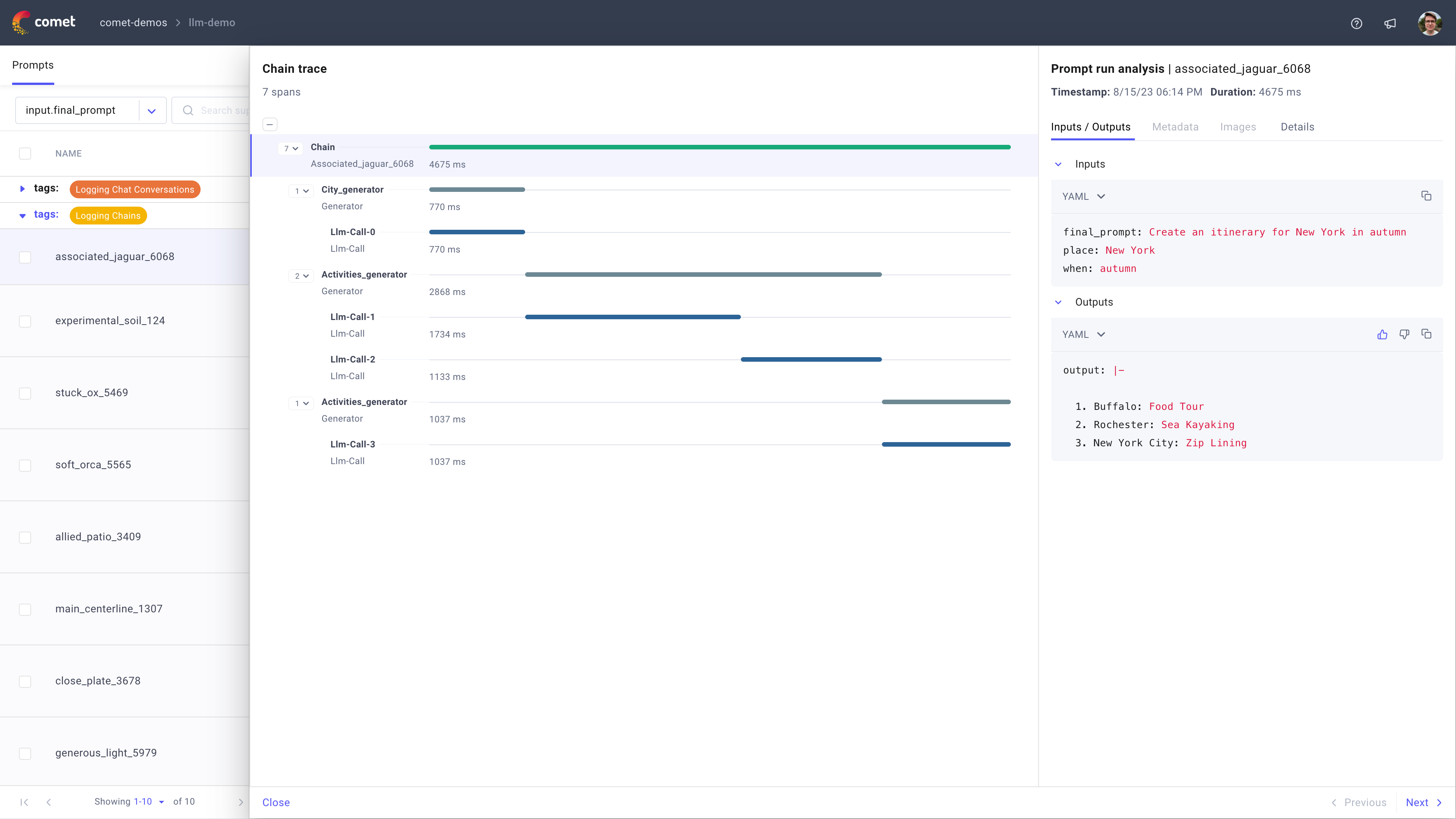

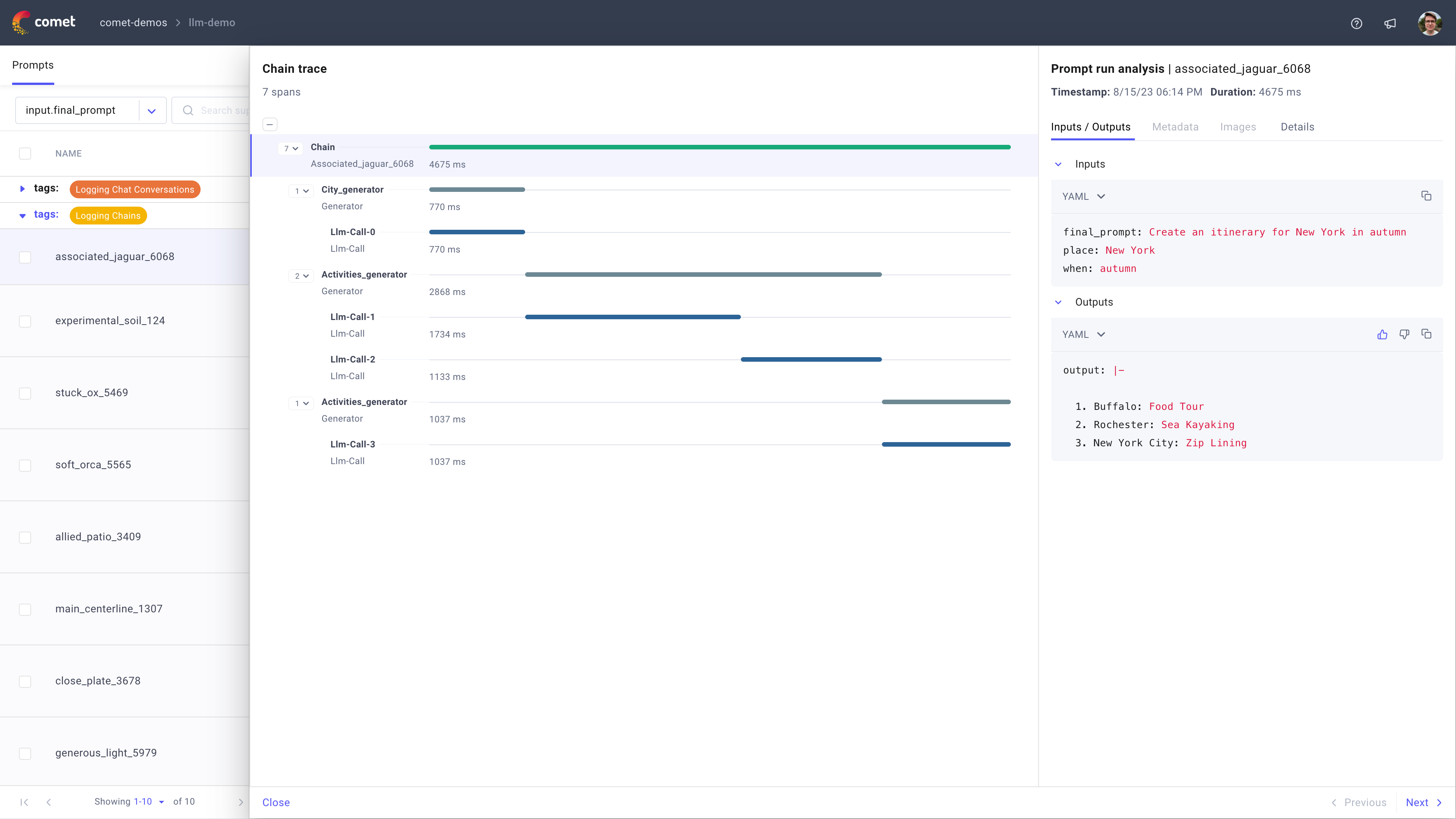

Chain sidebar

In addition to the information described in the section above, the sidebar for a chain displays an interactive chain viewer.

The chain viewer shows how long the chain took to execute, every individual step of the pipeline, and the inputs and outputs of each step. When you select a span, the sidebar on the right updates to display the information for that span, helping you understand exactly what happened during the execution of the chain.
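A chain can be thought of as a tree of spans, each with its own timing, inputs and outputs. The sketch below models that structure with a hypothetical `Span` dataclass (not a Comet SDK class, and times are assumed to be in seconds) and builds an indented outline with per-span durations, roughly what the chain viewer presents visually; the hypothetical `find_span` helper mimics selecting a span to inspect its inputs and outputs.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List, Optional


@dataclass
class Span:
    """Hypothetical model of one step in a chain (not a Comet SDK class)."""
    name: str
    start: float  # start time in seconds (assumed unit)
    end: float    # end time in seconds
    inputs: Dict[str, Any] = field(default_factory=dict)
    outputs: Dict[str, Any] = field(default_factory=dict)
    children: List["Span"] = field(default_factory=list)

    @property
    def duration(self) -> float:
        return self.end - self.start


def outline(span: Span, depth: int = 0) -> List[str]:
    """Return one indented line per span, depth-first, with its duration."""
    lines = [f"{'  ' * depth}{span.name} ({span.duration:.2f}s)"]
    for child in span.children:
        lines.extend(outline(child, depth + 1))
    return lines


def find_span(span: Span, name: str) -> Optional[Span]:
    """Depth-first search for the first span with the given name."""
    if span.name == name:
        return span
    for child in span.children:
        found = find_span(child, name)
        if found is not None:
            return found
    return None


chain = Span(
    "chain", 0.0, 2.5,
    inputs={"question": "example question"},
    outputs={"answer": "example answer"},
    children=[
        Span("retrieve", 0.0, 0.5, outputs={"documents": ["doc-1"]}),
        Span("llm_call", 0.5, 2.5, outputs={"text": "example answer"}),
    ],
)
print("\n".join(outline(chain)))
```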