Integrate with torchtune¶

torchtune is a PyTorch-native library for easily authoring, fine-tuning and experimenting with LLMs.

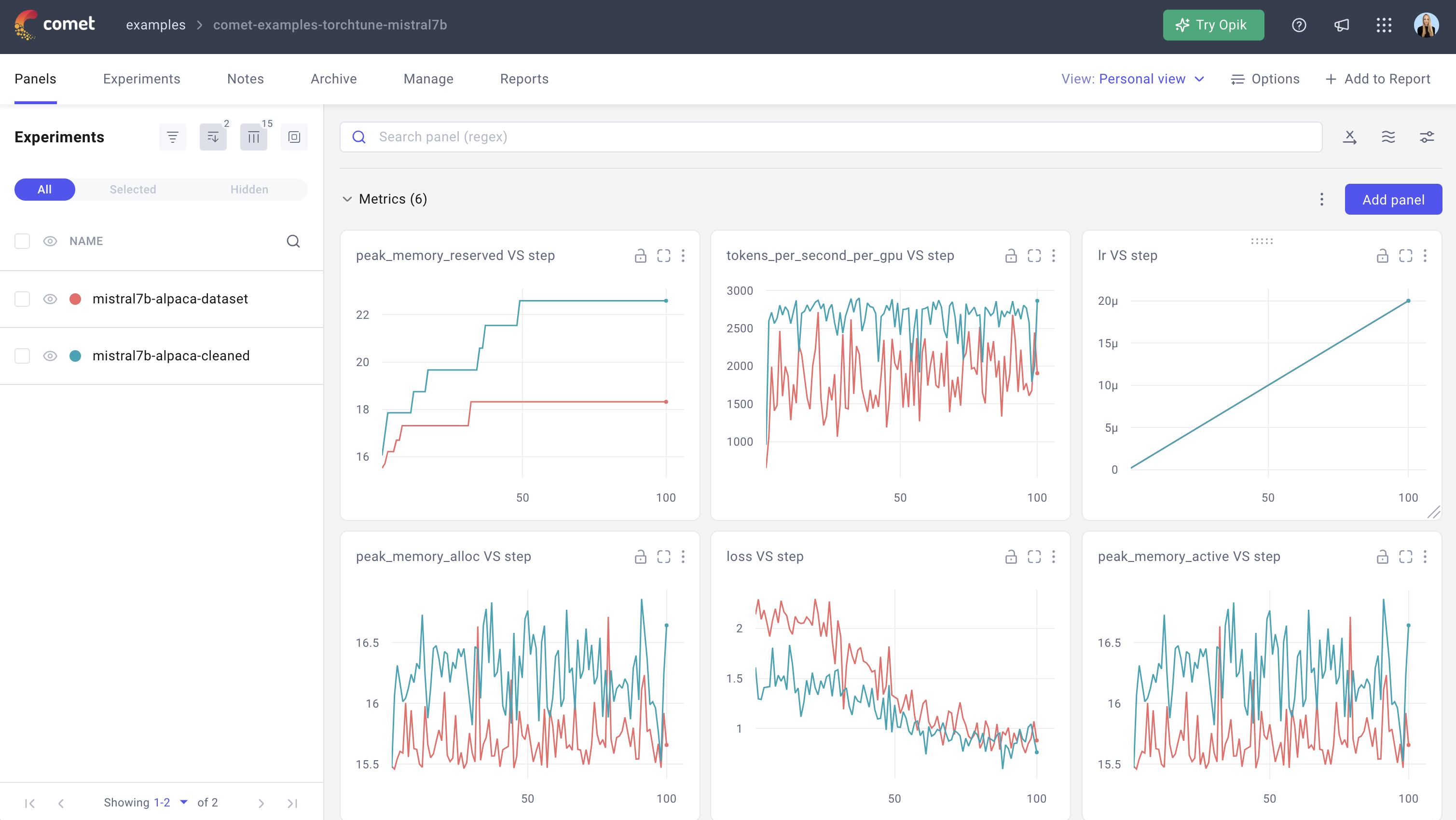

Instrument your runs with Comet to start managing experiments, creating dataset versions, and tracking hyperparameters for faster and easier reproducibility and collaboration.

| Comet SDK | Minimum SDK version | Minimum torchtune version |
|---|---|---|
| Python-SDK | 3.45.0 | master |

Start logging¶

Enable the Comet Logger in your recipe's config:

    metric_logger:
      _component_: torchtune.training.metric_logging.CometLogger
      project: <your-project-name>
      experiment_name: <my-experiment-name>
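
In this config, `_component_` holds a dotted import path and the remaining keys become keyword arguments. The sketch below mimics that idea using only the standard library. `instantiate_component` is a hypothetical helper written for illustration, not torchtune's actual config loader, and `datetime.timedelta` is a stand-in target so the example runs without torchtune or Comet installed.

```python
import importlib
from typing import Any, Dict


def instantiate_component(config: Dict[str, Any]) -> Any:
    """Build an object from a dict that carries a dotted `_component_` path.

    Hypothetical helper for illustration only; torchtune ships its own
    config utilities for this job.
    """
    kwargs = dict(config)  # copy so the caller's dict is not mutated
    module_path, _, attr_name = kwargs.pop("_component_").rpartition(".")
    component = getattr(importlib.import_module(module_path), attr_name)
    # Every remaining key is forwarded as a keyword argument.
    return component(**kwargs)


# Stand-in target from the standard library. In the recipe config the path
# points at the Comet logger class, and the keyword arguments are
# `project` and `experiment_name`.
example = instantiate_component(
    {"_component_": "datetime.timedelta", "minutes": 90}
)
print(example.total_seconds())
```

The practical consequence is that any key placed under `metric_logger` has to be an argument the logger class accepts.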

Tip

Find a full list of torchtune recipe configs here.

Log automatically¶

When you use the CometLogger, Comet automatically logs the following items by default, with no additional configuration:

- Training metrics like loss and tokens_per_second_per_gpu.
- All hyperparameters like lora_rank, lora_alpha, and anything else included in the config file.
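
To picture what "anything else included in the config file" covers, the sketch below flattens a nested config into one name per value. The `flatten_config` helper and the `.` separator are illustrative assumptions; the names Comet shows for nested parameters may differ.

```python
from typing import Any, Dict


def flatten_config(config: Dict[str, Any], sep: str = ".") -> Dict[str, Any]:
    """Flatten nested dicts into a single level with joined key names.

    Hypothetical helper; the separator is an arbitrary choice for this sketch.
    """
    flat: Dict[str, Any] = {}
    for key, value in config.items():
        if isinstance(value, dict):
            # Recurse into the sub-section and prefix its keys.
            for sub_key, sub_value in flatten_config(value, sep).items():
                flat[f"{key}{sep}{sub_key}"] = sub_value
        else:
            flat[key] = value
    return flat


# A small excerpt of a recipe config, written as a plain dict.
config = {
    "model": {"lora_rank": 64, "lora_alpha": 16},
    "optimizer": {"lr": 2e-5},
    "batch_size": 4,
}
for name, value in flatten_config(config).items():
    print(f"{name} = {value}")
```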

End-to-end example¶

The following is a basic example of using Comet with torchtune to fine-tune Mistral7B.

If you can't wait, check out the results of this example torchtune experiment for a preview of what's to come.

Clone the repo¶

git clone https://github.com/pytorch/torchtune/

Install dependencies¶

python -m pip install "./torchtune[dev]" comet_ml

Log in to Comet¶

comet login

Download the model¶

tune download mistralai/Mistral-7B-v0.1 --output-dir ./Mistral-7B-v0.1/

Tip

Set your environment variable HF_TOKEN or pass in --hf-token to the command in order to validate your access. You can find your token at https://huggingface.co/settings/tokens. For more information on how to set your Hugging Face token, see here.
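
A quick pre-flight check can save a failed download. The sketch below only inspects the environment; `hf_token_is_set` is a hypothetical helper name, and the code does not contact Hugging Face or validate the token itself.

```python
import os
from typing import Mapping, Optional


def hf_token_is_set(env: Optional[Mapping[str, str]] = None) -> bool:
    """Return True if HF_TOKEN is present and not blank.

    Hypothetical helper; `env` defaults to the current process environment.
    """
    env = os.environ if env is None else env
    return bool(env.get("HF_TOKEN", "").strip())


if not hf_token_is_set():
    print("HF_TOKEN is not set; pass --hf-token to the download command instead.")
```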

Write the torchtune config with Comet¶

Write the following config file to mistral_comet_lora.yaml:

    tokenizer:
      _component_: torchtune.models.mistral.mistral_tokenizer
      path: ./Mistral-7B-v0.1/tokenizer.model

    # Dataset
    dataset:
      _component_: torchtune.datasets.alpaca_dataset
      train_on_input: True
    seed: null
    shuffle: True

    # Model Arguments
    model:
      _component_: torchtune.models.mistral.lora_mistral_7b
      lora_attn_modules: ["q_proj", "k_proj", "v_proj"]
      apply_lora_to_mlp: True
      apply_lora_to_output: True
      lora_rank: 64
      lora_alpha: 16

    checkpointer:
      _component_: torchtune.training.FullModelHFCheckpointer
      checkpoint_dir: ./Mistral-7B-v0.1
      checkpoint_files: [pytorch_model-00001-of-00002.bin, pytorch_model-00002-of-00002.bin]
      recipe_checkpoint: null
      output_dir: ./Mistral-7B-v0.1
      model_type: MISTRAL
    resume_from_checkpoint: False

    optimizer:
      _component_: torch.optim.AdamW
      lr: 2e-5

    lr_scheduler:
      _component_: torchtune.modules.get_cosine_schedule_with_warmup
      num_warmup_steps: 100

    loss:
      _component_: torch.nn.CrossEntropyLoss

    # Fine-tuning arguments
    batch_size: 4
    epochs: 1
    max_steps_per_epoch: 100
    gradient_accumulation_steps: 2
    compile: False

    # Training env
    device: cuda

    # Memory management
    enable_activation_checkpointing: True

    # Reduced precision
    dtype: bf16

    ############################### Enable Comet ###################################
    ################################################################################
    # Logging
    # enable logging to the built-in CometLogger
    metric_logger:
      _component_: torchtune.training.metric_logging.CometLogger
      # the Comet project to log to
      project: comet-examples-torchtune-mistral7b
      experiment_name: mistral7b-alpaca-cleaned
    ################################################################################
    ################################################################################

    output_dir: ./Mistral-7B-v0.1
    log_peak_memory_stats: True

    # Profiler (disabled)
    profiler:
      _component_: torchtune.training.setup_torch_profiler
      enabled: False
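
A few of the values in this config interact, and it can help to work them out before launching. The arithmetic below is a sketch under two assumptions that the config itself does not state: that max_steps_per_epoch counts optimizer updates (each made of gradient_accumulation_steps micro-batches), and that LoRA applies the common alpha / rank scaling to its update.

```python
# Values copied from the config above.
batch_size = 4
gradient_accumulation_steps = 2
max_steps_per_epoch = 100
epochs = 1
lora_rank = 64
lora_alpha = 16

# Samples that contribute to a single optimizer update.
effective_batch_size = batch_size * gradient_accumulation_steps

# Upper bound on samples processed, assuming one step is one optimizer update.
max_samples_seen = effective_batch_size * max_steps_per_epoch * epochs

# Scaling factor on the low-rank update in the usual LoRA formulation.
lora_scaling = lora_alpha / lora_rank

print(f"effective batch size: {effective_batch_size}")
print(f"max samples seen:     {max_samples_seen}")
print(f"LoRA scaling:         {lora_scaling}")
```

With these settings the run uses an effective batch size of 8, processes at most 800 samples, and scales the LoRA update by 0.25, so it is a short demonstration run rather than a full pass over the dataset.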

Run the example with a single GPU¶

tune run lora_finetune_single_device --config mistral_comet_lora.yaml
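
To launch several runs that differ only in a few settings, the command can be assembled programmatically. The sketch below assumes the `key=value` override syntax described in torchtune's configuration documentation. `build_tune_command` is a hypothetical helper, the experiment name `mistral7b-lora-run2` is made up for illustration, and the code only prints the command rather than executing it.

```python
import shlex
from typing import Dict, List, Optional


def build_tune_command(
    recipe: str,
    config_path: str,
    overrides: Optional[Dict[str, object]] = None,
) -> List[str]:
    """Return the argv list for a `tune run` call with optional overrides.

    Hypothetical helper; overrides are appended as `key=value` tokens.
    """
    command = ["tune", "run", recipe, "--config", config_path]
    for key, value in (overrides or {}).items():
        command.append(f"{key}={value}")
    return command


command = build_tune_command(
    "lora_finetune_single_device",
    "mistral_comet_lora.yaml",
    {"metric_logger.experiment_name": "mistral7b-lora-run2"},
)
# Print a shell-safe version of the command for copy and paste.
print(shlex.join(command))
```

Giving each run its own experiment name keeps the runs distinguishable in the Comet project without editing the YAML file.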

Try it out!¶

Try out an example of using Comet with torchtune and Mistral7B in this Colab notebook.