Model Fairness

As models are increasingly used for critical business applications, it is important to ensure that models are fair not only when they are trained but also while they run in production. Existing legislation in the USA and the proposed AI Act in Europe make tracking model fairness in production a legal requirement.

MPM (Model Production Monitoring) tracks model fairness metrics in real time, allowing data science teams to ensure their models remain fair.

Model Fairness metrics

The fairness metric that Comet tracks out of the box is called Disparate Impact. It is the only fairness metric currently codified in US regulation, and it measures whether a model's percentage of positive outcomes is similar between a reference group and a sensitive group.
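As a sketch of the arithmetic, assume Disparate Impact is taken as the ratio of positive-outcome rates, DI = P(prediction = positive | sensitive group) / P(prediction = positive | reference group). This is the common definition, but Comet's exact implementation may differ. A value of 1.0 means both groups receive positive outcomes at the same rate, and values below 1.0 mean the sensitive group receives them less often. The helpers `positive_rate` and `disparate_impact` are hypothetical and exist only for illustration.

```python
from collections.abc import Hashable, Sequence


def positive_rate(predictions: Sequence[Hashable], positive_outcome: Hashable) -> float:
    """Fraction of predictions equal to the positive outcome."""
    if not predictions:
        raise ValueError("cannot compute a rate over zero predictions")
    return sum(1 for p in predictions if p == positive_outcome) / len(predictions)


def disparate_impact(
    sensitive_preds: Sequence[Hashable],
    reference_preds: Sequence[Hashable],
    positive_outcome: Hashable,
) -> float:
    """Hypothetical helper (assumed definition): the sensitive group's
    positive rate divided by the reference group's positive rate."""
    reference_rate = positive_rate(reference_preds, positive_outcome)
    if reference_rate == 0:
        raise ValueError("reference group has no positive outcomes")
    return positive_rate(sensitive_preds, positive_outcome) / reference_rate


# 3 of 10 sensitive-group predictions are positive vs 6 of 10 for the reference group.
sensitive = ["approved"] * 3 + ["denied"] * 7
reference = ["approved"] * 6 + ["denied"] * 4
print(disparate_impact(sensitive, reference, "approved"))  # 0.5
```

Dividing by the reference rate (rather than subtracting it) keeps the metric scale-free, so the same value reads the same way whether positive outcomes are rare or common.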

Track disparate impact for your models

The Disparate Impact metric in Comet is defined for all classification models tracked in Comet and can be accessed through the Fairness tab.

Getting started

To start tracking Disparate Impact, you first need to define MPM Segments for your models. For each MPM Segment that you create, you will be able to track the disparate impact across the unique values taken by that feature. MPM Segments are often created on features that represent gender, country, or other demographic attributes. You can learn more about configuring MPM Segments here.
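To illustrate what tracking disparate impact across the unique values of a Segment feature involves, the sketch below groups predictions by the value of one feature, computes each group's positive-outcome rate, and divides it by the rate of a chosen reference value. The record layout (dicts with a hypothetical `gender` feature and a hypothetical `prediction` key) and the helper name `disparate_impact_by_segment` are assumptions for illustration, not Comet's API.

```python
from collections import defaultdict


def disparate_impact_by_segment(records, segment, reference_value, positive_outcome):
    """Hypothetical helper: for each unique value of `segment`, return that
    group's positive-outcome rate divided by the reference group's rate."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for record in records:
        group = record[segment]
        totals[group] += 1
        if record["prediction"] == positive_outcome:
            positives[group] += 1

    rates = {group: positives[group] / totals[group] for group in totals}
    if reference_value not in rates:
        raise ValueError(f"no records for reference value {reference_value!r}")
    reference_rate = rates[reference_value]
    if reference_rate == 0:
        raise ValueError("reference group has no positive outcomes")
    return {group: rate / reference_rate for group, rate in rates.items()}


records = [
    {"gender": "male", "prediction": "approved"},
    {"gender": "male", "prediction": "approved"},
    {"gender": "male", "prediction": "denied"},
    {"gender": "male", "prediction": "denied"},
    {"gender": "female", "prediction": "approved"},
    {"gender": "female", "prediction": "denied"},
    {"gender": "female", "prediction": "denied"},
    {"gender": "female", "prediction": "denied"},
]
print(disparate_impact_by_segment(records, "gender", "male", "approved"))
# {'male': 1.0, 'female': 0.5}
```

The reference group always scores 1.0 against itself, which is why it serves as the baseline.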

Configuring fairness metrics

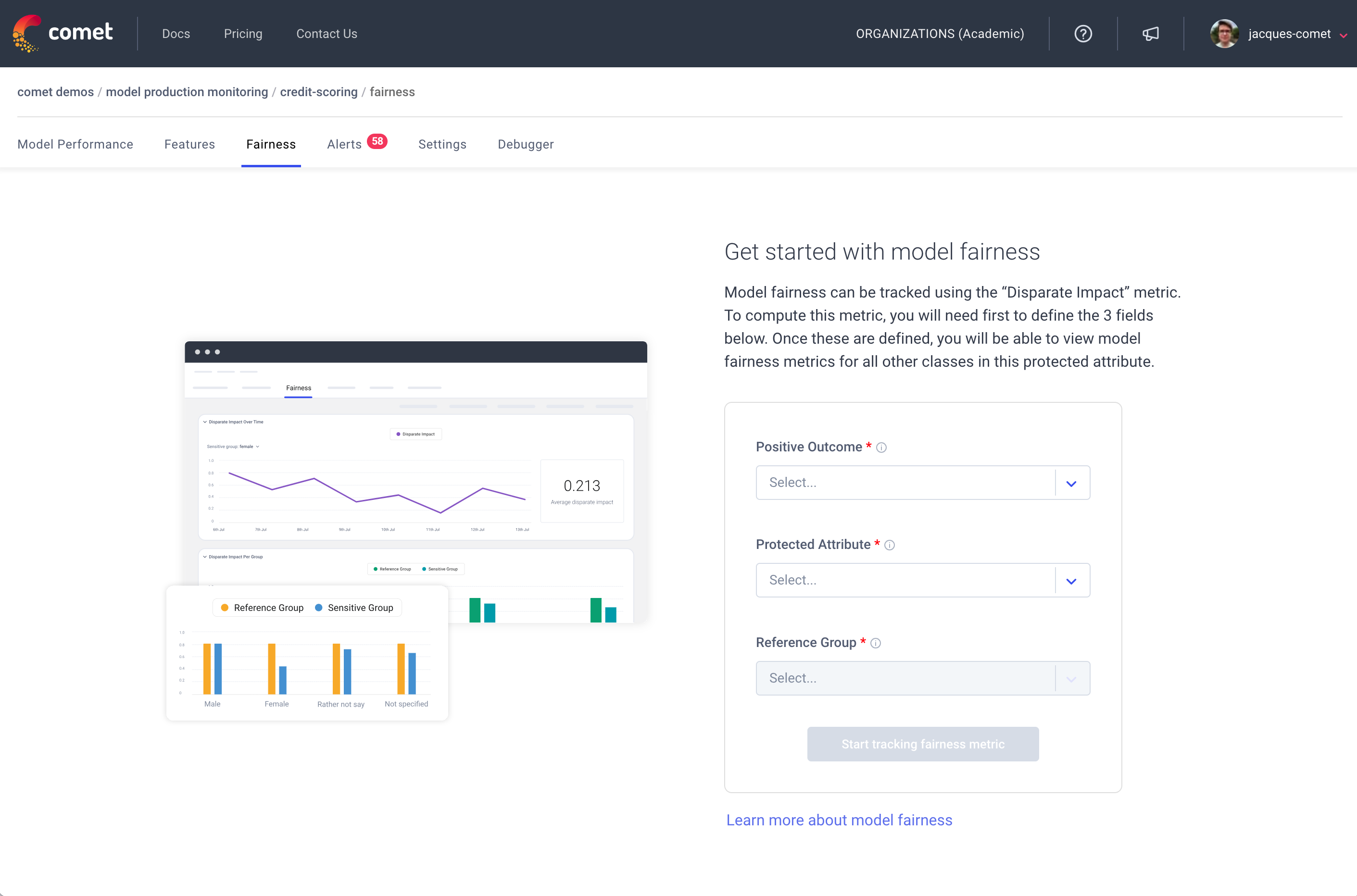

When you navigate to the Fairness tab, you will be asked to configure a few settings before the fairness metrics are displayed.

On the model fairness landing page, you will be asked to specify:

- Positive Outcome: The model prediction that corresponds to a positive outcome. The values available in this field are taken from the unique values of the `output.value` feature.
- Protected Attribute: The Segment whose groups you want to ensure are treated fairly. The values available in this field are taken from the list of Segments created for this model.
- Reference Group: The subset of users used as the baseline when computing the Disparate Impact metric. The values available in this field are taken from the unique values of the Segment selected as the Protected Attribute.
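The three settings constrain one another: each one must be chosen from values already present in the model's data, and the Reference Group depends on which Protected Attribute is selected. A minimal sketch of those constraints, assuming the model's output values and its Segments are available as plain Python collections. The `FairnessConfig` class and its `validate` method are hypothetical and not part of any Comet SDK.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FairnessConfig:
    """Hypothetical container for the three Fairness tab settings."""

    positive_outcome: str
    protected_attribute: str
    reference_group: str

    def validate(self, output_values: set[str], segments: dict[str, set[str]]) -> None:
        """Raise ValueError if a setting is not among its allowed values.

        output_values: unique values of the model's output.value feature.
        segments: maps each Segment name to the unique values it takes.
        """
        if self.positive_outcome not in output_values:
            raise ValueError(f"unknown positive outcome: {self.positive_outcome!r}")
        if self.protected_attribute not in segments:
            raise ValueError(f"no Segment named {self.protected_attribute!r}")
        # The reference group must be one of the protected attribute's own values.
        if self.reference_group not in segments[self.protected_attribute]:
            raise ValueError(
                f"{self.reference_group!r} is not a value of {self.protected_attribute!r}"
            )


output_values = {"approved", "denied"}
segments = {"gender": {"male", "female"}, "country": {"US", "FR"}}
FairnessConfig("approved", "gender", "male").validate(output_values, segments)  # passes
```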

Once these fields are populated, you will be able to view your model's fairness metrics.

Analyzing model fairness

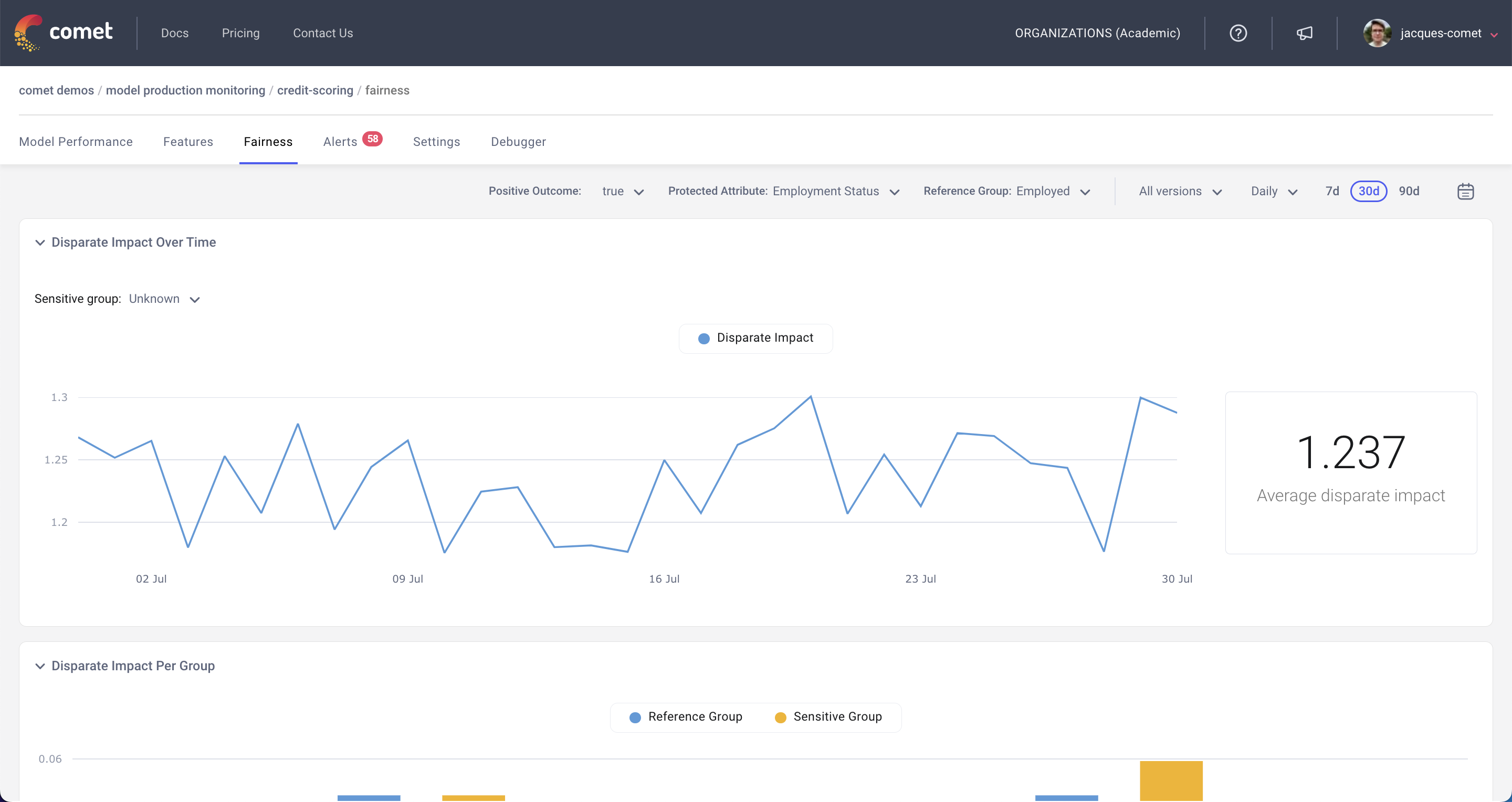

Comet's model fairness dashboard allows you to analyze your model's bias using the Disparate Impact metric defined above.

Comet's model fairness dashboard has two sections:

- Disparate Impact Over Time: Tracks model fairness over time and displays, on the right-hand side, a summary metric that is the average of the values shown in the chart. The sensitive group can be configured using the dropdown called Sensitive group.
- Disparate Impact Per Group: Tracks model fairness across all groups in the protected attribute. For each group, you can view the percentage of predictions with a positive outcome. The blue bar represents the sensitive group and is therefore always the same; the yellow bar represents the percentage of predictions with a positive outcome for the group named in the x-axis label.
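The summary metric next to the Disparate Impact Over Time chart is described as an average of the plotted values. A minimal sketch of that computation, under several assumptions: predictions are bucketed by calendar day, each day's value is the sensitive group's positive rate divided by the reference group's, and the summary is an unweighted mean of the daily values. Comet's actual bucketing and weighting may differ, and the helper `daily_disparate_impact` and its `(day, group, prediction)` row format are hypothetical.

```python
from collections import defaultdict
from datetime import date
from statistics import mean


def daily_disparate_impact(rows, sensitive, reference, positive_outcome):
    """Hypothetical helper: rows are (day, group, prediction) tuples.
    Returns {day: disparate impact} for days on which both groups appear
    and the reference group has at least one positive outcome."""
    # counts[day][group] = [positives, total]
    counts = defaultdict(lambda: defaultdict(lambda: [0, 0]))
    for day, group, prediction in rows:
        cell = counts[day][group]
        if prediction == positive_outcome:
            cell[0] += 1
        cell[1] += 1

    series = {}
    for day in sorted(counts):
        groups = counts[day]
        if sensitive not in groups or reference not in groups:
            continue  # the groups cannot be compared on this day
        s_pos, s_total = groups[sensitive]
        r_pos, r_total = groups[reference]
        if r_pos == 0:
            continue  # the ratio is undefined when the reference has no positives
        series[day] = (s_pos / s_total) / (r_pos / r_total)
    return series


d1, d2 = date(2024, 1, 1), date(2024, 1, 2)
rows = [
    (d1, "female", "approved"), (d1, "female", "denied"),
    (d1, "male", "approved"), (d1, "male", "approved"),
    (d2, "female", "approved"), (d2, "female", "approved"),
    (d2, "male", "approved"), (d2, "male", "approved"),
]
series = daily_disparate_impact(rows, "female", "male", "approved")
summary = mean(series.values())  # unweighted average of the daily values
print(summary)  # 0.75
```

An unweighted mean gives every day equal influence regardless of traffic; a prediction-weighted average would instead let high-volume days dominate, so the two can diverge when daily volumes vary.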