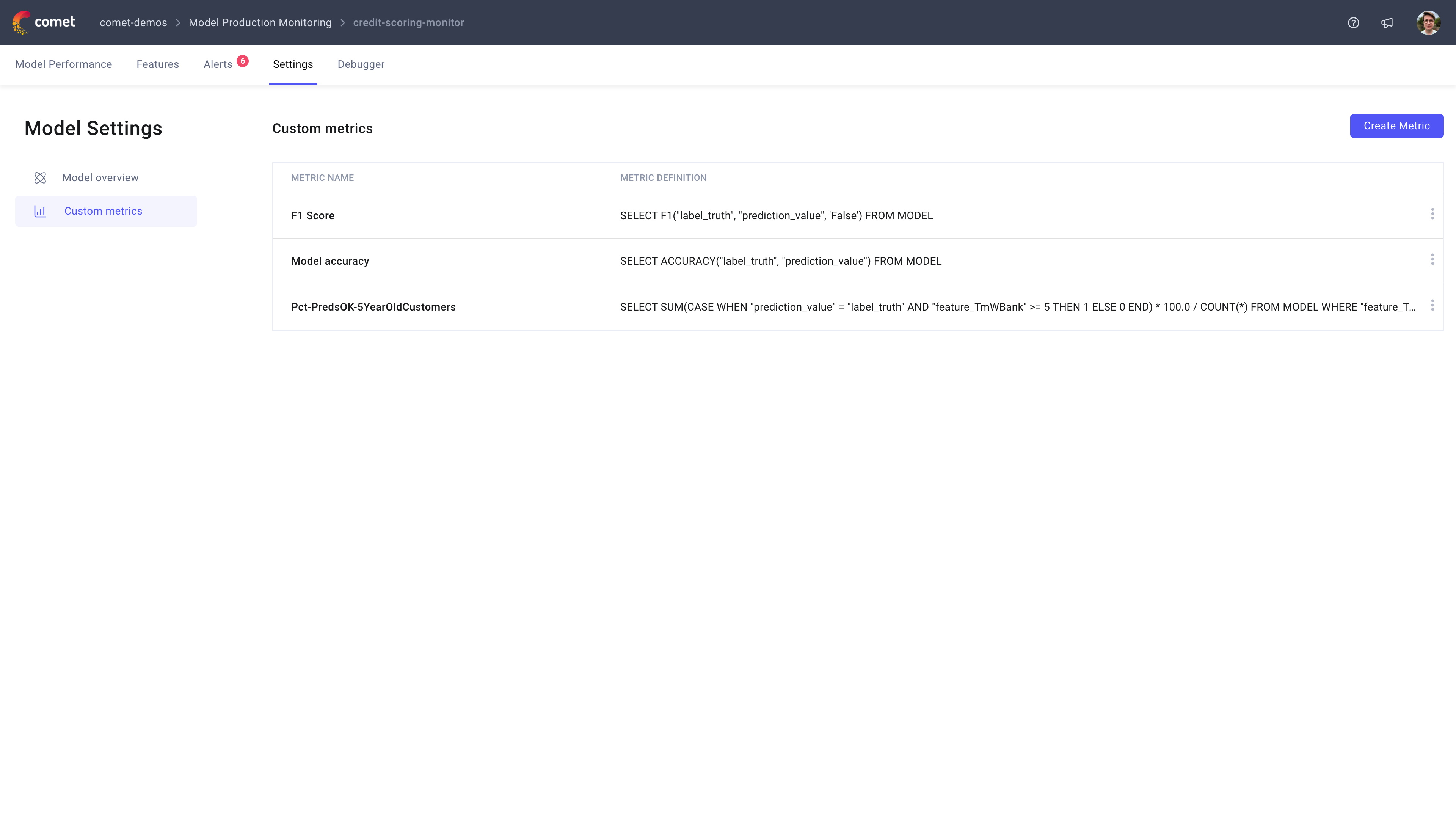

Custom metrics

Every machine learning model is unique, and no monitoring solution is one-size-fits-all. Out of the box, MPM tracks feature distributions, data drift, and accuracy metrics, but sometimes you need more. This is where MPM Custom Metrics come into play.

MPM Custom Metrics are written in a SQL-like language and can be created from the MPM settings tab.

Writing a custom metric

Custom metrics are defined using the MPM settings tab under Custom Metrics.

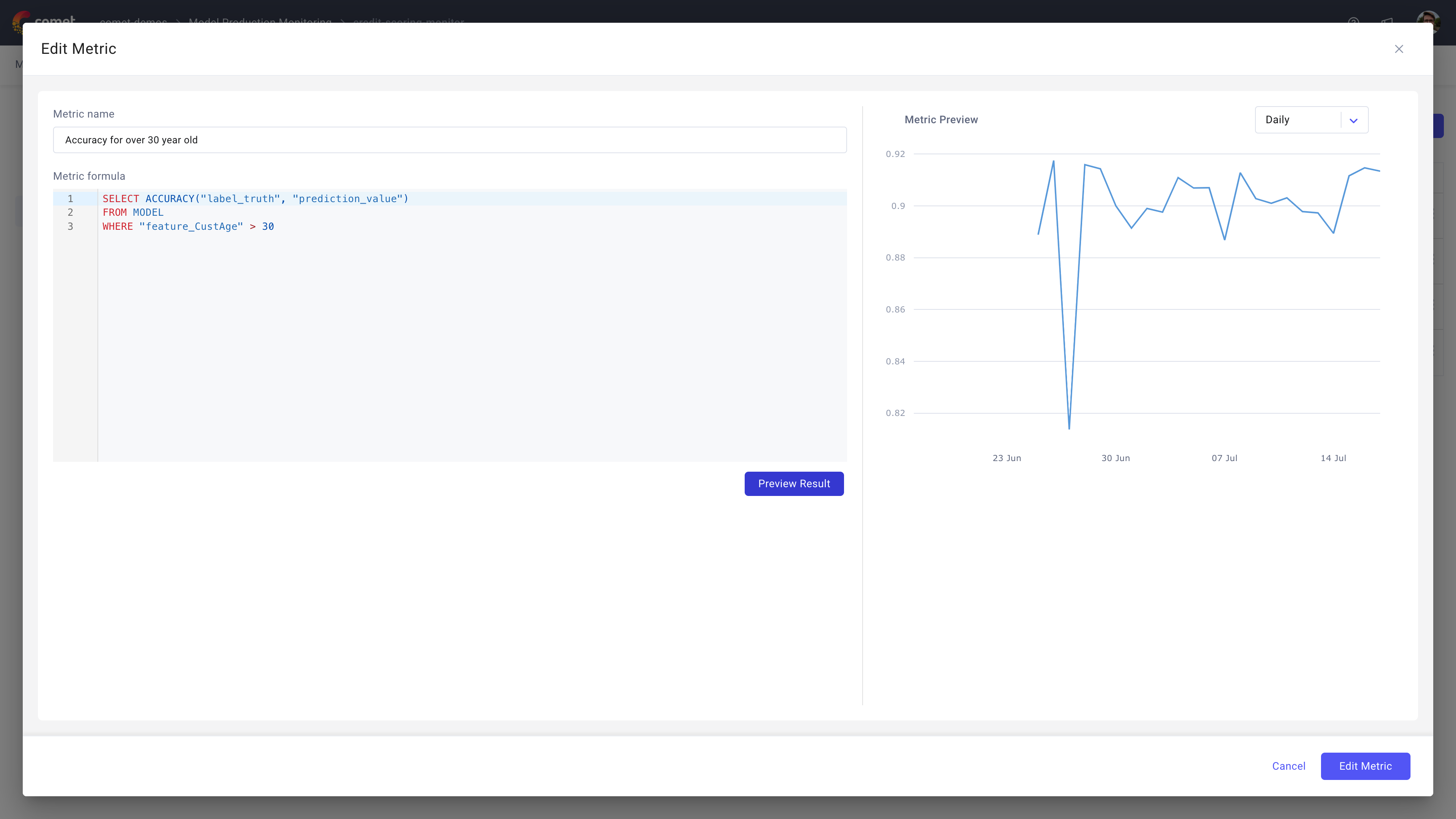

To define a metric, specify a name for the metric and the formula that computes it.

A custom metric must use the following format:

    SELECT <METRIC FORMULA>
    FROM MODEL
    WHERE <OPTIONAL FILTER>

Note

For the metric to be valid, the formula must be an aggregation over the predictions or labels that returns a single value.
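The single-value rule can be checked locally before pasting a formula into MPM. The following is a minimal sketch that uses Python's built-in sqlite3 module as a stand-in for MPM; the in-memory MODEL table, its rows, and the helper returns_single_value are assumptions made for illustration and are not part of MPM.

```python
import sqlite3

# Hypothetical stand-in for MPM's MODEL table; the rows are made up.
conn = sqlite3.connect(":memory:")
conn.execute('CREATE TABLE MODEL ("feature_CustAge" INTEGER)')
conn.executemany("INSERT INTO MODEL VALUES (?)", [(25,), (34,), (41,), (58,)])


def returns_single_value(query: str) -> bool:
    """Return True when the query yields exactly one row with one column."""
    rows = conn.execute(query).fetchall()
    return len(rows) == 1 and len(rows[0]) == 1


# An aggregation collapses all rows into one value, as the rule requires.
aggregated = returns_single_value('SELECT AVG("feature_CustAge") FROM MODEL')

# A bare column returns one row per prediction, so it would not qualify.
not_aggregated = returns_single_value('SELECT "feature_CustAge" FROM MODEL')

print(aggregated, not_aggregated)
```

SQLite is not the engine MPM uses, so this only approximates the check; the preview pane in the settings tab remains the authoritative validation.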

While writing the custom metric definition, you can see a preview of the metric on the right-hand side of the screen.

Once a metric is saved, you can view it on the Model Performance page. MPM also automatically converts the formula to a valid SQL query based on the filters you have selected (hourly vs. daily data, specific model version, etc.).

Note

You can create an alert on any of the custom metrics you have created.

Example custom metrics

Custom metrics for a subset of predictions

To compute a custom metric for a subset of the predictions, use the WHERE clause:

    SELECT count(*)
    FROM MODEL
    WHERE "feature_CustAge" > 30

Note

To filter by a feature inside the SELECT clause, for example to compute the percentage of predictions with a certain value, use a CASE statement:

    SELECT SUM(
        CASE WHEN "feature_TmWBank" >= 5
        THEN 1
        ELSE 0
        END) * 100.0 / COUNT(*)
    FROM MODEL
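To see what the two queries above return on concrete data, here is a small sketch that runs them against an in-memory SQLite table. The table contents are invented for illustration, and SQLite is only a local stand-in for MPM's own query engine.

```python
import sqlite3

# Hypothetical sample of logged predictions; values are invented.
conn = sqlite3.connect(":memory:")
conn.execute(
    'CREATE TABLE MODEL ("feature_CustAge" INTEGER, "feature_TmWBank" INTEGER)'
)
conn.executemany(
    "INSERT INTO MODEL VALUES (?, ?)",
    [(25, 2), (34, 5), (41, 7), (58, 1)],
)

# WHERE restricts the rows before the aggregation runs.
count_over_30 = conn.execute(
    'SELECT count(*) FROM MODEL WHERE "feature_CustAge" > 30'
).fetchone()[0]

# CASE keeps every row in the denominator and flags matches in the numerator.
pct_long_tenure = conn.execute(
    """
    SELECT SUM(
        CASE WHEN "feature_TmWBank" >= 5
        THEN 1
        ELSE 0
        END) * 100.0 / COUNT(*)
    FROM MODEL
    """
).fetchone()[0]

print(count_over_30)    # 3 of the 4 rows have CustAge > 30
print(pct_long_tenure)  # 2 of the 4 rows have TmWBank >= 5, i.e. 50.0
```

The difference between the two approaches is the denominator: a WHERE filter removes rows from the whole computation, while CASE keeps all rows and only changes what each one contributes.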

Accuracy related metrics

To make common custom metrics easy to create, we provide SQL functions for standard machine learning metrics:

- ACCURACY(prediction, label): Model accuracy
- PRECISION(prediction, label): Model precision
- RECALL(prediction, label): Model recall
- MAE(prediction, label): Model mean absolute error
- MSE(prediction, label): Model mean squared error
- RMSE(prediction, label): Model root mean squared error
- F1(label, prediction, positive_label): Model F1 score
- FNR(label, prediction, negative_label): False negative rate
- FPR(label, prediction, positive_label): False positive rate
- TNR(label, prediction, negative_label): True negative rate
- TPR(label, prediction, positive_label): True positive rate
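For readers who want to cross-check a value shown in MPM, the sketch below computes several of these metrics in plain Python using their standard textbook definitions. It is an assumption that MPM follows the same definitions; in particular, how MPM handles empty inputs, zero denominators, or the negative_label argument is not described here, and the Python function names are hypothetical.

```python
import math


def accuracy(predictions, labels):
    """Fraction of predictions that equal their label."""
    return sum(p == y for p, y in zip(predictions, labels)) / len(labels)


def confusion_counts(predictions, labels, positive_label):
    """Return (tp, fp, tn, fn) for a binary problem."""
    tp = fp = tn = fn = 0
    for p, y in zip(predictions, labels):
        if p == positive_label:
            if y == positive_label:
                tp += 1
            else:
                fp += 1
        elif y == positive_label:
            fn += 1
        else:
            tn += 1
    return tp, fp, tn, fn


def f1(predictions, labels, positive_label):
    """Harmonic mean of precision and recall."""
    tp, fp, _, fn = confusion_counts(predictions, labels, positive_label)
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


def fpr(predictions, labels, positive_label):
    """False positives divided by all actual negatives."""
    _, fp, tn, _ = confusion_counts(predictions, labels, positive_label)
    return fp / (fp + tn)


def mae(predictions, labels):
    """Mean absolute error."""
    return sum(abs(p - y) for p, y in zip(predictions, labels)) / len(labels)


def rmse(predictions, labels):
    """Root mean squared error."""
    mse = sum((p - y) ** 2 for p, y in zip(predictions, labels)) / len(labels)
    return math.sqrt(mse)


# Invented example data.
class_preds = [1, 0, 1, 1, 0, 0]
class_labels = [1, 0, 0, 1, 1, 0]
reg_preds = [2.0, 4.0, 7.0]
reg_labels = [1.0, 4.0, 5.0]

print(accuracy(class_preds, class_labels))
print(f1(class_preds, class_labels, positive_label=1))
print(fpr(class_preds, class_labels, positive_label=1))
print(mae(reg_preds, reg_labels))
print(rmse(reg_preds, reg_labels))
```

Note that the MPM functions do not all take their arguments in the same order: ACCURACY and the error metrics take the prediction first, while F1 and the rate metrics take the label first.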

All metrics and functions

You can use both metric and aggregate functions in your custom metric formulas:

- Aggregate metrics:

APPROX_COUNT_DISTINCT, AVG, MAX, MIN, STDDEV_POP, STDDEV_SAMP, SUM, VAR_POP, VAR_SAMP

- Metric functions:

ABS, CEIL, FLOOR, EXP, LN, LOG10, SQRT
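The two groups can be combined by applying a metric function to each row and then aggregating the result. The sketch below illustrates this with ABS nested inside AVG and MAX, again using an in-memory SQLite table as a local stand-in. The column names "prediction" and "label" and the sample rows are hypothetical, and it is an assumption that MPM accepts this kind of nesting the way standard SQL does.

```python
import sqlite3

# Hypothetical regression outputs; column names and values are invented.
conn = sqlite3.connect(":memory:")
conn.execute('CREATE TABLE MODEL ("prediction" REAL, "label" REAL)')
conn.executemany(
    "INSERT INTO MODEL VALUES (?, ?)",
    [(2.0, 1.0), (4.0, 4.0), (7.0, 5.0)],
)

# ABS runs per row; AVG and MAX then reduce the rows to a single value.
mean_abs_error, worst_abs_error = conn.execute(
    """
    SELECT AVG(ABS("prediction" - "label")),
           MAX(ABS("prediction" - "label"))
    FROM MODEL
    """
).fetchone()

print(mean_abs_error)   # absolute errors are 1, 0 and 2, so the mean is 1.0
print(worst_abs_error)  # the largest absolute error is 2.0
```

A custom metric in MPM returns a single value, so each of the two expressions would be saved as its own metric rather than selected together as in this local sketch.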