Integrate CometLLM with OpenAI

CometLLM offers a direct integration with OpenAI.

You just need to initialize the Comet LLM SDK, and CometLLM will automatically log the prompt, model output, and other relevant metadata for any OpenAI client.chat.completions.create() call.
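To build intuition for what "automatically log" means here, the sketch below wraps a function so that each call records its inputs, output, and duration. It is an illustration only and not how CometLLM is implemented; `log_calls`, `LOGGED_CALLS`, and `fake_chat_completion` are hypothetical names, and the log lives in memory rather than in a Comet project.

```python
import functools
import time
from typing import Any, Callable, Dict, List

# Hypothetical in-memory log; a real tracker would send this to a backend.
LOGGED_CALLS: List[Dict[str, Any]] = []


def log_calls(fn: Callable[..., Any]) -> Callable[..., Any]:
    """Wrap fn so every call records its keyword inputs, output, and duration."""

    @functools.wraps(fn)
    def wrapper(*args: Any, **kwargs: Any) -> Any:
        start = time.perf_counter()
        output = fn(*args, **kwargs)
        LOGGED_CALLS.append(
            {
                "inputs": kwargs,
                "output": output,
                "duration_s": time.perf_counter() - start,
            }
        )
        return output

    return wrapper


@log_calls
def fake_chat_completion(*, model: str, messages: List[Dict[str, str]]) -> str:
    """Stand-in for an LLM call; returns a canned reply instead of calling an API."""
    return f"echo: {messages[-1]['content']}"


reply = fake_chat_completion(
    model="demo-model", messages=[{"role": "user", "content": "hello"}]
)
```

After the call, `LOGGED_CALLS` holds one record with the model name, the messages, and the reply, which mirrors the kind of data the integration captures for you without changes to the calling code.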

Below you can find step-by-step instructions on how to add CometLLM tracking to your OpenAI projects, along with an end-to-end example. Additional information is also available in the Integration: Third-party tools section of the Comet docs.

Tip

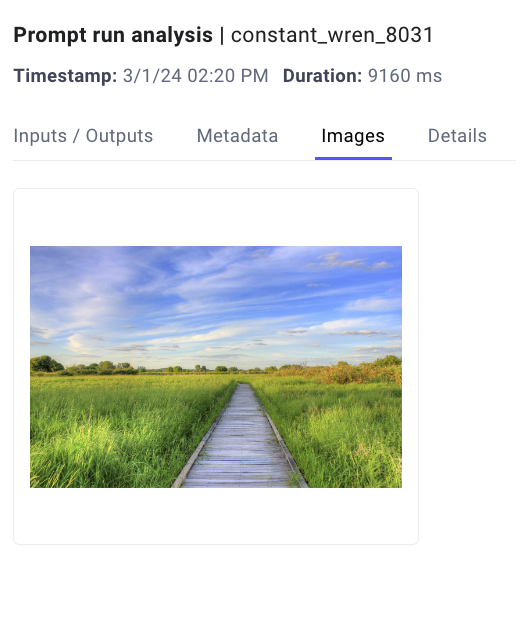

CometLLM supports both text and images as inputs for your OpenAI LLM calls!
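As a rough sketch of what a combined text and image input looks like, the helper below builds a message in the content-parts format used by the OpenAI chat completions API. `build_vision_messages` is a hypothetical helper name and the image URL is a placeholder; the snippet only builds the payload and does not call OpenAI or Comet.

```python
from typing import Any, Dict, List


def build_vision_messages(prompt: str, image_url: str) -> List[Dict[str, Any]]:
    """Build a single user message that mixes a text part and an image URL part."""
    return [
        {
            "role": "user",
            "content": [
                {"type": "text", "text": prompt},
                {"type": "image_url", "image_url": {"url": image_url}},
            ],
        }
    ]


messages = build_vision_messages(
    "What is in this image?",
    "https://example.com/cat.png",  # placeholder URL, not a real asset
)
```

The resulting list can be passed as the `messages` argument of `client.chat.completions.create()` together with a vision-capable model of your choice.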

What information is automatically tracked?

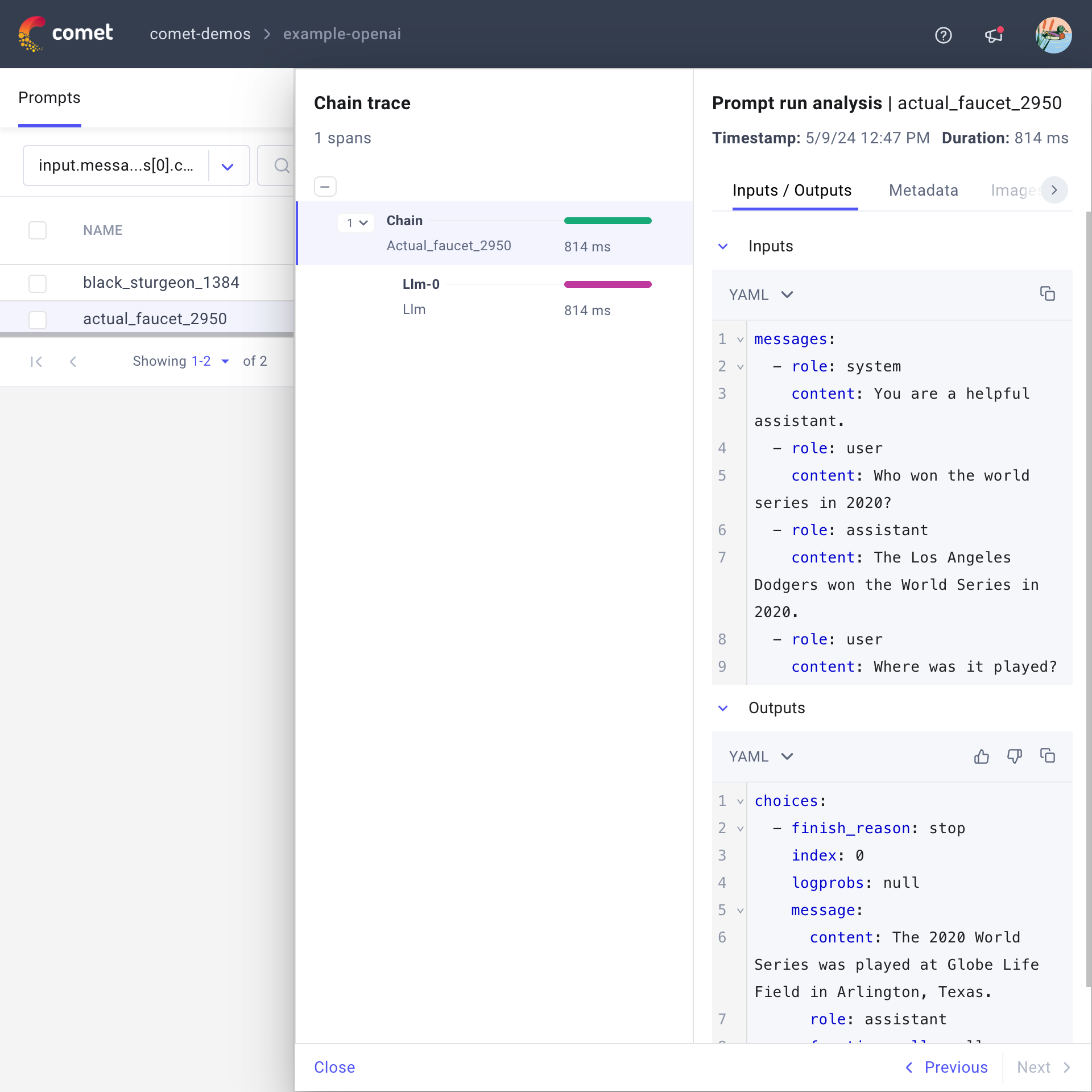

Each OpenAI call is logged as a chain in the CometLLM Project.

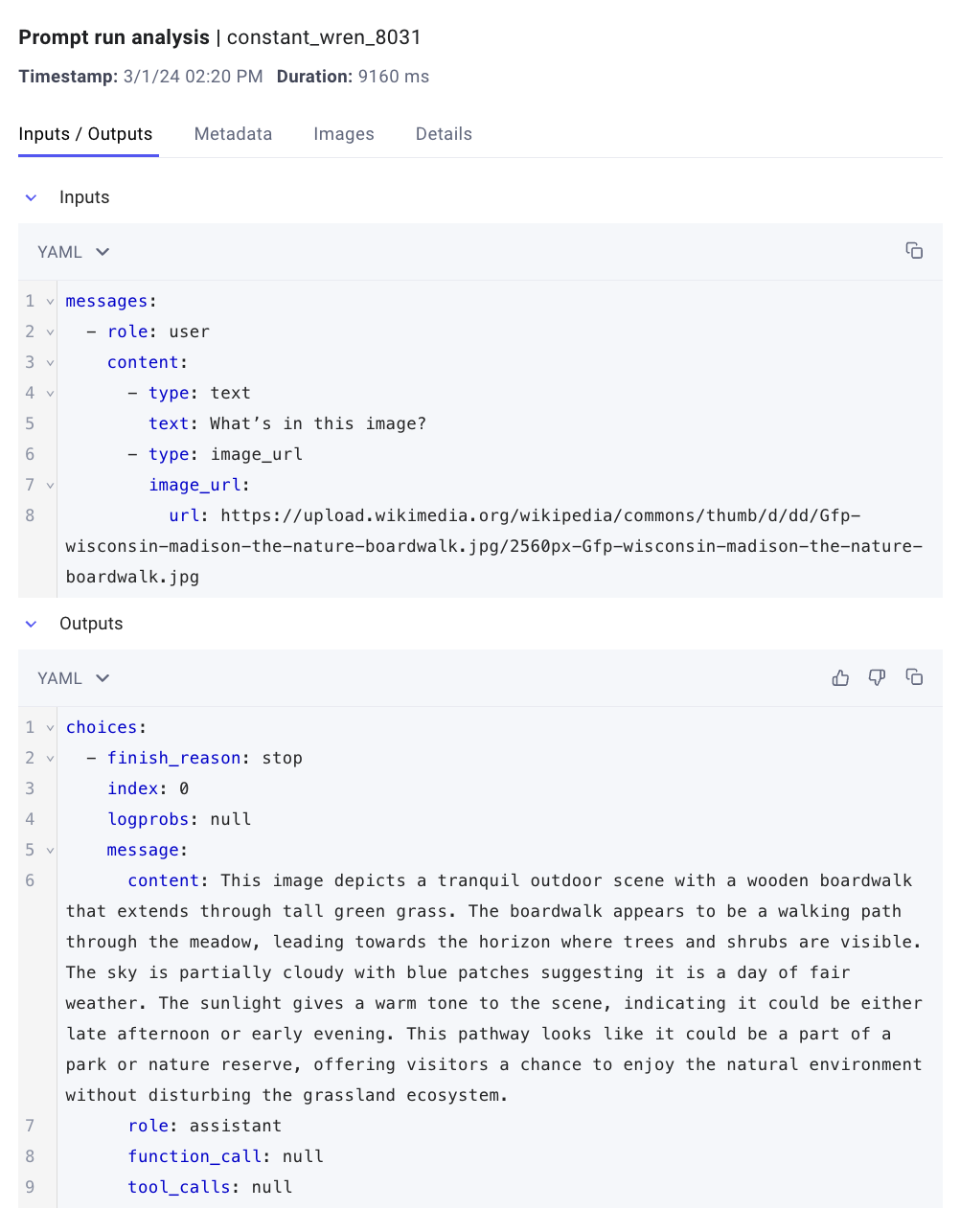

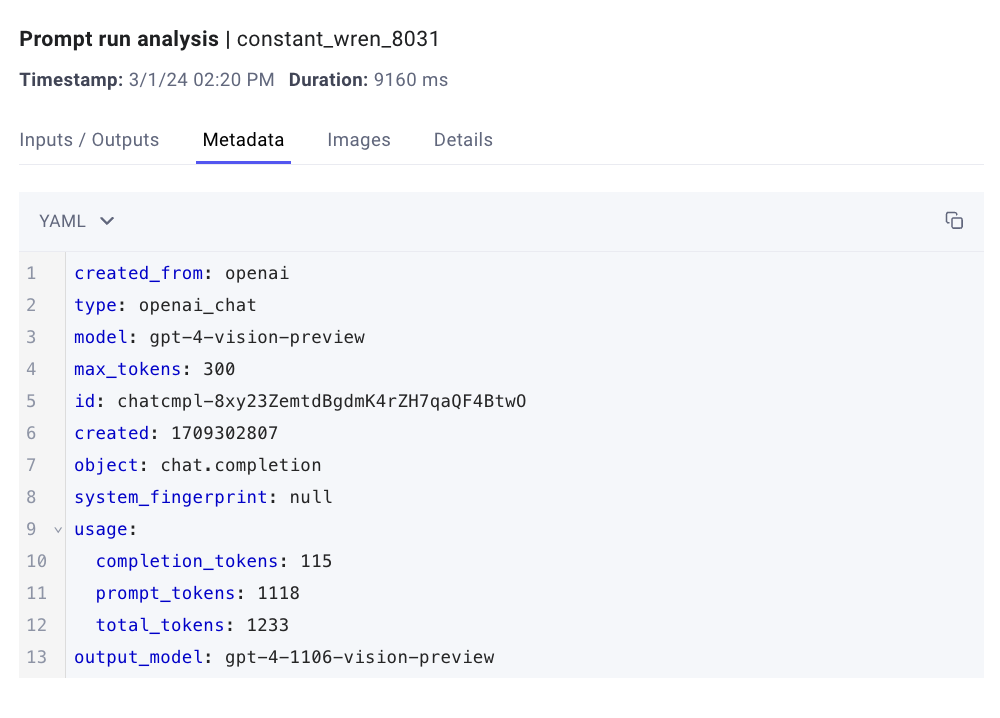

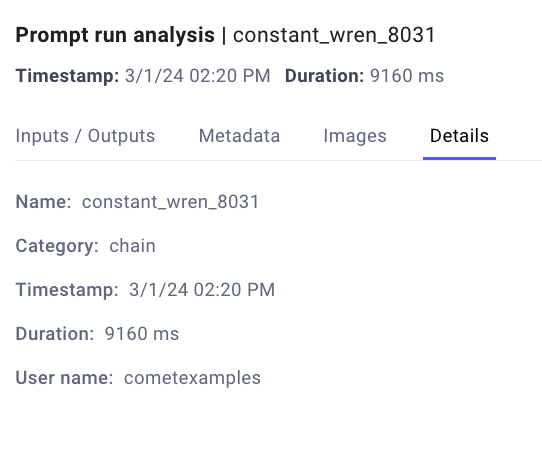

Below is a description of the information you can find in each tab of the Prompt Run Analysis sidebar module for prompt management with OpenAI.

Please refer to the EXAMPLE screenshot for information on the exact metadata fields automatically logged, and resulting YAML format returned, by CometLLM.

Add Comet tracking to your OpenAI code

The CometLLM integration with OpenAI is a one-step process: you initialize comet-llm with your Comet API key and your desired project and workspace values.

Pre-requisites

Before getting started, you need to make sure to:

- Install the comet_llm and openai packages with pip.

- Store the OpenAI API key as an environment variable with:

      export OPENAI_API_KEY="<your-api-key>"

  Find more instructions in the OpenAI Best Practices for API Key safety guide.

- Write your OpenAI application inside a Python script or Jupyter notebook.
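The OpenAI Python client reads its key from the `OPENAI_API_KEY` environment variable, so a quick check before running your application can save a confusing authentication error. The snippet below is a minimal sketch of such a check; `missing_env_vars` is a hypothetical helper, not part of either SDK.

```python
import os
from typing import Iterable, List, Mapping


def missing_env_vars(
    names: Iterable[str], environ: Mapping[str, str] = os.environ
) -> List[str]:
    """Return the names that are unset or empty in the given environment."""
    return [name for name in names if not environ.get(name)]


missing = missing_env_vars(["OPENAI_API_KEY"])
if missing:
    print(f"Set these environment variables before running: {', '.join(missing)}")
```

Passing the environment as a parameter keeps the helper easy to try with a plain dictionary, without touching your real shell settings.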

1. Initialize comet-llm

The first and only step to set up the CometLLM OpenAI integration is to initialize the Comet LLM SDK.

This requires you to add two lines of code: the import comet_llm statement and the comet_llm.init() call shown at the top of the end-to-end example below.

Then provide your Comet API key when prompted in the terminal.

That's it! Simply run your OpenAI application and take advantage of CometLLM logging to track and manage your OpenAI prompt engineering workflows.
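If you prefer to pass the project and workspace explicitly rather than rely on defaults, one possible approach is sketched below. It assumes that `comet_llm.init` accepts `api_key`, `workspace`, and `project` keyword arguments; please check this against the SDK reference for your installed version. `build_init_kwargs` and `init_comet` are hypothetical helpers, and `my-workspace` is a made-up workspace name.

```python
from typing import Dict, Optional


def build_init_kwargs(
    project: str,
    workspace: Optional[str] = None,
    api_key: Optional[str] = None,
) -> Dict[str, str]:
    """Keep only the values that were provided.

    Omitted values are left for comet_llm to resolve on its own,
    for example through the terminal prompt for the API key.
    """
    candidates = {"project": project, "workspace": workspace, "api_key": api_key}
    return {key: value for key, value in candidates.items() if value is not None}


def init_comet(**kwargs: str) -> None:
    """Call comet_llm.init with the given keyword arguments (assumed signature)."""
    import comet_llm  # imported lazily so build_init_kwargs works without the SDK

    comet_llm.init(**kwargs)


kwargs = build_init_kwargs(project="example-openai", workspace="my-workspace")
# init_comet(**kwargs)  # uncomment once comet_llm is installed
```

Avoid hard-coding the API key in source files; leaving `api_key` unset keeps the key out of version control.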

End-to-end example

Below is an example OpenAI model call with CometLLM tracking set up.

```python
import comet_llm
from openai import OpenAI

# Initialize the Comet LLM SDK
comet_llm.init(project="example-openai")

# Create your OpenAI application
client = OpenAI()

response = client.chat.completions.create(
    model="gpt-3.5-turbo",
    messages=[
        {
            "role": "system",
            "content": "You will be provided with text, and your task is to translate it into emojis. Do not use any regular text. Do your best with emojis only."
        },
        {
            "role": "user",
            "content": "Artificial intelligence is a technology with great promise."
        }
    ],
    temperature=0.8,
    max_tokens=64,
    top_p=1
)

print(response.choices[0].message.content)
```

Executing this code prints the following output to your terminal:

🤖💡👍🏼

You can access the model output plus input prompts and other useful details, such as temperature and token usage, from the Prompt sidebar in the Comet UI, as showcased in the screenshot below.
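The token counts are also available locally on the response object returned by the OpenAI client, under `response.usage`. The sketch below shows one way to read them; `summarize_usage` is a hypothetical helper, and the stand-in response uses made-up numbers so the snippet runs without an API call.

```python
from types import SimpleNamespace
from typing import Any, Dict


def summarize_usage(response: Any) -> Dict[str, int]:
    """Extract token counts from a chat completion response object."""
    usage = response.usage
    return {
        "prompt_tokens": usage.prompt_tokens,
        "completion_tokens": usage.completion_tokens,
        "total_tokens": usage.total_tokens,
    }


# Stand-in object with made-up numbers, shaped like the fields read above.
fake_response = SimpleNamespace(
    usage=SimpleNamespace(prompt_tokens=40, completion_tokens=6, total_tokens=46)
)
summary = summarize_usage(fake_response)
```

In a real script you would pass the `response` returned by `client.chat.completions.create()` instead of `fake_response`.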

Try it out!

Click on the button below to explore example projects with text and vision input prompts, and get started with OpenAI LLM Projects in the Comet UI!

Additionally, you can review the codebase behind the two Comet projects above by clicking on the Colab link below.