Integrate CometLLM with LangChain

LangChain offers a direct integration with CometLLM as part of its community module via the CometTracer callback.

The integration is a one-line addition to your LangChain LLM invocations that lets CometLLM automatically log:

- LLM parameters

- Input prompts

- Model output

- Execution details

- Tool calls and configurations
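To make the categories above concrete, here is a minimal, library-free sketch of the kind of record a tracing callback might assemble around a single model call. The `TraceRecord` class and `traced_call` helper are hypothetical names invented for illustration; they do not reflect how CometTracer is implemented or the schema CometLLM actually stores.

```python
import time
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List


@dataclass
class TraceRecord:
    """Hypothetical container mirroring the categories listed above."""

    parameters: Dict[str, Any]  # LLM parameters
    prompt: str  # input prompt
    output: str = ""  # model output
    duration_s: float = 0.0  # execution details
    tool_calls: List[str] = field(default_factory=list)  # tool calls


def traced_call(
    model: Callable[[str], str], prompt: str, parameters: Dict[str, Any]
) -> TraceRecord:
    """Run `model` on `prompt` and capture what a tracer might log."""
    record = TraceRecord(parameters=parameters, prompt=prompt)
    start = time.perf_counter()
    record.output = model(prompt)
    record.duration_s = time.perf_counter() - start
    return record


# A stand-in "model" (str.upper) so the sketch runs without any LLM provider.
record = traced_call(str.upper, "i love python", {"temperature": 0.0})
print(record.output)  # I LOVE PYTHON
```

With the real integration you do not write any of this yourself; the callback collects the equivalent information for you.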

Below you can find step-by-step instructions on how to add CometLLM tracking to your LangChain projects, followed by an end-to-end example. Additional information is also available in the Integration: Third-party tools section of the Comet docs.

Add Comet tracking to your LangChain code

The CometLLM integration with LangChain is a two-step process:

- Initialize comet-llm with your Comet API key and desired project and workspace.

- Add the CometTracer callback to your model invocation.

Pre-requisites

Before getting started, you need to make sure to:

- Install the comet_llm and langchain packages with pip.

- Install the chosen LangChain module for your LLM application and set up your API key for the LLM provider as an environment variable.

  For this guide, you'll need to run:

      pip install langchain-anthropic
      export ANTHROPIC_API_KEY="<your-api-key>"

  Find more instructions in the LangChain quickstart guide.

- Write your LangChain application inside a Python script or Jupyter notebook.
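Before moving on, it can help to confirm that the provider key is actually visible to Python. The following is a minimal sketch; the `missing_env_vars` helper is a hypothetical name, and only `ANTHROPIC_API_KEY` comes from this guide.

```python
import os
from typing import Iterable, List, Mapping


def missing_env_vars(
    names: Iterable[str], env: Mapping[str, str] = os.environ
) -> List[str]:
    """Return the names that are unset or empty in `env`."""
    return [name for name in names if not env.get(name)]


# Demonstration with explicit mappings instead of the real environment.
print(missing_env_vars(["ANTHROPIC_API_KEY"], env={}))
# ['ANTHROPIC_API_KEY']
print(missing_env_vars(["ANTHROPIC_API_KEY"], env={"ANTHROPIC_API_KEY": "sk-test"}))
# []
```

Calling `missing_env_vars(["ANTHROPIC_API_KEY"])` without the `env` argument checks the real process environment.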

1. Initialize comet-llm

The first step to set up the CometLLM LangChain integration is to initialize the Comet LLM SDK.

This requires you to add the following two lines of code:

    import comet_llm

    comet_llm.init(project="example-langchain")

And provide your Comet API key when prompted in the terminal.
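If you would rather not be prompted, one option is to read the credentials from environment variables and pass them explicitly. The sketch below assumes that `comet_llm.init` accepts `api_key`, `workspace`, and `project` keyword arguments and that you export `COMET_API_KEY` and `COMET_WORKSPACE` yourself; verify both assumptions against the Comet SDK reference. `build_init_kwargs` and `init_comet` are hypothetical helper names.

```python
import os
from typing import Dict, Mapping


def build_init_kwargs(
    project: str, env: Mapping[str, str] = os.environ
) -> Dict[str, str]:
    """Collect keyword arguments for comet_llm.init from `env`."""
    kwargs = {"project": project}
    # Assumed variable names; only values that are set get forwarded.
    if env.get("COMET_API_KEY"):
        kwargs["api_key"] = env["COMET_API_KEY"]
    if env.get("COMET_WORKSPACE"):
        kwargs["workspace"] = env["COMET_WORKSPACE"]
    return kwargs


def init_comet(project: str = "example-langchain") -> None:
    """Initialize the SDK, skipping the prompt when a key is available."""
    import comet_llm  # imported lazily so the helper above works without it

    comet_llm.init(**build_init_kwargs(project))


demo = build_init_kwargs("example-langchain", env={"COMET_API_KEY": "abc"})
print(demo)  # {'project': 'example-langchain', 'api_key': 'abc'}
```

Call `init_comet()` once at the start of your script in place of the plain `comet_llm.init(...)` line.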

2. Add the CometTracer callback

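The second and last step is to pass the CometTracer callback to your model invocation.

For example, with the chain defined in the end-to-end example below:

    from langchain_community.callbacks.tracers.comet import CometTracer

    chain.invoke(
        {
            "input_language": "English",
            "output_language": "Korean",
            "text": "I love Python",
        },
        config={"callbacks": [CometTracer()]},
    )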
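When an invocation already carries a config (for instance tags or other callbacks), the tracer can be appended instead of overwriting what is there. The sketch below uses a hypothetical `with_tracer` helper and assumes that any existing `callbacks` value is a plain list; a placeholder object stands in for `CometTracer()` so the code runs without LangChain installed.

```python
from typing import Any, Dict, Optional


def with_tracer(
    tracer: Any, config: Optional[Dict[str, Any]] = None
) -> Dict[str, Any]:
    """Return a copy of `config` with `tracer` appended to its callbacks."""
    merged = dict(config or {})
    # Build a new list so the caller's config is never mutated.
    merged["callbacks"] = list(merged.get("callbacks") or []) + [tracer]
    return merged


# A plain object stands in for CometTracer() so the sketch runs anywhere.
tracer = object()
existing = {"tags": ["demo"], "callbacks": ["other-handler"]}
config = with_tracer(tracer, existing)
print(config["callbacks"] == ["other-handler", tracer])  # True
print(existing["callbacks"])  # ['other-handler']
```

In real code you would pass `with_tracer(CometTracer(), existing)` as the `config` argument of `chain.invoke`.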

End-to-end example

Below is an example LangChain chain with CometLLM tracking set up.

    import comet_llm
    from langchain_anthropic import ChatAnthropic
    from langchain_core.prompts import ChatPromptTemplate
    from langchain_community.callbacks.tracers.comet import CometTracer

    # Initialize the Comet LLM SDK
    comet_llm.init(project="example-langchain")

    # Create your LangChain application
    model = ChatAnthropic(model="claude-3-opus-20240229")

    system = (
        "You are a helpful assistant that translates {input_language} to {output_language}."
    )
    human = "{text}"
    prompt = ChatPromptTemplate.from_messages([("system", system), ("human", human)])

    chain = prompt | model

    # Invoke the chain with the CometTracer callback attached
    chain.invoke(
        {
            "input_language": "English",
            "output_language": "Korean",
            "text": "I love Python",
        },
        config={"callbacks": [CometTracer()]},
    )

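Executing this code returns the following message, which a notebook or interactive Python session displays automatically (in a script, wrap the call in print() to see it in your terminal):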

AIMessage(content='저는 파이썬을 사랑합니다.\n\n(jeo-neun pa-i-sseon-eul sa-rang-ham-ni-da.)', response_metadata={'id': 'msg_01ShZPYUvCms1YuqykGMN8yU', 'model': 'claude-3-opus-20240229', 'stop_reason': 'end_turn', 'stop_sequence': None, 'usage': {'input_tokens': 22, 'output_tokens': 46}}, id='run-1ecbc6d6-d2f4-42b4-bb6f-cd390131d22e-0')

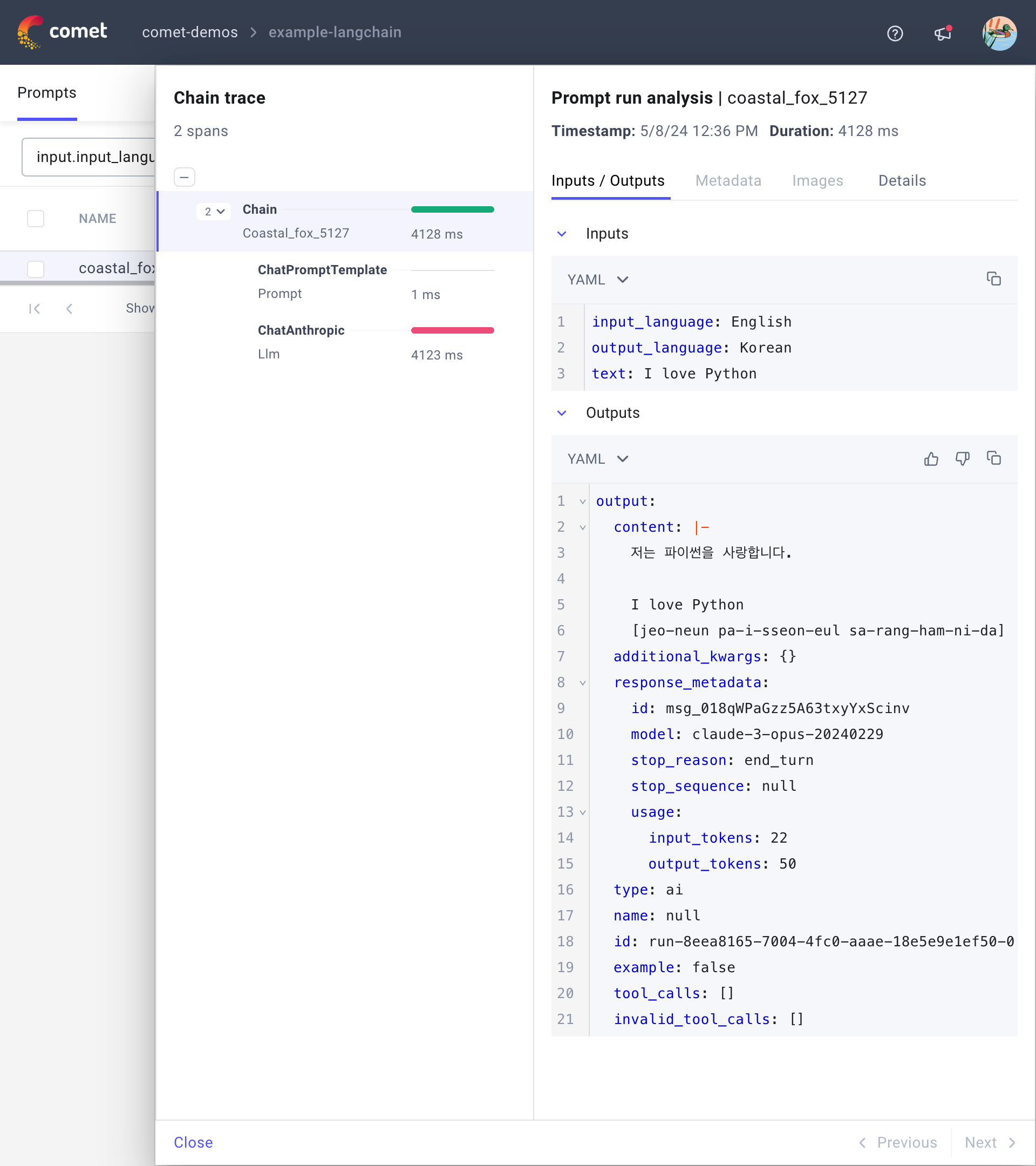

You can access the same information (i.e., model output and response metadata) plus input prompt and other useful details, such as execution duration and timestamp, from the Prompt sidebar in the Comet UI, as showcased in the screenshot below.
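The response metadata in the sample output above can also be read programmatically. Here is a minimal sketch that sums the token counts; the dictionary literal is copied from that sample output, and `total_tokens` is a hypothetical helper name. In a real run you would read the same dictionary from the `response_metadata` attribute of the returned message.

```python
from typing import Any, Mapping

# Copied from the sample output in this guide.
response_metadata = {
    "id": "msg_01ShZPYUvCms1YuqykGMN8yU",
    "model": "claude-3-opus-20240229",
    "stop_reason": "end_turn",
    "stop_sequence": None,
    "usage": {"input_tokens": 22, "output_tokens": 46},
}


def total_tokens(metadata: Mapping[str, Any]) -> int:
    """Sum input and output tokens, treating missing counts as zero."""
    usage = metadata.get("usage") or {}
    return usage.get("input_tokens", 0) + usage.get("output_tokens", 0)


print(total_tokens(response_metadata))  # 68
```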

Tip

You can add the CometTracer() callback to most LangChain objects, such as Chains, Models, Tools, and Agents.

Find more information in the official LangChain Callbacks documentation.

Try it out!

Click on the button below to explore an example project and get started with LangChain LLM Projects in the Comet UI!

Additionally, you can review an example notebook with more Comet-powered LangChain snippets by clicking on the tutorial below.