Configure the Optimizer

Comet Optimizer offers an easy-to-use interface for model tuning that supports any ML framework and can easily be integrated into any of your workflows.

The main setup step is to define the tuning configuration for the Optimizer in a configuration dictionary.

Below, we provide a complete reference for the Optimizer configuration specs, followed by configuration examples for all supported search algorithms.

Note

For information on how to run end-to-end tuning, please refer to the Quickstart.

🚀 Going beyond? Jump to the Run hyperparameter tuning in parallel page to learn how to parallelize your tuning job!

The structure of the configuration dictionary

The Optimizer configuration is defined as a JSON dictionary that consists of five sections:

| Key | Type | Description |
|---|---|---|
| algorithm | String | The search algorithm to use. |
| spec | Dictionary | The algorithm-specific specifications. |
| parameters | Dictionary | The parameter distribution space. |
| name | String | (Optional) A custom name to associate with the hyperparameter search instance. |
| trials | Integer | (Optional) The number of trials to run per parameter set. Defaults to 1. |

You can define the configuration dictionary directly in code or in a configuration file that your script reads.

Tip

We recommend defining your configuration dictionary in an optimizer.config file, rather than directly in code, for better reproducibility and visibility!
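The following is a minimal sketch. It assumes the optimizer.config file holds plain JSON, builds the dictionary in Python with all five top-level keys, saves it to disk, and loads it back the way a tuning script would. The parameter, its bounds, and the name are illustrative values.

```python
import json
from pathlib import Path

# Illustrative configuration using the five top-level keys.
config = {
    "algorithm": "random",
    "spec": {"metric": "loss", "maxCombo": 10},
    "parameters": {
        "learning_rate": {
            "type": "float",
            "scaling_type": "loguniform",
            "min": 0.0001,
            "max": 0.1,
        },
    },
    "name": "my-first-search",  # optional, hypothetical name
    "trials": 1,  # optional, defaults to 1
}

# Save the configuration as JSON in an optimizer.config file...
config_path = Path("optimizer.config")
config_path.write_text(json.dumps(config, indent=2))

# ...and load it back inside the tuning script.
loaded_config = json.loads(config_path.read_text())
```

Because the file is separate from the code, you can keep it under version control and see which search space produced which results.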

Deep-dive into the dictionary configuration

This section describes each of the five sections (i.e., keys) of the Optimizer configuration dictionary in detail.

The algorithm field

algorithm is a mandatory configuration key that specifies the search algorithm to use.

Possible options are: random, grid, and bayes.

| Algorithm | Description |
|---|---|
| "random" | For the Random search algorithm. Random search randomly selects hyperparameter values from the defined search space, for the specified number of trials. |
| "grid" | For the Grid search algorithm. Grid search picks parameter values from discrete, possibly sampled, regions. |
| "bayes" | For the Bayesian search algorithm. Bayes is a search algorithm based on distributions that balances exploitation and exploration to suggest which hyperparameter values to try next. |

You can discover more about each algorithm from our Hyperparameter Optimization with Comet blog post.

The spec field

spec is a mandatory configuration key used to define algorithm-specific specifications.

Note that the supported specs vary slightly between search algorithms, as shown in the "Use it for" column of the table below.

| Key | Value | Use it for |
|---|---|---|
| metric | String. The name of the metric to optimize. Default: "loss". | random, grid, bayes |
| objective | String. Whether to "minimize" or "maximize" the objective metric. Default: "minimize". | bayes |
| maxCombo | Integer. The maximum number of parameter combinations to try. Default: None, i.e., all possible combinations (considering the specified gridSize). | random, grid, bayes |
| gridSize | Integer. The number of points or intervals to use when discretizing the parameter space in grid ranges. Default: 10. | random, grid |
| minSampleSize | Integer. The minimum number of samples or data points used to find appropriate grid ranges. Default: 100. | random, grid |
| retryLimit | Integer. The maximum number of retries for creating a unique parameter set before giving up. Default: 20. | random, grid, bayes |
| retryAssignLimit | Integer. The maximum number of retries for reassigning non-completed experiments. Default: 0. | random, grid, bayes |
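The table can also be read as data. The hypothetical build_spec helper below is not part of the Comet SDK. It transcribes the defaults and the "Use it for" column, then assembles a spec for a given algorithm, rejecting keys that the algorithm does not use. maxCombo's None default is left out so the Optimizer applies its own default. The "accuracy" metric name is an assumption.

```python
# Default values and supported algorithms, transcribed from the table above.
SPEC_TABLE = {
    "metric": ("loss", {"random", "grid", "bayes"}),
    "objective": ("minimize", {"bayes"}),
    "maxCombo": (None, {"random", "grid", "bayes"}),  # None: all combinations
    "gridSize": (10, {"random", "grid"}),
    "minSampleSize": (100, {"random", "grid"}),
    "retryLimit": (20, {"random", "grid", "bayes"}),
    "retryAssignLimit": (0, {"random", "grid", "bayes"}),
}


def build_spec(algorithm: str, **overrides) -> dict:
    """Return the spec keys that apply to `algorithm`, with overrides applied."""
    unsupported = [
        key
        for key in overrides
        if key not in SPEC_TABLE or algorithm not in SPEC_TABLE[key][1]
    ]
    if unsupported:
        raise ValueError(f"{unsupported} not supported by {algorithm!r}")
    # Skip None defaults (maxCombo) so the Optimizer applies its own default.
    spec = {
        key: default
        for key, (default, algorithms) in SPEC_TABLE.items()
        if algorithm in algorithms and default is not None
    }
    spec.update(overrides)
    return spec


bayes_spec = build_spec("bayes", metric="accuracy", objective="maximize", maxCombo=50)
```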

When setting the values for these specs, keep in mind that:

- objective is only needed for the bayes algorithm, where it drives the smart hyperparameter selection.
- maxCombo should be left at its default for a complete grid search. For random search and bayes search, consider setting it lower than the maximum number of combinations to take advantage of their underlying hyperparameter selection logic. The maximum number of combinations is defined as:

  \[ max\_combos = (gridSize \times n\_continuous) \times \prod_{i=1}^{n\_discrete} n\_discrete\_values_i \]

  where \(n\_continuous\) is the number of continuous parameters, \(n\_discrete\) is the number of discrete parameters, and \(n\_discrete\_values_i\) is the number of possible values of the \(i\)-th discrete parameter.

  Find example calculations in the Examples of configuration dictionary section below.
- gridSize and minSampleSize help control the computational complexity of the optimization process. They trade off search completeness against cost, which is fundamental when dealing with large search spaces. Note that the bayes search algorithm treats each parameter as a distribution, sampled according to the performance of previous hyperparameter selections, so these two spec keys don't apply.
- retryLimit failures can be due to numerical instability, resource constraints, or data issues while the script runs. retryAssignLimit failures can be due to network instability or resource allocation issues before the script runs.

Note

In total, you run maxCombo * trials training runs.
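The max_combos formula above and the total from this note can be checked with a few lines of Python. The function names are hypothetical. The code is a direct transcription of the formula, not Comet's internal implementation.

```python
from math import prod


def max_combos(grid_size: int, n_continuous: int, discrete_value_counts: list) -> int:
    """Maximum number of combinations, following the max_combos formula.

    discrete_value_counts holds the number of possible values of each
    discrete (or categorical) parameter.
    """
    return (grid_size * n_continuous) * prod(discrete_value_counts)


def total_runs(max_combo: int, trials: int = 1) -> int:
    """Total training runs: each of the maxCombo parameter sets runs `trials` times."""
    return max_combo * trials


# One continuous parameter plus one discrete parameter with three values.
print(max_combos(10, 1, [3]))  # 30
# Three continuous parameters and no discrete ones.
print(max_combos(10, 3, []))  # 30
# 25 parameter combinations, each repeated for 2 trials.
print(total_runs(25, trials=2))  # 50
```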

Tip

To optimize multiple metrics simultaneously, you can set metric to a weighted sum of multiple metrics. For example:

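Here is a sketch of one way to do this. It assumes you compute the combined value yourself in the training script and log it under a custom metric name (for example with experiment.log_metric), and then point the spec at that name. The metric names, weights, and values are hypothetical. If you mix "lower is better" metrics such as loss with "higher is better" ones, flip the sign of one group so that all terms push in the same direction.

```python
# Hypothetical metric names and weights; adapt them to your own task.
WEIGHTS = {"accuracy": 0.7, "f1": 0.3}


def combined_score(metrics: dict, weights: dict = WEIGHTS) -> float:
    """Return the weighted sum of the selected metrics."""
    return sum(weight * metrics[name] for name, weight in weights.items())


# In the training script, compute the combined value from the epoch's metrics
# and log it under a custom name, e.g. experiment.log_metric("combined_score", score).
score = combined_score({"accuracy": 0.9, "f1": 0.8, "loss": 0.35})

# In the configuration, point the spec at the combined metric. Both components
# are "higher is better", so the bayes objective is "maximize".
spec = {"metric": "combined_score", "objective": "maximize"}
```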

The parameters field

parameters is a mandatory configuration key that expects a dictionary of all the hyperparameters to be optimized.

The following example shows a possible parameters definition with three different parameter types:

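A parameters definition with three parameter types might look like the sketch below. The parameter names, ranges, and values are illustrative assumptions.

```python
parameters = {
    # Integer parameter sampled from a range.
    "num_epochs": {"type": "integer", "scaling_type": "uniform", "min": 5, "max": 20},
    # Float parameter sampled on a log scale.
    "learning_rate": {
        "type": "float",
        "scaling_type": "loguniform",
        "min": 0.0001,
        "max": 0.1,
    },
    # Categorical parameter with a fixed list of options.
    "activation": {"type": "categorical", "values": ["relu", "tanh", "sigmoid"]},
}
```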

The hyperparameter format is inspired by Google's Vizier, as exemplified by its open-source implementation, Advisor. As such, each hyperparameter is defined as a key-value pair where the key is the hyperparameter name and the value is a dictionary with the following attributes:

- type: one of integer, float, double, categorical, or discrete.
- For categorical and discrete types only:
  - values: a list of string or numerical values, respectively.
- For integer, float, and double types only:
  - scaling_type: one of linear (default), uniform, normal, loguniform, or lognormal.
- For linear, uniform, and loguniform scaling types only:
  - min: lower bound of the range.
  - max: upper bound of the range.
- For normal and lognormal scaling types only:
  - mu: mean of the distribution.
  - sigma: standard deviation of the distribution.
- For the grid and random search algorithms only:
  - grid_size: integer resolution that determines how the parameter space is divided into discrete bins. You can also specify this at a higher level for all parameters with the gridSize spec key.
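These attribute rules can be turned into a small consistency check. The check_parameter helper below is hypothetical and not part of the Comet SDK. It only enforces the rules listed above, so it does not, for example, verify that min is below max.

```python
NUMERIC_TYPES = {"integer", "float", "double"}
LIST_TYPES = {"categorical", "discrete"}
RANGE_SCALINGS = {"linear", "uniform", "loguniform"}
DIST_SCALINGS = {"normal", "lognormal"}


def check_parameter(name: str, spec: dict) -> None:
    """Raise ValueError if a parameter definition misses a required attribute."""
    ptype = spec.get("type")
    if ptype in LIST_TYPES:
        # categorical and discrete parameters need a list of values.
        if not spec.get("values"):
            raise ValueError(f"{name}: {ptype} parameters need a non-empty 'values' list")
    elif ptype in NUMERIC_TYPES:
        scaling = spec.get("scaling_type", "linear")  # linear is the default
        if scaling in RANGE_SCALINGS:
            required = ("min", "max")
        elif scaling in DIST_SCALINGS:
            required = ("mu", "sigma")
        else:
            raise ValueError(f"{name}: unknown scaling_type {scaling!r}")
        missing = [key for key in required if key not in spec]
        if missing:
            raise ValueError(f"{name}: missing {missing} for scaling_type {scaling!r}")
    else:
        raise ValueError(f"{name}: unknown type {ptype!r}")


check_parameter("batch_size", {"type": "discrete", "values": [16, 32, 64]})
check_parameter(
    "learning_rate",
    {"type": "float", "scaling_type": "loguniform", "min": 1e-4, "max": 1e-1},
)
```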

Tip

You should use:

- {"type": "integer", "scaling_type": "linear", ...} for an independent distribution where values have no relationship with one another (e.g., a seed value).
- {"type": "integer", "scaling_type": "uniform", ...} if the distribution is meaningful (e.g., 2 is closer to 1 than it is to 6).
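In a configuration, the two cases from this tip might look as follows. The seed and num_layers parameter names and their bounds are hypothetical.

```python
parameters = {
    # Seed values bear no relationship to each other: use linear scaling.
    "seed": {"type": "integer", "scaling_type": "linear", "min": 1, "max": 1000},
    # Layer counts are ordered (2 is closer to 1 than to 6): use uniform scaling.
    "num_layers": {"type": "integer", "scaling_type": "uniform", "min": 1, "max": 6},
}
```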

The name field

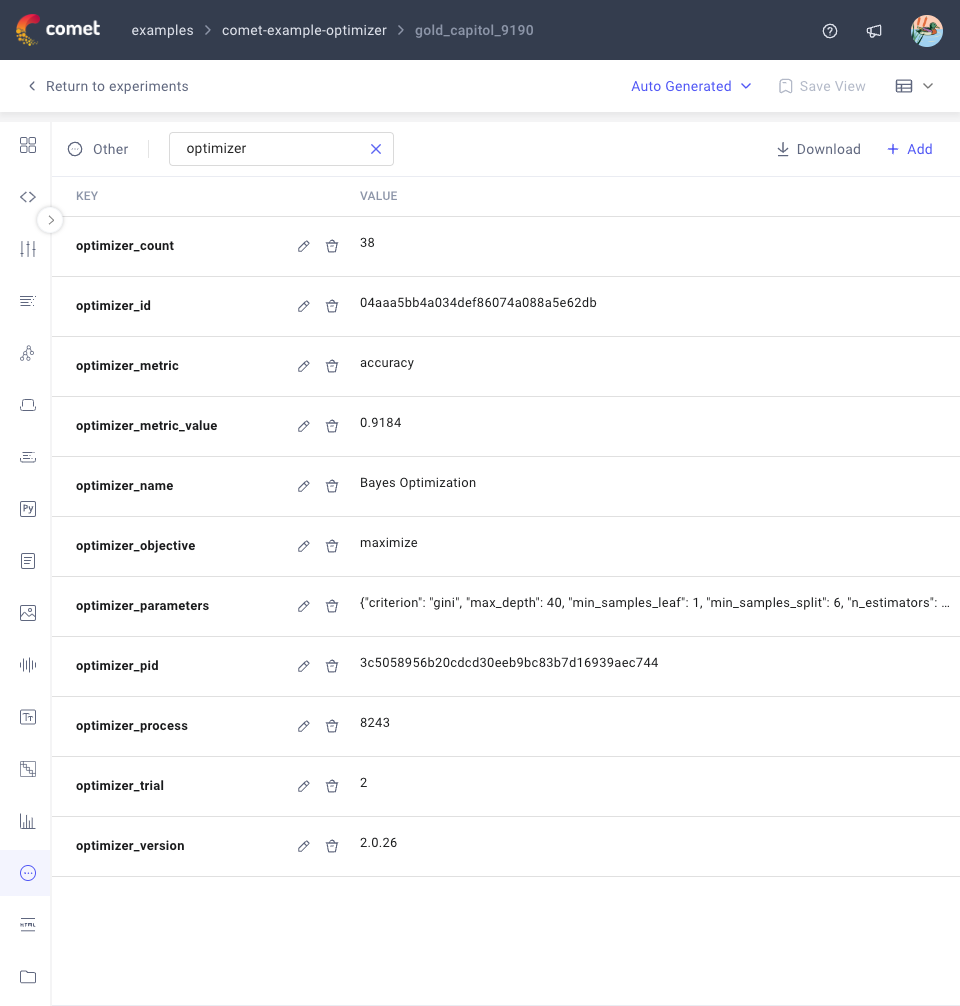

name is an optional configuration key that you can use to specify a name for your tuning runs. The name is logged in the Other tab of the Single Experiment page under the key "optimizer_name".

The default value is the Optimizer.ID.

Use it to easily track and organize multiple optimization experiments.

The trials field

trials is an optional configuration key that specifies the number of tuning trials (an integer) to run for each parameter set.

The default value is 1.

Use it to compensate for the variability in training runs due to the non-deterministic nature of ML by averaging performance across trials.
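The plain-Python sketch below makes the averaging idea concrete. It groups hypothetical metric values by parameter set and averages them across trials. It is not Comet's internal logic. The runs, parameter names, and values are made up for illustration.

```python
from collections import defaultdict
from statistics import mean


def average_over_trials(runs):
    """Average a metric across the trials of each parameter set.

    `runs` is a list of (params, metric_value) pairs, one per training run.
    """
    grouped = defaultdict(list)
    for params, value in runs:
        # Dictionaries are not hashable, so use a sorted tuple of items as the key.
        grouped[tuple(sorted(params.items()))].append(value)
    return {key: mean(values) for key, values in grouped.items()}


# Hypothetical results with "trials": 2, i.e. two runs per parameter set.
runs = [
    ({"learning_rate": 0.01, "batch_size": 32}, 0.50),
    ({"learning_rate": 0.01, "batch_size": 32}, 0.70),
    ({"learning_rate": 0.10, "batch_size": 64}, 0.40),
    ({"learning_rate": 0.10, "batch_size": 64}, 0.60),
]
averages = average_over_trials(runs)
```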

Examples of configuration dictionary

Below we provide an example configuration dictionary for each algorithm type, with notes on the chosen specs and the tuning output.

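The sketch below is a grid search configuration consistent with the description that follows. The learning_rate bounds, the batch_size values, and the name are illustrative assumptions.

```python
import json

config = {
    "algorithm": "grid",
    "name": "grid-lr-batch-size",  # hypothetical custom name
    "spec": {
        "metric": "loss",
        # maxCombo omitted: grid search tries every combination.
        # gridSize omitted: the default of 10 applies.
    },
    "parameters": {
        # One continuous parameter, discretized into gridSize points.
        "learning_rate": {
            "type": "float",
            "scaling_type": "loguniform",
            "min": 0.0001,
            "max": 0.1,
        },
        # One discrete parameter with three possible values.
        "batch_size": {"type": "discrete", "values": [32, 64, 128]},
    },
    "trials": 1,
}

# (gridSize * n_continuous) * number of batch_size values = (10 * 1) * 3
max_combos = (10 * 1) * len(config["parameters"]["batch_size"]["values"])
print(json.dumps(config, indent=2))
```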

This optimization configuration performs an exhaustive search across the complete parameter space, for a maximum of 30 combinations with a single trial, i.e., each parameter set is run only once.

The Optimizer automatically logs learning_rate and batch_size as parameters, the loss metric, optimizer specs and algorithm as other parameters, and the custom name for this tuning trial.

Why 30 combinations? Because maxCombo is left as default, the Optimizer creates all possible combinations, i.e., (gridSize * 1) * 3 = 10 * 3 = 30, since we have one continuous parameter (learning_rate) and one discrete parameter with three possible values (batch_size).

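The sketch below is a random search configuration consistent with the description that follows. The parameter ranges, the values, the name, and the minSampleSize of 200 are illustrative assumptions. gridSize is set to 5 as an assumption, since that is the value consistent with the 45-combination count given below.

```python
config = {
    "algorithm": "random",
    "name": "random-mlp-search",  # hypothetical custom name
    "spec": {
        "metric": "accuracy",
        "maxCombo": 25,  # try at most 25 parameter combinations
        "gridSize": 5,  # assumed value, consistent with the 45-combination count
        "minSampleSize": 200,  # assumed; any value above the default of 100
    },
    "parameters": {
        # One continuous parameter.
        "hidden-layer-size": {
            "type": "integer",
            "scaling_type": "uniform",
            "min": 32,
            "max": 256,
        },
        # Two discrete parameters with three possible values each.
        "hidden2-layer-size": {"type": "discrete", "values": [16, 32, 64]},
        "optimizer": {"type": "categorical", "values": ["adam", "sgd", "rmsprop"]},
    },
    "trials": 2,
}

# Combinations if maxCombo were left as default: (gridSize * 1) * (3 * 3).
default_max_combos = (config["spec"]["gridSize"] * 1) * (3 * 3)
# Each of the 25 combinations is repeated for 2 trials.
total_runs = config["spec"]["maxCombo"] * config["trials"]
```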

This optimization configuration performs a random search across the parameter space for a maximum of 25 combinations, each repeated for two trials (i.e., each parameter set is run twice), for a total of 50 training runs. The minSampleSize is also set higher than the default to provide a more fine-grained grid definition. Note that this requires more computation than the default value.

The Optimizer automatically logs hidden-layer-size, hidden2-layer-size, and optimizer as parameters, the accuracy metric, optimizer specs and algorithm as other parameters, and the custom name for these tuning trials.

Why 25 combinations (per trial)? Because maxCombo is set to 25. If it were left as default, there would be (gridSize * 1) * (3 * 3) = 45 combinations, since we have one continuous parameter (hidden-layer-size) and two discrete parameters with three possible values each (hidden2-layer-size and optimizer).

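The sketch below is a Bayesian search configuration consistent with the description that follows. The parameter ranges and the name are illustrative assumptions.

```python
config = {
    "algorithm": "bayes",
    "name": "bayes-auc-search",  # hypothetical custom name
    "spec": {
        "metric": "auc",
        "objective": "maximize",
        "maxCombo": 20,
        "retryLimit": 10,  # reduced from the default of 20
        "retryAssignLimit": 10,  # increased from the default of 0
    },
    "parameters": {
        # Three continuous parameters.
        "gamma": {"type": "float", "scaling_type": "uniform", "min": 0.0, "max": 5.0},
        "eta": {"type": "float", "scaling_type": "loguniform", "min": 0.01, "max": 0.3},
        "n_estimators": {
            "type": "integer",
            "scaling_type": "uniform",
            "min": 50,
            "max": 500,
        },
    },
    "trials": 1,
}

# (gridSize * n_continuous) with the default gridSize of 10 and three
# continuous parameters, if maxCombo were left as default.
default_max_combos = 10 * len(config["parameters"])
```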

This optimization configuration performs a Bayesian optimization search across the parameter space for a maximum of 20 combinations in a single trial, aiming to maximize the AUC metric. The retryLimit is also reduced from 20 to 10 and the retryAssignLimit is increased from 0 to 10, as examples of how to control graceful failover (e.g., if we decide to use preemptible machines for the tuning).

The Optimizer automatically logs gamma, eta, and n_estimators as parameters, the auc metric, optimizer specs and algorithm as other parameters, and the custom name for this tuning trial.

Why 20 combinations? Because maxCombo is set to 20. If it were left as default, there would be (gridSize * 3) = 30 combinations, since all three parameters are continuous.